Summary:

- NVIDIA is undergoing a significant transformation in its business model, with a focus on recurring software/services revenue and AI applications.

- The introduction of DGX Cloud, a gateway to an AI factory in the cloud, is gaining traction and driving migration of workloads to the cloud.

- NVIDIA’s native support for FP8 in its H100 product gives it a competitive edge in AI processing, offering higher performance and accuracy.

- Applying a ~33x P/E multiple, the stock should trade around $625, providing investors ~40% upside from the current trading price.


NVIDIA Corporation (NASDAQ:NVDA) is going through the largest transformation of its business model. This is not a short-term story and, in my opinion, the real bull case is just starting. NVDA continues to add broad new sources of recurring software/services revenue, a message that should resonate with investors even after the 2023 rally. I remain positive on the stock and continue to see substantial headroom relative to the broader market opportunity as AI enters the mainstream consciousness, with new modalities stretching well beyond current text-based applications (e.g. video, music, 3D imagery, etc.).

NVIDIA is known for its graphics processing units (GPUs) that were initially used for gaming and graphics-related tasks but have found applications in a wide range of fields, including artificial intelligence and deep learning. The long-term revenue prospects for NVDA in the AI sector will ultimately be guided by demand. However, when it comes to data center revenue, supply is expected to be the primary influencing factor at least until the end of 2024.

In this article, I’ll explore NVIDIA’s recent capabilities, arguing that the past year’s growth is just the beginning. The recent stock pullback offers a long-term buying opportunity ahead of the upcoming earnings report on 8/23.

DGX Cloud – already off to a swift and promising start

Over the past few quarters, NVDA has strategically established essential cloud capacity pathways, culminating in the announcement of approximately $2.3 billion in pre-payments to various Cloud Service Providers (CSPs). A significant development was the introduction of the DGX Cloud offering, a move that seems to be rapidly gaining traction among customers. During a recent customer panel discussion focused on DGX Cloud, NVDA revealed that the product’s unveiling had triggered a surge in interest, with a substantial number of customers eagerly anticipating early access to the platform.

DGX Cloud essentially offers customers a gateway to an AI factory in the cloud, seamlessly delivering the computational might of NVDA’s DGX systems without necessitating the establishment of hardware infrastructure, such as the DGX H100 or DGX BasePOD, within their own data centers. A pivotal component of DGX Cloud’s value proposition, if not the cornerstone, is NVDA’s AI Enterprise software suite. This suite encompasses a wide array of accelerated AI and data science tools, finely tuned frameworks, and pre-trained models that cater to diverse industry sectors. Importantly, many of these resources surpass the capabilities that CSPs themselves can offer.

While, on the surface, the DGX Cloud business model might appear to set up a competitive dynamic between NVDA and its own customer base, the prevailing sentiment suggests that CSPs are approaching DGX Cloud in a pragmatic manner. In fact, there is a realization that DGX Cloud has the potential to funnel a greater volume of customers into the cloud environment. The collaborative ecosystem formed by DGX Cloud’s offerings, including the AI Enterprise software suite, is anticipated to drive a more substantial migration of workloads and projects to the cloud, resulting in a win-win situation for both NVDA and CSPs. This approach aligns with a forward-looking strategy that embraces the transformative power of AI while creating mutually beneficial outcomes for stakeholders in the AI and cloud sectors.

Native FP8 support in H100 is a major advantage

NVDA arranged multiple dedicated sessions that revolved around the development and utilization of large language models (LLMs). These sessions emphasized a concerted effort within the community to make substantial advancements in three key areas:

- Performance: The discussions and presentations likely delved into methods and techniques aimed at making LLMs more powerful and capable. This could involve strategies for increasing their ability to understand and generate human-like text or their capacity to handle complex language tasks.

- Efficiency: In the context of LLMs, efficiency typically refers to optimizing these models to perform tasks more quickly and with fewer computational resources. This is important because LLMs, especially when used at scale, can be resource-intensive. The sessions may have explored techniques to make them more resource-efficient without sacrificing performance.

- Accuracy: Ensuring that LLMs generate highly accurate results is crucial, especially in applications like natural language understanding and generation. The discussions probably touched on ways to improve the precision and reliability of these models, potentially through fine-tuning, better training data, or more advanced algorithms.

An essential focus of multiple sessions was the adoption of FP8 (8-bit floating point) for both training and inference, a novel approach made feasible through NVDA’s integration of native FP8 support into its Ada and Hopper products. Notably, substantial strides have been achieved in terms of maintaining model accuracy even with FP8 quantization.
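To make the accuracy trade-off concrete, here is a minimal sketch of what rounding a value to FP8 involves. It assumes the common E4M3 layout (4 exponent bits, 3 mantissa bits, largest finite value 448) with saturation on overflow. The function name `quantize_e4m3` and the sample weights are hypothetical, and this is a pure-Python illustration of the number format, not NVIDIA’s hardware or library implementation.

```python
import math

E4M3_MAX = 448.0     # largest finite magnitude in the assumed E4M3 layout
E4M3_MIN_EXP = -6    # exponent of the smallest normal value
MANTISSA_BITS = 3    # explicit mantissa bits


def quantize_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value, saturating at +/-448."""
    if x == 0.0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    mag = min(abs(x), E4M3_MAX)  # saturate instead of overflowing
    # frexp gives mag = m * 2**e with 0.5 <= m < 1, so floor(log2(mag)) == e - 1
    _, e = math.frexp(mag)
    exp = max(e - 1, E4M3_MIN_EXP)       # values below 2**-6 share the subnormal spacing
    step = 2.0 ** (exp - MANTISSA_BITS)  # gap between neighbouring representable values
    return sign * round(mag / step) * step


# Hypothetical sample weights, chosen only to show rounding and saturation.
weights = [0.3, -1.7, 0.001, 1000.0]
quantized = [quantize_e4m3(w) for w in weights]
for w, q in zip(weights, quantized):
    print(f"{w:>8} -> {q}")
```

With only three mantissa bits, each value snaps to one of eight steps per power of two, which is why scaling and calibration matter for keeping model accuracy at FP8.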

This development confers a significant edge to NVDA over competitors like AMD (AMD), particularly in light of the industry’s growing inclination towards FP8 for training and inference. The H100, operating at FP8 precision, delivers a compute performance of approximately 2,000 TFLOPS (rising to around 4,000 TFLOPS with sparsity). That is roughly a sixfold increase over the A100’s FP16 performance. Critically, the A100 lacks native support for FP8.
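The sixfold figure can be sanity-checked with a quick ratio. The H100 number is the approximate one quoted above. The A100 number (312 TFLOPS dense FP16) is an assumption taken from NVIDIA’s published A100 specifications, not from this article, and the helper name `speedup` is hypothetical.

```python
# Peak dense tensor throughput in TFLOPS.
H100_FP8_TFLOPS = 2000.0   # approximate figure quoted in the article
A100_FP16_TFLOPS = 312.0   # assumption: NVIDIA's published A100 spec, not from the article


def speedup(new_tflops: float, old_tflops: float) -> float:
    """Return how many times faster the new peak throughput is."""
    return new_tflops / old_tflops


ratio = speedup(H100_FP8_TFLOPS, A100_FP16_TFLOPS)
print(f"H100 FP8 vs A100 FP16: {ratio:.1f}x")
```

These are peak datasheet numbers, so realized gains on actual training or inference workloads will differ.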

The implications are twofold:

- Performance Leverage: The H100’s superiority in FP8 precision signifies a substantial enhancement in performance per watt and price/performance. This establishes it as an enticing option for tasks such as Large Language Model (LLM) training and inference, capitalizing on the inherent benefits of higher computational output with less energy consumption.

- Competitive Edge: In the competitive landscape, NVDA gains a strategic advantage by offering a solution that aligns with the industry trend towards FP8 for AI-related tasks. This native support empowers users to harness the advantages of FP8 without compromising on model accuracy.

Collectively, NVDA’s embrace of FP8 and the H100’s impressive performance metrics bring forth a compelling choice for LLM training and inference. This strategic approach sets NVDA apart by addressing the evolving demands of AI processing, substantiating its position as a leader in the AI hardware domain.

Delving further into the automotive potential

Generative AI plays a crucial role in facilitating the creation of sophisticated, varied, and controllable simulations necessary for advancing Level 3 to Level 4+ autonomous vehicles. NVDA’s DRIVE Sim derives its potency from the fusion of generative AI and Omniverse, enabling the conversion of real-world sensor data into synthetic 3D models (known as digital twins) for rigorous testing and validation. Complementing this innovation is NVDA’s novel DRIVE Thor chip, which consolidates autonomous vehicle functionalities into a single System-on-Chip (SoC) for digital clusters, infotainment systems, and driver assistance. This approach streamlines development and accelerates software refinement, offering up to 2,000 teraflops in FP8 precision across AI, infotainment, and driver assist functions.

NVDA’s DRIVE Thor empowers automotive Original Equipment Manufacturers (OEMs) to tailor configurations to precise requirements. Furthermore, the company revealed plans to extend GeForce NOW cloud gaming services to vehicles, enhancing in-cabin entertainment offerings. NVDA’s distinct advantage lies in its ability to deliver a comprehensive AI toolkit alongside robust hardware, creating a differentiating solution that leverages data center-based AI training to enable high-performance centralized in-car computing.

Highlighting NVDA’s growth trajectory, its auto design win pipeline has expanded to approximately $14 billion, projected through 2028. The company’s recent notable contracts include a partnership with Mercedes to develop a car computing system powered by NVDA’s DRIVE AGX Orin technology (the predecessor of Thor). This collaboration, based on public statements from Mercedes, has the potential to drive substantial incremental revenue in the 2026-2028 timeframe, potentially amounting to several billion dollars annually.

Forecasting GPU units and its long-term impact on revenue

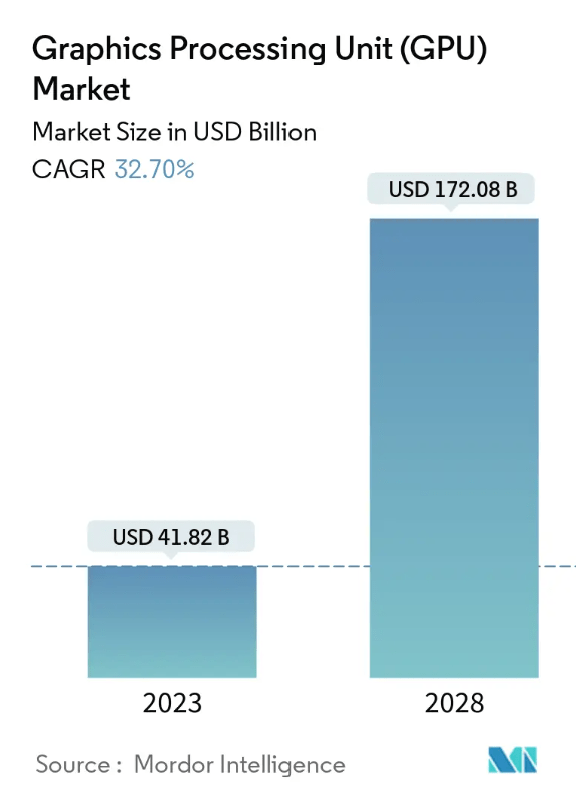

Per a Mordor Intelligence report, the graphics processing unit (GPU) market is expected to grow from $41.82 billion in 2023 to $172.08 billion by 2028, a CAGR of 32.70% over the forecast period (2023-2028).

Mordor Intelligence
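As a quick check on the quoted growth rate, the compound annual growth rate can be recomputed from the two market-size endpoints. This is a minimal sketch using only the figures cited above. The function name `cagr` is mine.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


market_2023 = 41.82    # USD billions, as cited in the article
market_2028 = 172.08   # USD billions, as cited in the article

rate = cagr(market_2023, market_2028, 2028 - 2023)
print(f"Implied CAGR: {rate:.2%}")
```

The recomputed rate lines up with the ~32.7% cited in the report, so the endpoints and the growth rate are internally consistent.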

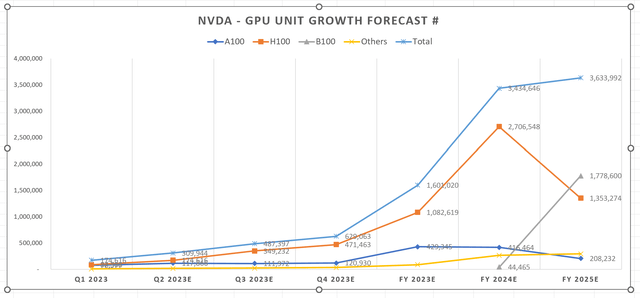

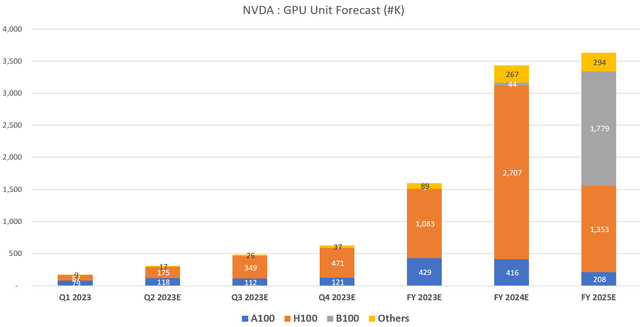

Based on a range of factors, with a strong focus on TSMC’s capacity expansion, we can project GPU units for NVDA and AMD. Regarding AMD, there’s substantial demand for several hundred thousand GPU units across major hyperscalers. This demand still holds, but it’s important to recognize that capacity constraints and lead times for the MI300X may prevent the shipment of all these units in FY 2024.

Moreover, this situation could extend into FY 2025, as we expect new orders to come in and contribute to revenue backfilling. This underscores the significance of considering capacity limitations and lead times when assessing the timeline for fulfilling GPU unit orders in the context of AMD’s operations.

Chart made using company data and Forecast

Chart made using company data and forecast assumption

NVIDIA is playing a pivotal role that can be likened to a “kingmaker” in the current landscape. This analogy highlights the company’s influential position, as it is not merely a participant but a significant driving force behind the ongoing transformations in the AI sector. The market is witnessing a significant surge in capital investments and the emergence of innovative financing vehicles. These resources are being directed towards novel AI software solutions and specialized cloud infrastructure models, with notable examples being CoreWeave and Lambda Labs.

In parallel, enterprises are encountering a substantial challenge in their early stages of adopting AI at scale – the struggle to secure adequate computing capacity. This capacity is essential for building and deploying AI systems effectively. This demand-supply mismatch is likely to persist well into the upcoming year, reflecting the magnitude of the transition toward AI-driven technologies across industries.

Valuations and expected growth:

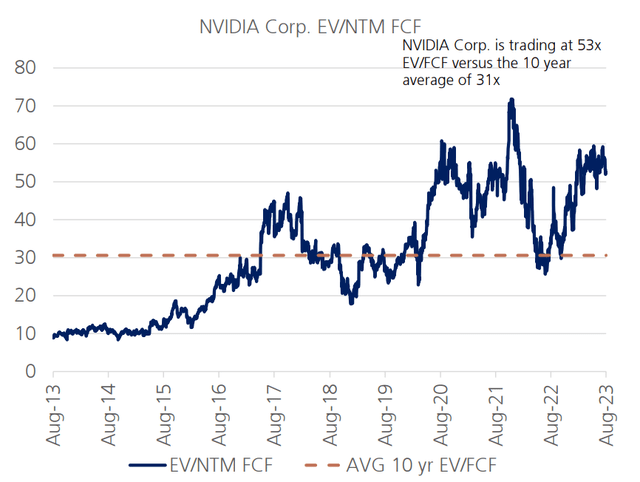

Currently NVDA is trading at ~53X EV/FCF.

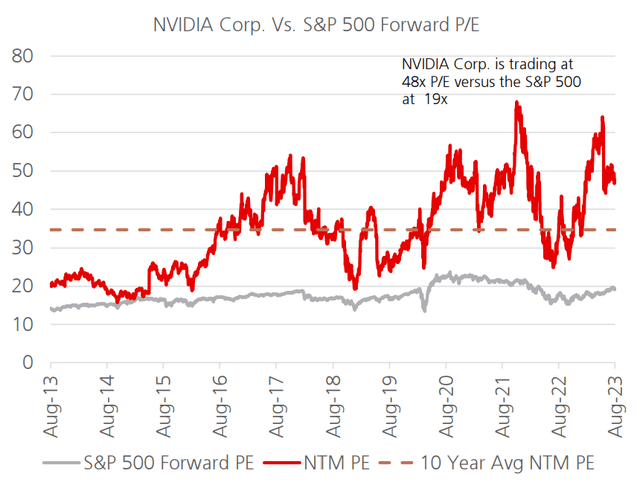

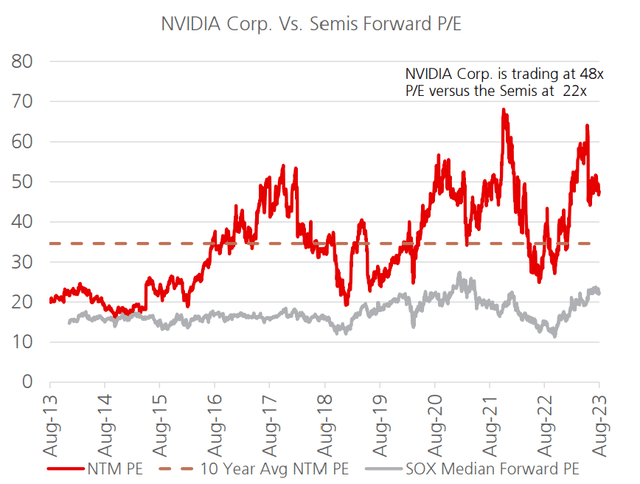

NVDA is trading at a ~29X premium to the S&P 500 and a ~26X premium to other semiconductor stocks.

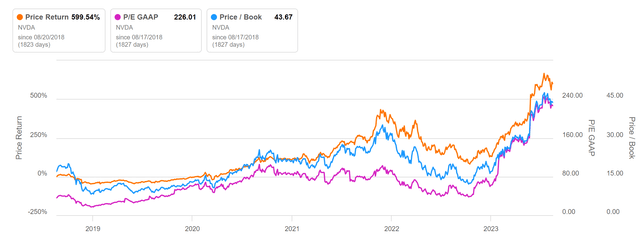

NVDA currently trades at a ~29X premium to the S&P 500, which is in line with the stock’s historical trading range over the past 5 years, where the median P/E ratio has been around 39X.

My projections assume 5% YoY growth in Gaming and an impressive 96% YoY growth in Data Center for FY 2024, followed by 8% growth in Gaming and 15% growth in Data Center for FY 2025. On that basis, I model net income margins of 49% for FY 2024 and 44% for FY 2025.
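To see what these assumptions imply over the two years combined, the yearly growth rates can be compounded per segment. This is a minimal sketch using only the growth rates stated above. No base-year revenue is assumed, so the output is a multiple of each segment’s starting revenue rather than a dollar figure. The names `growth` and `cumulative_multiple` are mine.

```python
# YoY growth assumptions from the article: [FY 2024, FY 2025].
growth = {
    "Gaming": [0.05, 0.08],
    "Data Center": [0.96, 0.15],
}


def cumulative_multiple(rates: list[float]) -> float:
    """Compound a sequence of yearly growth rates into one revenue multiple."""
    multiple = 1.0
    for r in rates:
        multiple *= 1.0 + r
    return multiple


multiples = {segment: cumulative_multiple(r) for segment, r in growth.items()}
for segment, m in multiples.items():
    print(f"{segment}: {m:.3f}x starting revenue after FY 2025")
```

Under these inputs Data Center revenue more than doubles over the two years, while Gaming grows by a little over a tenth.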

I am using a conservative multiple of 33X for valuation purposes. This is lower than the stock’s median P/E (Price-to-Earnings) ratio of around 39X over the past 5 years. I believe the lower multiple is justified because I anticipate that the market will begin compressing the multiple as earnings increase substantially in the upcoming quarters.

Applying a ~33x multiple, the stock should trade around $625, providing investors ~40% upside from the current trading price.
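The target can be unpacked by working backwards from the stated numbers. This is a minimal sketch under the assumption that the target is simply P/E times EPS. The implied EPS and implied current price are derived here, not stated in the article, and the article does not say which fiscal year’s earnings the multiple is applied to.

```python
TARGET_PRICE = 625.0   # USD, from the article
TARGET_PE = 33.0       # multiple used in the article
UPSIDE = 0.40          # ~40% upside quoted in the article
MEDIAN_PE = 39.0       # 5-year median P/E quoted in the article

# Derived values (assumptions, not figures from the article).
implied_eps = TARGET_PRICE / TARGET_PE
implied_current_price = TARGET_PRICE / (1 + UPSIDE)
price_at_median_pe = implied_eps * MEDIAN_PE

print(f"Implied EPS:            ${implied_eps:.2f}")
print(f"Implied current price:  ${implied_current_price:.2f}")
print(f"Price at 39x median PE: ${price_at_median_pe:.2f}")
```

The last line shows the sensitivity to the multiple: holding the implied EPS fixed, the historical median multiple would give a noticeably higher price than the 33X used here.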

Additionally, it’s important to recognize the historical pattern of NVDA’s stock performance. It has typically followed a trajectory where its peak value tends to occur after the YoY comparisons have reached their peak. This suggests that there might still be significant upside potential for the stock in the foreseeable future, and that the current momentum could potentially carry forward for some time before any substantial corrections or adjustments occur.

Conclusion:

NVDA’s innovative use of generative AI, the integration of DRIVE Thor, and its strategic software-hardware synergy position the company as a pioneer in the autonomous vehicle landscape. The projected revenue growth and strategic partnerships underscore the potential of NVDA’s offerings to significantly shape the automotive industry’s future.

In essence, NVDA’s role as a “kingmaker” is shaping the AI landscape by attracting capital, stimulating innovation, and addressing the computational demands of AI adoption. Both strategic and tactical investors are finding reasons to remain engaged in the company’s growth story, as the prevailing market dynamics and historical patterns indicate promising prospects for the foreseeable future.

Risk Statement:

I established this target price by employing a P/E ratio. Risks specific to NVIDIA include:

- Competition from AMD: In the segments of graphics processors (GPUs) and professional visualization products, which have historically been major contributors to the company’s gross profit, there is a significant threat from AMD. Their competition could impact NVIDIA’s market share and profitability.

- Intense ARM-based Applications Processor Competition: NVIDIA faces strong rivalry in ARM-based applications processors. Competitors are striving to surpass NVIDIA’s initial advantage in dual-core technology. This competitive pressure could challenge NVIDIA’s position in this sector.

- Emerging Competition from Intel: Intel’s (INTC) many-integrated core processor family poses emerging competition against NVIDIA’s professional GPU computing products. Intel’s approach might offer more flexibility and easier software development, potentially posing a challenge to NVIDIA’s products.

- Semiconductor Sector Risk: The semiconductor industry poses inherent risks, as revenue trends historically correlate with corporate profitability. The economic recovery’s relatively subdued nature compared to previous downturns could hinder robust growth for NVIDIA, as it is susceptible to the broader economic environment.

These company-specific and industry-wide risks should be closely monitored, as they can impact NVIDIA’s performance, market positioning, and overall growth prospects.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.