Summary:

- Robust AI-driven data center sales have propelled Nvidia’s blockbuster fiscal Q2 earnings beat.

- The company has guided for similar strength in the current quarter, underpinned by continued deployment of accelerated computing infrastructure to support ongoing AI developments.

- Outsized growth and improving profitability are expected to bolster the stock’s premium at current levels.

Justin Sullivan

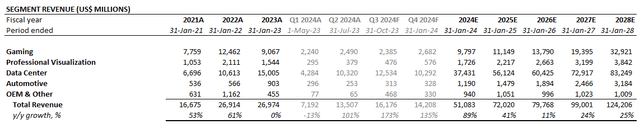

Nvidia (NASDAQ:NVDA) pulled off a 101% y/y increase in fiscal second quarter revenue, beating its blockbuster guidance and pushing the stock above $500 apiece in post-market trading. The monstrous quarter was driven primarily by robust AI demand in data center sales – particularly the deployment of “NVIDIA H100 AI infrastructures” – which were almost triple the prior year’s level. The blockbuster results were complemented by robust double-digit percentage growth across its consumer-facing gaming and automotive segments, despite a slow consumer backdrop.

Optimism for continued acceleration in data center sales through the second half of fiscal 2024 is largely consistent with previous expectations set by management’s aims to improve supply availability beginning Q3, and support pent-up demand driven by momentum in AI deployments. So how do we remain optimistic that demand will stay? While uncertainties remain on the extent to which recent expectations and momentum in AI will actually materialize, one thing that is certain is that end-market users remain primarily concerned about optimization – there is little room for compromise between performance and low total cost of ownership as the pace of migration to the cloud stabilizes. And Nvidia, the backbone of key next-generation technologies, remains the clear leader in enabling both key components – namely, high performance and low TCO – of optimization, especially in supporting incremental AI opportunities based on capabilities of its latest GH200 superchip.

Pairing Nvidia’s technological advantage, which bodes favorably for the industry’s increasingly structural optimization narrative, with persistent momentum in AI developments, the stock is likely to find continued support at current levels. And with growing expectations for gradually improving supply availability, alongside receding cyclical headwinds in its gaming and pro-vis segments heading into the second half, there are also favorable prospects for incremental ROIC expansion heading into fiscal 2025 that will, in our opinion, reinforce the durability of the premium currently priced into the stock.

Data Center Does It Again

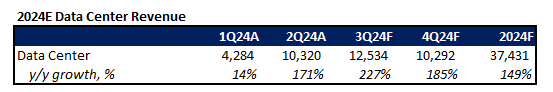

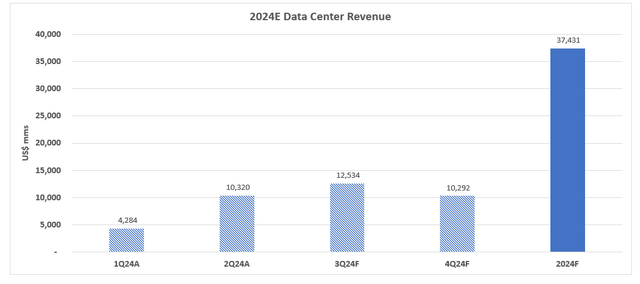

Nvidia overdelivered on data center sales in the fiscal second quarter, with segment revenue up 171% y/y to $10.3 billion to represent an outsized beat. This was a primary driver of its record-setting $13.5 billion in consolidated quarterly revenue, which exceeded management’s previously guided range of $11 billion +/- 2%.
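To put the size of the beat in context, the arithmetic behind the guided range can be sketched as below. This is a minimal illustration using only the figures quoted above ($11 billion +/- 2% guidance, $13.5 billion actual revenue, $10.3 billion data center revenue); the function names are our own and purely illustrative.

```python
def guidance_range(midpoint, tolerance_pct):
    """Return (low, high) for guidance quoted as midpoint +/- tolerance_pct percent."""
    delta = midpoint * tolerance_pct / 100.0
    return midpoint - delta, midpoint + delta


def pct_above(actual, reference):
    """Percentage by which `actual` exceeds `reference`."""
    return (actual / reference - 1.0) * 100.0


# Figures in $ billions, taken from the paragraph above.
GUIDED_MIDPOINT = 11.0
GUIDED_TOLERANCE_PCT = 2.0
ACTUAL_REVENUE = 13.5
DATA_CENTER_REVENUE = 10.3

low, high = guidance_range(GUIDED_MIDPOINT, GUIDED_TOLERANCE_PCT)
print(f"Guided range: ${low:.2f}B to ${high:.2f}B")
print(f"Beat vs. midpoint: {pct_above(ACTUAL_REVENUE, GUIDED_MIDPOINT):.1f}%")
print(f"Beat vs. top of range: {pct_above(ACTUAL_REVENUE, high):.1f}%")
print(f"Data center share of revenue: {DATA_CENTER_REVENUE / ACTUAL_REVENUE * 100:.1f}%")
```

Measuring the beat against the top of the range rather than the midpoint is the more conservative read, since it strips out the tolerance management had already allowed for.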

The chipmaker’s robust results continue to be regarded as the standard gauge for AI demand, defying a mixed outlook from both peers and customers across the AI supply chain during the latest earnings season. Paired with management’s updated guidance for another quarter of triple-digit percentage y/y growth to $16 billion in revenue in the current period, which would imply continued acceleration in data center sales, Nvidia reinforces confidence that the AI opportunity remains in the early stages of realizing its full potential.

Reinforcing the AI Moat

Looking ahead, we see two major drivers for the data center segment – 1) GH200 shipments, and 2) improving supply availability in the second half.

GH200 Superchip Platform

Earlier this month, Nvidia unveiled its latest “GH200 Grace Hopper Superchip” platform, which will start shipping in 2024. The newest technology has many “firsts”, taking Nvidia’s prowess in AI hardware to the next level with a combination of unmatched performance and TCO metrics.

The GH200 Superchip is essentially an accelerator that combines the capabilities of Nvidia’s latest “Hopper” architecture GPUs and “Grace” CPUs (see here for our discussion on accelerated computing). First to integrate “HBM3e” – the latest high-bandwidth memory technology, introduced by SK Hynix, that is 50% faster than its predecessor – the GH200 Grace Hopper platform is optimized for “accelerated computing and generative AI”, reinforcing Nvidia’s leading position in capitalizing on impending AI tailwinds.

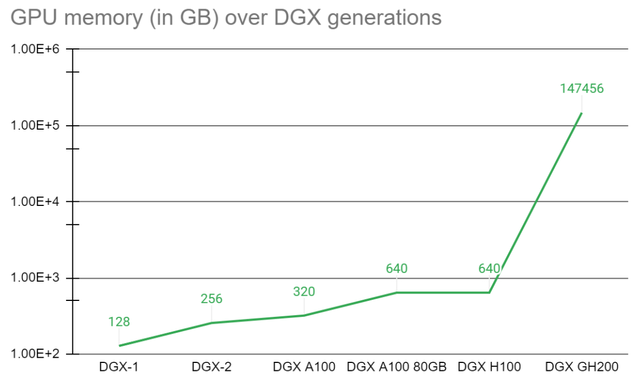

The GH200 also offers significant scalability, addressing optimization demands from both hyperscaler customers and enterprise end-users. Using Nvidia’s “NVLink” technology, multiple GH200 superchips can be connected to meet the compute demands of increasingly complex AI and high-performance computing workloads. The results continue to highlight synergies from Nvidia’s acquisition of Mellanox in 2019, which has bolstered its expertise in networking technologies.

Building on the introduction of the GH200 Superchip platform, Nvidia has also unveiled the latest “DGX GH200” system, which will become available to customers before the end of the year. Nvidia DGX GH200 essentially connects 256 GH200 Superchips using NVLink technology. The system unlocks “144 terabytes of memory” – another first in the industry – to meet compute demands for training and inferencing complex generative AI workloads, “spanning large language models, recommender systems and vector databases”. Compared to the preceding DGX A100 system based on Ampere architecture GPUs, the latest DGX GH200 is essentially a “giant data center-sized GPU” with 500x more memory.
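As a rough sanity check on the DGX GH200 figures quoted above, the memory available per superchip can be backed out from the system total. The sketch below assumes binary units (1 TB = 1,024 GB), which is our assumption rather than something stated in the source; the helper name is illustrative.

```python
def memory_per_superchip_gb(total_tb, superchips, gb_per_tb=1024):
    """Split a system-level memory total evenly across its superchips.

    gb_per_tb defaults to 1024 (binary units); this is an assumption,
    since the quoted "144 terabytes" does not specify the convention.
    """
    return total_tb * gb_per_tb / superchips


# Figures from the paragraph above: 144 TB shared across 256 GH200 Superchips.
binary_gb = memory_per_superchip_gb(144, 256)
decimal_gb = memory_per_superchip_gb(144, 256, gb_per_tb=1000)
print(f"Per superchip (binary units):  {binary_gb:.1f} GB")
print(f"Per superchip (decimal units): {decimal_gb:.1f} GB")
```

Either way, each superchip contributes on the order of half a terabyte to the shared pool, which is what allows the combined system to be addressed as a single large accelerator.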

In addition to the GH200 superchip, Nvidia has also recently announced the “L40S GPU” optimized for accelerated computing, and unveiled the “NVIDIA MGX” server system designed for complex AI workloads. In simplified terms, the newest systems can cut model training times from months to weeks or less, while also inferencing more for less, which essentially translates to higher performance and lower costs. The advancement highlights the appeal of Nvidia’s AI hardware and software to hyperscalers, which are some of Nvidia’s biggest customers.

Recall from one of our previous analyses on the stock that Microsoft (MSFT) has poured significant investment into scaling the deployment of massive compute power for HPC and generative AI workloads. Microsoft has spent “larger than several hundred million dollars” on hardware just to link up the “tens of thousands of [Nvidia A100 GPUs]” needed to support the supercomputer it uses for AI training and inference. But the duality of GH200’s CPU-GPU accelerator capabilities, together with its compatibility with NVLink, can now further simplify the scaling of HPC workhorses, all while driving TCO lower.

And this is expected to reinforce data center demand at Nvidia by 1) addressing optimization at both the hyperscaler and end-user level, and 2) improving its share capture of incremental cloud TAM enabled by AI.

The optimization narrative has been playing out across the enterprise cloud industry for several quarters now. And the trend is becoming increasingly structural, with businesses prioritizing optimization not just as a “cost-cutting effort [amid] tough macroeconomic conditions”, but as a long-run commitment to doing more with less in their respective cloud strategies. By further improving the scalability of computing at the hyperscaler level and of complex workload deployments at the enterprise level, the GH200 effectively leads the optimization conversation.

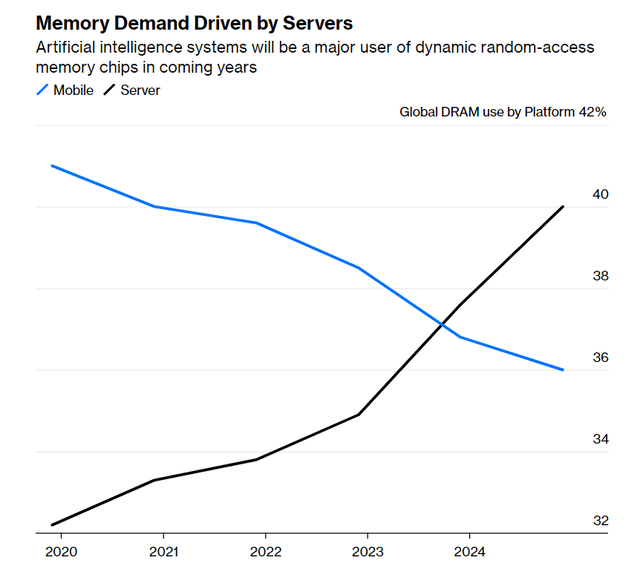

The GH200’s integration of HBM3e is also not to be overlooked. Despite the memory market slump over the past year, memory chips play a critical role in supporting the latest AI sensation, even though the market’s primary focus has been on opportunities for data center processors. Specifically, memory is a critical component in processing complex workloads, given the significant amounts of data fed into the system. Preliminary research shows that “for every high-end AI processor bought, as much as 1 Terabyte of DRAM, [or] 30-times more than what is used in a high-end laptop, may be installed”, underscoring the incremental costs of AI computing attributable to memory demands. By integrating HBM3e into the GH200 superchip, Nvidia improves the “temporary storage available within the chip itself”, reducing incremental costs and, in turn, improving the scalability of AI model training and inference for its customers.

In addition to optimization, the GH200 superchip is also expected to reinforce Nvidia’s capture of AI-enabled cloud TAM expansion. As mentioned by Nvidia CEO Jensen Huang earlier this year, the majority of current data centers are CPU-based, which provides insufficient scale to support increasingly complex workloads. This implies significant opportunities ahead of an upgrade cycle driven by increasing demand for accelerated computing, which combines the power of GPU and CPU processors, to optimize tasks such as AI model training and inference. With leading performance and TCO, which are expected to be superior to those of rival AMD’s (AMD) upcoming Instinct MI300 accelerators, the GH200 is a competitive product that positions Nvidia to capture the related opportunities ahead.

Improving Supply Availability

In line with the previous earnings call, management’s latest commentary reinforces expectations for improving supply availability to support pent-up data center demand heading into the second half – especially as deployments of H100-supported AI infrastructures remain strong. The favorable demand prospects are corroborated by rising prices on the H100 GPUs in secondary markets, as well as recent analyst projections that Nvidia is “able to meet only half the demand” for said chips in today’s market, spurred by AI requirements.

Pent-up demand is further corroborated by industry commentary in the latest earnings season that suggests a supply-constrained environment for AI hardware in the near term. This is in line with recent growth guidance from Nvidia customer Super Micro Computer (SMCI), which implies a “pause” to the AI momentum that analysts interpret as a sign of “GPU tightness” gating sales, rather than demand weakness. Nvidia’s contract manufacturer TSMC (TSM) has also recently highlighted labor limitations and persistent inflation as challenges to ensuring adequate supply of AI hardware to meet near-term demand.

While TSMC has recently advised investors to “temper their expectations” on AI-driven demand for chips, suggesting uncertainty over the extent of relevant opportunities, the trend points to resilience in the near term. And improving availability of supporting AI hardware at Nvidia is expected to bolster data center sales growth heading into the second half.

Fundamental Implications

The combination of incremental AI-driven demand on top of an anticipated recovery in the broader semiconductor sector continues to maintain investors’ confidence in Nvidia’s AI moat and, by extension, the stock’s prospects. A capex outlook that has not significantly changed from Nvidia’s previous guidance of $1.1 billion to $1.3 billion, despite the outsized upward adjustment to data center sales expectations, and better-than-expected operating expenses that bolster profitability are also favorable to driving further ROIC expansion. Recall from our previous analysis that the market’s expectation for continued expansion in Nvidia’s investment cost-return spread, reinforced by its long-term moat in enabling critical next-generation growth opportunities, is potentially the core driver of the stock’s premium at current levels.

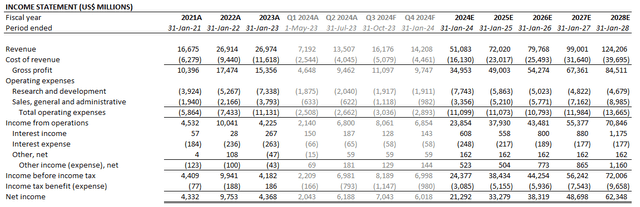

Updating our forecast for Nvidia’s latest earnings results and guidance, we expect fiscal 2024 revenue of $51.1 billion and net income of $21.3 billion, which represents revenue growth of 89% y/y and a gross profit margin of 68%. In the base case analysis, we expect data center revenue to accelerate in the second half, in line with the foregoing analysis, supported by improving supply availability. The availability of DGX GH200 later this year is also expected to allow customers to test the capabilities of the GH200 superchip, and potentially bolster orders ahead of the start of shipments in the latter half of calendar 2024.
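The headline forecast figures also imply a few metrics that are not spelled out above. The sketch below derives them from the stated numbers only (revenue of $51.1 billion, net income of $21.3 billion, 89% y/y growth and a 68% gross margin); the derived values are simple arithmetic on rounded inputs, not additional forecasts, and the function name is our own.

```python
def implied_metrics(revenue, net_income, yoy_growth, gross_margin):
    """Derive implied figures from headline forecast numbers ($ billions)."""
    return {
        # Prior-year revenue implied by the stated growth rate.
        "prior_year_revenue": revenue / (1.0 + yoy_growth),
        # Net income as a share of revenue.
        "net_margin": net_income / revenue,
        # Gross profit implied by the stated gross margin.
        "gross_profit": revenue * gross_margin,
    }


# Inputs are the rounded fiscal 2024 figures quoted in the paragraph above.
m = implied_metrics(revenue=51.1, net_income=21.3, yoy_growth=0.89, gross_margin=0.68)
print(f"Implied prior-year revenue: ${m['prior_year_revenue']:.1f}B")
print(f"Implied net margin: {m['net_margin'] * 100:.1f}%")
print(f"Implied gross profit: ${m['gross_profit']:.1f}B")
```

Because the inputs are rounded, the outputs should be read as approximations; the point is the relationship between the figures rather than precision to the decimal.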

There is also growing optimism for reacceleration across the macro-sensitive gaming and professional visualization segments in the second half, supported by both seasonality ahead of the back-to-school and holiday shopping months, as well as adjacent opportunities driven by AI momentum. PC makers such as Lenovo (OTCPK:LNVGY) (OTCPK:LNVGF), Acer and HP (HPQ) have been jumping on the AI bandwagon this year, hinting at the potential for an “inflection point for the PC industry” as improvements to portable workstations could restore an upgrade cycle next year. Stabilizing enterprise hiring trends in recent months, following aggressive workforce reductions implemented earlier this year, also corroborate strength in the upcoming upgrade cycle. This will bode favorably for Nvidia, as it supplies some of the highest performing chips for workstations, including the “4060 Series GeForce RTX” GPUs that have only just started shipping. We think these adjacent AI opportunities could be incremental to the current premium priced into the stock, which is largely attributable to expectations for high-margin data center outperformance.


Valuation Considerations

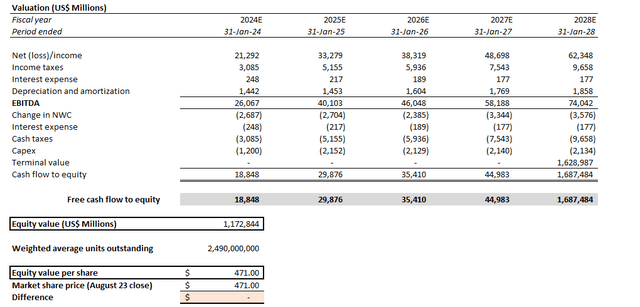

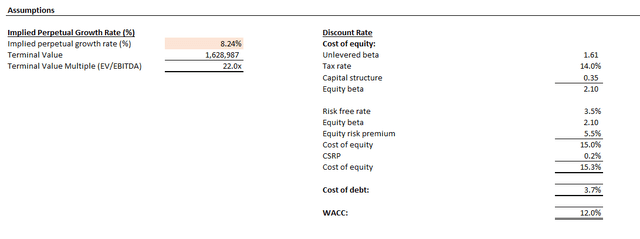

Admittedly, Nvidia’s current valuation includes a substantial premium, priced to reflect the market’s high expectations for the underlying business’ AI potential. A discounted cash flow analysis that draws on projections in conjunction with our fundamental analysis, assuming a 12% WACC in line with Nvidia’s capital structure and risk profile, implies a 9% perpetual growth rate or 22x terminal value multiple for the stock at current levels ($471 apiece).
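For readers less familiar with how a perpetual growth rate feeds into terminal value, the standard Gordon growth relationship can be sketched as follows. This is a generic illustration rather than the model behind the figures above: the final-year cash flow of 100 is a hypothetical placeholder, and the multiple computed here is expressed on final-year cash flow, whereas the paragraph above does not state which metric the 22x multiple is applied to, so the two are not expected to match.

```python
def gordon_terminal_value(final_cash_flow, wacc, growth):
    """Terminal value under the Gordon growth model: CF * (1 + g) / (r - g)."""
    if growth >= wacc:
        raise ValueError("perpetual growth must be below the discount rate")
    return final_cash_flow * (1.0 + growth) / (wacc - growth)


def terminal_multiple(wacc, growth):
    """Terminal value expressed as a multiple of final-year cash flow."""
    return gordon_terminal_value(1.0, wacc, growth)


WACC = 0.12                 # from the paragraph above
IMPLIED_GROWTH = 0.09       # from the paragraph above
HYPOTHETICAL_CASH_FLOW = 100.0  # placeholder, not a forecast

tv = gordon_terminal_value(HYPOTHETICAL_CASH_FLOW, WACC, IMPLIED_GROWTH)
print(f"Terminal value on a cash flow of 100: {tv:.1f}")

# Sensitivity: how quickly the multiple expands as growth approaches WACC.
for g in (0.03, 0.05, 0.07, 0.09):
    print(f"g = {g:.0%}: {terminal_multiple(WACC, g):.1f}x final-year cash flow")
```

The sensitivity loop shows why a 9% perpetual growth assumption is demanding: with only three percentage points between growth and the discount rate, small changes in either input move the terminal value substantially.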

On a multiple basis, the stock trades at 53x forward EBITDA or 45x forward earnings at current levels, which is about 3x the broader market average and 4x the semiconductor peer group average. Yet, trading trends continue to show confidence in the stock. Nvidia’s short float is at about 1%, one of the lowest among its megacap and semiconductor peers, while its call options are also more expensive than put options, underscoring market optimism for further upside potential despite the lofty valuation premium on the stock. The stock has also remained resilient against a selloff in the broader tech sector this month, as a persistently hawkish Fed points to higher-for-longer rates to keep inflation in check amid a stronger-than-expected economy.

This continues to support the market’s optimism over Nvidia’s ROIC expansion prospects, especially given improving end-market demand in macro-sensitive segments that has been “resilient against a challenging consumer spending backdrop”, as well as a still supply-constrained environment for its AI hardware. We believe the latest earnings reinforce a further climb towards the $500 level with durability to its premium, though we remain wary of volatility amid a cautious spending environment due to macroeconomic challenges.

Final Thoughts

We remain long-term bullish on Nvidia stock, given consistent tangible evidence of the chipmaker’s mission-critical role in supporting the development of next-generation technologies and enabling ensuing growth opportunities in the economy. However, concerns remain over the durability of Nvidia’s lofty valuation.

As much as there is confidence in its demand environment and long-term fundamental prospects, there is a significant premium currently built into the stock at a time when market sentiment is still fragile and prone to the influence of lingering macroeconomic challenges. This includes the potential for further monetary policy tightening, a narrative that is expected to be reinforced later this week by Fed officials at the upcoming Jackson Hole Symposium given resilience in the U.S. economy. Higher-for-longer rates could potentially weigh further on tech valuations while also tightening financial conditions, limiting near-term growth. Despite our confidence in Nvidia’s strong moat, we remain cautious of market-driven challenges to the stock’s premium at current levels given the broader market’s still-conservative sentiment.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

