Summary:

- Nvidia Corporation’s Data Center business grew by 171% YoY in fiscal 2024 Q2, with revenue of $10.3 billion, surpassing AMD’s total revenue.

- Nvidia’s success in the data center market is due not solely to AI but also to the fundamental advantage of GPUs in various cloud compute tasks.

- Nvidia’s extensive software offerings and control over hardware and software development have contributed to its disruption of the traditional x86 CPU paradigm.

Justin Sullivan

Nvidia Corporation’s (NASDAQ:NVDA) Data Center business grew by a remarkable 171% y/y in its fiscal 2024 Q2. Data Center revenue was $10.3 billion, larger than competitor Advanced Micro Devices, Inc.’s (AMD) total revenue, and almost double the combined revenue of AMD’s and Intel’s (INTC) data center segments. In this article, I’ll explore how Nvidia got to this point, and where it can go from here.

Nvidia’s disruption of the data center compute market is about more than AI

The explosion of interest in generative AI has unquestionably been the most important catalyst in Nvidia’s data center growth, but it’s not the only one. Underlying Nvidia’s disruption of traditional x86 CPU-focused architecture is a fundamental advantage of GPUs in a broad range of cloud compute tasks, including game streaming, metaverse, big data analytics, and supercomputing.

Whenever a task can take advantage of the massive parallelism of the GPU, the GPU outperforms the CPU and does so at a lower energy cost. AI models, especially generative pre-trained transformers (GPTs), happen to be particularly well suited to massively parallel GPU computation.

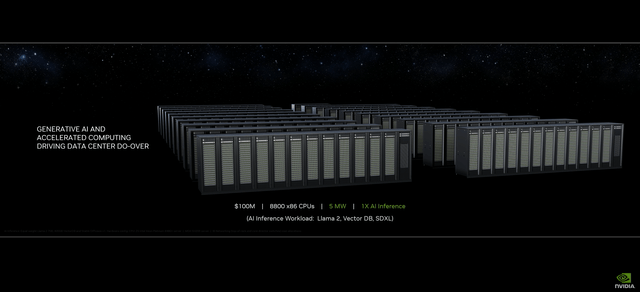

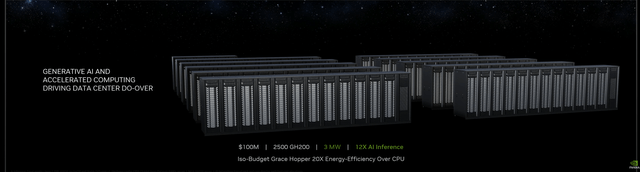

Nvidia CEO Jensen Huang is fond of highlighting the efficiency advantages of GPU accelerated computing, as he did this year at SIGGRAPH. He gave an example of a CPU implementation for a generative AI workload:

And a GPU implementation for the same cost:

But the fact of the superior efficiency of GPUs for many tasks doesn’t completely explain Nvidia’s success in expanding its share of data center spending. For this, we need to look at another important aspect of Nvidia’s product offerings: software.

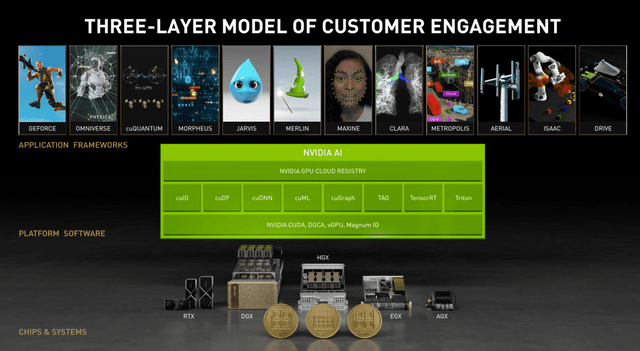

The range of software that Nvidia offers for its GPUs is simply unsurpassed. Nvidia pioneered GPU accelerated computing with its CUDA development environment. Nvidia’s software offerings cover a wide range of applications, including GeForce Now game streaming, Omniverse, various forms of AI, robotics, and autonomous vehicles:

Although Nvidia is starting to generate substantial software licensing revenue at the enterprise level, the value of the software is mainly in the way it enhances the utility and usability of its hardware. In this sense, Nvidia is following the Apple (AAPL) example of selling products that feature well-integrated hardware and software.

Through its extensive software development, Nvidia has become a “new paradigm” semiconductor company in the Apple mold. And Nvidia’s disruption of the data center is very much a disruption of the old x86 CPU paradigm by the new paradigm.

The new paradigm continues to make gains against the old because it enjoys a number of competitive advantages. New paradigm semiconductor companies are fabless and don’t have to shoulder the enormous burden of building leading-edge fabs.

Most importantly, new paradigm semiconductor companies don’t just sell chips that they “throw over the wall” to software and product developers. By controlling hardware and software development in tandem, new paradigm companies can optimize the performance and reliability of their products.

This is basically what happened with ChatGPT hosting by Microsoft (MSFT) on Nvidia hardware. Alert to developing trends in AI research, Nvidia created a “Transformer Engine” to accelerate GPTs. The Transformer Engine doesn’t appear to be specialized hardware but rather a software function that makes better use of the GPU hardware, especially Tensor Cores. Tensor Cores had already been developed to provide hardware acceleration for earlier AI models.

The fact that Nvidia had something ready to go to accelerate GPTs on its latest hardware made Nvidia the natural choice for Microsoft and other cloud service providers. Beyond GPTs, the ability of Nvidia GPUs plus software to support a wide variety of workloads takes some of the risks out of the GPT investment. Nvidia GPUs can be repurposed almost at will for a wide variety of tasks or simply offered as “instances” to cloud users.

Sizing up the data center compute opportunity

At Computex in Taipei this year, Jensen Huang spoke blithely of a $1 trillion opportunity in the data center. He believes that much current data center compute infrastructure is outmoded and needs to be replaced with accelerated computing.

For AI acceleration, that’s undoubtedly true, but it remains to be seen how much of future data center spending will go to accelerated computing rather than traditional CPU computing. Making use of GPUs requires specific use cases that the GPU can support. For general-purpose computing, the GPU is usually unnecessary.

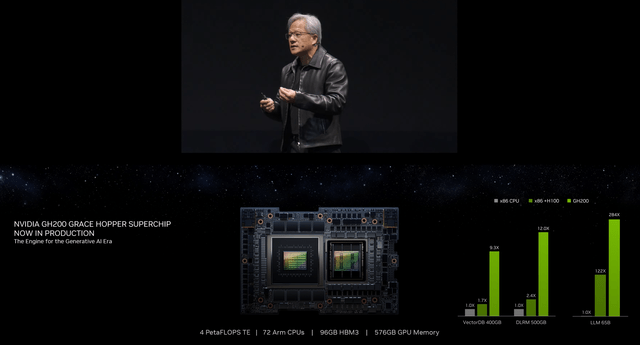

But Nvidia has an answer for that as well, in the Grace CPU, an Arm (ARM) chip with a maximum of 72 Neoverse V2 cores. Neoverse V2 was specifically developed by Arm for server and cloud services applications. While Grace will have an uphill battle against x86 CPUs, it has the usual Arm advantage of energy efficiency, as well as the ability to be joined with an Nvidia GPU via NVLink:

Nvidia claims that Grace, combined with its most advanced GPU, the H100, offers significant performance advantages over the H100 combined with an x86 CPU.

But is the data center opportunity really a trillion dollars? I’ve found a couple of sources that seem to say that’s about the right answer for cumulative data center expenditures through calendar 2028.

One source is a Research and Markets report that projected that data center spending would increase at about a 10% CAGR through 2025 to about $430 billion annually. This includes all infrastructure costs, including plant and facilities, as well as computing equipment.

IDC also issues quarterly reports on data center computing spending. IDC estimates that total compute and storage spending for calendar 2022 was $154.4 billion. IDC also projects that total compute spending will increase at about a 10% CAGR.

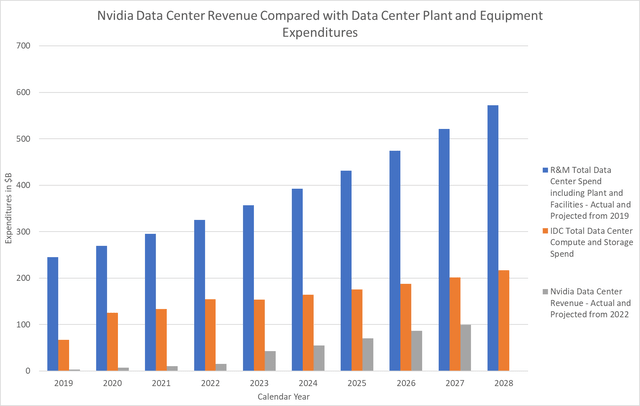

In the chart below, I summarize the data from both companies, as well as data from Nvidia:

Since Nvidia’s fiscal years are almost exactly 1 year ahead of the calendar year, I’ve used data for fiscal 2020 in calendar 2019, etc. The Nvidia data for 2023 through 2027 are projections, with the current year being the highest confidence. The Nvidia projections stop at calendar 2027 since my model currently only goes to fiscal 2028.

For the Research and Markets data past 2025, I simply continued the extrapolation at a 10% CAGR, and similarly with the IDC data. I would have thought that the IDC annual projections would have been a larger fraction of the R&M data, and this may indicate that R&M is overly optimistic.

From 2023 to 2028, the IDC projected expenditures sum to over $1 trillion, lending credence to the notion of a “trillion dollar opportunity”. But it should be noted that neither company was modeling growth in data center expenditures as the result of a massive overhaul and rearchitecting of data center compute. The gradual 10% growth was deemed to be merely due to increased demand for cloud computing.
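The extrapolation described above is simple enough to check directly. The following Python sketch compounds the IDC 2022 figure at 10% per year and sums calendar 2023 through 2028. The helper name `extrapolate` is an assumption introduced for illustration, and the flat 10% rate is the approximation used in the text, not a figure published for each year.

```python
def extrapolate(base_value, base_year, end_year, cagr):
    """Return {year: value}, compounding base_value at cagr from base_year on."""
    return {
        year: base_value * (1 + cagr) ** (year - base_year)
        for year in range(base_year, end_year + 1)
    }


CAGR = 0.10  # approximate growth rate cited for both sources

# IDC: $154.4B of compute and storage spending in calendar 2022 (from the text).
idc = extrapolate(154.4, 2022, 2028, CAGR)

# Cumulative IDC-based spending for calendar 2023 through 2028, in $ billions.
idc_cumulative = sum(value for year, value in idc.items() if year >= 2023)

# Research and Markets: about $430B annually in 2025, extended at the same rate.
rm = extrapolate(430.0, 2025, 2028, CAGR)

print(f"IDC 2023-2028 cumulative: ${idc_cumulative:,.0f}B")
print(f"IDC as a share of R&M in 2025: {idc[2025] / rm[2025]:.0%}")
```

Under these assumptions the six-year IDC total comes to roughly $1.3 trillion, and the IDC figure for 2025 is a little under half of the Research and Markets figure for the same year.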

The data center disruption that is currently underway seems only to be starting to sink in at IDC. In its report for 2023 Q1, IDC said:

. . . spending on compute and storage infrastructure products for cloud deployments, including dedicated and shared IT environments, increased 14.9% year over year in the first quarter of 2023 (1Q23) to $21.5 billion. Spending on cloud infrastructure continues to outpace the non-cloud segment with the latter declining 0.9% in 1Q23 to $13.8 billion. The cloud infrastructure segment saw unit demand down 11.4%, but average selling prices (ASPs) grew 29.7%, driven by inflationary pressure as well as a higher concentration of GPU-accelerated systems being deployed by cloud service providers.

Although I believe that data center compute spending may rise more dramatically than IDC projects, I’m keeping my projections of Nvidia data center revenue conservative. At most, I expect Nvidia to capture about half of the “trillion-dollar opportunity.”

Discounted cash flow estimate of fair value

In order to translate my assumptions about future revenue and profit growth into a current “fair value” for the stock, I rely mainly on a discounted cash flow model (DCF). The DCF is updated on a quarterly basis using the results of the most recently reported quarter.
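As a rough illustration of how a DCF turns projected cash flows into a per-share value, here is a minimal Python sketch. It is not the model used in this article: the function name `dcf_fair_value`, the Gordon growth terminal value, and every input figure are assumptions chosen only to show the mechanics.

```python
def dcf_fair_value(cash_flows, discount_rate, terminal_growth, net_cash, shares):
    """Per-share fair value from projected annual free cash flows.

    cash_flows: projected free cash flow for years 1..N
    discount_rate: annual discount rate (e.g. 0.10)
    terminal_growth: perpetual growth rate after year N (must be < discount_rate)
    net_cash: cash minus debt, in the same units as cash_flows
    shares: share count, on the same scale as cash_flows
    """
    if terminal_growth >= discount_rate:
        raise ValueError("terminal_growth must be below discount_rate")

    # Present value of each explicitly projected year.
    pv_flows = sum(
        cf / (1 + discount_rate) ** year
        for year, cf in enumerate(cash_flows, start=1)
    )

    # Gordon growth terminal value at the end of year N, discounted to today.
    n = len(cash_flows)
    terminal_value = (
        cash_flows[-1] * (1 + terminal_growth) / (discount_rate - terminal_growth)
    )
    pv_terminal = terminal_value / (1 + discount_rate) ** n

    return (pv_flows + pv_terminal + net_cash) / shares


# Hypothetical inputs for illustration only; not the article's projections.
example_flows = [110.0, 121.0, 133.1]  # free cash flow for years 1 to 3
for rate in (0.08, 0.10, 0.12):
    value = dcf_fair_value(
        example_flows, rate, terminal_growth=0.0, net_cash=0.0, shares=100.0
    )
    print(f"discount rate {rate:.0%}: fair value per share {value:.2f}")
```

Even in this toy case, moving the discount rate by two points shifts the result noticeably, which is why the output of any DCF depends so heavily on the inputs it is given.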

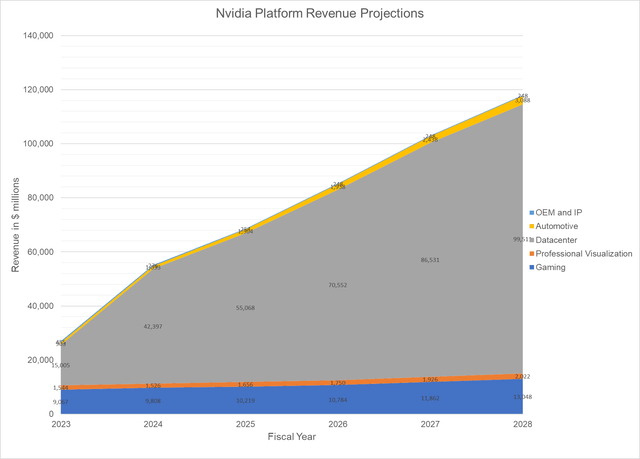

Quarterly results usually translate into minor DCF revisions, but after Nvidia’s fiscal 2024 Q2 results and guidance for Q3, I had to make major revisions. Here are the revenue projections by segment for the model period of five fiscal years:

Data Center revenue in fiscal 2024 will take a huge leap, far larger than I expected at the beginning of the year and dwarfing the other segments. I expect Nvidia’s data center revenue to continue to grow, helped by Grace and successors to Hopper H100. Data Center growth, although seemingly explosive this year, is actually rather supply-constrained, and that could translate into higher than modeled revenue in fiscal 2025.

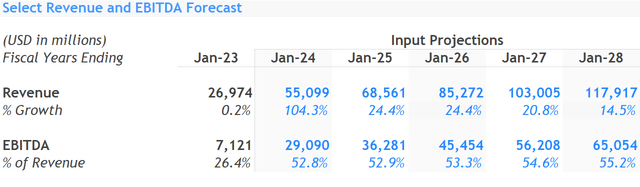

Under the above assumptions, the DCF annual revenue and EBITDA profiles are shown below:

The DCF-calculated fair value is $437.66 per share.

Investor takeaways: Putting the DCF model results into context

At this point, I feel the need to issue some disclaimers regarding the DCF model results. I’ve seen some authors present DCF results as if they were cast in concrete. My experience is that the DCF model is very much dependent on the inputs provided, especially revenue and earnings.

Projections have to be made five or more years into the future, and these are always to some degree speculative. To me, the value of DCF models is not that they calculate a perfectly reliable net present value. Rather, I see the DCF model as a way to relate assumptions and expectations of future performance to a fair value for the stock.

It’s worth noting that I don’t assume that Nvidia will have the data center compute market all to itself. Far from it. But I do expect Nvidia to garner the lion’s share, based on its continued hardware and software innovation.

With the dramatic rise in Nvidia’s share price this year, one often hears that “Nvidia must be overvalued”. This is usually just based on a few metrics such as the P/E ratio. But if future growth is taken into account, which the DCF model does intrinsically, then I think Nvidia is shown to be close to fairly valued.

Of course, the DCF fair value should not be taken as a prediction of future share pricing. The market is still a stochastic process (actually a huge jumble of them), and Nvidia’s share price will continue to fluctuate.

In the end, I don’t just rely on the DCF model in order to arrive at a rating for a stock. I look at a wide range of factors, including past financial performance, current financial strength, innovation, and how I rank the company relative to its peers. Nvidia is at the top of the class in most of the categories that I care about. As such, I continue to rate Nvidia Corporation stock a Buy.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA, AAPL, MSFT either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
