Summary:

- Nvidia Corporation is facing competition in the cloud AI market from Intel and AMD, but these rivals are hindered by weakness on the software front.

- While Google and Amazon have deployed their own AI chips, they are also using Nvidia’s chips.

- Nvidia’s dominance in the AI industry is assured not only by its strong technology but by strategic investments in startups’ technology.

- Several startups aiming to challenge Nvidia in generative AI applications such as ChatGPT face substantial pressure, given the established dominance and reliability of Nvidia’s technology.


NVIDIA Corporation (NASDAQ:NVDA) was an early leader in the cloud computing space, primarily because of its proprietary Compute Unified Device Architecture (“CUDA”) platform for general-purpose processing on its GPUs. The company has successfully established a presence in the rapidly expanding cloud AI market. Alphabet Inc. (GOOG) (GOOGL) and Amazon.com, Inc. (AMZN) have deployed their own proprietary AI chips in certain instances, but are also deploying Nvidia’s chips. Nvidia now faces competition from Intel Corporation (INTC) and Advanced Micro Devices, Inc. (AMD), which are also aggressively pursuing this market segment.

In addition, numerous AI chip startups have received significant venture capital funding. Rather than compete with Nvidia head-on, they have chosen differentiated routes in an attempt to win a share of the market.

These issues are discussed in this article.

Nvidia’s Dominance

Nvidia has increased its yearly guidance and projected that its fiscal third-quarter revenue will reach around $16 billion. This figure surpasses the consensus forecast of $12.61 billion by a significant margin, representing a 170% growth compared to the same period in the previous year.

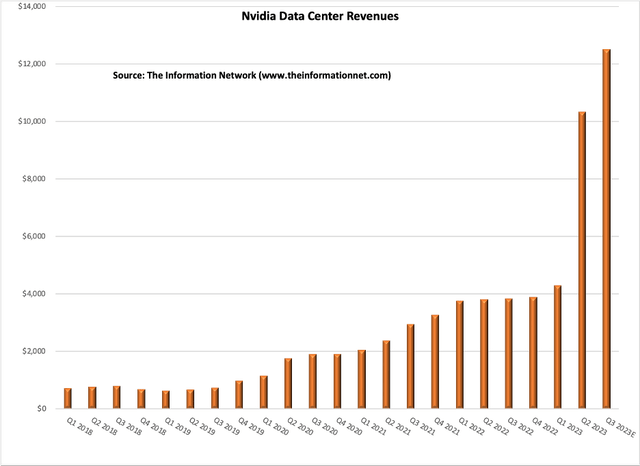

Chart 1 shows Nvidia’s Data Center revenue growth, demonstrating the demand for its chips and the coat-tail effect on Arm. I estimate 21% QoQ growth in fiscal Q3 2024 (CY Q3 2023) revenues, which will be reported on November 21, 2023.

In the current quarter, Nvidia is anticipated to report earnings of $3.34 per share, an increase of 475.9% compared to the earnings from the same quarter in the previous year.

For the current fiscal year, the consensus earnings estimate is $10.74, reflecting a substantial change of +221.6% compared to the earnings of the previous fiscal year. This data suggests strong growth expectations for Nvidia’s financial performance in the current fiscal year.
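The growth rates quoted above can be sanity-checked by backing out the prior-period figures they imply. The sketch below uses only the numbers in the text; the helper names `implied_base` and `pct_change` are illustrative, and the implied prior-period values are derived, not reported, figures.

```python
def implied_base(current: float, growth_pct: float) -> float:
    """Back out the prior-period value from a current value and a growth rate in percent."""
    return current / (1.0 + growth_pct / 100.0)


def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100.0


# Figures quoted in the article
revenue_guidance_bn = 16.0   # fiscal Q3 revenue guidance, $ billions
consensus_bn = 12.61         # consensus revenue forecast, $ billions
quarter_eps = 3.34           # expected EPS for the current quarter
fiscal_year_eps = 10.74      # consensus EPS for the current fiscal year

# Prior-period values implied by the quoted growth rates
prior_q_revenue_bn = implied_base(revenue_guidance_bn, 170.0)
prior_q_eps = implied_base(quarter_eps, 475.9)
prior_fy_eps = implied_base(fiscal_year_eps, 221.6)

# How far the guidance sits above consensus
guidance_beat_pct = pct_change(revenue_guidance_bn, consensus_bn)

print(f"Implied year-ago quarterly revenue: ${prior_q_revenue_bn:.2f}B")
print(f"Implied year-ago quarterly EPS:     ${prior_q_eps:.2f}")
print(f"Implied prior fiscal-year EPS:      ${prior_fy_eps:.2f}")
print(f"Guidance vs. consensus:             +{guidance_beat_pct:.1f}%")
```

On these inputs, the guidance sits roughly 27% above consensus, which is the "significant margin" the text refers to.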

Chart 1

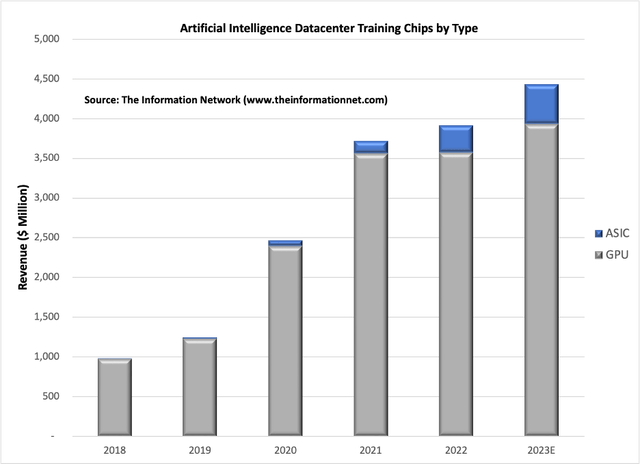

Chart 2 shows the dominance of Nvidia’s GPUs for AI Training at the datacenter.

Chart 2

Cloud Hyperscalers

Arm-based processors have made notable gains in the cloud computing market in recent years. Arm Holdings plc (ARM) claims to have captured a 10.1% share of the cloud computing market, up from 7.2% as of December 31, 2020, largely attributed to Amazon’s increasing use of its in-house Arm chips. Amazon Web Services (AWS) deployed its custom Graviton chips in 15% of all server instances in 2021, marking a significant shift toward Arm architecture within the cloud giant.

Google reported a 22% increase in cloud-computing revenue for the September quarter, reaching $8.41 billion but falling short of the estimated $8.64 billion. In the June quarter, Google’s cloud-computing business grew 28%.

In 2023, Google unveiled its latest self-developed chip, the TPU V4, boasting a 2.1-fold performance improvement over its predecessor. With 4,096 of these chips combined in a single system, supercomputing performance has increased by a factor of 10.

Google stated that in systems of comparable scale, the TPU V4 outperforms the NVIDIA A100 by 1.7 times in terms of performance and also enhances energy efficiency by 1.9 times. Similar to its predecessor, TPU V3, each TPU V4 comprises two Tensor Core (TC) units. Each TC unit consists of four 128×128 matrix multiplication units (MXU), a vector processing unit (“VPU”) equipped with 128 channels (comprising 16 ALUs per channel), and 16 MiB of vector memory (“VMEM”).
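The unit counts in the paragraph above multiply out as follows. This is a rough sketch: it takes the counts as stated in the text and assumes each cell of a 128×128 matrix unit performs one multiply-accumulate per clock cycle, which is an assumption and not something the article states.

```python
# Unit counts as given in the text
TENSOR_CORES_PER_CHIP = 2
MXUS_PER_TENSOR_CORE = 4
MXU_DIM = 128                  # each MXU is a 128 x 128 array
VPU_CHANNELS = 128
ALUS_PER_CHANNEL = 16
VMEM_MIB_PER_TENSOR_CORE = 16

# Assumption: one multiply-accumulate (MAC) per MXU cell per cycle
macs_per_mxu = MXU_DIM * MXU_DIM
macs_per_tensor_core = MXUS_PER_TENSOR_CORE * macs_per_mxu
macs_per_chip = TENSOR_CORES_PER_CHIP * macs_per_tensor_core

# Vector unit resources
vpu_alus_per_tensor_core = VPU_CHANNELS * ALUS_PER_CHANNEL
vpu_alus_per_chip = TENSOR_CORES_PER_CHIP * vpu_alus_per_tensor_core
vmem_mib_per_chip = TENSOR_CORES_PER_CHIP * VMEM_MIB_PER_TENSOR_CORE

print(f"MAC cells per MXU:         {macs_per_mxu:,}")
print(f"MAC cells per Tensor Core: {macs_per_tensor_core:,}")
print(f"MAC cells per chip:        {macs_per_chip:,}")
print(f"VPU ALUs per chip:         {vpu_alus_per_chip:,}")
print(f"Vector memory per chip:    {vmem_mib_per_chip} MiB")
```

Under that assumption, a single chip exposes 131,072 multiply-accumulate cells, which helps explain why matrix-heavy AI workloads map well onto the design.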

In addition to the next generation of TPUs, Google started making Nvidia’s H100 GPUs generally available to developers as part of its A3 series of virtual machines in late 2023.

Amazon AWS

While Amazon Web Services had been experiencing decelerating growth over the past six quarters, the Q3 results indicated stabilization, holding steady at 12% year-over-year growth. The segment’s operating income also surged by 29% year-over-year, reaching approximately $7 billion.

In May of this year, AWS introduced the EC2 P5 virtual machine instance, built upon Nvidia H100 GPUs. This configuration includes eight Nvidia H100 Tensor Core GPUs with a combined 640 GB of high-bandwidth GPU memory (80 GB per GPU). It further boasts a third-generation AMD EPYC processor, 2TB of system memory, 30TB of local NVMe storage, an aggregate network bandwidth of 3200 Gbps, and support for GPUDirect RDMA. The latter enables direct node-to-node communication without involving the CPU, resulting in lower latency and efficient horizontal scaling.

Moreover, the Amazon EC2 P5 instance is deployable within the second generation of ultra-large-scale clusters, known as Amazon EC2 UltraClusters. These encompass high-performance computing, network resources, and cloud storage. These clusters can accommodate up to 20,000 H100 Tensor Core GPUs, enabling users to deploy machine learning models with parameters extending into the billions or trillions.
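To put the P5 and UltraCluster figures in perspective, the sketch below works through the scale arithmetic. It treats the 640 GB of GPU memory as the total across the eight GPUs in one instance (80 GB per H100). The `gpus_for_weights` helper is hypothetical, and its 2-bytes-per-parameter default (16-bit weights, counting weights only, with no optimizer state or activations) is an assumption for illustration.

```python
import math

# Figures from the text
GPUS_PER_P5_INSTANCE = 8
HBM_PER_INSTANCE_GB = 640        # total across the eight GPUs
ULTRACLUSTER_MAX_GPUS = 20_000

hbm_per_gpu_gb = HBM_PER_INSTANCE_GB / GPUS_PER_P5_INSTANCE
max_instances = ULTRACLUSTER_MAX_GPUS // GPUS_PER_P5_INSTANCE
cluster_hbm_pb = max_instances * HBM_PER_INSTANCE_GB / 1_000_000  # GB -> PB


def gpus_for_weights(num_params: int, bytes_per_param: int = 2) -> int:
    """Minimum GPUs needed just to hold the model weights in GPU memory.

    Assumption: 16-bit weights by default; ignores optimizer state,
    activations, and any replication, so real deployments need more.
    """
    weight_gb = num_params * bytes_per_param / 1e9
    return math.ceil(weight_gb / hbm_per_gpu_gb)


print(f"GPU memory per H100:        {hbm_per_gpu_gb:.0f} GB")
print(f"P5 instances per cluster:   {max_instances:,}")
print(f"Aggregate GPU memory:       {cluster_hbm_pb:.1f} PB")
print(f"GPUs for 175B-param model:  {gpus_for_weights(175_000_000_000)}")
print(f"GPUs for 1T-param model:    {gpus_for_weights(1_000_000_000_000)}")
```

Even a trillion-parameter model needs only a few dozen GPUs to hold its weights under these assumptions; the rest of a cluster this size goes toward training throughput.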

Microsoft Corporation (MSFT)

Microsoft’s cloud computing revenue experienced a 24% rise, reaching $31.8 billion in the September quarter. Among Microsoft’s three business units, Intelligent Cloud stood out as the top performer, with a 19% revenue increase to $24.3 billion. This unit encompasses server products and cloud services, including Azure, which saw a robust 29% growth, surpassing Wall Street’s anticipated 26% growth.

In March of this year, Microsoft announced in a blog post its plans for a significant upgrade of Azure. The upgrade involves tens of thousands of Nvidia’s cutting-edge H100 graphics cards, as well as faster InfiniBand network interconnect technology.

The ND H100 v5 instances also feature Intel Corp.’s latest 4th Gen Intel Xeon Scalable central processing units, with low-latency networking via Nvidia’s Quantum-2 CX7 InfiniBand technology. They also incorporate PCIe Gen5 to provide 64 gigabytes per second bandwidth per GPU, and DDR5 memory that enables faster data transfer speeds to handle the largest AI training datasets.
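The 64 gigabytes per second figure is consistent with a PCIe Gen5 link of 16 lanes. The sketch below shows the arithmetic; the per-lane rate (32 GT/s) and 128b/130b line encoding come from the PCIe 5.0 specification, while the x16 link width and the per-direction interpretation are assumptions, since the text gives neither.

```python
# PCIe 5.0 parameters (from the specification)
GIGATRANSFERS_PER_LANE = 32.0     # GT/s per lane, one bit per transfer
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding

# Assumption: each GPU is attached over a full x16 link
LANES = 16

# Raw rate per direction, in gigabytes per second
raw_gb_per_s = GIGATRANSFERS_PER_LANE * LANES / 8

# Usable rate after encoding overhead (before protocol overhead)
effective_gb_per_s = raw_gb_per_s * ENCODING_EFFICIENCY

print(f"Raw x16 bandwidth per direction:       {raw_gb_per_s:.1f} GB/s")
print(f"After 128b/130b encoding, per direction: {effective_gb_per_s:.2f} GB/s")
```

The headline number matches the raw rate; usable throughput is slightly lower once encoding and protocol overhead are taken into account.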

Successful Startups

Applications like ChatGPT have further solidified Nvidia’s position in the AI industry. Its GPU chips have become a linchpin in a wide array of AI applications. As a result, any startup aiming to challenge Nvidia in this space faces substantial pressure, given the established dominance and reliability of Nvidia’s technology.

Cerebras

Nvidia’s A100 GPU is already substantial, covering 826 square millimeters. In contrast, Cerebras’ WSE-2 chip is massive, occupying an area of 46,225 square millimeters, which takes up most of the usable surface of a 12-inch (300 mm) silicon wafer. Since its inception in 2016, Cerebras has secured $730 million in funding. According to CB Insights Global Unicorn Club, the company is presently valued at $4 billion.
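A quick area comparison shows the scale involved. The die area used here is Cerebras’ published WSE-2 figure of 46,225 square millimeters (215 mm × 215 mm), and the wafer is modeled as a plain circle with no edge exclusion, which is a simplification.

```python
import math


def wafer_area_mm2(diameter_mm: float) -> float:
    """Area of a circular wafer, ignoring edge exclusion and the notch."""
    return math.pi * (diameter_mm / 2) ** 2


A100_DIE_MM2 = 826        # from the text
WSE2_DIE_MM2 = 46_225     # Cerebras' published figure (215 mm x 215 mm)

area_200mm = wafer_area_mm2(200)   # 8-inch wafer
area_300mm = wafer_area_mm2(300)   # 12-inch wafer

size_ratio = WSE2_DIE_MM2 / A100_DIE_MM2
share_of_300mm = WSE2_DIE_MM2 / area_300mm

print(f"WSE-2 vs. A100 die area:      {size_ratio:.0f}x")
print(f"8-inch wafer area:            {area_200mm:,.0f} mm^2")
print(f"12-inch wafer area:           {area_300mm:,.0f} mm^2")
print(f"WSE-2 share of 12-inch wafer: {share_of_300mm:.0%}")
print(f"Fits within 8-inch wafer area: {WSE2_DIE_MM2 <= area_200mm}")
```

A die of this size exceeds the total area of an 8-inch wafer, so it can only be cut from a 12-inch one, where a square die occupies roughly two-thirds of the circle.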

Cerebras has entered into a collaboration with Abu Dhabi’s G42 to construct the first of nine artificial intelligence supercomputers, a project that costs over $100 million. Additionally, Cerebras is actively pursuing opportunities in the generative AI field. Although it has demonstrated impressive training speeds with its CS-2 model in the context of GPT, it has yet to gain adoption by major manufacturers in the industry.

SambaNova

Founded in 2017, SambaNova has emerged as one of the best-funded companies in the AI chip industry. It has raised $1 billion in financing, with notable backers such as Softbank and Intel. This makes SambaNova the most heavily financed AI chip startup and, with a valuation of $5 billion, one of Nvidia’s most formidable emerging competitors.

SambaNova recently introduced its fourth-generation SN40L processor. The chip has over 102 billion transistors and is manufactured using Taiwan Semiconductor Manufacturing Company Limited’s (TSM) advanced 5nm process, delivering up to 638 teraflops. It features a three-layer memory system comprising on-chip memory, high-bandwidth memory, and high-capacity memory, all designed to handle the substantial data streams associated with AI workloads. SambaNova claims that just eight of these chips in a node can support models with up to 5 trillion parameters, nearly three times the reported size of OpenAI’s GPT-4 LLM.

Tenstorrent

Tenstorrent is another notable startup in the AI chip industry, established in 2016. To date, the company has secured nearly $335 million in funding, with recent investments from major players like Samsung and Hyundai, resulting in a current valuation of approximately $1 billion.

Tenstorrent is setting its sights on challenging Nvidia’s dominance in the AI sector by developing AI CPUs using RISC-V and chiplet technology. Notably, the company has recently entered into a production collaboration with Samsung and intends to use Samsung’s advanced 4nm process for chip manufacturing.

Not-so-Successful Startups

Graphcore

Graphcore has made a significant mark in the European semiconductor startup scene, particularly in fundraising. Founded in 2016 by Nigel Toon and Simon Knowles, the company has a unique focus on developing Intelligent Processing Units (IPUs), which are distinct from the prevailing GPUs (Graphics Processing Units) used in artificial intelligence applications. Graphcore asserts that its IPU technology offers distinct advantages over GPUs in addressing the specific requirements of AI.

PitchBook data shows that Graphcore has secured over $600 million in investment. However, despite its substantial funding, the company’s revenue has remained relatively modest. The narrative took a significant turn in 2020 when Microsoft decided to discontinue the use of Graphcore’s chips in its cloud computing centers, costing Graphcore a major customer and making its path considerably harder.

According to the Financial Times, Graphcore’s revenue plunged by 46% to just $2.7 million in 2022. At the same time, its pre-tax losses increased by 11% to $204.6 million, and it ended the year with a cash balance of $157 million. Graphcore stated that it would need additional financing by May of the following year in order to reach break-even. The company attributed this setback to the “adverse macroeconomic environment” and delays in hardware procurement from “key strategic customers,” particularly significant customers in China.

Currently, Graphcore is realigning its business strategy, transitioning its IPU chips from data centers to cloud computing environments. This shift represents a strategic response to adapt to changing market dynamics and challenges in the semiconductor industry.

GSI Technology, Inc. (GSIT)

GSI Technology is the developer of the Gemini Associative Processing Unit (“APU”) for AI and high-performance parallel computing solutions for the networking, telecommunications, and military markets, which I discussed in my May 19, 2023, Seeking Alpha article entitled “GSI Technology: More Than A Meme And A Viable Alternative To Nvidia.”

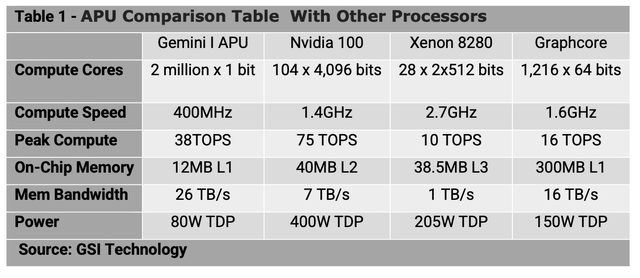

As shown in Table 1, Gemini-I outperforms other types of processors. A Gemini-I chip can perform two million x 1-bit operations per 600MHz clock cycle with a 39 TB/sec memory bandwidth, whereas an Intel Xeon 8280 can do 28 x 2 x 512 bits at 2.7GHz with a 1TB/sec memory bandwidth.
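The comparison above can be reduced to raw bit operations per second. The sketch below multiplies out the figures exactly as quoted, reading "28 x 2 x 512 bits" as bit operations per clock cycle for the Xeon. That reading is an interpretation, and raw bit throughput is only a rough proxy for real workload performance.

```python
# Gemini-I, as quoted in the text
GEMINI_BIT_OPS_PER_CYCLE = 2_000_000   # two million 1-bit operations per cycle
GEMINI_CLOCK_HZ = 600e6                # 600 MHz
GEMINI_MEM_BW_TB_S = 39

# Intel Xeon 8280, as quoted in the text
XEON_BIT_OPS_PER_CYCLE = 28 * 2 * 512  # read as bit operations per cycle
XEON_CLOCK_HZ = 2.7e9                  # 2.7 GHz
XEON_MEM_BW_TB_S = 1

gemini_bit_ops_per_s = GEMINI_BIT_OPS_PER_CYCLE * GEMINI_CLOCK_HZ
xeon_bit_ops_per_s = XEON_BIT_OPS_PER_CYCLE * XEON_CLOCK_HZ

compute_ratio = gemini_bit_ops_per_s / xeon_bit_ops_per_s
bandwidth_ratio = GEMINI_MEM_BW_TB_S / XEON_MEM_BW_TB_S

print(f"Gemini-I:  {gemini_bit_ops_per_s:.3e} bit-ops/s")
print(f"Xeon 8280: {xeon_bit_ops_per_s:.3e} bit-ops/s")
print(f"Compute ratio:          {compute_ratio:.1f}x")
print(f"Memory bandwidth ratio: {bandwidth_ratio:.0f}x")
```

On these figures, Gemini-I’s advantage is larger in memory bandwidth than in raw bit throughput, despite its much lower clock speed.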

GSI Technology

In the second quarter of fiscal 2024, the company reported a net loss of $(4.1) million, or $(0.16) per diluted share, on net revenues of $5.7 million. This compares with a net loss of $(3.2) million, or $(0.13) per diluted share, on net revenues of $9.0 million in the second quarter of fiscal 2023. In the first quarter of fiscal 2024, the company reported a net loss of $(5.1) million, or $(0.21) per diluted share, on net revenues of $5.6 million.

The gross margin for the second quarter of fiscal 2024 was 54.7%, down from 62.6% in the same period of the previous year and slightly lower than the 54.9% gross margin reported in the preceding first quarter. This data indicates the company’s financial performance and margin trends over the specified periods.

Mythic

Mythic is a prominent company specializing in analog AI chips, with a focus on compute-in-memory (CIM) technology. However, as reported by the technology website The Register, this AI chip startup faced significant funding challenges. Despite initially raising around $160 million, the company encountered financial difficulties in the past year, to the extent that it was on the brink of ceasing operations.

In March 2023, Mythic managed to secure $13 million in investment, allowing it to continue its operations. Dave Fick, the CEO of Mythic, said that Nvidia, albeit indirectly, contributed to the broader AI chip funding predicament. Investors tend to gravitate toward opportunities with the potential for substantial returns, which makes it difficult for AI chip startups like Mythic to secure the funding they need.

Rivos

Rivos, a manufacturer of server chips, finds itself entangled in a legal dispute with Apple, which has accused Rivos of unlawfully recruiting Apple’s engineers and misappropriating trade secrets. In August 2023, Rivos laid off approximately 20 employees, representing roughly 6% of the company’s workforce. During this process, management disclosed to the remaining employees that the company’s prospects for securing new funding were diminishing.

Nvidia Investments

Venture funding for chip startups is experiencing an unprecedented decline, largely attributed to Nvidia’s dominant position in the artificial intelligence chip market. Data from the United States shows that chip startups have witnessed a staggering 80% decrease in deals compared to the previous year.

U.S. chip startups have raised $881.4 million through the end of August, according to PitchBook data. That compares to $1.79 billion for the first three quarters of 2022. The number of deals has dropped from 23 to four through the end of August.
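The size of the decline can be worked out from the PitchBook figures above. Note that the two periods are not the same length (eight months of 2023 against nine months of 2022), so the sketch also compares average monthly run rates. Treating the funding as evenly spread across months is an assumption made purely for illustration.

```python
def pct_decline(old: float, new: float) -> float:
    """Percentage decline from old to new."""
    return (old - new) / old * 100.0


# Figures from the text
FUNDING_2023_MN = 881.4    # $ millions, January through August 2023
FUNDING_2022_MN = 1790.0   # $ millions, first three quarters of 2022
DEALS_2022 = 23
DEALS_2023 = 4
MONTHS_2023 = 8
MONTHS_2022 = 9

deal_decline = pct_decline(DEALS_2022, DEALS_2023)
funding_decline = pct_decline(FUNDING_2022_MN, FUNDING_2023_MN)

# Assumption: funding is spread evenly across months in each period
run_rate_decline = pct_decline(
    FUNDING_2022_MN / MONTHS_2022,
    FUNDING_2023_MN / MONTHS_2023,
)

print(f"Decline in deal count:           {deal_decline:.1f}%")
print(f"Decline in dollars raised:       {funding_decline:.1f}%")
print(f"Decline in monthly run rate:     {run_rate_decline:.1f}%")
```

The drop in deal count is consistent with the roughly 80% decrease cited above, while the dollar amount raised has fallen by about half.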

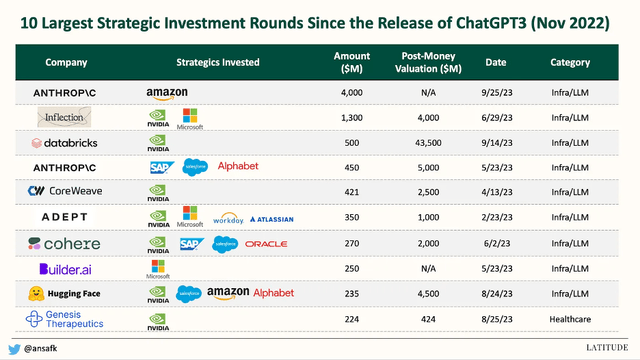

However, Nvidia has been at the center of its own investment universe. Chart 3 shows PitchBook data as of October 23, 2023, for the top strategic investments since the release of ChatGPT. Nvidia was an investor in all but two of the companies.

Chart 3

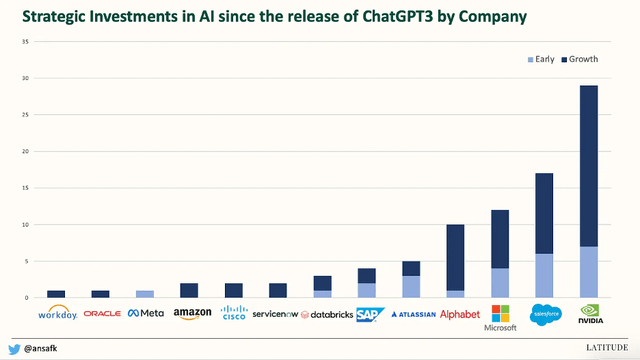

Nvidia has been the most active investor, not just in the top investments by size, but also in overall count. Nearly half of all the investments made in the November 2022 – October 2023 time period included Nvidia as an investor.

Chart 4

Nvidia’s investment strategy appears to be focused heavily on growth-stage companies, with over 75% of its investments directed toward this category. Notably, it has participated in funding rounds for 8 of the 10 largest financings during this period. Infrastructure/LLM (Large Language Models) is the primary sector, accounting for nearly half of Nvidia’s total investments. The healthcare/therapeutics sector is the next most substantial area of investment for Nvidia.

Investor Takeaway

At present, it appears unlikely that any startup will emerge as a third major player in the GPU market, alongside industry giants Nvidia and AMD. Even Intel, a dominant force in the chip industry, has encountered challenges in its attempts to create a high-end GPU embraced by gaming enthusiasts. Intel’s next discrete GPU is scheduled for release in 2025. This situation underscores the formidable position of Nvidia and AMD in the GPU market, with limited competition expected in the near future.

GPUs have proven to be the ideal hardware for handling the immense number of calculations necessary to train Large Language Models (LLMs), which can have vast numbers of parameters, such as GPT-3’s 175 billion. Nvidia has strategically cultivated its position in this domain by developing and expanding the CUDA software platform. CUDA offers a range of proprietary libraries, compilers, frameworks, and development tools, providing AI professionals with the tools they need to construct their models. Crucially, CUDA is exclusive to Nvidia GPUs, and this fusion of hardware and software has resulted in substantial customer switching costs within the AI field, contributing to Nvidia’s competitive advantage.

Even if a chip competitor were to produce a GPU comparable to Nvidia’s, it’s reasonable to assume that the code and models already built on CUDA may not be easily transferable to a different GPU. This gives Nvidia an inherent incumbency advantage. While it’s possible that alternative approaches may emerge that do not rely on CUDA or Nvidia GPUs, as of 2023, Nvidia faces minimal competition in this arena. Consequently, any enterprise that delays LLM development while awaiting alternatives risks falling behind as Nvidia continues to dominate the field.

When evaluating the competitive landscape in the chip industry, it’s clear that Nvidia faces several contenders and potential threats:

- AMD: AMD is a well-funded chipmaker with strong GPU expertise. However, its relative weakness on the software front may hinder its ability to compete effectively with Nvidia.

- Intel: Although Intel hasn’t seen much success in AI accelerators or GPUs, it should not be underestimated. As a major player in the semiconductor industry, Intel has the resources and capacity to make significant advancements in this field.

- In-House Solutions from Hyperscalers: Companies like Google, Amazon, Microsoft, and Meta Platforms are developing their in-house chips, such as TPUs, Trainium, and Inferentia. While these chips may excel in specific workloads, they might not outperform Nvidia’s GPUs across a wide range of applications.

- Cloud Computing Companies: Cloud providers will need to offer a variety of GPUs and accelerators to cater to their enterprise customers running AI workloads. While Amazon and Google may use their in-house chips for their own AI models, convincing a broad range of enterprise customers to optimize their AI models for these proprietary semiconductors could lead to vendor lock-in, which enterprises typically avoid.

Despite these competitive forces, it is expected that enterprise customers will continue to demand neutral merchant GPU vendors. Nvidia is likely to maintain a leading position in the market for the foreseeable future, primarily due to its strong software and hardware integration, the widespread adoption of its CUDA software platform, and the significant customer switching costs associated with its technology. These factors collectively contribute to Nvidia’s competitive advantage and help solidify its position in the AI chip market.

I rate Nvidia Corporation a Buy.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

