Summary:

- Despite Nvidia Corporation reporting blockbuster fiscal Q3 results and stellar guidance that reinforces the staying power of emerging AI tailwinds, the stock has slipped from its all-time high valuation.

- Nvidia stock’s latest pullback likely reflects an adjustment for revenue lost to recent export rule changes affecting Nvidia’s GPU sales to the Chinese market.

- At current levels, Nvidia is likely still priced for perfect execution, with several industry-wide and company-specific risks on the horizon that could overshadow existing momentum in AI opportunities.


Despite another blockbuster quarter and blowout end-of-year guidance, Nvidia Corporation (NASDAQ:NVDA) stock has yet to recapture the all-time high above $500 per share that it reached on the eve of its F3Q24 earnings release. The investment community has largely attributed the paradox to the stock’s rich valuation, which demands a perfect execution that Nvidia has yet to deliver. This is consistent with the recent impact of Washington’s updated rules on exports of advanced semiconductor technologies to China, which Nvidia expects to partially offset the surging demand for its products observed in other regions.

Yet the company’s latest results and forward outlook continue to reinforce prospects for a strong demand environment, bolstered by Nvidia’s mission-critical role in supporting next-generation growth opportunities. Looking ahead, we view Nvidia’s unmatched profit margins and growth opportunities relative to its semiconductor and tech peers in the $1+ trillion market cap range, enhanced by emerging AI demand, as key drivers for sustaining the stock’s performance at current levels. However, the potential for further near-term gains may rest on how quickly, and to what extent, Nvidia can recapture opportunities in China and recover lost share in the region, as well as on its ability to create incremental TAM-expanding opportunities in non-AI areas through continued innovation.

Multiple Demand Drivers to Sustain Growth

After two consecutive quarters of breakneck growth, particularly in the data center segment amid strong monetization of rising AI opportunities, a growing chorus of concerns has emerged over the trend’s longer-term sustainability. In response, Nvidia CEO Jensen Huang has reaffirmed his confidence in the data center segment’s ability to “grow through 2025,” citing several drivers behind that outlook.

Accelerated Computing

Specifically, Huang has attributed Nvidia’s long-term growth trajectory not only to existing demand from the burst of AI workloads but also to the broader transition from general-purpose to accelerated computing. Considering the $1 trillion spent on installing CPU-based data centers over the past four years, Nvidia is well positioned to realize even greater opportunities from the impending upgrade cycle to accelerated computing, spearheaded by the emergence of generative AI as the “primary workload of most of the world’s data centers.”

This is consistent with the gradual rise in prices of Nvidia’s next-generation accelerators, which underscores the potential for a total addressable market, or TAM, exceeding $1 trillion from the transition to accelerated computing. Specifically, Nvidia’s best-selling H100 GPU, based on the Hopper architecture, sells at an average price of about $30,000, despite having surged toward $50,000 apiece earlier this year in secondary markets due to constrained supplies. The upcoming H200, which will be the first GPU to offer HBM3e (the latest generation of high-bandwidth memory) and is capable of doubling inference speed relative to the H100, is expected to cost as much as $40,000 apiece. This compares with an average of about $10,000 apiece for the H100’s predecessor, the Ampere-based A100.

Both the DGX A100 and DGX H100 systems contain eight GPUs (A100 and H100, respectively), even though the latter delivers greater performance and inference speed; with the GPU count unchanged, the H100’s higher unit price translates directly into greater dollar content per system. Meanwhile, the latest DGX GH200 system strings together 256 GH200 Superchips and offers nearly 500x more memory than the DGX A100 system. Taken together, these highlight the TAM-expanding opportunity stemming from the emerging era of accelerated computing.
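A rough back-of-the-envelope check of the per-system argument above, using the average per-GPU prices cited in this section and the eight-GPU configuration of the DGX A100 and DGX H100. This is a simplification we are assuming for illustration: it counts GPU content only and ignores CPUs, networking, memory, and system margins, so it does not represent actual DGX system prices.

```python
# Average per-GPU prices cited in this article (USD). These are approximate
# market prices, not Nvidia list prices; GPU content only, no other components.
AVG_GPU_PRICE = {
    "A100": 10_000,
    "H100": 30_000,
}

GPUS_PER_DGX = 8  # DGX A100 and DGX H100 each carry eight GPUs


def gpu_content_per_system(gpu: str, gpus_per_system: int = GPUS_PER_DGX) -> int:
    """Dollar value of the GPUs alone in one system (excludes CPUs, networking, etc.)."""
    return AVG_GPU_PRICE[gpu] * gpus_per_system


if __name__ == "__main__":
    a100 = gpu_content_per_system("A100")
    h100 = gpu_content_per_system("H100")
    print(f"DGX A100 GPU content: ${a100:,}")
    print(f"DGX H100 GPU content: ${h100:,}")
    print(f"Uplift per system: {h100 / a100:.1f}x")
```

On these assumptions, GPU content rises from about $80,000 to about $240,000 per system, a roughly 3x increase with no change in GPU count.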

The era of accelerated computing has also increased demand for next-generation data center GPUs across a wide range of verticals spanning cloud service providers (“CSPs”), enterprise customers, and sovereign cloud infrastructure, which highlights Nvidia’s prospects for incremental market share gains in this area. This is consistent with the planned deployment of H200-based instances by the major CSPs, including Amazon Web Services (AMZN), Google Cloud (GOOG, GOOGL), Microsoft Azure (MSFT), and Oracle Cloud (ORCL), when the chip becomes generally available next year, which offers validation of the technology. Nvidia has also made initial shipments of the GH200 Grace Hopper Superchip to the Los Alamos National Lab, the Swiss National Supercomputing Center, and the UK government to support the country’s build of “the world’s fastest AI supercomputers called Isambard-AI,” underscoring the chipmaker’s extensive reach into emerging opportunities across the public sector as well.

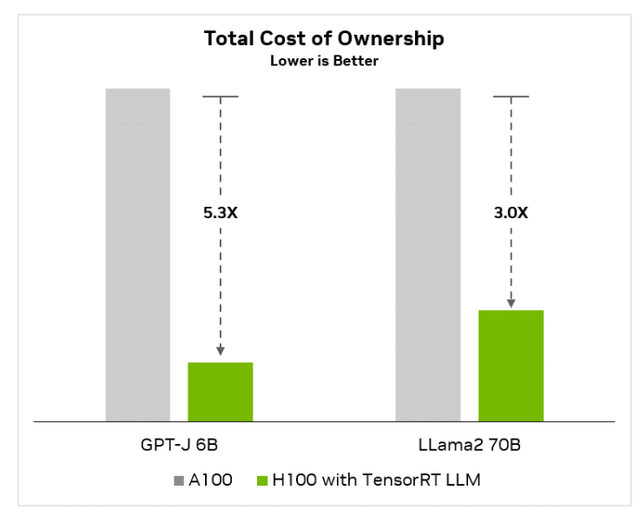

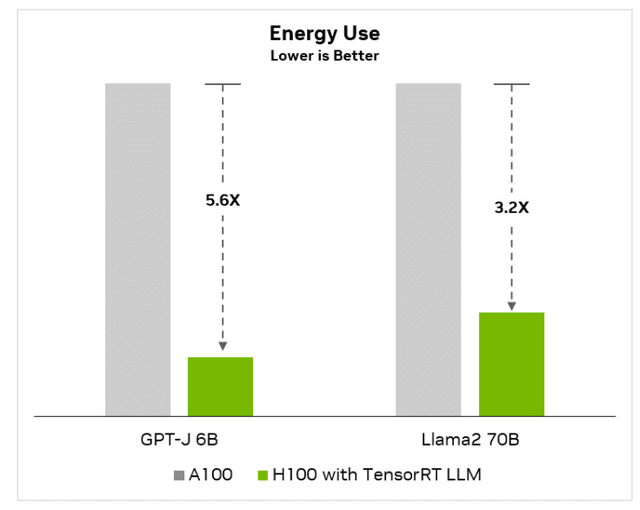

Competitive TCO

Nvidia’s latest innovations have also given it a competitive TCO (total cost of ownership) advantage. This continues to address customers’ demand for greater cost efficiency while delivering the performance improvements needed to run increasingly complex workloads.

For instance, Nvidia has been actively stepping up its complementary software capabilities to optimize TCO across its hardware portfolio for customers. This includes the recent introduction of TensorRT-LLM, which, combined with the H100 GPU, can deliver up to 8x better performance than the A100 GPU alone on small language models, while also reducing TCO by as much as 5.3x and energy costs by as much as 5.6x.
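“N-times lower” claims are easy to misread, so a quick conversion into percentage savings may help. The sketch below assumes the multiples are straightforward cost ratios versus the A100 baseline (a cost that falls by a factor of N ends at 1/N of the original); the function name is our own, for illustration only.

```python
def reduction_from_multiple(multiple: float) -> float:
    """Convert an 'N-times lower' cost claim into a fractional saving.

    Assumes the multiple is a simple cost ratio: cost ends at 1/N of the
    baseline, so the saving is 1 - 1/N.
    """
    if multiple <= 0:
        raise ValueError("multiple must be positive")
    return 1 - 1 / multiple


# Multiples cited for H100 + TensorRT-LLM versus the A100 baseline
for label, multiple in [("TCO", 5.3), ("Energy cost", 5.6)]:
    print(f"{label}: {multiple}x lower -> about {reduction_from_multiple(multiple):.0%} saving")
```

Read this way, a 5.3x TCO reduction works out to roughly an 81% saving, and a 5.6x energy reduction to roughly 82%.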

Source: developer.nvidia.com.

The improvements are realized through a combination of innovations spanning tensor parallelism, in-flight batching, and quantization:

- Tensor parallelism: The feature enables inferencing – or the process of having a trained model make predictions on live data – at scale. Historically, developers have had to make manual adjustments to models in order to coordinate parallel execution across multiple GPUs and optimize LLM inference performance. However, tensor parallelism eliminates a large roadblock by allowing large advanced language models to “run in parallel across multiple GPUs connected through NVLink and across multiple servers without developer intervention or model changes,” thus significantly reducing time and cost to deployment.

- In-flight batching: In-flight batching is an “optimized scheduling technique” that allows an LLM to continuously execute multiple requests simultaneously. The feature optimizes GPU utilization and minimizes idle capacity, which in turn improves TCO.

With in-flight batching, rather than waiting for the whole batch to finish before moving on to the next set of requests, the TensorRT-LLM runtime immediately evicts finished sequences from the batch. It then begins executing new requests while other requests are still in flight.

Source: NVIDIA Technical Blog.

- Quantization: This is the process of reducing the GPU memory occupied by the billions of weight values within an LLM. It lowers memory consumption during model inference on the same hardware, while enabling faster performance and higher accuracy. TensorRT-LLM automates the quantization process, converting model weights into lower-precision formats without requiring any modification to the model code.
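To make the in-flight batching behavior in the quoted passage concrete, here is a toy simulation contrasting static batching with a scheduler that evicts finished sequences each step and immediately fills freed slots from the queue (the same idea is often called continuous batching). It is a sketch under simplifying assumptions of our own: every active request emits one token per decode step, the batch size and request lengths are hypothetical, and none of this reflects TensorRT-LLM internals or its API.

```python
from collections import deque
from typing import List


def static_batching_steps(lengths: List[int], batch_size: int) -> int:
    """Each batch runs until its longest request finishes before the next batch starts."""
    steps = 0
    for i in range(0, len(lengths), batch_size):
        steps += max(lengths[i:i + batch_size])
    return steps


def inflight_batching_steps(lengths: List[int], batch_size: int) -> int:
    """Finished requests are evicted each step and queued requests take their slots."""
    queue = deque(lengths)
    active: List[int] = []  # remaining tokens for each in-flight request
    steps = 0
    while queue or active:
        # Fill any free slots from the waiting queue
        while queue and len(active) < batch_size:
            active.append(queue.popleft())
        # One decode step: every in-flight request produces one token
        active = [remaining - 1 for remaining in active]
        steps += 1
        # Evict finished sequences immediately instead of waiting for the batch
        active = [remaining for remaining in active if remaining > 0]
    return steps


if __name__ == "__main__":
    # Hypothetical output lengths (in tokens) for six requests
    requests = [10, 2, 8, 3, 9, 1]
    print("static:", static_batching_steps(requests, batch_size=2))
    print("in-flight:", inflight_batching_steps(requests, batch_size=2))
```

With these toy numbers, static batching needs 27 decode steps and in-flight batching 19, because short requests no longer leave a slot idle while the longest request in their batch finishes.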

Taken together, TensorRT-LLM addresses the major optimization requirements of enterprise and CSP deployments and continues to validate the value proposition of the NVIDIA CUDA and hardware ecosystem. Coupled with compatibility with major LLMs such as Meta Platforms’ (META) Llama 2 and OpenAI’s GPT-2 and GPT-3, on which many of the most popular generative AI capabilities are built, the introduction of TensorRT-LLM is expected to further reinforce Nvidia’s capture of growth opportunities ahead. By improving TCO, Nvidia also plays a critical role in expanding the reach of generative AI, which in turn reinforces a sustained demand flywheel for its offerings.

Full Stack Advantage

Nvidia has also bolstered its full-stack advantage in recent years by building out its complementary software-hardware ecosystem. In our opinion, this has been a key pillar of its sustained growth trajectory, as the strategy increases demand stickiness while also enabling monetization at every stage of the emerging AI opportunity, spanning hardware/infrastructure build-out, foundation model development, and consumer-facing application deployment.

On the hardware front, in addition to the demand for accelerators as discussed in the earlier section, Nvidia has also become a key beneficiary of increased demand for networking solutions. Specifically, the company has expanded the annualized revenue run rate for its networking business beyond $10 billion, bolstered by accelerating demand for its proprietary InfiniBand and NVLink networking technologies. As discussed in previous coverage, Microsoft has spent “several hundred million dollars” on networking hardware just to link up the “tens of thousands of [NVIDIA GPUs]” needed to support the supercomputer it uses for AI training and inference. This includes NVLink and “over 29,000 miles of InfiniBand cabling,” highlighting the criticality of Nvidia’s networking technology in enabling “scale and performance needed for training LLMs.”

Meanwhile, on the software front, Nvidia is progressing toward a $1 billion annualized revenue run rate on related offerings by the end of fiscal 2024. These offerings include DGX Cloud, which facilitates compute demands from customers for training and inferencing complex generative AI workloads in the cloud, as well as NVIDIA AI Enterprise, which comprises comprehensive tools designed for streamlining the development and deployment of custom AI solutions for customers.
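Annualized run rates are commonly computed as the latest quarter’s revenue multiplied by four. Assuming that convention (the article does not spell out its method), the sketch below backs out the quarterly revenue implied by the networking and software run rates cited above.

```python
QUARTERS_PER_YEAR = 4


def annualized_run_rate(quarterly_revenue: float) -> float:
    """Assumed convention: latest quarter's revenue x 4."""
    return quarterly_revenue * QUARTERS_PER_YEAR


def implied_quarterly_revenue(run_rate: float) -> float:
    """Invert the assumed convention to recover the quarterly revenue a run rate implies."""
    return run_rate / QUARTERS_PER_YEAR


# Run rates cited in the article, in billions of USD
for segment, run_rate in [("Networking", 10.0), ("Software (target)", 1.0)]:
    print(f"{segment}: ${run_rate:.1f}B run rate -> ${implied_quarterly_revenue(run_rate):.2f}B per quarter")
```

Under this convention, a networking run rate above $10 billion implies more than $2.5 billion of quarterly networking revenue, and the $1 billion software target implies about $250 million per quarter.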

Taken together, the combined ecosystem spanning hardware, software, and support services is expected to deepen Nvidia’s reach into growth opportunities stemming from the advent of AI and beyond. In our opinion, it also provides a diversified revenue portfolio, which mitigates Nvidia’s exposure to an eventual downcycle in hardware demand.

Fundamental and Valuation Considerations

The combination of rising accelerated computing adoption, a competitive TCO advantage, and Nvidia’s comprehensive business model is expected to reinforce the chipmaker’s long-term growth trajectory. While the data center segment has been the key beneficiary of the demand drivers discussed in the foregoing analysis, these drivers are also expected to unlock adjacent opportunities in Nvidia’s other business segments.

This is consistent with industry views that the PC and smartphone markets are poised to benefit from an impending AI shift, potentially heralding the next growth cycle for Nvidia’s core gaming business. The emergence of industrial generative AI is also poised to unlock synergies between NVIDIA AI and NVIDIA Omniverse, reinforcing the longer-term growth story for the professional visualization segment. Meanwhile, the automotive segment is expected to benefit from the ramp of AI-enabled ADAS/self-driving solutions that rely on Nvidia platforms such as NVIDIA DRIVE.

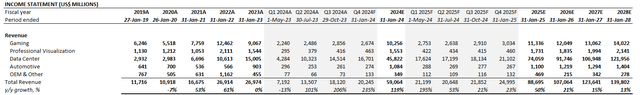

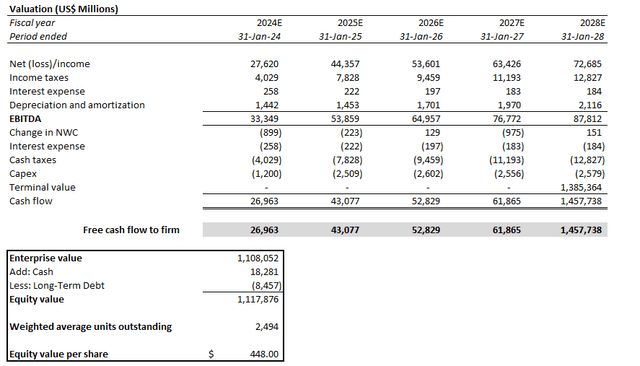

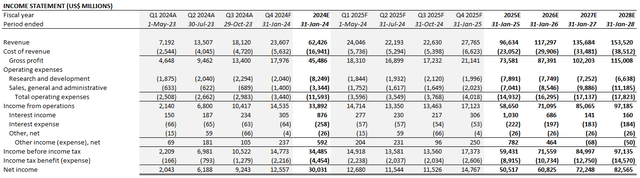

Adjusting our previous forecast for Nvidia’s actual F3Q24 performance and forward prospects based on the foregoing discussion, we expect the company to finish the year with revenue growth of 119% y/y to $59.1 billion. This would imply total revenue of $20.2 billion for the current quarter, in line with management’s guidance.

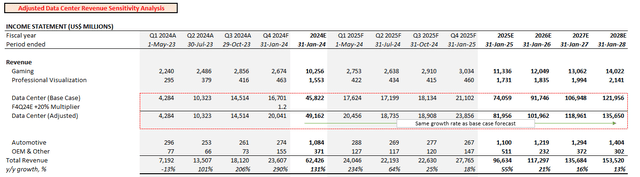

Considering the data center segment’s positioning as the key beneficiary of existing AI tailwinds, as well as Nvidia’s sales mix in recent quarters, we expect data center revenue to expand by 205% y/y to $45.8 billion in fiscal 2024. Data center revenue growth is expected to remain in the high double-digit percentage range through fiscal 2026 and normalize at lower levels thereafter. This is consistent with the market opportunities outlined by management and discussed in the foregoing analysis, as well as with expectations that software and services will become key growth drivers in the data center segment as the next cycle of hardware inventory digestion sets in.
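As a simple consistency check on the forecast, the fiscal 2024 estimates and growth rates above can be inverted to recover the fiscal 2023 base each one implies, along with data center’s implied share of total revenue. Only the figures from this article are used; the function name is our own.

```python
def implied_prior_year(current: float, growth: float) -> float:
    """Back out last year's value from this year's value and its y/y growth rate."""
    return current / (1 + growth)


# Fiscal 2024 estimates from this article, in billions of USD
TOTAL_FY24, TOTAL_GROWTH = 59.1, 1.19  # +119% y/y
DC_FY24, DC_GROWTH = 45.8, 2.05        # +205% y/y

total_fy23 = implied_prior_year(TOTAL_FY24, TOTAL_GROWTH)
dc_fy23 = implied_prior_year(DC_FY24, DC_GROWTH)

print(f"Implied FY23 total revenue: ${total_fy23:.1f}B")                 # $27.0B
print(f"Implied FY23 data center revenue: ${dc_fy23:.1f}B")              # $15.0B
print(f"Data center share of FY24 revenue: {DC_FY24 / TOTAL_FY24:.1%}")  # 77.5%
```

The implied bases of roughly $27.0 billion in total revenue and $15.0 billion in data center revenue show how heavily the fiscal 2024 forecast leans on the data center segment, which would account for about 77.5% of sales.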

The impending growth of higher-margin data center revenue is expected to sustain Nvidia’s unmatched profit margins for the foreseeable future. We expect GAAP-based gross margins of 72% for fiscal 2024, with normalization toward the mid-70% range over the longer term as complementary high-margin software and services revenues continue to scale.

Nvidia_-_Forecasted_Financial_Information.pdf

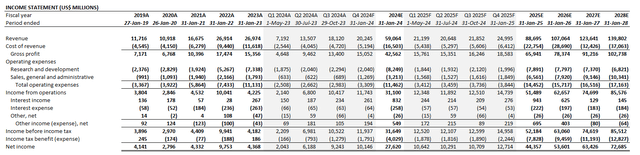

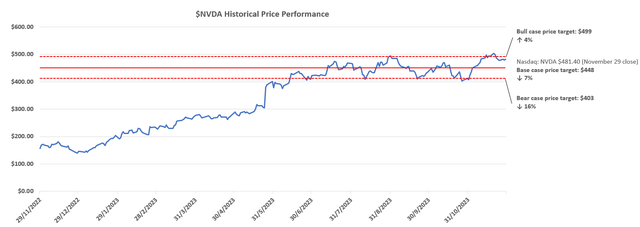

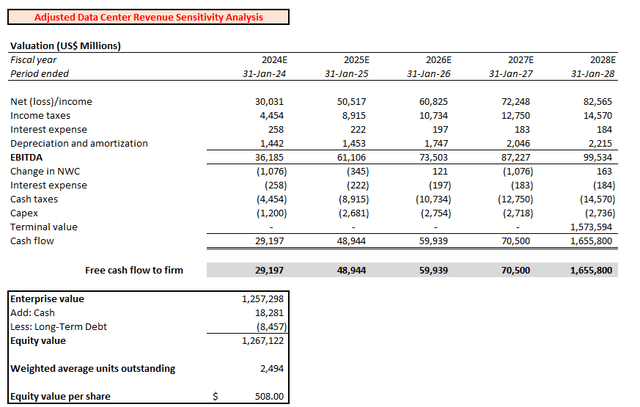

We are setting a base case price target of $448 for Nvidia stock.

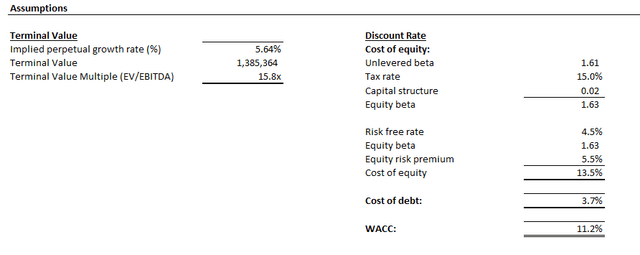

The base case price target is derived from a discounted cash flow (“DCF”) analysis, which uses cash flow projections in line with the fundamental analysis discussed in the earlier section. The analysis applies an 11% WACC, consistent with Nvidia’s capital structure and risk profile relative to an estimated normalized benchmark Treasury rate of 4.5% under the “higher for longer” monetary policy stance. The analysis assumes a 5.6% perpetual growth rate on projected fiscal 2028 EBITDA, in line with Nvidia’s key demand drivers discussed in the foregoing analysis. This perpetual growth rate is equivalent to 3% applied on projected fiscal 2033 EBITDA, when Nvidia’s growth profile is expected to normalize in line with the pace of estimated economic expansion across its core operating regions.
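For readers less familiar with the mechanics, below is a minimal perpetuity-growth DCF sketch using the 11% WACC and 5.6% perpetual growth rate cited above. Everything else is an assumption for illustration: the cash-flow series and share count are hypothetical placeholders (not the article’s projections), net debt is taken as zero so enterprise value equals equity value, and the terminal value applies the Gordon growth formula to the final-year cash flow. The author’s model, which applies the growth rate to EBITDA, may differ in its details.

```python
from typing import Sequence


def dcf_value(cash_flows: Sequence[float], wacc: float, terminal_growth: float) -> float:
    """Present value of explicit cash flows plus a Gordon-growth terminal value.

    cash_flows[0] arrives one year from today and cash_flows[-1] in year N.
    Terminal value at year N = CF_N * (1 + g) / (WACC - g), discounted back N years.
    """
    if terminal_growth >= wacc:
        raise ValueError("terminal growth must be below the discount rate")
    pv_explicit = sum(cf / (1 + wacc) ** t for t, cf in enumerate(cash_flows, start=1))
    n = len(cash_flows)
    terminal_value = cash_flows[-1] * (1 + terminal_growth) / (wacc - terminal_growth)
    pv_terminal = terminal_value / (1 + wacc) ** n
    return pv_explicit + pv_terminal


if __name__ == "__main__":
    WACC = 0.11              # from the article
    TERMINAL_GROWTH = 0.056  # from the article
    # Hypothetical cash flows in billions of USD (NOT the article's projections)
    hypothetical_cash_flows = [30.0, 40.0, 48.0, 55.0, 60.0]
    hypothetical_shares_bn = 2.5  # placeholder share count, in billions
    # Assumes zero net debt, so the DCF value is treated as equity value
    equity_value = dcf_value(hypothetical_cash_flows, WACC, TERMINAL_GROWTH)
    print(f"Equity value: ${equity_value:,.0f}B")
    print(f"Per share: ${equity_value / hypothetical_shares_bn:,.2f}")
```

Because the gap between the discount rate and the growth rate sits in the terminal-value denominator, small changes to either assumption move the resulting price target materially.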

China Risks

The re-emergence of China headwinds following Washington’s updated export rules on advanced semiconductor technologies is likely a key culprit behind Nvidia stock’s recent post-earnings pullback. While AI tailwinds have largely overshadowed Nvidia’s exposure to China risks this year, the recent updates to U.S. export rules have brought the relevant challenges back into focus.

Specifically, the new rules prevent shipments of Nvidia’s A800 GPUs (a less powerful variant of the A100 tailored for the Chinese market to comply with previous U.S. export regulations) to China and require regulatory approval for technologies that fall below, but come close to, the new rules’ controlled threshold. Management expects the updated policies, which took effect after October 17, to be a 20% to 25% headwind to data center sales in the current period, though surging demand from other regions is expected to more than offset the resulting loss of market share in China and other affected markets.

Based on a simple back-of-the-napkin calculation that adds 20% to our F4Q24 data center revenue estimate to reflect the scenario in which Nvidia continues to partake in China-related GPU demand while keeping all other growth and valuation assumptions unchanged, the ensuing fundamental prospects would yield a base case price target of $508.
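The scenario above can be expressed in a few lines: scale the F4Q24 data center estimate up by 20% and compare the resulting price target with the base case. Only the 20% add-back and the $448 and $508 targets come from this article; the quarterly estimate below is a hypothetical placeholder, since our F4Q24 data center figure is not stated in the text, and the full price-target calculation still requires the DCF model itself.

```python
# Figures taken from the article
CHINA_ADD_BACK = 0.20          # share added back to the F4Q24 data center estimate
BASE_CASE_TARGET = 448.0       # USD per share
CHINA_SCENARIO_TARGET = 508.0  # USD per share


def with_china_add_back(dc_estimate: float, add_back: float = CHINA_ADD_BACK) -> float:
    """Scale a quarterly data center revenue estimate up by the add-back share."""
    return dc_estimate * (1 + add_back)


# Hypothetical F4Q24 data center estimate in billions of USD (placeholder only)
hypothetical_dc_estimate = 16.0
print(f"Scenario data center revenue: ${with_china_add_back(hypothetical_dc_estimate):.1f}B")
print(f"Target uplift vs base case: {CHINA_SCENARIO_TARGET / BASE_CASE_TARGET - 1:.1%}")
```

The roughly 13.4% gap between the two targets is the valuation impact the model attributes to the higher F4Q24 data center base, with all other growth and valuation assumptions held constant.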

This is in line with the stock’s performance prior to Nvidia’s latest earnings release, highlighting that the market had already priced in expectations for perfect execution. As such, we expect Nvidia’s eventual introduction of a regulation-compliant solution to be a key near-term driver of incremental upside in the stock. However, with “as many as 50 companies in China that are now working on technology that would compete with Nvidia’s offerings,” uncertainties remain over the timing and extent to which the U.S. chipmaker can recapture lost market share in China. This is also consistent with management’s expectation of immaterial contributions from the Chinese market to data center segment sales in the current period.

Final Thoughts

While AI-driven growth opportunities have ushered Nvidia into the $1+ trillion market cap club, the stock has largely stayed rangebound in recent months and has struggled to hold sustainably above the $500 level. Our analysis sees a compelling risk-reward setup at our base case price target of $448, which factors in ongoing macro-related multiple compression risks, uncertainties over the pace of cyclical recovery in consumer-facing verticals such as gaming and automotive, and regulatory headwinds facing Nvidia’s key Chinese market. However, market confidence in the impending introduction of a rule-compliant data center GPU solution for the Chinese market remains one of the key near-term drivers for propelling Nvidia stock back toward the $500 level heading into calendar 2024.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.

Thank you for reading my analysis. If you are interested in interacting with me directly in chat, more research content and tools designed for growth investing, and joining a community of like-minded investors, please take a moment to review my Marketplace service Livy Investment Research. Our service’s key offerings include:

- A subscription to our weekly tech and market news recap

- Full access to our portfolio of research coverage and complementary editing-enabled financial models

- A compilation of growth-focused industry primers and peer comps

Feel free to check it out risk-free through the two-week free trial. I hope to see you there!