Summary:

- Nvidia Corporation’s technological supremacy in accelerated computing, built on its long-standing experience, will be hard for competitors to challenge in the near future.

- There are several signs that the market for accelerated computing will experience continued rapid growth over the upcoming quarters, granting Nvidia the pole position for 2024.

- Looking at the valuation of shares from different perspectives shows that triple-digit gains for this year could be a realistic scenario once again, though, of course, nothing is assured.

- However, there are important Nvidia Corporation risk factors worth monitoring closely on top of increasing competitive forces.

Justin Sullivan

Introduction and investment thesis

Nvidia Corporation (NASDAQ:NVDA) (NEOE:NVDA:CA) was one of the hottest and most controversial stocks of 2023, and that shouldn’t change in 2024. The well-known opposing forces are Nvidia’s current supremacy in artificial intelligence, or AI, and the several threats to that status quo.

In the following deep-dive analysis, I weigh these factors against each other and try to provide many quantitative details and the latest news on the topic. In the end, I’ll provide different valuation scenarios to help investors determine what the current risk/reward profile of investing in Nvidia shares could look like over a 3-year horizon.

My conclusion is that it will be hard for competitors to challenge Nvidia meaningfully over the upcoming 1-2 years, leaving the company as the dominant supplier of the rapidly growing accelerated computing market. In my opinion, this isn’t properly reflected in the current valuation of the shares, which could result in further significant share price increases over 2024 as analysts revise their earnings estimates. However, there are some unique risk factors worth monitoring closely, such as the possibility of a Chinese military intervention in Taiwan or renewed U.S. restrictions on chip exports to China.

Material shift in competitive dynamics unlikely anytime soon

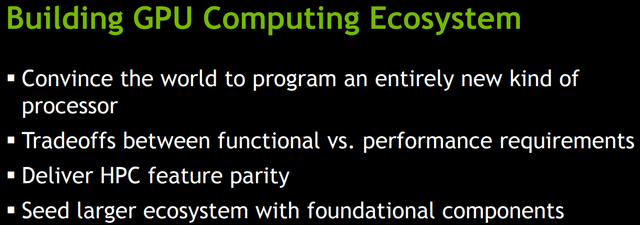

Nvidia has been positioning itself for the era of accelerated computing for more than a decade now. The company’s 2010 GTC (GPU Technology Conference) centered on the idea of using GPUs for general-purpose computing, with a special focus on supercomputers. Here is an interesting slide from the 2010 presentation of Ian Buck, Senior Director of GPU Computing Software back then, currently General Manager of Nvidia’s Hyperscale and HPC Computing Business:

Based on the first bullet point, Nvidia already recognized back then that, with the growing need for accelerated computing, the future of computing would center around GPUs and not CPUs. Four years later, the hottest topics at the 2014 GTC were big data analytics and machine learning, which CEO Jensen Huang also highlighted in the keynote speech.

Based on these visions, Nvidia began to build out its GPU portfolio for accelerated computing many years ago, securing a significant first-mover advantage. The company’s efforts manifested in the launch of the Ampere and Hopper GPU microarchitectures in recent years, with Ampere officially introduced in May 2020 and Hopper in March 2022. The world’s most powerful A100, H100 and H200 GPUs based on these architectures dominated the exploding data center GPU market in 2023, which has been fueled by emerging AI and ML initiatives. These GPUs secured a ~90% market share for Nvidia during the year. Riding the wave of success from its GPUs, Nvidia also managed to establish a multibillion-dollar networking business in 2023, which I’ll come back to later to keep the storyline intact.

Besides the state-of-the-art GPUs and networking solutions (hardware layer), which offer best-in-class performance for large language model training and inference, Nvidia has another key competitive advantage: CUDA (Compute Unified Device Architecture), the company’s proprietary programming model for utilizing its GPUs (software layer).

To take advantage of the parallel processing capabilities of Nvidia GPUs efficiently, developers need to access them through a GPU programming platform. Doing this through general, open models like OpenCL is a more time-consuming and developer-intensive process than simply using CUDA, which provides low-level hardware access while sparing developers the complex details through straightforward APIs. API stands for Application Programming Interface, a set of rules for how different software components can interact with each other. The use of well-defined APIs drastically simplifies the process of using Nvidia’s GPUs for accelerated computing tasks. Nvidia has invested heavily in creating CUDA libraries for specific tasks to further improve the developer experience.

CUDA was initially released in 2007, 16 years ago (!), so a lot of R&D spending has gone into creating a seamless experience for utilizing Nvidia GPUs since then. Currently, CUDA is at the heart of the AI software ecosystem, just as A100, H100 and H200 GPUs are at the heart of the hardware ecosystem. Most academic papers on AI used CUDA acceleration when experimenting with GPUs (which were Nvidia GPUs, of course), and most enterprises use CUDA when developing their AI-powered co-pilots. Even if competitors manage to come up with viable GPU alternatives, building up a software ecosystem similar to CUDA could take several years. If you’re interested in CUDA’s dominance and the possible threats to it in more detail, I suggest reading the following article from Medium: Nvidia’s CUDA Monopoly.

When making investment decisions about AI infrastructure, CFOs and CTOs must also factor in developer costs and the level of support for the given hardware and software infrastructure, and this is where Nvidia stands out from the crowd. Although Nvidia GPUs come with a hefty price tag, joining the company’s ecosystem has many cost advantages. These improve total cost of operation materially, which is a strong selling point in my opinion. For now, the world has settled on the Nvidia ecosystem, and I doubt that many corporations would take the risk of leaving a well-proven solution behind.

At this point it’s important to look at emerging competition. The most important independent competitor for Nvidia in the data center GPU market is Advanced Micro Devices, Inc. (AMD), whose MI300 product family began to ship in Q4 2023. The MI300X standalone accelerator and the MI300A accelerated processing unit will be the first real challengers to Nvidia’s AI monopoly.

The hardware stack comes with AMD’s open-source ROCm software (the CUDA equivalent), which was officially launched in 2016. In recent years, ROCm has managed to gain traction among some of the most popular deep learning frameworks like PyTorch or TensorFlow, which could remove the most important hurdle for AMD’s GPUs to gain significant traction in the market. In 2021, PyTorch announced native AMD GPU integration, enabling code written in CUDA to be ported to run on AMD hardware. This could have been an important milestone in breaking CUDA’s monopoly.

Although many interest groups are pushing it hard, several accounts suggest that AMD’s ROCm is still far from perfect, while CUDA has been refined over the past 15 years. I believe this will leave CUDA the first choice for developers for the time being, while many of ROCm’s bugs and deficiencies will only be solved in the upcoming years.

Besides ROCm, some hardware-agnostic alternatives for GPU programming are also evolving, such as Triton from OpenAI or oneAPI from Intel (INTC). As everyone realizes the business potential in AI, it’s surely only a matter of time until there are viable alternatives to CUDA, but we still have to wait for breakthroughs on this front.

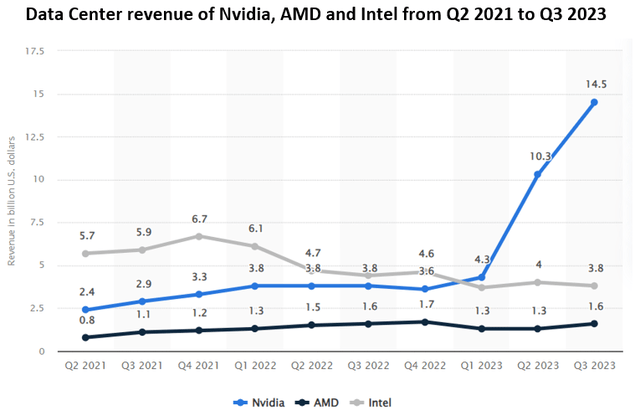

As companies struggle to procure enough GPUs for their AI workloads, I am sure that there will be strong demand for AMD’s solutions in 2024 as well. However, the $2 billion in data center GPU revenue that AMD CEO Lisa Su predicted for 2024 is a far cry from Nvidia’s most recent quarter, in which GPU-related revenue alone could have been more than $10 billion and is still increasing rapidly.

The second, and most important, competitive threat during 2024 should come from Nvidia’s largest customers, the hyperscalers, namely Amazon (AMZN), Microsoft (MSFT), and Alphabet/Google (GOOG) (GOOGL). All these companies have managed to develop their own specific AI chips for LLM training and inference. Microsoft introduced Maia in November and Google’s fifth-generation TPU came out in August, but these chips are currently used only in the development of in-house models. There is still a long way to go until they begin to power customers’ workloads, although Microsoft plans to offer Maia as an alternative for Azure customers as early as this year.

Amazon is different from this perspective, as the company’s AI chip line (Trainium and Inferentia) has been on the market for a few years now. The company recently announced an important strategic partnership with leading AI startup Anthropic, in which Anthropic committed to using Trainium and Inferentia chips for its future models. Although Amazon is a leading investor in the startup, this is strong evidence that the company’s AI chip line has reached a good level of reliability. The company recently came out with its new Trainium2 chip, which could capture some of the LLM training market this year, as more cost-sensitive AWS customers could use these chips as an alternative to Nvidia. However, it’s important to note that the previously discussed software side must keep up with hardware innovations as well, which could slow the widespread adoption of these chips.

An important sign that Amazon is far from satisfying increasing AI demand through its own chips alone is the company’s recently strengthened partnership with Nvidia. Jensen Huang joined Adam Selipsky, AWS CEO, on stage during his AWS re:Invent keynote speech, where the companies announced increasing collaboration efforts in several fields. In recent Nvidia earnings calls, we heard a lot about partnerships with Microsoft, Google or Oracle, but AWS has rarely been mentioned. These recent announcements on increased collaboration show that Amazon still has to rely heavily on Nvidia to remain competitive in the rapidly evolving AI space. I believe this is a strong sign that Nvidia should continue to dominate the AI hardware space in the upcoming years.

Finally, an interesting competitive threat for Nvidia is Huawei in the Chinese market, due to the restrictions the U.S. introduced on AI-related chip exports. Nvidia had to give up supplying the Chinese market with its most advanced AI chips, which consistently made up 20-25% of the company’s data center revenue. It is rumored that the company already had orders worth more than $5 billion for these chips for 2024, which are now in question. Nvidia acted quickly and plans to begin mass production of the H20, L20 and L2 chips, developed specifically for the Chinese market, as early as Q2 this year. Although the H20 is to some extent a reduced version of the H100, it partly uses technology from the recently introduced H200, which also has some benefits over the H100. As an example, according to SemiAnalysis, the H20 is 20% faster in LLM inference than the H100, so it’s still a very competitive chip.

The big question is how large Chinese customers like Alibaba (BABA), Baidu (BIDU), Tencent (OTCPK:TCEHY) or ByteDance (BDNCE), which have relied heavily on the Nvidia AI ecosystem until now, approach this situation. Currently, the most viable alternative to Nvidia’s AI chips in the Chinese market is the Huawei Ascend family, of which the Ascend 910 stands out, with performance that comes close to Nvidia’s H100. Baidu already ordered a larger amount of these chips last year as a first step to reduce its reliance on Nvidia, and other big Chinese tech names should follow, too. However, since 2020 Huawei hasn’t been able to rely on TSMC to produce its chips due to U.S. restrictions, so it’s mainly left with China’s SMIC to produce them. There is still conflicting news on how well SMIC could handle mass production of state-of-the-art AI chips, but several sources (1, 2, 3) suggest that China’s chip manufacturing industry is several years behind.

Also, a significant risk for SMIC and its customers is that the U.S. could further tighten sanctions on equipment used in chip manufacturing, thereby limiting the company’s ability to keep supplying Huawei’s most advanced AI chips. This could leave the tech giants with Nvidia’s H20 chips as the best option. Furthermore, developers in China have already gotten used to CUDA in recent years, which also favors using Nvidia’s chips over the short run.

However, there is also an important risk factor for Chinese tech giants in this case: the U.S. could tighten Nvidia’s export restrictions further, which would leave them vulnerable in the AI race. According to WSJ sources, Chinese companies are not that excited about Nvidia’s downgraded chips, which shows they could perceive using Nvidia’s chips as the greater risk. Over the medium term (3-4 years), I believe China will gradually catch up in producing advanced AI chips and Chinese companies will gradually decrease their reliance on Nvidia. Until then, I think the Chinese market could still be a multibillion-dollar business for Nvidia, although one surrounded by significantly higher risks.

In conclusion, several efforts are underway to get close to Nvidia in the hardware and software infrastructure for accelerated computing. Some of these have been going on for the past few years (CUDA alternatives, Amazon’s AI chips), while others will be tested by the market only in 2024 (AMD MI300 chip family, Microsoft Maia chip). Currently, there are no real signs that any of these solutions could dethrone Nvidia from its position as the leading AI infrastructure supplier over the upcoming years; rather, they’ll be complementary solutions in a rapidly expanding market from which everyone wants to take their share.

Networking solutions: Where Nvidia is the challenger

There is another important piece of the data center accelerator market where the competitive situation is exactly the opposite of what was discussed until now, namely data center networking solutions. In this case, Nvidia is the challenger to the current equilibrium, and it has already showcased how one can quickly disrupt a market.

The original universal protocol for wired computer networking is Ethernet, which was designed to offer a simple, flexible, and scalable interconnect in local area networks or wide area networks. With the emergence of high-performance computing and large-scale data centers, Ethernet networking solutions faced a new expanding market opportunity and quickly established a high penetration due to their general acceptance.

However, a new standard, InfiniBand, was established around the turn of the millennium. It was specifically designed to connect servers, storage, and networking devices in high-performance computing environments, focusing on low latency, high performance, low power consumption, and reliability. In 2005, 10 of the world’s Top 100 supercomputers used InfiniBand networking technology, a figure that rose to 48 by 2010 and currently stands at 61. This shows that the standard gained wide acceptance in high-performance computing environments, where AI technologies reside.

The main supplier of InfiniBand-based networking equipment had been Mellanox, founded by former Intel executives in 1999. In 2019, there was a real bidding war between Nvidia, Intel, and Xilinx (since acquired by AMD) to acquire the company, in which Nvidia made the most generous offer at $6.9 billion. With this perfectly timed acquisition, it brought the InfiniBand networking technology in house, which turned out to be a huge success thanks to the rapid emergence of AI in 2023.

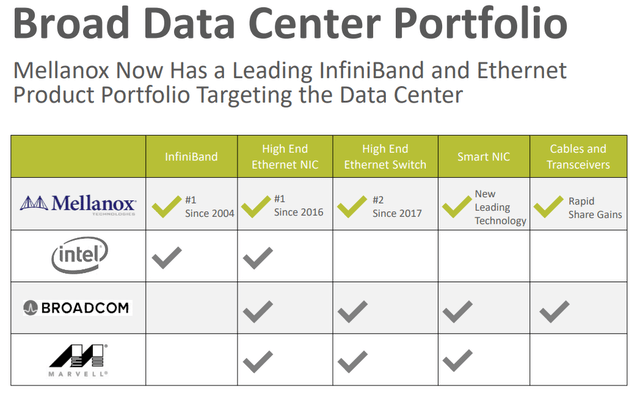

Besides the InfiniBand know-how of Mellanox, Nvidia gained much more with the acquisition. This can be summarized by the following slide from a Mellanox investor presentation from April 2020, the last one as a standalone company before Nvidia completed the acquisition:

Mellanox Investor presentation, April 2020

On top of the InfiniBand standard, Mellanox also excelled in producing high-end Ethernet gear, with a leading position in adapters and, more importantly, in Ethernet switches and smart network interface cards (NICs). Based on these technologies, Nvidia has also been able to offer competitive networking solutions for those who want to stick to the Ethernet standard. The recently introduced Ethernet-based Spectrum-X platform is a good example of this, providing 1.6x faster networking performance according to the company. Dell (DELL), Hewlett Packard Enterprise Company (HPE), and Lenovo Group (OTCPK:LNVGY) have already announced that they will integrate Spectrum-X into their servers, helping those customers who want to speed up AI workloads.

Besides the InfiniBand and Spectrum-X technologies, which typically connect entire GPU servers consisting of 8 Nvidia GPUs, Nvidia developed the NVLink direct GPU-to-GPU interconnect, which forms the other critical part of its data center networking solutions. This technology also has several advantages over the standard PCIe bus protocol used to connect GPUs with each other. Among others, these include direct memory access, which eliminates the need for CPU involvement, and unified memory, which allows GPUs to share a common memory pool.

If we look at current growth rates of Nvidia’s networking business and the expected growth rate for this market over the upcoming years, I believe that the acquisition of Mellanox could be one of the most fruitful investments in the technology sector’s history. The size of Nvidia’s networking business has already surpassed the $10 billion run rate in its most recent Q3 FY2024 quarter, almost tripling from a year ago.

While the collective data center networking market is expected to register a CAGR of ~11–13% over the upcoming years, several sources (1, 2) suggest that within this market InfiniBand is expected to grow at a CAGR of ~40% from its current few billion-dollar size. Besides GPUs this should provide another rapidly increasing revenue stream for Nvidia, which I think is underappreciated by the market.

Combining Nvidia’s state-of-the-art GPUs with its advanced networking solutions in the HGX supercomputing platform has been an excellent sales motion (not to mention the Grace CPU product line), essentially creating the reference architecture for AI workloads. How rapidly this market could evolve in the upcoming years is what I would like to discuss in the upcoming paragraphs.

The pie that outgrows hungry mouths

There are several indications that the steep increase in demand for accelerated computing solutions fueled by AI will continue in the upcoming years. As discussed in the previous sections, Nvidia’s data center product portfolio has been targeted precisely at this market, and the company is already reaping the benefits. I strongly believe that this could be just the beginning.

At the beginning of December last year, AMD held an AI event, where it discussed its upcoming product line. At the beginning, Lisa Su shared the following:

Now a year ago, when we were thinking about AI, we were super excited. And we estimated the data center, AI accelerated market would grow approximately 50% annually over the next few years, from something like 30 billion in 2023 to more than 150 billion in 2027. And that felt like a big number. (…) We’re now expecting that the data center accelerator TAM will grow more than 70% annually over the next 4 years to over 400 billion in 2027.

The CEO hadn’t shared the updated numbers on the company’s earnings call at the end of October, so they can be regarded as one of the latest predictions of how the market could evolve in the upcoming years. To reach a total addressable market, or TAM, of more than $400 billion for 2027 with 70% YOY growth, AMD estimates the current market size for 2023 at around $50 billion, which is a significant increase from the previous $30 billion estimate. However, when we look at Nvidia’s latest quarter and its Q4 FY2024 guidance, its data center revenue could approach $50 billion for 2023, so the higher estimate is absolutely justified. I believe this 70% YOY growth over the upcoming 4 years could be a once-in-a-lifetime opportunity for investors, and current valuations in the sector are far from reflecting it in my opinion (to be discussed later).
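The compounding behind these TAM figures can be checked with a short Python sketch. The function name `project_market` is my own, and the inputs are the rounded numbers quoted above (roughly $30 billion growing about 50% a year in the earlier view, roughly $50 billion growing about 70% a year in the updated one), so the output is an approximation and not AMD's actual model.

```python
def project_market(base_bn: float, annual_growth: float, years: int) -> list[float]:
    """Return the market size in $bn for the base year and each following year."""
    return [base_bn * (1 + annual_growth) ** n for n in range(years + 1)]


# Updated view: ~$50bn in 2023, growing ~70% a year through 2027.
updated = project_market(50.0, 0.70, 4)
# Earlier view: ~$30bn in 2023, growing ~50% a year through 2027.
earlier = project_market(30.0, 0.50, 4)

for year, new, old in zip(range(2023, 2028), updated, earlier):
    print(f"{year}: updated ~${new:,.0f}bn, earlier ~${old:,.0f}bn")
```

Four years of 70% growth from $50 billion lands at roughly $418 billion, and four years of 50% growth from $30 billion at roughly $152 billion, which is consistent with the "over 400 billion" and "more than 150 billion" figures in the quote.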

As Nvidia’s product portfolio covers most of the data center accelerator market, which should dominate its product portfolio in the upcoming years, this could be a good starting point for estimating the company’s growth prospects over the medium term. Current analyst estimates call for a 54% YOY increase in revenues for 2025, but only 20% for 2026 and 11% for 2027. I believe these are overly conservative estimates in light of the facts discussed until now, so there could be significant room for upward revisions on the top and bottom line, which usually results in an increasing share price. It’s no wonder that Seeking Alpha’s Quant Rating system also incorporates EPS revisions into its valuation framework.

Another convincing sign that spending on accelerated computing in data centers is set to continue to soar is the set of comments that hyperscalers made on their most recent earnings calls. As this segment provides roughly 50% of Nvidia’s data center revenue, it’s worth following them closely. Here are a few quotes from Microsoft, Alphabet, and Amazon executives, whose companies together sit on a $328 billion cash balance and seem to have investments in AI as their top priority when it comes to Capex:

“We expect capital expenditures to increase sequentially on a dollar basis, driven by investments in our cloud and AI infrastructure.” – Amy Hood, Microsoft EVP & CFO, Microsoft Q1 FY2024 earnings call.

“We expect fulfillment and transportation CapEx to be down year-over-year, partially offset by increased infrastructure CapEx to support growth of our AWS business, including additional investments related to generative AI and large language model efforts.” – Brian Olsavsky, Amazon SVP & CFO, Amazon Q3 2023 earnings call.

“We continue to invest meaningfully in the technical infrastructure needed to support the opportunities we see in AI across Alphabet and expect elevated levels of investment, increasing in the fourth quarter of 2023 and continuing to grow in 2024.” – Ruth Porat, Alphabet CFO, Alphabet Q3 2023 earnings call.

The frequent mention of AI when it comes to Capex signals that investments in accelerated computing could carve out a significant part of 2024 IT budgets. This is especially important because, when 2023 Capex budgets were planned, no one imagined that investments in AI could increase so dramatically over the course of one year. So, 2023’s AI spending rush could have been financed by rechanneling resources allocated for other purposes. However, IT and other departments could start 2024 with a clean sheet, which provides significantly more room for this kind of investment. This also shows that it is not a coincidence that AMD CEO Lisa Su recently raised the company’s growth forecast for the accelerated computing infrastructure market.

It’s important to note at this point that market growth rates by independent research companies seem to show somewhat less rapid growth than projected by AMD, although these become quickly outdated as the market changes so quickly. There are many publicly available forecasts for annualized data center GPU market growth over the upcoming 5-8 years, which typically calculate with a CAGR of 28-35% (1, 2, 3). If we factor in that growth rates should be higher in the upcoming years as the market grows from a lower base, these predictions could be rather closer to AMD’s initial 50% CAGR forecast for the upcoming 4 years. Still, even in the case of this scenario there is ample room to grow for Nvidia, and probably for its best competitors as well.

Finally, another hint at the durability of demand is that GPUs can be used for more than AI and machine learning workloads in data centers and supercomputers, even if these made up most of the market’s explosive growth in 2023. There are a lot of other computational tasks that are currently performed by CPUs but could be handled more cost-effectively by using well-programmed GPUs for acceleration. Although the initial cost of GPUs is significantly higher, the energy and data center space they save by processing several tasks in parallel often result in a lower total cost of operation in the end (see a detailed real-life example from Taboola on this topic). In the long run, this could lead to the dominance of GPUs in the data center environment even outside the scope of AI and ML. The beneficiary is, of course, Nvidia, which already demonstrated its leading position in accelerated computing in 2023, leaving competitors standing at the start line:

Rapid change in margin profile

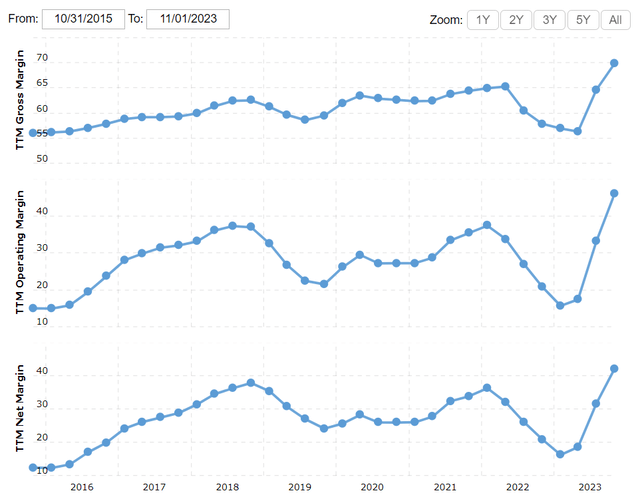

After discussing top line growth prospects, let’s turn to the bottom line before going on with valuing the shares. The significantly increasing share of best-in-class GPUs and networking solutions in Nvidia’s product portfolio has led to quickly rebounding margins after a lackluster 2022:

On a TTM basis, Nvidia’s gross margin increased to 70%, but it already reached 74% in the most recent Q3 FY2024 quarter. For Q4 FY2024, the company expects a further 50 bps increase, which could be a conservative assumption when looking at the guidance and the results of previous quarters. Net margin increased above 40% on a TTM basis and reached a whopping 51% in the most recent quarter.

In the short run, I believe there could be further upside for margins during 2024 as Nvidia continues to dominate the data center accelerator market and exercises its pricing power. However, as competition gets tougher in the following years, margins should gradually soften. Nonetheless, in the longer run, margins should stabilize above previous highs in my opinion, as the current technological shift is strongly favoring the company. This would mean 65%+ gross margin and 40%+ net margin for the longer run, which is still remarkable, especially in the hardware business.

Valuation reveals attractive risk/reward

Based on the information presented until now, I have created 3 different valuation scenarios, in which I value Nvidia’s shares based on their Price-to-Earnings ratio. Each of these scenarios begins with an estimate for the total data center accelerator market for the upcoming 3 years. For 2023, I have used the assumption of AMD’s Lisa Su, which is ~$50 billion. This aligns with the fact that Nvidia’s data center revenue could be somewhere around $45 billion for 2023, taking into account its most recent $20 billion total revenue estimate for the Q4 FY2024 quarter. Nvidia’s product portfolio spans almost the entire data center accelerator market (mostly GPUs and networking equipment), so dividing this revenue by the widely rumored 90% market share brings us to the aforementioned total market size of $50 billion.
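The market-size cross-check in this paragraph is a single division. A minimal sketch, where the function name `implied_market_size` is hypothetical and the inputs are the approximate figures from the text (about $45 billion of data center revenue at a rumored 90% share):

```python
def implied_market_size(vendor_revenue_bn: float, market_share: float) -> float:
    """Back out the total market size from one vendor's revenue and its share."""
    if not 0 < market_share <= 1:
        raise ValueError("market_share must be a fraction between 0 and 1")
    return vendor_revenue_bn / market_share


# ~$45bn estimated 2023 data center revenue at a ~90% market share.
market_2023_bn = implied_market_size(45.0, 0.90)
print(f"Implied 2023 data center accelerator market: ~${market_2023_bn:.0f}bn")
```

The result of about $50 billion matches the 2023 starting point used in all three scenarios. Both inputs are estimates, so the check shows consistency between them, not precision.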

In the 3 scenarios, I assumed different growth rates for the data center accelerator market, and different scenarios for how Nvidia’s market share could develop over time. As Nvidia’s Gaming, Professional Visualization and Auto segment revenues will have a significantly lower impact on the bottom line in the upcoming years, I used a 10% annual growth rate for these in all 3 scenarios for simplicity.

Afterwards, I applied my net margin estimate for each of the given years also based on 3 different scenarios, which, multiplied by the Total revenue estimate, results in the net income estimate for the given year. The next step is calculating EPS by dividing net income by the number of outstanding shares.

Nvidia had 2,466 million shares outstanding at the end of its Q3 FY2024 quarter, which has been pretty constant over previous quarters as share repurchases compensated for dilution resulting from stock-based compensation, or SBC. Based on this, I have assumed no change in share count during the forecast period.
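The steps described above (market size, market share, other segments, net margin, share count) chain together as in the sketch below. The function and parameter names are my own, and the example inputs are round, purely illustrative numbers, not the figures from the scenario tables; only the share count of 2,466 million comes from the text.

```python
SHARES_OUTSTANDING_MN = 2466  # from the text; assumed constant over the forecast


def estimate_eps(
    dc_market_bn: float,       # total data center accelerator market, $bn
    nvidia_share: float,       # Nvidia's share of that market, as a fraction
    other_revenue_bn: float,   # Gaming, ProViz and Auto revenue combined, $bn
    net_margin: float,         # net income as a fraction of total revenue
    shares_mn: float = SHARES_OUTSTANDING_MN,
) -> float:
    """Return estimated earnings per share in USD."""
    dc_revenue_bn = dc_market_bn * nvidia_share
    total_revenue_bn = dc_revenue_bn + other_revenue_bn
    net_income_bn = total_revenue_bn * net_margin
    # Convert $bn to $mn so that the units match the share count in millions.
    return net_income_bn * 1000 / shares_mn


# Illustrative inputs only: $100bn market, 80% share, $15bn other, 50% margin.
example_eps = estimate_eps(100.0, 0.80, 15.0, 0.50)
print(f"Illustrative EPS: ${example_eps:.2f}")
```

With these placeholder inputs, total revenue is $95 billion, net income is $47.5 billion, and EPS comes out at about $19.26. Changing any single assumption shows how sensitive the result is to it.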

Finally, to arrive at a share price estimate, an appropriate multiple must be determined. I have used the TTM P/E ratio for this, which compares the current share price to the company’s EPS over the past year. Nvidia’s TTM P/E ratio currently stands at 72, but after including Q4 FY2024 EPS results it should drop meaningfully further:

Looking at the past 3 years, the minimum TTM P/E ratio has been 36.6, but it has been above 50 most of the time. Based on this, I believe Nvidia shares shouldn’t trade meaningfully lower than this level in the upcoming years, as the fundamental landscape changed quite favorably for Nvidia during 2023.

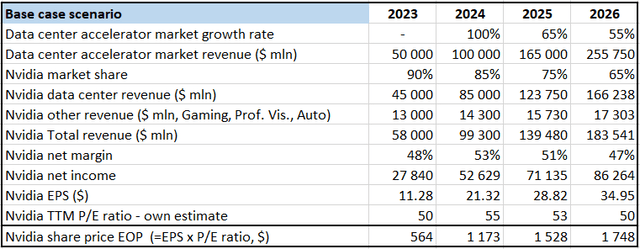

Based on this information, my Base case valuation scenario looks as follows:

Created by author based on own estimates

For the data center accelerator market, I have assumed that market size doubles from 2023 to 2024. With a slightly decreasing market share, this would result in $85 billion data center revenue for Nvidia, which would mean $21.25 billion/quarter on average in 2024. Based on the company’s Q4 FY2024 guidance, data center revenue could reach $17-18 billion in the current quarter, so an average of ~$21 billion per quarter in 2024 seems realistic.

In this scenario, I assumed that net margin peaks at 53% in 2024, a slight increase from the 51% in the recently closed Q3 FY2024 quarter. Calculating with a P/E ratio of 55, this would result in a $1,173 share price at the end of 2024. This would mean a ~100% increase in the share price from current levels, which is my base case scenario for the year. Assuming a gradual slowdown in total market growth, and decreasing market share and net margin as competition intensifies, I arrived at an EPS of $35 for 2026, which results in a share price of $1,748 when calculating with a P/E ratio of 50.

The main takeaway from my Base Case scenario is that there could be huge upside for the shares in the upcoming two years, for which, of course, investors have to accept a larger degree of uncertainty.
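As a rough consistency check, the Base case figures quoted above can be worked backwards in Python. Every input below is a number stated in the text; the variable names are my own, and the "implied" values are derived by arithmetic, so they are an inference about the scenario table and not figures taken from it.

```python
SHARES_MN = 2466.0              # shares outstanding in millions (from the text)

# Data center side: the market doubles from ~$50bn, Nvidia books $85bn.
market_2024_bn = 50.0 * 2
dc_revenue_2024_bn = 85.0
implied_share = dc_revenue_2024_bn / market_2024_bn
avg_quarterly_dc_bn = dc_revenue_2024_bn / 4

# Valuation side: $1,173 target at a P/E of 55 with a 53% net margin.
price_2024 = 1173.0
pe_2024 = 55.0
net_margin_2024 = 0.53
implied_eps = price_2024 / pe_2024
implied_net_income_bn = implied_eps * SHARES_MN / 1000
implied_total_revenue_bn = implied_net_income_bn / net_margin_2024
implied_other_revenue_bn = implied_total_revenue_bn - dc_revenue_2024_bn

# 2026: EPS of ~$35 at a P/E of 50.
price_2026 = 35.0 * 50.0

print(f"Implied 2024 market share: {implied_share:.0%}")
print(f"Average quarterly data center revenue: ${avg_quarterly_dc_bn:.2f}bn")
print(f"Implied 2024 EPS: ${implied_eps:.2f}")
print(f"Implied 2024 total revenue: ${implied_total_revenue_bn:.1f}bn")
print(f"Implied non-data-center revenue: ${implied_other_revenue_bn:.1f}bn")
print(f"2026 price from rounded EPS: ${price_2026:,.0f}")
```

The numbers hang together: an 85% share, an implied 2024 EPS of about $21.3, total revenue of about $99 billion, and roughly $14 billion left for the other segments. The 2026 price computes to $1,750 from the rounded $35 EPS, so the quoted $1,748 implies an unrounded EPS just under $35.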

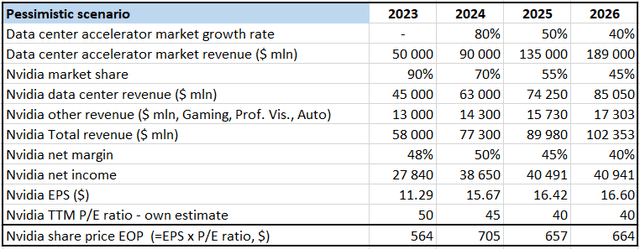

Let’s continue with my pessimistic scenario:

Created by author based on own estimates

In this case, I assumed more conservative growth dynamics for the market (significantly below Lisa Su’s 70% CAGR until 2027), partly resulting from decreasing demand from China. Besides, I assumed more rapid adoption of competing products from AMD, Amazon, or Intel, resulting in a 45% market share for Nvidia for 2026. Increasing competition has a dampening effect on margins as well, which go back to pre-AI highs of 40% in this scenario.

Calculating with a P/E ratio of 45 for 2024, this results in a share price of $705 for the end of the year, which is still a 30% increase from current levels. However, further shrinking margins and a more conservative multiple of 40 result in a slightly decreasing share price for 2025, and stagnation for 2026. The pessimistic scenario shows a case in which most of the AI-related changes in the accelerated computing market are already priced into Nvidia’s shares, and the upside from here is limited.

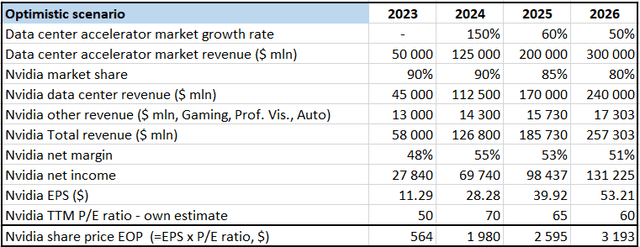

Finally, let’s look at the optimistic scenario:

Created by author based on own estimates

In this case, I assumed that the data center accelerator market continues its exponential growth from its supply-constrained lower base in 2023. I assumed that Nvidia manages to hold onto its market share from 2023, which means that the company’s new product line introduced for China will resonate well with large tech companies. Like in previous scenarios, margins peak in 2024, but at higher levels and come down more gradually. Due to stronger than expected demand in 2024, shares will likely be priced more aggressively, leading to a P/E ratio of 70 for the end of the year.

This would result in a share price close to $2,000 for the end of this year, which is still a possible scenario in my opinion. Share price gains would continue in 2025 and 2026 as well, although at a more moderate pace. I believe this scenario demonstrates that if Nvidia’s growth momentum continues uninterrupted, there could still be significant upside from current levels, similar to 2023.

Taking it all together, I believe Nvidia shares offer an appealing risk/reward at current levels, making them an attractive investment for 2024 as well. If I were to assign probabilities to the scenarios, I would give 60% to the Base Case scenario, 20-25% to the Optimistic, and 15-20% to the Pessimistic one. However, it’s important to add that there could be several other scenarios, better or worse than those presented above.
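To illustrate how these probabilities combine with the end-of-2024 price targets, here is a minimal sketch of a probability-weighted price. It rests on assumptions the article does not make: the midpoints of the stated ranges (22.5% and 17.5%) are used so the weights sum to 100%, and the optimistic target is rounded to $2,000 since the text only says "close to $2,000". The resulting figure is an illustration of the arithmetic, not a price target from the author.

```python
# (probability, end-of-2024 price target) per scenario.
# Midpoint probabilities and the $2,000 figure are assumptions for illustration.
scenarios = {
    "base": (0.60, 1173.0),
    "optimistic": (0.225, 2000.0),   # midpoint of 20-25%
    "pessimistic": (0.175, 705.0),   # midpoint of 15-20%
}


def expected_price(cases: dict[str, tuple[float, float]]) -> float:
    """Probability-weighted average of the scenario price targets."""
    total_prob = sum(prob for prob, _ in cases.values())
    if abs(total_prob - 1.0) > 1e-9:
        raise ValueError(f"probabilities sum to {total_prob}, expected 1.0")
    return sum(prob * price for prob, price in cases.values())


weighted = expected_price(scenarios)
print(f"Probability-weighted end-of-2024 price: ${weighted:,.0f}")
```

Shifting the weights within the stated ranges (for example 25% optimistic and 15% pessimistic) moves the result, which is why the scenarios are better read individually than collapsed into a single number.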

As there are many moving pieces in the market for accelerated computing, investors should monitor company-specific news and quarterly results regularly. It’s not a typical buy-and-hold-and-lean-back position.

Risk factors

At the beginning of the article, I discussed competitive forces in the accelerated computing market. These are probably the most important risk factors for Nvidia. However, there are many more.

Let’s begin with the one which could have the most devastating effects on Nvidia’s fundamentals, which is a possible Chinese military offensive against Taiwan. In manufacturing its chips, Nvidia relies heavily on Taiwan Semiconductor (TSM) aka TSMC, which has the most advanced semiconductor manufacturing technology but has many of its plants located in Taiwan. A possible offensive from China could cause significant disruption in Nvidia’s supply chain, leading to a sharp fall in the share price.

TSMC is trying to diversify its operations, which has shown mixed results lately. In the U.S., building a new plant is taking longer than expected, but there are encouraging signs that Japan could emerge as an important manufacturing hub for the most advanced 3-nanometer technology in the upcoming years as well. However, this will take a significant amount of time, which leaves Nvidia fully exposed to this risk over 2024.

China’s communication on “reunification” had been stricter than usual going into the Taiwanese elections, although this wasn’t enough to prevent William Lai Ching-te of the pro-sovereignty DPP from winning the presidential election on the 13th of January. However, the DPP lost control of the 113-seat parliament as the China-friendly Kuomintang party gained traction, although the Kuomintang didn’t secure enough seats for a majority, either.

China is probably dissatisfied with the results overall, but the fact that the DPP couldn’t win the parliament could calm the pre-election tensions to some extent. This is supported by the reaction of China’s Taiwan Affairs Office, which reaffirmed China’s consistent goal of “reunification,” but also added that they want to “advance the peaceful development of cross-strait relations as well as the cause of national reunification,” thereby striking a somewhat softer tone.

Another important risk factor for Nvidia regarding China is the continued pressure from the U.S. government to restrict semiconductor companies from exporting their most advanced technologies to the country. In its latest move, the U.S. banned Nvidia from exporting its most advanced GPUs to China, but the company already came up with a new solution. However, it can’t be ruled out that Washington decides to tighten export curbs further, leaving Nvidia’s Chinese business exposed to this risk.

As mentioned previously, 20-25% of Nvidia’s data center revenues came from China in recent quarters (a share which will decline significantly in the upcoming ones), so longer-term growth prospects could be heavily influenced by this. The latest development on this matter was that the U.S. House of Representatives China Committee asked CEOs from Nvidia, Intel, and Micron (MU) to testify before Congress on their Chinese business, which will be worth monitoring closely.

Conclusion

Nvidia had a great 2023 after the demand for its accelerated computing hardware began to increase exponentially thanks to the growing adoption of AI- and ML-based technologies. This demand seems likely to last through 2024 and beyond, and Nvidia should continue to be the best one-stop-shop for these technologies. This should lead to materially increasing earnings estimates throughout the year, which could fuel further share price gains.

Although there are several risk factors, like increasing competition and uncertainty around China, which have prevented the share price from increasing to an even greater extent, valuation suggests that current levels still provide an attractive entry point with a favorable risk/reward profile.

Dear Reader, I hope you had a good time reading. Feel free to share your thoughts on these topics in the comment section below.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
