Summary:

- NVIDIA recently became, briefly, the world’s most valuable company, with a market cap of $3.34 trillion.

- The upcoming release of the AI Blackwell chip family should help boost revenue growth.

- It faces competition from ASICs in the AI chip market, but is countering with strategies like Tensor Core GPUs, Deep Learning Accelerators, and entering the customized chip market.

- Investors should monitor the company’s gross margins in future earnings reports for signs of deterioration.

BING-JHEN HONG

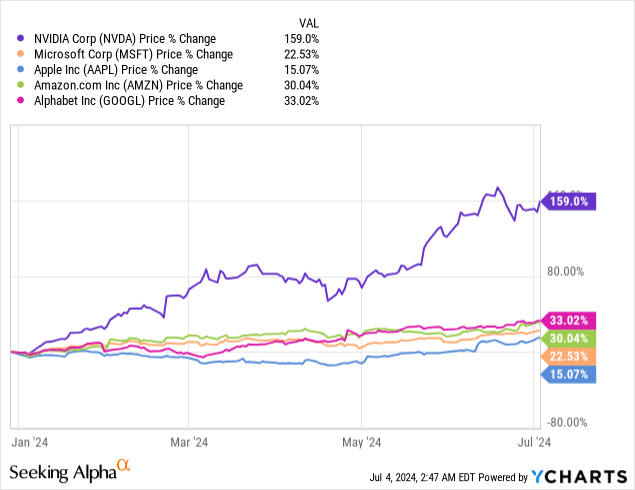

Since I last wrote about NVIDIA (NVDA) (NEOE:NVDA:CA) with a downgrade to hold, it briefly became the highest-valued company globally, reaching a market cap of $3.34 trillion. Of the five largest global companies, it has risen the most this year, far outdistancing its peers.

It may still have more gas in the tank. Morgan Stanley (MS) analyst Joseph Moore increased his price target on the stock to $144 from $116 on July 1. A Seeking Alpha article stated, “Recent checks from Taiwan and China were more than enough to keep the firm confident on near-term numbers and the catalyst path remains ‘strong,’ analyst Joseph Moore wrote, citing the ‘very strong surge’ in H20 builds.” The “H20 builds” in that quote refer to production of the H20, an AI chip based on a graphics processing unit (“GPU”) that NVIDIA is still able to supply to China for AI training.

Investors should also remember that the company will release the new AI Blackwell chip family shortly. Seeking Alpha published an article in March 2024 quoting the same analyst Joseph Moore discussing Blackwell’s impact and the company’s positioning:

“It will take time to evaluate the performance claims for Blackwell, but if they hold up even directionally, our sense is that the company’s ability to raise the bar this much leaves them in a very strong position,” Moore added. “While most of the larger cloud customers remain committed to alternative AI solutions – custom or merchant – they all have limited rack space given ecosystem constraints (with multiple hyperscalers waiting to power up new facilities). Limited rack space is going to lead to cloud vendors choosing the highest ROI [return on investment] solution, which we continue to think is represented by NVIDIA.”

Since my last article on the company, the stock has risen 38.43% compared to the S&P 500’s (SPX) rise of 7.36%. Some NVIDIA bulls may wonder why I downgraded the stock to hold when it looks like it has more upside. The biggest reason is that investors who buy the stock today are making massive assumptions about revenue, earnings, and free cash flow (“FCF”) growth beyond fiscal year (“FY”) 2026, where the risk that the company fails to hit the mark is higher. Unless one has keen insights into the semiconductor market and its dynamics, it’s wise to proceed cautiously.

This article will discuss NVIDIA’s primary competitive threat, its plans to counter it, and future company expectations. It will also review the stock’s valuation and explain why I am reiterating my Hold recommendation.

ASICs are a threat

NVIDIA’s rise in relevance in artificial intelligence (“AI”) came from its GPU being the ideal chip for training AI algorithms. Shortly after OpenAI and Microsoft (MSFT) introduced ChatGPT to the world, companies from large to small became interested in training large language models to create their own homegrown chatbots. A UBS Group (UBS) report states:

While most platform and semiconductor companies don’t provide a breakdown of AI chips’ end-usage, we believe inferencing accounted for less than 10% of AI computing chip demand in 2023, versus 90% for training. With the increased usage of generative AI and the rising number of queries per user, we expect inferencing’s share to increase to over 20% by 2025.

The high demand for training chips overwhelmingly benefits NVIDIA since GPUs are the preferred chip type for training AI algorithms, and NVIDIA is the dominant GPU provider. Also, the early days of generative AI have seen most training take place on large cloud providers like Alphabet’s (GOOGL)(GOOG) Google Cloud Platform, Microsoft’s (MSFT) Azure, and Amazon’s (AMZN) AWS, which are among the few companies that can afford to assemble AI-chip clusters large enough to train AI algorithms rapidly and effectively. Most companies train their AI using the resources of at least one of the large cloud providers.

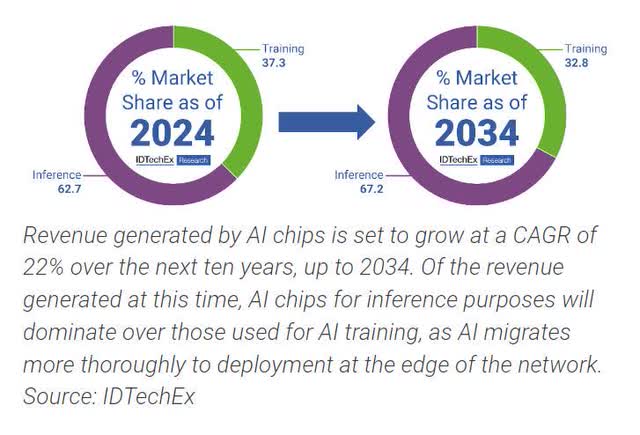

However, there is an opposing point of view. Some already think more companies buy chips for inferencing than training. Inferencing is when a trained AI model generates insights on newly acquired data. The following pie chart comes from the market intelligence company IDTechEx.

Some NVIDIA detractors believe that the more the AI market shifts toward inferencing chips, the more competitive the market will become for the company. The problem with using GPUs for inferencing is that they consume too much power and generate too much heat for various applications. Power efficiency becomes necessary as generative AI moves to more edge devices such as self-driving cars, AI computers, mobile phones, and Internet of Things (“IoT”) devices. Power consumption has also become a problem for cloud providers that are providing AI resources. The website Carbon Credits published an article that said, “Data centers could consume between 4.6% and 9.1% of US electricity by 2030, according to an analysis by the Electric Power Research Institute (EPRI).” That’s a severe drain on the electrical grid.

Application-specific integrated circuit (“ASIC”) technology may solve power consumption problems in inference chips. ASIC technology has been around since at least 1967. Google pioneered customizing ASICs specifically for machine learning use. The company developed an ASIC called the Tensor Processing Unit (“TPU”) for internal use in 2015 and made it available to third parties in 2018. The TPU is especially useful for convolutional neural networks (“CNNs”), a valuable deep-learning algorithm for image recognition. Generally, ASICs have lower power consumption and may also have better performance characteristics for a specific task than GPUs. These days, Google is not the only cloud provider with an ASIC; Amazon has developed an inference ASIC specifically for generative AI and deep learning named AWS Inferentia. Microsoft has its Maia 100 AI chip for AI and generative AI.

The big cloud providers are not the only ones developing customized chips. Large traditional chipmakers such as Intel Corporation (INTC), Advanced Micro Devices (AMD), Broadcom (AVGO), Marvell Technology (MRVL), and QUALCOMM (QCOM) have developed or are developing customized chips for customers. Additionally, several smaller companies have arisen over the last decade to compete in the customized AI chip arena. These companies include Untether AI, Habana, and Groq.

No single chip manufacturer threatens NVIDIA on its own; the long-term threat is a collective army of chip manufacturers, together with some of NVIDIA’s own customers bringing chip design in-house. Austin Lyons, the author of Chipstrat, states the risk clearly and succinctly (emphasis added):

Nvidia derives a considerable portion of their revenue from a small number of clients purchasing AI GPUs, which are inferior to AI ASICs. Worse, their best customers have the strongest motivation to transition to these ASICs. Additionally, the emergence of custom AI chips promises to erode Nvidia’s AI GPU margins.

NVIDIA Chief Executive Officer (“CEO”) Jensen Huang is aware of this risk and has already taken action.

How NVIDIA competes against ASICs

The GPU provider tries to outdo the competition in the inference market in several ways, including using GPUs with Tensor Cores, developing specialized SoCs (System-on-Chips) for edge computing applications, and competing directly with companies like Broadcom and Marvell in the customized AI chip market.

1. NVIDIA Tensor Core

Tensor Cores are specialized AI accelerators integrated into a GPU. They make GPUs more useful for modern-day AI applications such as deep learning by improving performance and power efficiency compared to a GPU without Tensor Cores. NVIDIA designed its original GPUs to perform graphical computations to generate images, videos, and animations on a computer monitor. While GPUs without Tensor Cores are well suited for generating images on a computer screen and are still helpful for some AI training applications, Tensor Cores help GPUs compete with the performance of customized ASIC chips while remaining more flexible for tasks outside AI. NVIDIA Tensor Cores are similar to Google’s TPUs, with the main difference being that TPUs operate as standalone ASIC chips while Tensor Cores are integrated into GPU architectures.

The need for GPUs likely won’t completely disappear, and the company’s efforts in developing power-efficient GPUs designed for inference tasks should help it compete with ASIC providers. Some examples of Tensor Core chips are the H100 Tensor Core GPU, the H200 Tensor Core GPU, and the Blackwell GPU architecture, which NVIDIA should release in either late 2024 or early 2025. An NVIDIA press release states, “The GB200 NVL72 [Blackwell chips] provides up to a 30x performance increase compared to the same number of NVIDIA H100 Tensor Core GPUs for LLM inference workloads, and reduces cost and energy consumption by up to 25x.”

2. Deep Learning Accelerator

The company created the Deep Learning Accelerator (“DLA”) to serve edge computing applications with tight space or power budgets. NVIDIA describes DLAs on its website (emphasis added):

NVIDIA’s AI platform at the edge gives you the best-in-class compute for accelerating deep learning workloads. DLA is the fixed-function hardware that accelerates deep learning workloads on these platforms, including the optimized software stack for deep learning inference workloads.

While NVIDIA avoids using the term ASIC in the above explanation, some speculate that DLAs are custom ASICs designed for specific deep learning tasks on different edge computing platforms. As the company describes them, DLAs do not appear to be standalone devices but rather hardware accelerators for deep learning on a specialized SoC, which may also incorporate CPUs (central processing units) and GPUs.

The company uses DLAs in its Jetson platform, which it also describes on its website: “The Jetson platform includes small, power-efficient production modules and developer kits that offer the most comprehensive AI software stack for high-performance acceleration to power Generative AI at the edge, NVIDIA Metropolis and the Isaac platform.” NVIDIA Metropolis is a platform that enables IoT devices, and the Isaac platform provides tools for developing robots. The Jetson family includes:

- Jetson Nano: The company designed this platform for edge AI applications requiring only a tiny amount of processing power and little need for a large memory footprint, such as basic image recognition.

- Jetson Xavier: The company designed this platform to address basic autonomous machine applications that need more processing power than Nano yet still require solid power efficiency.

- Jetson Orin: The company designed this platform for edge computers using generative AI, computer vision, and advanced robotics. Orin is NVIDIA’s highest-performing option within the Jetson family.

3. Entering the customized chip market

Seeking Alpha published an article about NVIDIA’s potential competition in the customized chip market in early February. The article stated:

Nvidia’s H100 and A100 chips act as a generalized, all-purpose AI processor for several vital customers. However, many tech companies have begun to develop their own internal chips. Nvidia aims to help these companies develop custom AI chips that have gone to competitors such as Broadcom and Marvell Technology.

A Reuters article states that the customized AI chip market size is $30 billion. The same article also said, “Nvidia officials have met with representatives from Amazon.com, Meta [Platforms] (META), Microsoft, Google and OpenAI to discuss making custom chips for them, two sources familiar with the meetings said.”

If NVIDIA manages to stave off the competition with these strategies, it may continue to achieve the revenue growth and margins investors want to see to justify its valuation.

Brief first quarter FY 2025 overview and future expectations

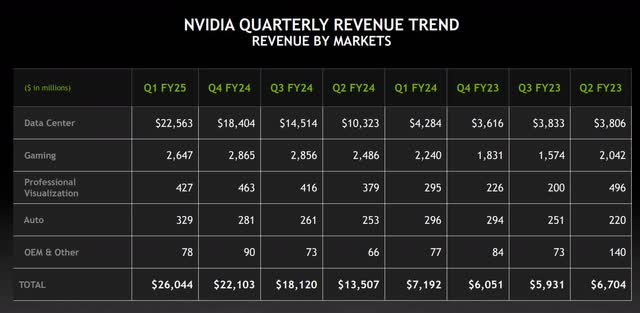

OpenAI and Microsoft introduced ChatGPT to the market in late November 2022. In 2023, the race among major cloud providers to introduce generative AI features created a heavy demand for powerful AI chips. NVIDIA was virtually the only AI chipmaker capable of providing the market with the types of chips needed to enable generative AI, and experts estimated that it grabbed over 90% of the market share for Data Center AI chips. The following table shows the company’s rapid revenue rise in the Data Center segment due to AI chip sales.

NVIDIA First Quarter FY 2025 Quarterly Revenue Trend

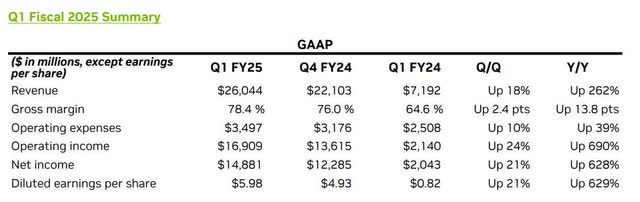

First quarter FY 2025 Data Center revenue growth of 427% year-over-year drove total revenue growth of 262%. Some may say that AI and generative AI are all hype. However, unlike the dot-com era, companies like NVIDIA that benefit from the proliferation of AI have actual bottom-line results. The following table summarizing the company’s first quarter FY 2025 results shows that diluted earnings-per-share grew 629%, from $0.82 in the first quarter of FY 2024 to $5.98 in the first quarter of FY 2025.

CFO Commentary on First Quarter Fiscal 2025 Results
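The growth percentages quoted above follow from simple ratio arithmetic. The short sketch below recomputes the diluted EPS growth from the two per-share figures in the text and shows how a growth percentage maps to a multiple of the prior-year figure. The function names are illustrative, not taken from any data provider.

```python
def pct_growth(previous: float, current: float) -> float:
    """Percentage change from `previous` to `current`."""
    return (current / previous - 1.0) * 100.0


def growth_multiple(growth_pct: float) -> float:
    """Multiple of the prior-period figure implied by a growth percentage."""
    return 1.0 + growth_pct / 100.0


# Diluted EPS quoted in the text: $0.82 (Q1 FY 2024) vs. $5.98 (Q1 FY 2025).
eps_growth = pct_growth(0.82, 5.98)
print(f"Diluted EPS growth: {eps_growth:.0f}%")  # prints 629%

# Total revenue growth of 262% means revenue was 3.62x the year-ago quarter.
print(f"Revenue multiple: {growth_multiple(262.0):.2f}x")
```

A growth rate above 100% can be easier to read as a multiple: 262% growth is 3.62 times the prior figure, not 2.62 times.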

The following chart shows that gross, operating, and net profit margins have expanded significantly over the last year and a half.

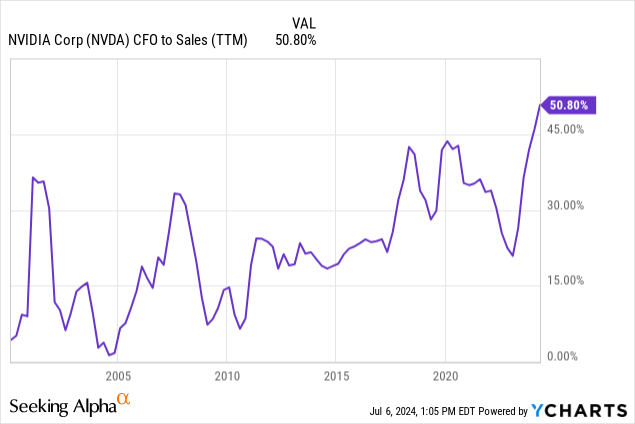

The company’s ratio of cash flow from operations (“CFO”) to sales was 50.8% during the first quarter, meaning that NVIDIA converts every $1 of sales into about $0.51 of operating cash flow. The chart below shows that this is the highest level in the company’s history.

CFO-to-Sales is one of the main drivers of how much FCF a company can produce. Every NVIDIA investor should ask themselves how much CFO-to-Sales the company can average in the long term as the market for AI chips matures, goes through a cyclical downturn, and competition begins to eat into margins. The number of years the company can sustain a ratio above 50%, and how far the average CFO-to-Sales eventually drops over the next ten years, will directly affect any valuation method based on FCF.

As of the first quarter, the company’s trailing 12-month FCF was $39.33 billion.

NVIDIA ended the first quarter of FY 2025 with $31.44 billion in cash and short-term investments against $8.46 billion in long-term debt. The company has a debt-to-equity ratio of 0.23, indicating that most of its financing is from equity rather than debt. It has a debt-to-EBITDA ratio of 0.22, meaning investors don’t need to worry about NVIDIA’s ability to pay off its debt.
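To make the link between CFO-to-Sales and FCF concrete, here is a minimal sketch using the standard definition of FCF as operating cash flow minus capital expenditures. It combines the 50.8% CFO-to-Sales figure above with the 49.30% FCF margin shown in the reverse DCF table later in this article, and it assumes (my assumption, not stated in the article) that both ratios cover the same trailing 12-month period. The lower CFO-to-Sales scenarios are purely hypothetical.

```python
def fcf_margin(cfo_to_sales: float, capex_to_sales: float) -> float:
    """FCF margin, using FCF = operating cash flow - capital expenditures."""
    return cfo_to_sales - capex_to_sales


def implied_capex_to_sales(cfo_to_sales: float, fcf_margin_value: float) -> float:
    """Capex as a share of sales implied by the two reported ratios."""
    return cfo_to_sales - fcf_margin_value


# Ratios quoted in the article (assumed to cover the same period).
capex_share = implied_capex_to_sales(0.508, 0.493)
print(f"Implied capex-to-sales: {capex_share:.1%}")

# Hypothetical scenarios: what FCF margin would look like if CFO-to-Sales
# compressed while capex intensity stayed at the implied level.
for cfo in (0.508, 0.40, 0.30):
    print(f"CFO-to-Sales {cfo:.1%} -> FCF margin {fcf_margin(cfo, capex_share):.1%}")
```

Because the implied capital intensity is small, nearly every point of CFO-to-Sales lost to competition or a downcycle would flow straight through to the FCF margin.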

The company completed a ten-for-one stock split on June 7, 2024. Although stock splits don’t affect a company’s fundamentals or actual value, some studies show that companies that perform stock splits outperform the market over the next year.

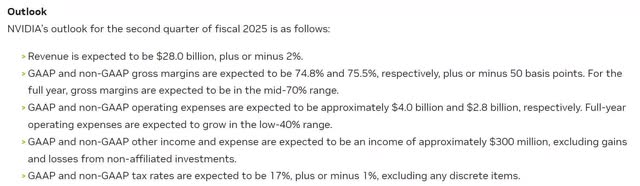

Zacks Investment Research estimates NVIDIA will report second-quarter earnings on August 28, 2024. The following table shows the company’s guidance for the second quarter of FY 2025.

NVIDIA First Quarter FY 2025 Earnings Release.

NVIDIA’s second quarter FY 2025 revenue guidance of $28 billion exceeded analysts’ estimates of $26.66 billion. If the company’s revenue hits its second-quarter guidance, it would imply year-over-year revenue growth of 107%. If it hits the midpoint of the second quarter’s GAAP (Generally Accepted Accounting Principles) gross margin guidance, gross margin would be up 470 basis points (“bps”) from the previous year’s second quarter. All of that sounds great. However, it would represent a 360-bps sequential decline from the 78.4% GAAP gross margin the company recorded in the first quarter. This sequential decline may indicate that competition is already beginning to squeeze margins.

Intel launched its Gaudi 3 AI accelerator in April 2024 with Dell Technologies (DELL), Hewlett Packard Enterprise (HPE), Lenovo (OTCPK:LNVGY)(OTCPK:LNVGF), and Super Micro Computer (SMCI) lined up as potential customers. An Intel press release claims, “Intel® Gaudi® 3 AI accelerator, delivering 50% on average better inference and 40% on average better power efficiency than Nvidia H100 – at a fraction of the cost.” Although NVIDIA’s latest H200 and the upcoming Blackwell chip may be ahead of Gaudi 3, Intel’s chip provides competition in use cases where companies may previously have chosen the H100 because it was the only AI chip available.

AMD released its MI300A and MI300X AI chips in December 2023. It unveiled its latest chip, MI325X, in early June 2024. AMD CEO Lisa Su claims that MI325X is better than NVIDIA’s H200. The company plans to follow MI325X up with MI350 in 2025 and MI400 in 2026. Although AMD and Intel may have a challenging time catching up to NVIDIA’s top chip performance any time soon, the alternative AI chips they bring to market may quickly end the era of AI chip demand outstripping supply. NVIDIA could potentially lose pricing power, resulting in declining gross margins. Investors should monitor the company’s gross margins moving forward. If gross margins trend downward too rapidly, it would likely sap much of the positive sentiment out of the stock.
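The margin and growth figures in the guidance discussion can be cross-checked with basis-point arithmetic (100 bps equals one percentage point). The sketch below backs out the guided gross margin midpoint, the year-ago gross margin, and the year-ago revenue implied by the numbers in the paragraph above. These are derived values, not figures quoted from NVIDIA’s filings, and the helper name is illustrative.

```python
BPS_PER_POINT = 100  # 100 basis points = 1 percentage point


def shift_by_bps(margin_pct: float, bps: float) -> float:
    """Move a margin expressed in percent by a number of basis points."""
    return margin_pct + bps / BPS_PER_POINT


q1_gross_margin = 78.4  # Q1 FY 2025 GAAP gross margin, percent

# A 360-bps sequential decline gives the guided Q2 midpoint.
q2_midpoint = shift_by_bps(q1_gross_margin, -360)

# That midpoint is described as 470 bps above the year-ago quarter.
prior_year_q2 = shift_by_bps(q2_midpoint, -470)

# Revenue guidance of $28 billion at 107% growth implies the year-ago revenue.
implied_prior_year_revenue = 28.0 / (1 + 1.07)

print(f"Implied Q2 FY 2025 gross margin midpoint: {q2_midpoint:.1f}%")
print(f"Implied Q2 FY 2024 gross margin: {prior_year_q2:.1f}%")
print(f"Implied Q2 FY 2024 revenue: ${implied_prior_year_revenue:.2f} billion")
```

The same margin guidance therefore reads as an improvement year-over-year and a decline quarter-over-quarter, which is why the sequential comparison is the one worth watching.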

Valuation

NVIDIA trades at a price-to-earnings (P/E) ratio of 71.65, well above its sector median of 30.31. Seeking Alpha Quant rates the stock’s overall valuation an F. The following table shows the company’s forward P/E and analysts’ EPS estimates. Generally, a good rule of thumb is that when a company’s forward P/E matches analysts’ estimated EPS growth rate (in percent) for the corresponding fiscal year, the stock is trading at roughly fair value. The market may overvalue a stock when its forward P/E exceeds the estimated EPS growth rate, and may undervalue it when the forward P/E is below that growth rate.

According to the above rule, the market may undervalue this fiscal year’s earnings growth rates based on FY 2025 EPS growth rates and forward P/E comparisons. As mind-blowing as the stock’s rise this year has been, it may continue higher in the short term. However, the market may slightly overvalue NVIDIA based on FY 2026 comparisons.
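The rule of thumb above amounts to comparing a forward P/E with the expected EPS growth rate, similar in spirit to a PEG ratio of 1. A minimal sketch of that comparison follows. The 10% tolerance band and the sample inputs are hypothetical choices for illustration only; they are not NVIDIA’s actual forward P/E or analyst estimates.

```python
def classify_by_growth_rule(forward_pe: float, eps_growth_pct: float,
                            tolerance: float = 0.10) -> str:
    """Compare forward P/E with expected EPS growth (in percent).

    A ratio near 1 is treated as fair value; `tolerance` is a hypothetical
    band around 1 and not part of the original rule of thumb.
    """
    if eps_growth_pct <= 0:
        raise ValueError("rule of thumb only applies to positive EPS growth")
    ratio = forward_pe / eps_growth_pct
    if ratio > 1 + tolerance:
        return "possibly overvalued"
    if ratio < 1 - tolerance:
        return "possibly undervalued"
    return "roughly fair value"


# Hypothetical inputs, for illustration only.
print(classify_by_growth_rule(forward_pe=50.0, eps_growth_pct=100.0))
print(classify_by_growth_rule(forward_pe=30.0, eps_growth_pct=20.0))
print(classify_by_growth_rule(forward_pe=25.0, eps_growth_pct=25.0))
```

The rule is sensitive to which fiscal year is used: a stock can screen as undervalued against a year of very high expected growth and overvalued against the following year once growth slows.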

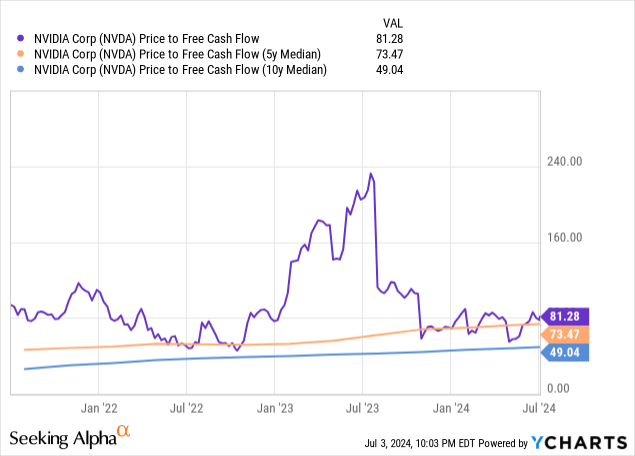

NVIDIA trades at a price-to-FCF of 81.28, above both its five-year and ten-year medians. At this valuation, some consider the stock overvalued.

Let’s do a reverse DCF on NVIDIA to see what the July 3 closing stock price implies about NVIDIA’s FCF growth over the next ten years. I will also estimate the stock’s intrinsic value today.

Reverse DCF

| Item | Value |
| --- | --- |
| First quarter FY 2025 reported free cash flow, TTM (trailing 12 months, in millions) | $39,334 |
| Terminal growth rate | 4% |
| Discount rate | 10% |
| Years 1 – 10 growth rate | 24.4% |
| Stock price (July 3, 2024, closing price) | $128.28 |
| Terminal FCF value | $363.082 billion |
| Discounted terminal value | $2,333.067 billion |
| FCF margin | 49.30% |
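The mechanics behind the table can be sketched as a two-stage model: ten years of FCF growing at a constant rate, followed by a terminal value at the terminal growth rate, all discounted at the discount rate. With the table’s inputs, this structure reproduces the table’s terminal FCF and discounted terminal value, although the exact spreadsheet behind the table is not shown, so treat the layout as an assumption. The bisection solver shows how a reverse DCF backs out the growth rate from a target value. Function names are illustrative.

```python
def dcf_value(fcf0, growth, discount, terminal_growth, years=10):
    """Return (PV of yearly FCF, terminal-year FCF, PV of terminal value)."""
    pv_flows = 0.0
    fcf = fcf0
    for year in range(1, years + 1):
        fcf *= 1 + growth
        pv_flows += fcf / (1 + discount) ** year
    terminal_fcf = fcf * (1 + terminal_growth)
    terminal_value = terminal_fcf / (discount - terminal_growth)
    pv_terminal = terminal_value / (1 + discount) ** years
    return pv_flows, terminal_fcf, pv_terminal


def implied_growth(target_value, fcf0, discount, terminal_growth, years=10):
    """Reverse DCF: bisect for the growth rate whose value equals the target."""
    low, high = -0.5, 1.0
    for _ in range(100):
        mid = (low + high) / 2
        pv_flows, _terminal_fcf, pv_terminal = dcf_value(
            fcf0, mid, discount, terminal_growth, years)
        if pv_flows + pv_terminal < target_value:
            low = mid  # value too small, so growth must be higher
        else:
            high = mid
    return (low + high) / 2


# Inputs from the table, in billions of dollars.
pv_flows, terminal_fcf, pv_terminal = dcf_value(39.334, 0.244, 0.10, 0.04)
print(f"Terminal FCF: ${terminal_fcf:,.3f} billion")
print(f"Discounted terminal value: ${pv_terminal:,.3f} billion")
print(f"Total present value: ${pv_flows + pv_terminal:,.1f} billion")

# Round trip: recover the growth rate from the total value just computed.
growth = implied_growth(pv_flows + pv_terminal, 39.334, 0.10, 0.04)
print(f"Implied growth rate: {growth:.1%}")
```

In practice the target passed to `implied_growth` would be the company’s market value at the chosen stock price; the round trip here only demonstrates that the solver recovers the 24.4% input.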

One analyst estimates that NVIDIA’s revenue will grow at a 20.11% CAGR to reach $380.90 billion in 2034. If that estimate proves true and NVIDIA maintains a 49% FCF margin for the next ten years, FCF can only grow about as fast as revenue, so the company will be unable to achieve the 24.4% FCF growth that the stock price implies. Since NVIDIA will likely encounter much more competition in AI chips over the next ten years than it does today, it is unlikely to increase, or even maintain, an FCF margin of at least 49%. Let’s assume it can achieve a still-aggressive average FCF margin of 35% and grow revenue at 20% annually over the next ten years; the estimated intrinsic value is then $65.85, or 48.66% below the current stock price.
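The gap between the analyst’s revenue path and the growth implied by the stock price can be checked with a compound annual growth rate (CAGR) calculation. The sketch below takes the figures from the paragraph above and assumes (my simplification) ten compounding years between the trailing 12-month FCF of $39.334 billion and 2034.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


revenue_2034 = 380.90            # analyst revenue estimate, $ billions
fcf_2034 = revenue_2034 * 0.49   # FCF if a 49% margin is maintained
fcf_now = 39.334                 # trailing 12-month FCF, $ billions

implied_fcf_cagr = cagr(fcf_now, fcf_2034, 10)
print(f"FCF in 2034 at a 49% margin: ${fcf_2034:.1f} billion")
print(f"Implied FCF CAGR: {implied_fcf_cagr:.1%}")
print(f"Required by the reverse DCF: {0.244:.1%}")

# Starting revenue implied by a 20.11% CAGR ending at $380.90 billion.
base_revenue = revenue_2034 / (1 + 0.2011) ** 10
print(f"Implied starting revenue: ${base_revenue:.1f} billion")
```

Under these assumptions, FCF compounds at roughly 17% a year, well short of 24.4%, even before allowing for any decline in the FCF margin.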

However, the stock will likely maintain a relatively high valuation over the next one to three years, since analysts expect revenue to grow at a CAGR of 44.89% over the next three years and FCF margins to remain around 49.5% over the same period. No one should be surprised if the stock stubbornly stays above most people’s fair value estimates in the near term. However, after three years, the company’s likelihood of maintaining the revenue growth and margins investors want to see to justify the current valuation begins to decrease.

Why I maintain my hold rating

If you are a momentum investor looking only for short-term profits and willing to accept the risks, you may still be able to buy the stock and achieve gains over the next year due to the optimistic sentiment surrounding the company. However, the market overvalues the stock by several important valuation measures. If the company disappoints in the slightest, positive sentiment surrounding it could quickly evaporate. Therefore, most prudent investors would be better off not buying NVIDIA today. Buy-and-hold investors looking for long-term gains may have better places to invest their hard-earned dollars.

Investors with existing shares may hold on to them, as the stock can potentially go higher in the near term if the company meets high expectations for nearly triple-digit FY 2025 year-over-year revenue and EPS growth with almost 50% FCF margins. Analysts also expect revenue to grow an additional 33% in FY 2026. The chip industry is cyclical and competitive. NVIDIA will likely eventually encounter difficulties due to a downcycle in the AI chip market or competitors taking market share and squeezing margins. How long its current good fortunes will last is unknowable. Therefore, if you hold the stock, monitor earnings results closely for signs that the company may encounter difficulties. I maintain my Hold rating on NVIDIA.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of GOOGL, AMZN either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.