Summary:

- The Q3 earnings result is decent, but investors need a long-term view, as Intel Foundry’s long lead times continue to weigh on Intel’s consolidated margin.

- INTC’s extensive presence in the enterprise will be an edge as it focuses its AI strategy on this segment.

- Retrieval-augmented generation and small language models can be the catalyst the company needs to gain traction in the booming AI market.

- Acquisition interest in Intel improves the odds for investors, as this can limit the potential downside.


Introduction

Intel Corporation (NASDAQ:INTC), one of the world’s leading chipmakers, has struggled to benefit from the rise of AI. Intel’s missteps in GPU development helped leave Nvidia (NVDA) dominant in this market, a position that has made Nvidia the world’s most valuable company. Left behind in the race to build the most powerful AI accelerators for the hyperscalers, Intel is shifting its AI strategy toward enterprises. I believe this could rejuvenate its stagnating Data Center and AI (DCAI) revenue. Intel’s existing ecosystem will be crucial in gaining traction in this area against Nvidia and AMD.

To borrow Wayne Gretzky’s famous line, ‘I skate to where the puck is going to be, not where it has been.’ Intel is going where the money will be, not where it currently is.

2024 Q3 Earnings Takeaway

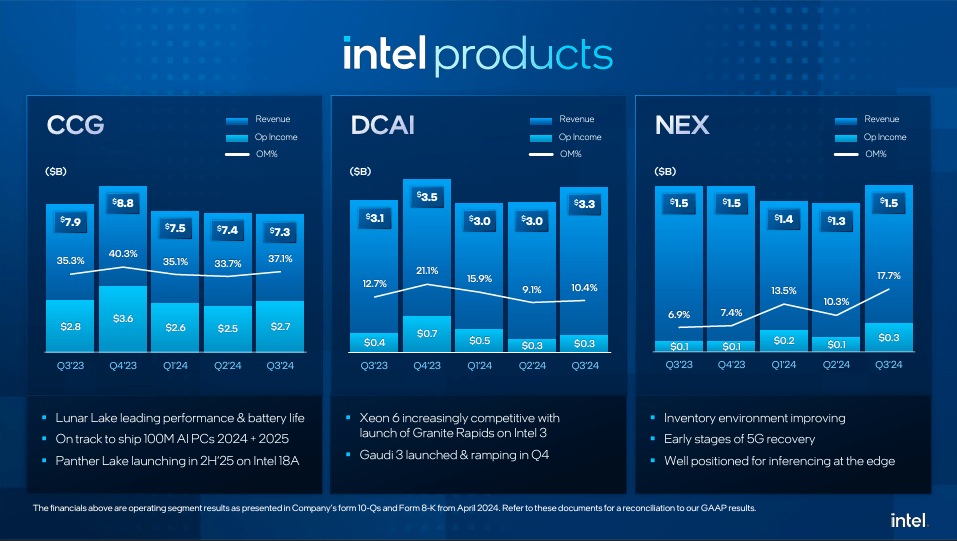

Intel reported a decent Q3 2024. Revenue of $13.3B came in above the midpoint of its guidance. Excluding the one-time impairment charges, Q3 non-GAAP gross margin was around 41%, ahead of the 38% guidance. As a standalone business, Intel Products delivered $12.2B in revenue with a 27% operating margin.

Intel Q3 Investor Presentation – Intel Products

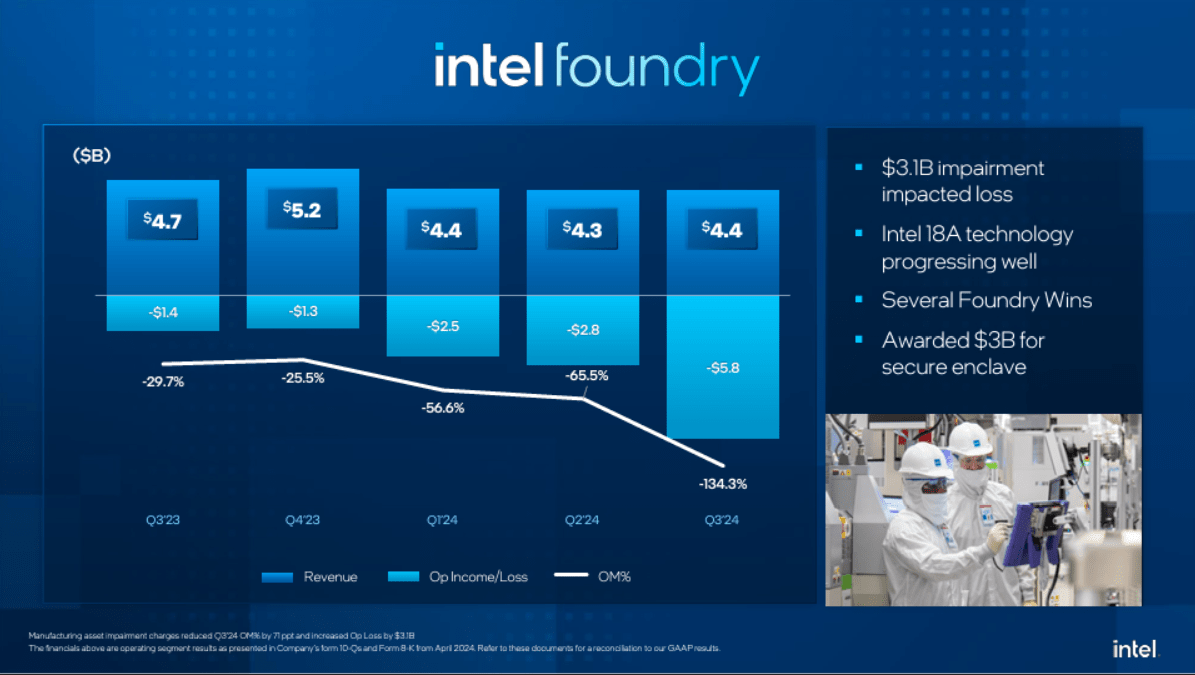

Intel Foundry reported revenue of $4.4 billion and an operating loss of $5.8 billion, which included $3.1 billion in impairment charges related to Intel 7 tools and equipment.

Intel Q3 Investor Presentation – Foundry

As management rapidly shifts Intel Foundry’s wafer output toward EUV-based process nodes, it makes sense to impair these Intel 7 assets. The impairment should be a tailwind for future consolidated operating margin, because the written-down assets no longer have to be expensed through depreciation.
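To make the depreciation mechanism concrete, here is a minimal Python sketch. It uses the Q3 figures reported above ($5.8B operating loss, $3.1B impairment) and assumes straight-line depreciation over a hypothetical five-year remaining useful life. Intel’s actual depreciation schedule for these tools is not given here, so the annual saving it prints is illustrative only.

```python
# Illustrative sketch: how a one-time impairment changes reported foundry results.
# Reported Q3 2024 figures (from the article), in billions of USD.
FOUNDRY_OPERATING_LOSS = 5.8
IMPAIRMENT_CHARGE = 3.1

# HYPOTHETICAL assumption: remaining useful life (in years) of the impaired
# Intel 7 tools, depreciated straight-line. Not an Intel-disclosed figure.
ASSUMED_REMAINING_LIFE_YEARS = 5


def loss_excluding_impairment(operating_loss: float, impairment: float) -> float:
    """Operating loss with the one-time impairment charge added back."""
    return operating_loss - impairment


def annual_depreciation_avoided(impairment: float, remaining_life_years: float) -> float:
    """Straight-line depreciation that no longer hits future income statements
    because the assets' carrying value was written down up front."""
    if remaining_life_years <= 0:
        raise ValueError("remaining_life_years must be positive")
    return impairment / remaining_life_years


if __name__ == "__main__":
    underlying = loss_excluding_impairment(FOUNDRY_OPERATING_LOSS, IMPAIRMENT_CHARGE)
    saving = annual_depreciation_avoided(IMPAIRMENT_CHARGE, ASSUMED_REMAINING_LIFE_YEARS)
    print(f"Foundry operating loss ex-impairment: ${underlying:.1f}B")
    print(f"Depreciation avoided per year (assumed {ASSUMED_REMAINING_LIFE_YEARS}-yr life): ${saving:.2f}B")
```

Under these assumptions, the underlying quarterly foundry loss is about $2.7B, and future periods carry roughly $0.62B less depreciation per year. A shorter assumed remaining life would make the annual saving larger.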

To summarize, Intel Products continues to perform well, and I expect that to last. Its recent product releases have been very competitive, which should have a material positive effect on Intel’s financials as these new products ramp up.

On the Foundry side, I will maintain a long-term view. As emphasized in my previous article on Intel Foundry, investors should attribute its near-term struggles to the long lead times of new products and to the ongoing glut in semiconductor segments with limited exposure to AI, which is clearly weighing on the wafer volume Intel Foundry receives from Intel Products.

Enterprise Focus Approach On AI

Despite the explosion in AI’s popularity, one thing is clear: most companies in the downstream market are still losing money, and enterprises that want to ride the AI trend are struggling to move their proofs of concept (POCs) into production. As a result, enterprises are becoming more prudent with their AI investments, as they want certainty that those investments will create value for their business.

With strong demand from hyperscalers and AI startups, the upfront cost of AI investments has risen significantly. The large initial investment and uncertain use cases have created a barrier for enterprises that want to integrate generative AI into their businesses. Intel is taking advantage of this trend by positioning itself toward enterprises that want to integrate generative AI but cannot afford to commit a large amount of capital or absorb significant losses.

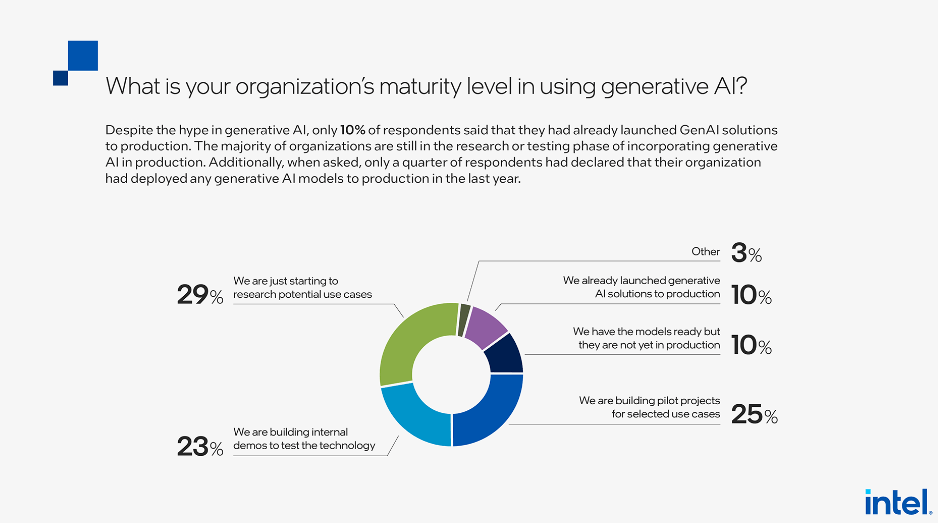

As with any new technology, generative AI must go through a proof-of-concept phase before moving to production. According to Intel’s survey, only 10% of the organizations surveyed have managed to move a generative AI POC into production.

Intel Survey

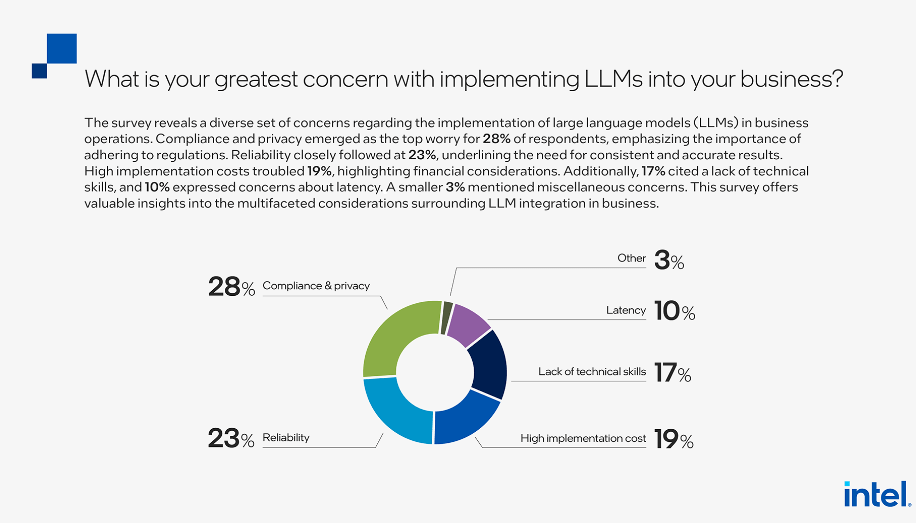

Respondents were also asked what the biggest hurdle is in implementing LLMs in their business.

Intel Survey

Despite the hype surrounding generative AI’s potential, hurdles remain before it can deliver value to enterprises. The basis of competition will therefore shift from delivering the best AI performance toward helping these enterprises create value from their AI investments. Intel’s open ecosystem and extensive partnerships position it well to capitalize on this need. An open software ecosystem tends to fuel innovation, as other software companies find ways to create unique value propositions within their respective niches. For those efforts to pay off, these companies need hardware and software toolkits with wide adoption and scale. With Intel’s CPU market share at around 80% in both server and client, it offers the adoption and scale that independent software vendors (ISVs) need to justify the development effort.

Intel’s existing partnerships with IT service companies such as IBM (IBM), Accenture (ACN), and Infosys (INFY) will be crucial in enabling generative AI adoption in enterprises. Corporate IT departments will rely on outside vendors with expertise and scale to make generative AI integration as cost-effective as possible, and the shortage of AI talent further reinforces the importance of IT service companies going forward. Again, an open ecosystem will be critical for these IT service companies as they seek diverse solutions to avoid vendor lock-in. They will want to integrate as many value-adding activities as possible into their offerings to improve their profit margins, and an open ecosystem makes this easier to achieve.

Additionally, factors where Intel is already very strong, such as security, compatibility, and scalability, will be a significant value proposition for enterprises that want to run generative AI on-premises or in hybrid infrastructure. With approximately 40% of traditional computing workloads currently running in the cloud and the rest running on-premises or in hybrid environments, it is reasonable to assume that AI workloads will run in the same places. Why? Because the data will stay where it currently resides.

While Nvidia’s full-integration approach will continue to dominate AI in the cloud, Intel has a chance to gain traction in the enterprise, where the priority is cost-effective implementation. Intel’s position as the preferred server CPU among IT service providers and as the head-node CPU in OEM AI servers gives it an edge, since it can use these existing channels to roll out its AI accelerators. Lastly, enterprises are not as demanding as the hyperscalers, which want the leading-edge accelerators on the market to squeeze out every bit of AI performance. Enterprises just need a good-enough AI accelerator that does the job cost-efficiently, and Intel Gaudi 3 can meet that need.

SLM And RAG: A Potential Catalyst For Intel

The release of ChatGPT and its human-like responses made Large Language Models (LLMs) popular among consumers and enterprises. As a result, enterprises are exploring how LLMs can automate or improve productivity in parts of their value chain, such as business process automation, AI agents, and chatbots. While LLMs are very useful for general inquiries, they can hallucinate when asked questions that depend on data specific to the enterprise. You could further train and fine-tune the model to reduce hallucinations, but this can be very expensive for an average enterprise. Additionally, current LLMs overshoot enterprise needs: an enterprise mainly needs a language model that works with its own exclusive data, whereas LLMs are trained on data available on the internet. This makes LLMs a jack of all trades, good at everything in general but weaker at very specific jobs. This predicament has fueled interest in small language models (SLMs), which can be a cost-effective way for enterprises to integrate AI into their operations.

SLMs can be trained on data exclusive to the business and require less compute. Because of their smaller scale, they don’t need best-in-class GPUs from Nvidia to function properly. Combined with Retrieval-Augmented Generation (RAG), which retrieves relevant, up-to-date proprietary documents at query time and feeds them to the model, SLMs can give an enterprise reliable answers grounded in its own data.

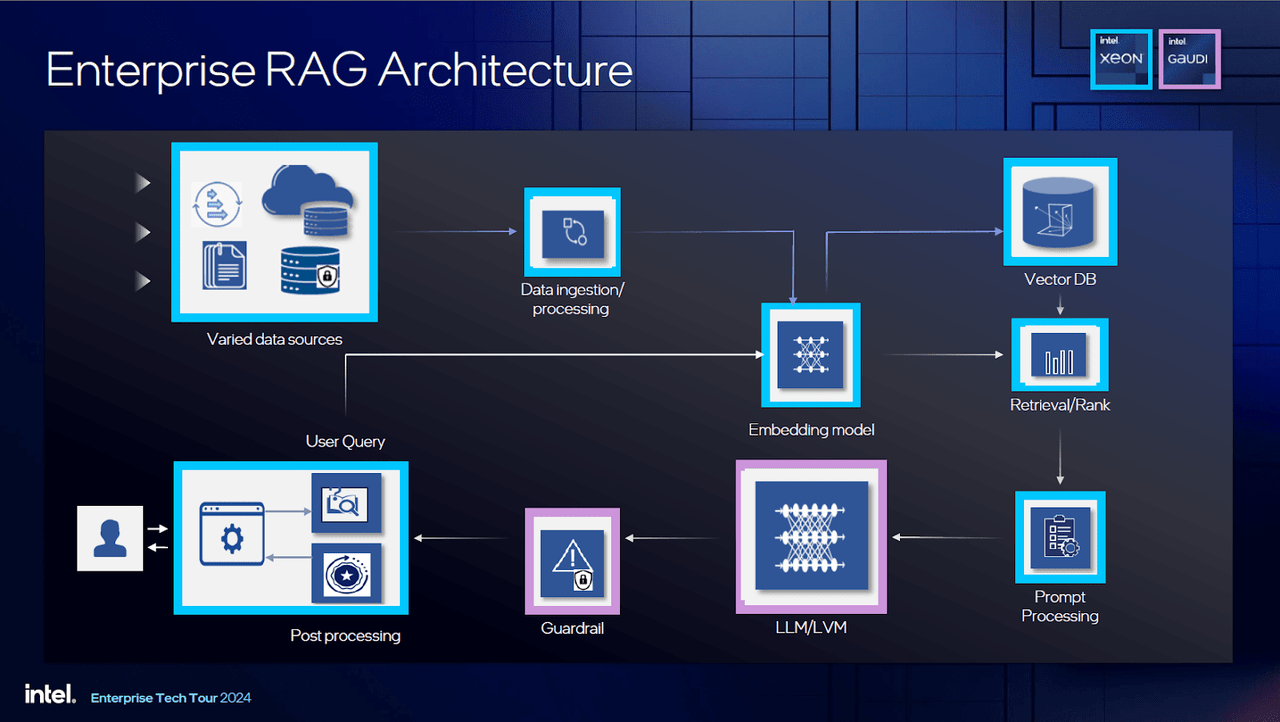

Nvidia’s full integration, from networking to software, will be overkill for this SLM + RAG architecture. I believe Intel’s portfolio of Xeon with AMX and Gaudi, together with its open ecosystem and extensive partnerships, puts it in a good position to tackle this developing trend. This is why Intel is focusing on the enterprise rather than the cloud, where I expect Nvidia’s domination to continue.

Scalability will be a differentiator for Intel, as enterprises typically want to keep the startup cost of a POC small before scaling it into production. For example, an enterprise that wants a chatbot that answers from its own data may want to start with an internal process as a proof of concept, as cheaply as possible. It could first invest in Intel Xeon with AMX to run the RAG pipeline and a couple of Gaudi AI accelerators to train the SLM, then scale its hardware gradually once it is ready to extend AI to the rest of the enterprise or to the customer-facing side of the business. If latency and privacy are not a concern, the RAG components can stay on-premises while the LLM runs in the cloud, such as IBM Cloud. The picture below shows a RAG architecture design for enterprises.

Intel Enterprise Tech Tour 2024
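The retrieval half of this design can be sketched in a few lines. The Python below is a minimal, assumption-laden illustration, not Intel’s reference stack: the documents are hypothetical, simple word-count cosine similarity stands in for the embedding model and vector database a production RAG system would use, and the call to the SLM itself is left out. It shows the core idea: find the enterprise’s own text most relevant to a question and place it in the prompt, so the model answers from that data rather than from memory.

```python
import math
import re
from collections import Counter

# HYPOTHETICAL internal knowledge base. In a real deployment these would be
# chunks of enterprise documents indexed in a vector database.
DOCUMENTS = [
    "Expense reports must be submitted within 30 days of purchase.",
    "The VPN client must be updated before connecting from home.",
    "New employees receive laptops from the IT service desk on day one.",
]


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a simple stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Ground the language model by placing the retrieved text in the prompt."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{joined}\n"
        f"Question: {query}\n"
    )


if __name__ == "__main__":
    question = "How many days do I have to submit expense reports?"
    context = retrieve(question, DOCUMENTS, k=1)
    print(build_prompt(question, context))
    # The prompt would then be passed to the SLM (or a cloud-hosted LLM).
    # That model call is omitted because it depends on the chosen stack.
```

The design point this illustrates is that retrieval and prompt assembly are ordinary CPU work, which is why the author expects Xeon to handle the RAG side while accelerators are reserved for training or serving the language model.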

With Intel’s product portfolio, enterprise customers can explore configuration options suited to their budget and objectives, whether they are a multi-billion-dollar enterprise or a small business. With Intel having shipped more than a million AMX-equipped Xeon processors, this could be the beachhead Intel needs for its Gaudi accelerators to gain traction in the booming AI market ahead of the eventual release of its GPU accelerator, Falcon Shores. It could also stimulate demand for Intel Xeon as enterprises start adopting RAG in their AI architecture designs.

According to Gartner, worldwide IT spending will increase by 9.3% in 2025. Interestingly, IT services spending growth is expected to accelerate from 5.6% to 9.4%, while data center spending growth slows to 15.5% from 34.7% in 2024. This forecast suggests that enterprises are looking to capitalize further on their AI investments and will need the help of IT service companies to put their AI hardware to work. Does the slowdown in data center spending mean Intel has less opportunity in AI? No, it just means enterprises are becoming more prudent with their hardware investments. Going forward, the winners will be companies that can give customers more certainty about their AI investments at a lower cost, or that make AI easier to adopt, and Intel and the partners mentioned earlier are well positioned on both counts. Additionally, because SLMs are smaller, they don’t need the most powerful AI accelerators from Nvidia and AMD; Intel’s Gaudi 3 provides adequate performance for SLMs in a cost-effective and energy-efficient manner. Intel’s partnerships with enterprise IT service companies such as IBM and Accenture will play a significant role, as these firms can influence which hardware enterprise decision-makers, such as CIOs, choose to adopt.

Valuation: Acquisition Interest Improves Investor’s Odds

Rule No.1: Never lose money. Rule No.2: Never forget Rule No.1.

– Warren Buffett

With Intel selling at a significant discount, companies in the semiconductor industry, such as Qualcomm and Arm, have expressed interest in acquiring it. Given its potential upside, I would be saddened if Intel were acquired. As an investor, however, I take the interest as positive news. Why positive? Because it improves my odds: I have limited downside since Intel is selling below book value, and a potential takeover bid could cover the price I’m paying.

Of course, as an investor, I want some upside. Where will that upside come from? Either from a potential separation of Intel or from the success of the company as a whole. Intel’s value as separate businesses is also why I don’t think an acquisition by another company will materialize. If separated, I believe Intel Products would be worth around $200B: take its current operating profit of roughly $10B a year and apply the roughly 20x multiple that I believe some fabless semiconductor companies trade at. Despite what some say, Intel’s position in the PC and server markets, especially in the enterprise, remains strong and should strengthen as it ramps up its recently released products. Thus, if the board does its job of maximizing shareholder value, it would be better to spin off the foundry business and let the open market realize Intel Products’ true value than to be bought at a small premium over the current price, only for the acquirer to spin off the foundry after the acquisition. Additionally, tying the Intel CEO’s compensation to stock performance creates significant pressure on him to take measures that will drive up the price quickly.

Again, as emphasized in the valuation section of my previous article, at the current price you are paying for about half of what Intel Products would be worth as a separate company and getting the rest for free. The only change is that recent acquisition interest has further improved our odds and limited our downside. After opening my initial position at around $30, I have been aggressively averaging down since the stock plunged to $18-$20 per share.
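The arithmetic behind this view is simple enough to write down. The sketch below uses the author’s own estimates ($10B annual operating profit, a ~20x fabless multiple). The $100B market cap is a hypothetical round number chosen only to illustrate the ‘half the worth’ claim, not a quoted figure, so substitute Intel’s actual market cap when using it. The sketch also ignores net debt and any value assigned to the Foundry, which a fuller sum-of-the-parts analysis would include.

```python
# Sketch of the article's sum-of-the-parts reasoning, in billions of USD.
INTEL_PRODUCTS_OPERATING_PROFIT = 10.0  # "roughly $10B annually" (author's estimate)
FABLESS_MULTIPLE = 20.0                 # "around 20x" (author's estimate)


def standalone_value(operating_profit: float, multiple: float) -> float:
    """Value a business as its operating profit times a peer multiple."""
    return operating_profit * multiple


def fraction_paid(market_cap: float, products_value: float) -> float:
    """Share of Intel Products' standalone value covered by the market cap.
    Whatever remains (e.g. the Foundry) is effectively acquired for free."""
    if products_value <= 0:
        raise ValueError("products_value must be positive")
    return market_cap / products_value


if __name__ == "__main__":
    products = standalone_value(INTEL_PRODUCTS_OPERATING_PROFIT, FABLESS_MULTIPLE)
    print(f"Intel Products standalone value: ${products:.0f}B")
    # HYPOTHETICAL input: replace with Intel's actual market cap at the time of reading.
    hypothetical_market_cap = 100.0
    share = fraction_paid(hypothetical_market_cap, products)
    print(f"Fraction of Intel Products value paid: {share:.0%}")
```

With these inputs the script reports a $200B standalone value and a 50% fraction paid. The conclusion is only as good as the two estimates: a 15x multiple, for instance, would cut the standalone value to $150B.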

Risk

Competition

Despite Intel’s significant presence in the enterprise market, the competition is not standing still. Anticipating more democratized AI, Nvidia introduced NIM, which allows enterprises and consumers to run smaller-scale AI workloads, such as language models and RAG, on their own Nvidia GPUs. AMD is also positioning its strong product portfolio, which includes EPYC CPUs and AI accelerators, toward the enterprise. I believe both companies’ prioritization of ever more powerful AI GPU accelerators will oversupply performance for less demanding enterprise buyers, which could give Intel an opening to target these customers.

Enterprise Adoption

While I believe that AI workloads will run in the same places as traditional workloads, there is no guarantee that this will happen. As with traditional computing, it can make sense for enterprises to put their AI workloads in the cloud because of its lower cost and scalability. However, as mentioned earlier, I believe enterprise AI infrastructure will resemble traditional infrastructure because the data will stay where it currently is. This means the typical decision factors when choosing an infrastructure design (on-premises, hybrid, or cloud) will be the same for AI: latency, security, and data privacy.

Conclusion

Intel’s focus on the enterprise sector is a good move, as it leverages all of its existing strengths. Observers have often attributed Intel’s competitive advantage to the manufacturing capabilities that allowed it to dominate the CPU market for decades; I believe that is overemphasized. Intel’s true competitive edge lies in its distribution network, where it excels at enabling and supporting partners in bringing its products to their customers. With manufacturing no longer its main strength and now playing catch-up, Intel’s distribution channel will play an even more important role as it navigates these challenging times. If Intel’s manufacturing does deliver on its promise of regaining leadership, the upside could be significant. Considering all of the above, whenever I see Intel at its current price, the first quote that comes to mind is from Mohnish Pabrai: ‘Heads I win, tails I don’t lose much.’

Analyst’s Disclosure: I/we have a beneficial long position in the shares of INTC either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.