Summary:

- Early in this AI race, the market considered Amazon’s AWS a laggard behind Microsoft Azure and Google Cloud. However, Amazon has been making considerable progress over the past 18 months.

- In previous articles, we covered how AWS has been opportunistically leveraging the popularity of open-source models and its own massive data repository advantage to catch up with Microsoft and Google.

- Now, going even further, investors may be overlooking just how shrewdly Amazon has been playing this AI revolution and the extent to which AWS is winning under the hood.

Thos Robinson

At the beginning of this AI revolution, the market considered Amazon’s (NASDAQ:AMZN) (NEOE:AMZN:CA) AWS a laggard behind Microsoft Azure and Google Cloud, though investors have been acknowledging the progress AWS has made over the past 18 months.

In the previous article, we covered how Amazon has been savvy in capitalizing on enterprises’ growing preference for open-source models, including by partnering closely with Meta Platforms (META) to deploy its popular Llama series. We discussed how Amazon gained market share in Q2 2024, while its chief rival, Microsoft (MSFT) Azure, lost market share.

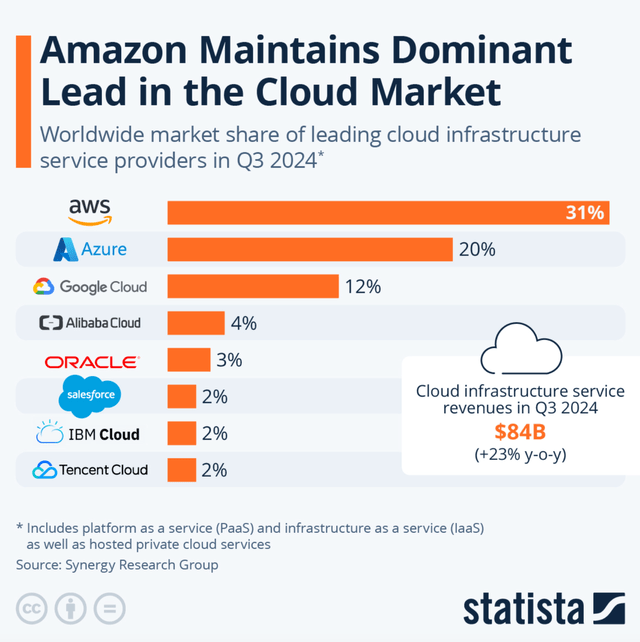

Over Q3 2024, the trend of Microsoft Azure losing market share continued, with its share falling from 23% to 20%. While AWS’ market share also contracted slightly from the previous quarter, from 32% to 31%, it is certainly holding up better than Microsoft Azure.

Statista, Synergy Research Group

In case you were curious, Google (GOOG) Cloud’s market share held steady at 12%, which was the same as the previous quarter.

Over the past several months, I have been turning bearish on MSFT and instead advising investors to buy AMZN, given how the tables are turning in AWS’ favor, culminating in the market share trends we are now witnessing.

As it turns out, the bull case for Amazon’s AWS is only getting stronger.

AWS is proving to be a force to be reckoned with

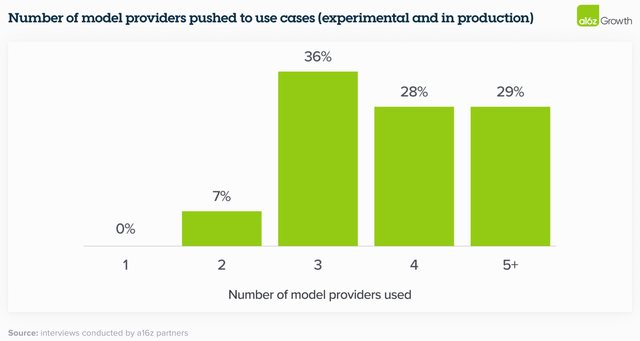

In order to understand Amazon’s savvy strategies to win at AI, it is important to acknowledge a broader industry-wide trend regarding enterprise adoption of AI technology.

When we talked to enterprise leaders today, they’re all testing—and in some cases, even using in production—multiple models, which allows them to 1) tailor to use cases based on performance, size, and cost, 2) avoid lock-in, and 3) quickly tap into advancements in a rapidly moving field.

…

Most enterprises are designing their applications so that switching between models requires little more than an API change. Some companies are even pre-testing prompts so the change happens literally at the flick of a switch, while others have built “model gardens” from which they can deploy models to different apps as needed. Companies are taking this approach in part because they’ve learned some hard lessons from the cloud era about the need to reduce dependency on providers, and in part because the market is evolving at such a fast clip that it feels unwise to commit to a single vendor.

– Research report from Andreessen Horowitz (March 2024)

Indeed, enterprises are reluctant to get locked into using only one or two models for powering their generative AI applications, and are seeking technology that enables easy switching between multiple models.
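To make the "little more than an API change" idea concrete, here is a minimal sketch of an application that routes prompts through a small "model garden" registry. Everything in it is an assumption for illustration: `model_a`, `model_b`, `MODEL_GARDEN`, and `generate` are hypothetical names, the two models are stubs that simply echo the prompt, and none of this reflects the actual Bedrock, Azure, or Vertex AI interfaces.

```python
from typing import Callable, Dict

# Hypothetical stand-ins for real model clients: each takes a prompt, returns text.
def model_a(prompt: str) -> str:
    return f"[model-a] {prompt}"

def model_b(prompt: str) -> str:
    return f"[model-b] {prompt}"

# The "model garden": a registry keyed by a name the application can configure.
MODEL_GARDEN: Dict[str, Callable[[str], str]] = {
    "model-a": model_a,
    "model-b": model_b,
}

def generate(prompt: str, model_name: str) -> str:
    """Route the prompt to whichever model the configuration names."""
    try:
        backend = MODEL_GARDEN[model_name]
    except KeyError:
        raise ValueError(f"Unknown model: {model_name}") from None
    return backend(prompt)

# Switching models is a one-line configuration change, not an application rewrite.
ACTIVE_MODEL = "model-a"
print(generate("Summarize the Q3 results.", ACTIVE_MODEL))
```

Because the application only ever calls `generate`, swapping `ACTIVE_MODEL` to `"model-b"` changes the backend without touching any other code, which is the kind of low switching cost the enterprises in the survey are after.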

Acknowledging this trend among enterprises, AWS has been savvy in embedding such flexible model-switching capabilities into its flagship Bedrock service for generative AI workloads.

We also continue to see teams use multiple model types from different model providers and multiple model sizes in the same application. There’s mucking orchestration required to make this happen. And part of what makes Bedrock so appealing to customers and why it has so much traction is that Bedrock makes this much easier.

– CEO Andy Jassy, Q3 2024 Amazon earnings call

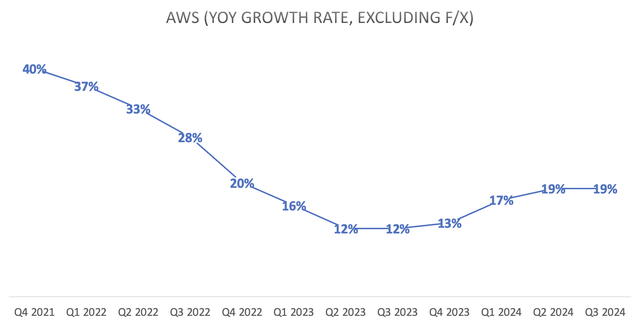

Because Amazon was already behind in the generative AI race, lacking a leading, exclusive AI model of the kind Microsoft secured through OpenAI and Google built with Gemini, AWS has little to lose from allowing cloud customers to easily switch out one model for another in their applications. This in turn augments the appeal of Amazon’s Bedrock over similar services from the other major cloud providers. This is not to say that the Microsoft Azure AI service and Google Cloud’s Vertex AI do not facilitate model switching, but AWS Bedrock has clearly taken the lead on this, which aligns with what enterprises need. It is strategies and service differentiators like these that are resulting in AWS delivering a robust revenue growth rate of 19%, despite being considerably larger than its two main cloud rivals.

Nexus Research, data compiled from company filings

Aside from flexible model-switching capabilities, another prevalent requirement among enterprise customers is the need for more cost-effective computing solutions when it comes to training AI models and running inference. This is where Amazon’s custom chip advantage comes into play.

while we have a deep partnership with NVIDIA, we’ve also heard from customers that they want better price performance on their AI workloads. As customers approach higher scale in their implementations, they realize quickly that AI can get costly. It’s why we’ve invested in our own custom silicon in Trainium for training and Inferentia for inference. The second version of Trainium, Trainium2 is starting to ramp up in the next few weeks and will be very compelling for customers on price performance. We’re seeing significant interest in these chips, and we’ve gone back to our manufacturing partners multiple times to produce much more than we’d originally planned.

– CEO Andy Jassy, Q3 2024 Amazon earnings call (emphasis added)

The need for Amazon to increase the supply of its own custom silicon is an encouraging bullish development, as it not only reduces reliance on Nvidia going forward, but also gives the company more control over its technology stack, which in turn yields operating cost efficiencies.

In fact, it is worth noting that the new CEO of AWS, Matt Garman, whom the company appointed in June 2024, has been playing a crucial role in Amazon’s custom chip endeavors for many years now. He was the vice president of the project for deploying the very first custom CPUs, the Graviton series, all the way back in 2018. So he’s well-versed in inducing cloud customers to use computing instances powered by AWS’ in-house silicon over third-party options.

This is a man who has been at AWS since 2006 and has been deeply involved in Amazon’s semiconductor projects from the very beginning. His valuable experience in driving deep integrations between the hardware and software layers of AWS should continue to yield greater cost efficiencies that can be passed on to customers to drive further market share gains.

Furthermore, over the past quarter since Garman took the reins at AWS, the company has been aggressively inking deals with noteworthy AI companies, including Anthropic and Databricks, to induce greater deployment of its own custom silicon over Nvidia’s expensive GPUs.

Amazon and startup Databricks struck a five-year deal that could cut costs for businesses seeking to build their own artificial-intelligence capabilities.

Databricks will use Amazon’s Trainium AI chips to power a service that helps companies customize an AI model or build their own.

…

Customers can expect to pay about 40% less than they would using other hardware, said Dave Brown, vice president of AWS compute and networking services.

So while the bears argue that Amazon AWS fell behind in the AI race given the absence of its own flagship AI model, the cloud leader is savvy in leveraging its advantage in the custom chips space to regain some lost ground. AWS undoubtedly has a wide lead over Azure when it comes to designing and deploying its own in-house chips, with Microsoft only having introduced its own “Maia accelerators” last year in November.

Risks to the bull case

The fact that AWS has years of experience designing its own server chips to handle AI workloads is certainly an advantage, but Google Cloud holds the clear lead when it comes to custom silicon, given that its line of Tensor Processing Units (TPUs) is specifically designed to run generative AI workloads. A few months ago it was revealed that Apple opted for Google’s TPUs to train the AI models that power ‘Apple Intelligence’, which is a testament to just how well-prepared Google Cloud was for this AI revolution.

So while the threat of Azure taking market share from AWS is diminishing, it is still likely to be challenging for Amazon to take market share in the cloud market given the strength of Google Cloud’s technology stack, down from the silicon all the way up to its own multimodal AI model, Gemini.

Gemini API calls have grown nearly 40x in a 6-month period. – CEO Sundar Pichai, Alphabet Q3 2024 Earnings Call Transcript

Aside from Google Cloud’s leadership in the custom silicon space, demand for Nvidia’s GPUs remains high among AWS customers, resulting in the company having to continue spending on Nvidia’s hardware for the time being.

Year-to-date capital investments were $51.9 billion. We expect to spend approximately $75 billion in CapEx in 2024. The majority of the spend is to support the growing need for technology infrastructure. This primarily relates to AWS as we invest to support demand for our AI services while also including technology infrastructure to support our North America and international segments. – CFO Brian Olsavsky, Q3 2024 Amazon earnings call

So Amazon is essentially signaling that it will be spending north of $23 billion on CapEx in Q4 2024 alone. This significant step up in CapEx will indeed translate into higher depreciation costs in the years ahead, putting pressure on operating profit margins going forward.
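The implied Q4 figure follows directly from the two numbers quoted on the earnings call. A quick sketch of the arithmetic, treating "approximately $75 billion" as exactly 75.0 for illustration (the function and constant names are placeholders, not anything Amazon reports):

```python
# Figures quoted on the Q3 2024 earnings call, in billions of US dollars.
YTD_CAPEX_BN = 51.9           # capital investments through Q3 2024
FULL_YEAR_GUIDANCE_BN = 75.0  # "approximately $75 billion" expected for 2024

def implied_remaining_capex(full_year_bn: float, ytd_bn: float) -> float:
    """Return the spend implied for the rest of the year, rounded to 0.1bn."""
    return round(full_year_bn - ytd_bn, 1)

implied_q4_bn = implied_remaining_capex(FULL_YEAR_GUIDANCE_BN, YTD_CAPEX_BN)
print(f"Implied Q4 2024 CapEx: ~${implied_q4_bn}bn")
```

Since the full-year guidance is only approximate, the result should be read as a rough estimate rather than a precise forecast.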

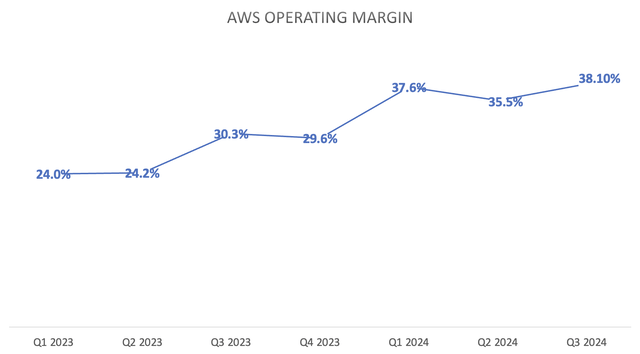

Nexus Research, data compiled from company filings

Note that the expansion in AWS’ operating margin over the past several quarters has partly been driven by accounting changes relating to the estimated useful lives of its traditional CPU-powered servers.

we increased the estimated useful life of our servers starting in 2024, which contributed approximately 200 basis points to the AWS margin increase year-over-year in Q3. As we said in the past, we expect the AWS operating margins to fluctuate, driven in part by the level of investments we’re making at any point in time.

Nonetheless, Amazon has also been making notable progress on the cost management front through effective employee headcount management, as well as the integration benefits from powering AWS computing instances with its own in-house chips over the years.

The investment case for Amazon

AMZN currently trades at just over 40x forward earnings (non-GAAP), although Forward P/E multiples don’t factor in the rate at which a company’s earnings are growing. Hence, a more comprehensive valuation metric is the Forward Price-Earnings-Growth (Forward PEG) ratio, which divides the Forward P/E multiple by the anticipated annual EPS growth rate over the next few years.

Nexus Research, data compiled from Seeking Alpha

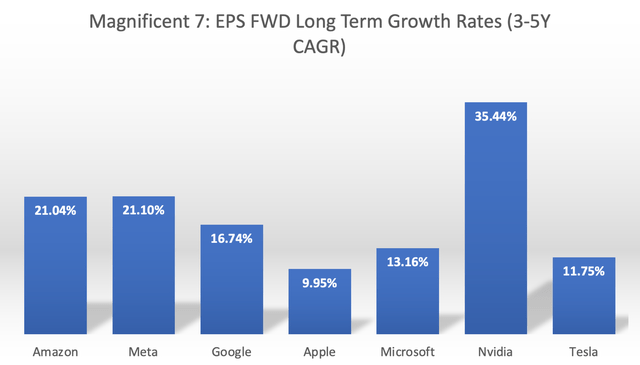

After Nvidia’s splendid EPS growth rate projection of 35.44%, Amazon and Meta have the highest expected earnings growth rates out of the Magnificent 7, both above 21%.

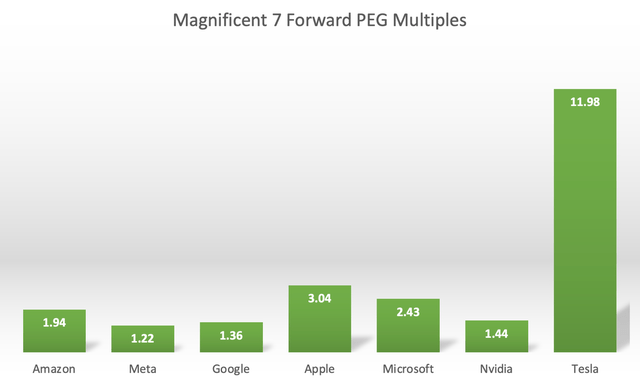

Now adjusting each stock’s Forward P/E multiple by its predicted EPS growth rate gives us the following Forward PEG ratios.

Nexus Research, data compiled from Seeking Alpha

For context, a Forward PEG multiple of 1x would imply that a stock is trading around its fair value, though it is not unusual for technology company shares to trade at premium valuations.
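As a rough sketch of how the ratio is calculated, the snippet below divides a Forward P/E multiple by an expected EPS growth rate expressed in percent. The inputs are rounded assumptions taken from the article’s wording ("just over 40x" and growth "above 21%"), not the exact figures behind the charts, so the output only approximates the published ratio. The function name `forward_peg` is a placeholder.

```python
def forward_peg(forward_pe: float, eps_growth_pct: float) -> float:
    """Forward PEG = Forward P/E divided by expected annual EPS growth (in percent)."""
    if eps_growth_pct <= 0:
        raise ValueError("PEG is not meaningful for zero or negative growth")
    return forward_pe / eps_growth_pct

# Rounded, illustrative inputs (assumptions, not the charts' exact data):
# "just over 40x" forward earnings and an EPS growth rate "above 21%".
approx_peg = forward_peg(40.0, 21.0)
print(f"Approximate Forward PEG: {approx_peg:.2f}x")

# A stock whose P/E equals its growth rate sits at the 1x "fair value" mark.
print(f"Fair-value reference: {forward_peg(20.0, 20.0):.2f}x")
```

With these rounded inputs the result lands slightly below the ratio reported for Amazon, which is expected, since both the actual multiple and the actual growth rate are a little higher than the rounded values used here.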

Now relative to its chief cloud rival Microsoft, which trades at 2.43x, Amazon stock is a cheaper investment choice at 1.94x, although both are considerably more expensive than Google, which is trading much closer to fair value at 1.36x.

Keep in mind, however, that while the cloud segments of each of these companies are considered some of the early winners of this AI revolution, the stock price performances and valuations are also impacted by the other business units.

Google stock is trading at such a cheap valuation multiple because of investors’ concerns around its core Search ads business in the era of generative AI. And in a previous article from a few months ago, we discussed similar concerns around Amazon’s own e-commerce business in this new era, particularly amid the significant slowdown in its advertising revenue.

Nonetheless, with AWS still constituting the majority (60%) of Amazon’s total operating income, the bullish growth prospects around the cloud segment should continue to buoy stock price performance going forward.

Amazon stock remains a ‘buy’.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
