Summary:

- While Microsoft Azure was initially considered to be the leading cloud provider in the AI race, it may now be turning into a laggard due to a big competitive disadvantage.

- While investors are shrugging off the underwhelming Azure growth rates as a ‘demand outstripping supply capacity’ situation, it is not actually a positive considering the serious weakness beneath the surface.

- AWS and Google Cloud are way ahead of Azure in offering custom chips, which is turning out to be a vital competitive advantage as enterprises seek more cost-efficient AI solutions.

Kevin Dietsch/Getty Images News

At the onset of this AI revolution, Microsoft (NASDAQ:MSFT) Azure had the clear lead position thanks to its bet on OpenAI paying off. In fact, there was even speculation swirling that its market share could eventually overtake that of Amazon’s (AMZN) AWS. That narrative has since turned on its head, with AWS savvily and aggressively catching up, capitalizing on the shortcomings of Microsoft-OpenAI’s closed-source models.

In the previous article covering Microsoft Azure, we discussed the growing threat from open-source models, particularly from Meta Platforms’ (META) Llama series, which have caught up significantly in performance capabilities, undermining the appeal of the GPT models available exclusively through Azure’s OpenAI service. We also delved into Nvidia’s growing endeavor to facilitate on-premises AI solutions, mitigating the need for enterprises with strict data privacy needs to migrate to the cloud, subverting the growth prospects for all cloud providers, including Microsoft Azure.

And in the most recent article on Microsoft, we covered the underwhelming outlook for AI PCs until “killer AI applications” arise that make these new devices genuinely worth buying.

But for now, the cloud segment remains the main driver of stock price performance. Azure is losing market share, which could potentially get worse due to its shortcomings in the custom chips space. MSFT remains a ‘sell’.

Microsoft Azure no longer leading AI race

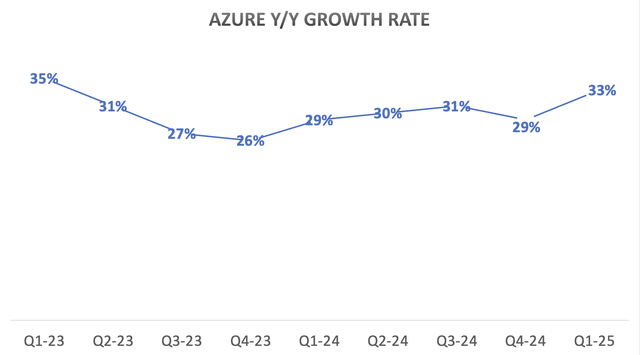

In Q1 FY2025, Azure delivered year-on-year revenue growth of 33%, which once again underwhelmed a market that had been expecting faster growth from the cloud giant.

Nexus Research, data compiled from company filings

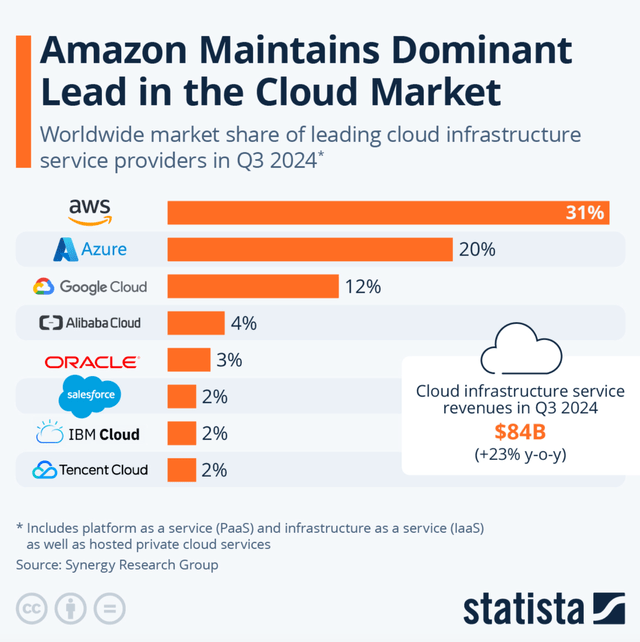

In fact, the software giant lost another 3 percentage points of cloud market share last quarter, bringing its cumulative loss over the past three quarters to 6 percentage points. Over the same period, AWS’s market share has remained stable at 31%, while Google Cloud’s share grew by 2 percentage points to 12%.

Statista, Synergy Research Group

Furthermore, the guidance for Azure’s growth in Q2 FY2025 disappointed investors yet again.

In Azure, we expect Q2 revenue growth to be 31% to 32% in constant currency, driven by strong demand for our portfolio of services…We expect the contribution from AI services to be similar to last quarter, given the continued capacity constraints as well as some capacity that shifted out of Q2.

…

The supply pushout, as Satya said, was third parties that are delivering later than we had expected, that get pushed mainly into the second half of the year and in general, Q3. So that’s third parties where we have tended to buy supply inclusive of kits so it’s complete end-to-end third-party delivery.

– CFO Amy Hood, Microsoft Q1 FY2025 earnings call

Indeed, Microsoft remains heavily dependent on third-party suppliers, particularly when it comes to semiconductors.

For a long time, Azure was the only major cloud provider without its own custom chips, until it finally introduced the ‘Cobalt’ CPU and ‘Maia’ AI accelerator in November 2023. On the last earnings call, one year after their introduction, CEO Satya Nadella offered an update on the custom silicon side.

At the silicon layer, our new Cobalt 100 VMs are being used by companies like Databricks, Elastic, Siemens, Snowflake, and Synopsys to power their general-purpose workloads at up to 50% better price performance than previous generations.

By contrast, Nadella did not offer a similar customer list or price-performance statistics for Microsoft’s line of Maia accelerators. Instead, he emphasized the availability of Nvidia’s latest chips on the GPU side.

We offer the broadest selection of AI accelerators, including our first-party accelerator, Maia 100 as well as the latest GPUs from AMD and NVIDIA. In fact, we are the first cloud to bring up NVIDIA’s Blackwell system with GB200-powered AI servers.

Arguably, GPUs are the main component for running AI computations, and based on Nadella’s remarks, it sounds like Microsoft is still heavily dependent on Nvidia’s chips to facilitate AI workloads, given the lack of traction for its own in-house silicon.

This plight is in stark contrast to the positions of rivals Amazon and Google, which have been designing and offering their own chips for many years now.

Amazon’s Trainium chips for AI training workloads have been available to AWS customers since 2021, while its Inferentia silicon launched as early as 2019.

while we have a deep partnership with NVIDIA, we’ve also heard from customers that they want better price performance on their AI workloads. As customers approach higher scale in their implementations, they realize quickly that AI can get costly. It’s why we’ve invested in our own custom silicon in Trainium for training and Inferentia for inference. The second version of Trainium, Trainium2 is starting to ramp up in the next few weeks and will be very compelling for customers on price performance. We’re seeing significant interest in these chips, and we’ve gone back to our manufacturing partners multiple times to produce much more than we’d originally planned.

– CEO Andy Jassy, Amazon Q3 2024 earnings call

So while Microsoft Azure’s growth is being held back by third-party supply constraints and barely any demand for its nascent Maia accelerators, AWS is boasting about having to procure more and more supply of its own chips in response to growing customer demand. This essentially raises the risk of AWS capturing more market share by attracting cloud customers that Azure did not have sufficient capacity to serve.

Now to allay investor concerns, Microsoft’s executives did signal that Azure’s growth rate should pick up pace as available supply expands in the second half of FY2025.

in H2, we still expect Azure growth to accelerate from H1 as our capital investments create an increase in available AI capacity to serve more of the growing demand.

– CFO Amy Hood, Microsoft Q1 FY2025 earnings call

But the risks aren’t limited to supply availability. Even as Microsoft gets hold of more chips from Nvidia and AMD, there is still the issue of third-party hardware dependency curbing Azure’s ability to offer optimum price-performance to cloud customers.

On the past several Amazon earnings calls, CEO Andy Jassy has repeatedly highlighted how customers are demanding greater inferencing cost efficiencies, given that running generative AI computations is costly. There is no doubt that Azure cloud customers are also seeking more and more cost-efficient AI solutions.

The key difference is that AWS was clearly well prepared to serve cloud customers’ need for better price-performance by driving greater adoption of its own in-house silicon, while Azure remains heavily dependent on Nvidia’s GPUs.

Customers can expect to pay about 40% less than they would using other hardware, said Dave Brown, vice president of AWS compute and networking services.

– The Wall Street Journal (emphasis added)

And then of course there is Google, which holds the lead in designing in-house chips, having offered its custom Tensor Processing Units (TPUs) to cloud customers since 2018. Before that, Google had been deploying TPUs for internal workloads since 2015, and for years it has proclaimed itself an AI-first company, from the silicon all the way up to the end-user software applications, an approach that is clearly paying off.

We are now on the sixth generation of TPUs known as Trillium and continue to drive efficiencies and better performance with them.

…

On the TPU front, … I just spent some time with the teams on the road map ahead. I couldn’t be more excited at the forward-looking road map, but all of it allows us to both plan ahead in the future and really drive an optimized architecture for it. And I think because of all this, both we can have best-in-class efficiency, not just for internal at Google, but what we can provide through cloud, and that’s reflected in the growth we saw in our AI infrastructure and GenAI services on top of it.

– CEO Sundar Pichai, Alphabet Q3 2024 earnings call (emphasis added)

Both AWS and Google Cloud have worked for years to drive deep integrations between their custom-designed chips, their data center servers, and their software stacks, culminating in operational cost efficiencies that can be passed on to cloud customers. As a result, the two rivals are better positioned to lure enterprises looking for greater choice and optimum price-performance, while Microsoft Azure remains dependent on general-purpose chips from third parties like Nvidia and AMD.

It is no surprise then that AWS and Google Cloud have been able to better hold on to their cloud market shares, while Azure has lost market share for three consecutive quarters.

And this trend could indeed continue, given that one year after the introduction of its own Maia accelerators, Microsoft still doesn’t have a customer deployment list to boast about, while Amazon’s and Google’s chips already benefit from a virtuous network effect that continues to attract more and more enterprises, as explained in a previous article on Google Cloud’s strengths:

In order for Google’s TPUs to commensurately challenge Nvidia’s GPUs, it is vital to trigger a network effect around the platform, whereby the more developers build applications, tools and services around the chips, the greater the value proposition of the hardware will become, which will be conducive to more and more cloud customers deciding to use the TPUs. And the larger the installed base of cloud customers deploying Google’s custom chips for various workloads, the greater the incentive for third-party developers to build even more tools and services around the TPUs, enabling them to generate sufficient income from selling such services to the growing user base of Google’s TPUs.

Now in this endeavor to cultivate a network effect and subsequently a flourishing ecosystem around the TPUs, Google recently hit a home run amid the revelation that Apple (AAPL) used Google’s TPUs to train its own generative AI models as part of ‘Apple Intelligence’.

So the uphill battle for Microsoft Azure is getting steeper, undermining the rate at which it can take market share from Google Cloud and Amazon’s AWS going forward.

Considering the bullish side

Now, despite Azure’s shortcomings on the custom chips front, the cloud provider remains strongly positioned to capitalize on the AI revolution over the long term.

with Azure AI, we are building an end-to-end app platform to help customers build their own Copilots and agents. Azure OpenAI usage more than doubled over the past six months

In fact, while all the major cloud providers now offer a generative AI-powered coding assistant for developers, Microsoft appears to have a lead here with its GitHub Copilot service, compared to Google’s Gemini coding assistant and Amazon Q.

Microsoft GitHub Copilot was the overwhelming choice used for AI-based auto-programming tools for developers at 65%.

– Seeking Alpha News on a UBS report surveying 130 companies

Microsoft is the only major cloud provider that has been offering quantitative growth statistics for its coding assistant on earnings calls, and now is further building upon that lead through agentic capabilities.

Now on to developers. GitHub Copilot is changing the way the world builds software. Copilot enterprise customers increased 55% quarter-over-quarter as companies like AMD and Flutter Entertainment tailor Copilot to their own code base. And we are introducing the next phase of AI code generation, making GitHub Copilot agentic across the developer workflow. GitHub Copilot Workspace is a developer environment which leverages agents from start to finish so developers can go from spec to plan to code all in natural language.

– CEO Satya Nadella, Microsoft Q1 FY2025 earnings call (emphasis added)

Easing the coding process for developers is key to inducing greater development on the cloud platforms. Microsoft, followed by Google, has the lead here over chief rival Amazon.

The investment case for Microsoft

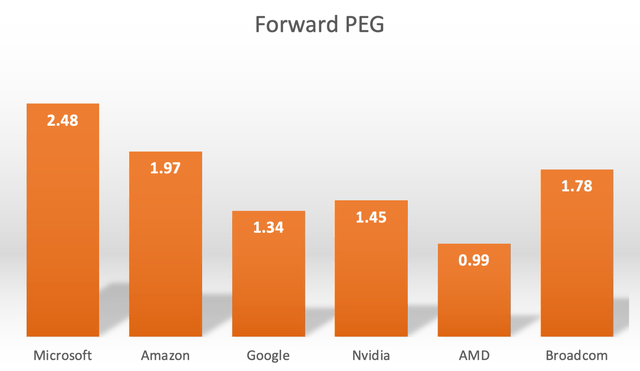

MSFT currently trades at 32.60x forward earnings. Although Forward PE multiples are widely used, a more thorough valuation metric is the Forward Price-Earnings-Growth (Forward PEG) multiple, which divides the Forward PE ratio by the anticipated earnings growth rate going forward.

Nexus Research, data compiled from Seeking Alpha

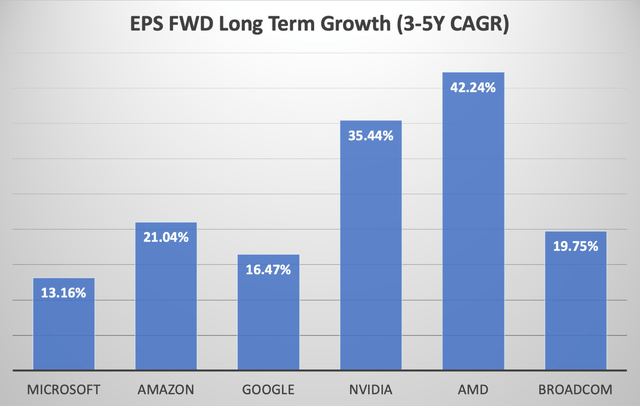

Top cloud providers and semiconductor companies are considered the ‘picks and shovels’ of this AI revolution, and out of the key AI players, MSFT has the lowest projected Earnings Per Share (EPS) growth rate.

Now, adjusting each stock’s Forward PE ratio by its expected EPS growth rate gives us the following Forward PEG multiples.

Nexus Research, data compiled from Seeking Alpha

Relative to other ‘picks and shovels’ stocks that offer lucrative exposure to the AI revolution, MSFT is the most expensive, trading at 2.48x Forward PEG.
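To make the arithmetic behind this multiple concrete, here is a minimal sketch of the Forward PEG calculation, assuming the common convention of dividing the Forward PE by the expected EPS growth rate expressed in percent. The function names are illustrative, and the implied growth rate is backed out from the two MSFT figures quoted in this article rather than taken from the underlying data, whose exact growth horizon is not stated here.

```python
def forward_peg(forward_pe: float, eps_growth_pct: float) -> float:
    """Forward PEG = Forward PE divided by expected EPS growth (in percent)."""
    if eps_growth_pct <= 0:
        raise ValueError("PEG is not meaningful for zero or negative growth")
    return forward_pe / eps_growth_pct


def implied_growth_pct(forward_pe: float, peg: float) -> float:
    """Back out the EPS growth rate (in percent) implied by a PE and a PEG."""
    if peg <= 0:
        raise ValueError("PEG must be positive")
    return forward_pe / peg


# Figures quoted in the article for MSFT.
msft_forward_pe = 32.60
msft_forward_peg = 2.48

# Derived here, not stated in the article: the growth rate implied by the two figures.
msft_growth = implied_growth_pct(msft_forward_pe, msft_forward_peg)
print(f"Implied EPS growth: {msft_growth:.1f}%")

# Round trip: feeding the implied growth back in recovers the quoted PEG.
print(f"Forward PEG: {forward_peg(msft_forward_pe, msft_growth):.2f}x")
```

Under these assumptions, the quoted multiples imply expected EPS growth of roughly 13%, which is why a Forward PE of about 33x translates into a PEG well above 2x.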

Given that the company’s initial edge through OpenAI is fading away amid the rise of open-source models, coupled with the fact that it is way behind Google Cloud and Amazon’s AWS in custom chip offerings, it is difficult to justify such a premium valuation over its tech peers.

Microsoft stock remains a ‘sell’.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
