Summary:

- Nvidia’s moat faces pressure from startups, Broadcom, AMD, and Big Tech’s in-house AI chips, with inference markets diversifying and startups gaining niche traction.

- U.S. restrictions and China’s push for domestic AI chips cut Nvidia’s China revenue share from 24.6% in 2022 to 12.2% in 2025, weakening growth prospects.

- Despite a $5.83T EV forecast for January 2027, slowing growth and cyclical risks could spur volatility; the current EV of $3.13T offers a 24.21% margin of safety for now.


As someone who has studied NVIDIA (NASDAQ:NVDA) (NEOE:NVDA:CA) for years (since its venture into AI), I have developed deep and nuanced expertise in forecasting and understanding the stock. For some time, I have predicted a revenue contraction for the company between 2028 and 2031. That thesis has been well received, and my readers already know the details of my forecast, including that it is the primary reason for my prior Hold rating.

This analysis is a deeper dive into the competitive threats growing in the diversifying AI chip market, which will likely be the most significant contributor (other than tapering demand for AI data center expansion) to Nvidia’s impending revenue contraction. I should caveat that a revenue contraction for Nvidia in the next three to five years is not certain: the company has many areas where it could sustain growth, including AI factories, inference demand, and a potentially burgeoning robotics opportunity in the next five to 10 years.

However, to stay probabilistic and conservative, I will assume that Nvidia will follow typical semiconductor cyclical dynamics. Below are the threats to Nvidia’s moat that are currently surfacing.

Since my last Nvidia thesis in Q3, the stock has dropped 3.25% in price. However, I see this minor decline as temporary, and I expect strong growth to continue over the next two years at least. I have upgraded my rating in this analysis based on a two-year timeframe; read on to find out why.

The Diversifying AI Chip Market

First, it’s worth getting the lay of the land for the total market. MarketsandMarkets anticipates that the global AI chip market will grow from $123.16 billion in 2024 to $311.58 billion by 2029, a compound annual growth rate of 20.4%. Nvidia likely holds ~70% of the broad AI accelerator market, but about 95% of the GPU market used for AI training in data centers. Therefore, it’s fair to say Nvidia has an unprecedented moat in what is currently the world’s hottest industry.
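As a quick check on the market forecast above, the compound annual growth rate can be recomputed from the two MarketsandMarkets figures. This is a minimal Python sketch; the `cagr` helper is a hypothetical name introduced here, and the five-year span (2024 to 2029) is taken from the forecast dates.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# MarketsandMarkets figures quoted above, in billions of USD (2024 -> 2029)
market_2024 = 123.16
market_2029 = 311.58

growth = cagr(market_2024, market_2029, years=5)
print(f"Implied CAGR: {growth:.1%}")  # Implied CAGR: 20.4%
```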

However, Broadcom is emerging as a competitor, attempting to take market share from Nvidia by focusing on custom application-specific integrated circuits (‘ASICs’) for hyperscalers like Google (GOOGL) (GOOG) and Amazon (AMZN). Broadcom’s AI revenue grew by 220% year-over-year in 2024, showing just how much ground it is gaining.

Then there is the other obvious major competitor, Advanced Micro Devices (AMD). You may not know, however, that AMD holds only a 5-7% share of the AI accelerator market, despite offering market-leading products like the MI300X and MI300A accelerators. The MI300X offers greater memory capacity than Nvidia’s H100, giving AMD a distinct edge in the memory-intensive large language model market. Still, to compete with Nvidia’s scale and power, AMD has to focus on niche, underserved, and cheaper parts of the market. Moreover, ROCm, AMD’s alternative to CUDA, still lags behind CUDA despite improving rapidly and being cost-effective.

Then there’s Intel (INTC), which, while it shouldn’t be underestimated, is frankly not competing at the level of Nvidia and AMD at the moment, given its organizational disarray following the recent ousting of CEO Pat Gelsinger and leaks from the company that it may spin off its foundry division in the future. Intel’s Gaudi accelerators are marketed as cost-effective alternatives to Nvidia’s graphics processing units (‘GPUs’), but Intel fell short of its relatively modest $500 million revenue goal for Gaudi chips in 2024, primarily due to low adoption rates and substandard software quality.

Another exciting development is that major cloud providers, such as Google, Microsoft (MSFT), and Amazon, are developing in-house AI chips to reduce their reliance on Nvidia’s GPUs. Of these world-leading technology companies, Google was the first to develop custom AI chips: it launched its Tensor Processing Units (‘TPUs’) in 2015. While the first-generation TPU was used only for inference, Google has optimized TPUs for both training and inference since v2. Among hyperscalers’ in-house AI chips, Google’s TPUs hold approximately a 58% share, with Amazon’s Trainium second at potentially ~20%.

Amazon also deserves a lot of attention. I outlined its recent developments in my last thesis on the company: “Amazon Stock Is Too Expensive Amid Trainium3 Diversifying The AI Chip Market”. The current Trainium2 instances (Trn2) offer 30-40% better price performance than Nvidia’s GPU-based P5e and P5en instances. Inferentia2-based Inf2 instances offer up to 40% better price performance than comparable options, including GPU-based instances, while also improving throughput and latency.

In my opinion, Microsoft has received a little less attention than it deserves in the custom AI chip market from analysts, myself included. Its first in-house AI accelerator, Azure Maia, launched in late 2023 and is designed specifically for training and running AI models on Microsoft Azure. Maia was developed with OpenAI to optimize ChatGPT. Microsoft has also introduced the Cobalt central processing unit (‘CPU’) for general-purpose and memory-optimized cloud computing tasks.

All of these developments show how, at the macro level, Nvidia’s market share, and with it its moat, will inevitably narrow, because, quite rightly, everyone wants a piece of the enormous AI opportunity.

Nvidia investors should recognize that, at the very least, growth will continue to slow over the next two years, and a revenue contraction seems almost inevitable once the major AI training phase culminates, since the inference market that follows will be more diversified.

AI Chip Startups

I have discussed startups in the diversifying AI chip market before, in my thesis “Nvidia’s Moat Remains Unchallenged Despite Emerging Players’ Advanced AI Chips”. For an in-depth discussion of these startups, see that article from its second paragraph onward. I won’t recap the same points here, but I will name the core emerging players, Groq, Cerebras, SambaNova, and Graphcore (the last of which I did not mention previously), and offer some new insights. Despite the hardware innovations these players have delivered to lower latency and increase inference or training efficiency, it is difficult for startups to gain traction because Nvidia’s CUDA ecosystem is so deeply entrenched with developers.

Let’s briefly analyze what the future may look like for these players, because it’s important to understand how Nvidia’s moat will likely remain intact but narrow somewhat as new entrants vie for its market share. As the AI chip market begins to shift from training to inference, I expect the newer players mentioned here to have an opportunity to consolidate their positions. That said, Nvidia’s position in the inference market shouldn’t be underestimated, though inference is much less of an immediate revenue compounder for Nvidia than the training upcycle. For Nvidia, scaling inference revenue will be a longer, more protracted journey as AI proliferates through industries and technologies over the next few decades. Yet the inference market is likely to be much more diversified than the training market because adoption will be slower (less of an arms race among Big Tech), allowing domain-specific chips, like those developed by startups, to gain much more prominence in specific industries. Therefore, startups pose a much more significant threat to Nvidia in inference over the long term than in the near term, while training remains the main revenue generator in the AI chip market.

Chinese AI Chip Developments

China is prioritizing AI and semiconductor self-sufficiency with initiatives like the “New Generation Artificial Intelligence Development Plan” and “Made in China 2025”. As an example of the massive funding taking place in China, the $47.5 billion China Integrated Circuit Industry Fund (Fund 3) intends to fortify China’s domestic semiconductor supply chain. As a Western citizen and investor in U.S. stocks, it is important not to lose sight of the progress being made overseas, and to understand different economies and perspectives, even if one doesn’t fully agree with the circumstances or the multipolarity that is evolving.

Huawei’s HiSilicon division produces the Ascend series of AI processors, which includes the Ascend 910B, designed for high-performance training and inference workloads and seeing growing demand in data centers and autonomous systems. Baidu’s (BIDU) Kunlun 2 chip powers applications like deep learning and autonomous systems, and Alibaba (BABA) and Tencent (OTCPK:TCEHY) are investing heavily in AI infrastructure, including custom chips for their cloud platforms. Like the U.S., China has emerging players, namely Cambricon, Moore Threads, and Enflame Technology, which are developing GPUs and other AI accelerators that target both training and inference applications.

As a result of these developments, China’s share of Nvidia’s revenue has already dropped significantly, from 24.6% in early 2022 to just 12.2% by mid-2025 (Fiscal 2025). A big reason for this is U.S. export restrictions on advanced chips like the A100 and H100, which forced Nvidia to offer downgraded versions in China to comply with regulations. Moreover, the Chinese government is informally discouraging companies from purchasing Nvidia’s H20 chips and steering them toward Huawei and Cambricon instead, part of the broad, slow, and so far relatively diplomatic decoupling that appears to be taking place between China and the U.S. As China is one of the largest markets for AI chips globally ($7 billion), a further reduction of sales there over time will inevitably hurt Nvidia.

Valuation

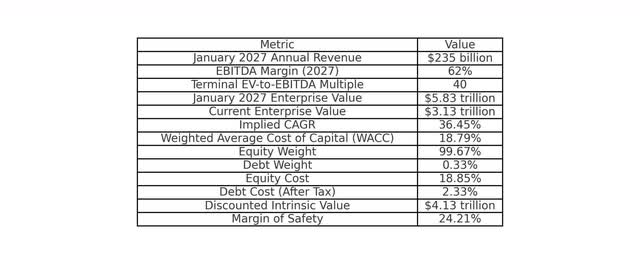

At the moment, I favor a two-year valuation model for Nvidia because I believe negative sentiment could build in 2027 and 2028 around an impending revenue contraction. This might seem quite defensive, but I invest to survive so I can thrive over the long term; excessive risk is not my style. I estimate that Nvidia will generate revenue of $235 billion in the fiscal year ending January 2027, with an EBITDA margin of 62%. Additionally, I expect the company’s EV-to-EBITDA multiple to settle below its five-year average forward multiple of 49 due to slowing growth, so my terminal multiple is 40. Applying that multiple to forecast EBITDA ($235 billion × 62% × 40) gives a January 2027 enterprise value forecast of $5.83 trillion. The current enterprise value is $3.13 trillion, so the implied two-year CAGR is 36.45%.
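The EV forecast above is a simple multiple-based calculation: forecast revenue times the EBITDA margin gives EBITDA, and EBITDA times the terminal EV-to-EBITDA multiple gives enterprise value. The Python sketch below reproduces it, assuming a two-year horizon to January 2027 (consistent with the two-year timeframe used here) for the CAGR; the function names are hypothetical, and figures are in billions of USD.

```python
def forecast_ev(revenue: float, ebitda_margin: float, ev_to_ebitda: float) -> float:
    """Enterprise value implied by applying an EV/EBITDA multiple to forecast EBITDA."""
    ebitda = revenue * ebitda_margin
    return ebitda * ev_to_ebitda


def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1


# Author's inputs, in billions of USD
revenue_fy_jan_2027 = 235.0   # revenue for the fiscal year ending January 2027
ebitda_margin = 0.62
terminal_multiple = 40.0      # EV-to-EBITDA, below the five-year average of 49
current_ev = 3130.0           # $3.13 trillion

ev_2027 = forecast_ev(revenue_fy_jan_2027, ebitda_margin, terminal_multiple)
growth = cagr(current_ev, ev_2027, years=2)  # assumed two-year horizon

print(f"Forecast EV: ${ev_2027 / 1000:.2f}T")  # Forecast EV: $5.83T
print(f"Implied CAGR: {growth:.2%}")          # Implied CAGR: 36.45%
```

Because the forecast is a product of three inputs, a 10% change in any one of them (revenue, margin, or multiple) moves the EV forecast by 10%.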

Nvidia’s weighted average cost of capital (WACC) is 18.79%, with an equity weight of 99.67% and a debt weight of 0.33%; the cost of equity is 18.85%, and the after-tax cost of debt is 2.33%. Discounting my January 2027 enterprise value estimate back to the present day, using the WACC as the discount rate, gives an implied intrinsic value of $4.13 trillion. As the current enterprise value is $3.13 trillion, the margin of safety is 24.21%, based on the traditional equation ‘Margin of Safety = (Intrinsic Value − Market Price) / Intrinsic Value’.
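The discounting and margin-of-safety steps can be sketched the same way. Recomputing the WACC from the rounded weights and costs gives roughly 18.80%, a rounding-level difference from the quoted 18.79%; the sketch discounts at the quoted rate over an assumed two-year horizon. Function names are hypothetical, and values are in billions of USD.

```python
def wacc(equity_weight: float, cost_of_equity: float,
         debt_weight: float, after_tax_cost_of_debt: float) -> float:
    """Weighted average cost of capital from capital weights and component costs."""
    return equity_weight * cost_of_equity + debt_weight * after_tax_cost_of_debt


def present_value(future_value: float, rate: float, years: float) -> float:
    """Discount a single future value back to today at a constant annual rate."""
    return future_value / (1 + rate) ** years


def margin_of_safety(intrinsic_value: float, market_value: float) -> float:
    """(Intrinsic Value - Market Price) / Intrinsic Value."""
    return (intrinsic_value - market_value) / intrinsic_value


# Inputs quoted in the article (values in billions of USD)
computed_wacc = wacc(0.9967, 0.1885, 0.0033, 0.0233)
stated_wacc = 0.1879                 # author's quoted WACC, used for discounting
ev_jan_2027 = 235.0 * 0.62 * 40.0    # revenue x EBITDA margin x terminal multiple
current_ev = 3130.0

intrinsic = present_value(ev_jan_2027, stated_wacc, years=2)  # assumed two-year horizon
mos = margin_of_safety(intrinsic, current_ev)

print(f"Recomputed WACC: {computed_wacc:.2%}")       # Recomputed WACC: 18.80%
print(f"Intrinsic value: ${intrinsic / 1000:.2f}T")  # Intrinsic value: $4.13T
print(f"Margin of safety: {mos:.2%}")                # Margin of safety: 24.21%
```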

Nvidia Valuation (Author’s Model)

Conclusion: Moderate Buy

I am upgrading my rating here, which might seem counterintuitive given the Hold ratings in my previous theses, but my two-year timeframe (versus the three-year timeframe in my last model, which showed a fair valuation) opens up an investment worth considering.

However, I reiterate: be careful. As January 2027 nears, the stock could become very volatile; on the other hand, it could stay stable through 2028 and continue growing. It is too early to tell, and I will update readers in due course. Still, the market is diversifying, Nvidia’s revenue growth is slowing, and AI training demand will not stay at this intensity forever. Therefore, Nvidia faces a revenue contraction at some point in the next few years, unless market dynamics shift in time to position it as a continuing compounder in inference, robotics, and AI factories (though I fail to see how this would allow it to sustain ~$250 billion in annual revenue, I’m happy to be proved wrong).

Editor’s Note: This article discusses one or more securities that do not trade on a major U.S. exchange. Please be aware of the risks associated with these stocks.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.