Summary:

- Google has unveiled open access to Bard in the U.S. and UK on a rolling basis beginning Tuesday.

- The development continues to underscore Google’s shift from a conservative to catch-up stance on the deployment of speculative technologies to capture current momentum in generative AI.

- The following analysis will focus on both opportunities and threats facing Google as generative AI gains momentum.

After reminding markets that Alphabet Inc. (NASDAQ:GOOG / NASDAQ:GOOGL) (“Google”) is an “AI-first company,” and committing to making large language models like LaMDA available to the public within the “weeks and months” following its fourth quarter earnings call in early February, the tech giant has delivered. About a month and a half after the initial introduction of Bard – Google’s answer to Microsoft-backed (MSFT) OpenAI’s ChatGPT – the LaMDA-based chatbot has now been made available to users across the U.S. and UK on a rolling basis.

The development complements Google’s efforts to bring to light its many investments in AI-enabled innovations over the past decade, which is critical for the tech giant to regain traction on its aspirations of being an AI-first company amid the heated arms race in generative AI. There are growing concerns over potential market share erosion and margin compression with the deployment of generative AI capabilities like ChatGPT and Bard. Yet the stock’s current discount to both its historical levels and mega-cap Internet peers, paired with the incremental value of new opportunities generated by implementing generative AI capabilities across its existing portfolio of offerings, is likely more than sufficient to compensate for the related risks and to unlock fresh upside potential over time.

Bard Incoming

Since ChatGPT’s debut to the public in November, others built on the same technology have followed, including Snap’s (SNAP) My AI and Salesforce’s (CRM) Einstein GPT, in addition to non-GPT-based standalone developments like Baidu’s (BIDU) Ernie Bot and Google-backed Anthropic’s Claude. The latest to enter the chat is Google’s very own Bard, which was introduced in early February – best remembered for its blunder in answering a question wrong during a live demo at its debut.

*The first image of exoplanets was taken by the “Very Large Telescope” in 2004.

The LaMDA-based chatbot is now available to the general public across the U.S. and UK – users can sign up on a waitlist and be granted access on a rolling basis. Google was sure to note that the chatbot is currently an “early experiment,” with the latest availability of open access to Bard aimed at “[getting] feedback from more people.” This is consistent with our discussion in the previous coverage on the stock, whereby Google is trading its historical conservatism on the roll-out of speculative technologies for including the public in its AI journey, in an attempt to stem market share loss to rival Microsoft.

Looking ahead, continued improvements to Bard and the chatbot’s ultimate deployment and monetization will not only be critical to stemming Google Search’s market share loss to rivals like ChatGPT-infused Bing, but also drive incremental demand for Google’s other generative AI capabilities via cloud. Specifically, while conversational AI services have recently taken the spotlight, generating more buzz than almost any other legacy AI development and turning a page for the technology’s cross-industry adoption from concept to reality, Google has long been integrating all sorts of AI-enabled solutions across its offerings:

1. Search

While Bard will be critical for addressing rival Microsoft’s ChatGPT-Bing head-on, and ensuring its leadership in online search and search ads is safeguarded, it is not the platform’s first major AI breakthrough. As discussed in our previous coverage, Google’s BERT – also an LLM – has been a key driver of Search’s accuracy and performance since its integration with the platform in 2019. BERT is trained to link text in traditional search queries with their underlying context to “improve web page rankings,” which inadvertently also drives better performance and conversion for search ads.

But before BERT, Google had already been investing in and implementing other AI/ML-enabled solutions in Search, which have been critical to making it the leader it is today. These include Neural Matching, a predecessor to BERT that was implemented in Search in 2018 to help improve understanding of queries and better match them with web page results.

And the introduction of Multitask Unified Model (“MUM”) during Google’s I/O event in 2021, which enables multi-modal search across text, audio, and imagery in 75 languages, is expected to push the accuracy of responses further. MUM is trained to perform “many tasks at once,” making it “1,000x more powerful than BERT” – for instance, it is equipped to understand the context of queries better than BERT by having a “more comprehensive understanding of information and world knowledge than previous models,” and even prepare in advance for topics related to the original query.

Entering 2023, Bard – which is based on the LaMDA conversational AI introduced in 2021 – is now integrated into Search on an experimental basis. The pace of AI-enabled search advancements exhibited by Google over the past half decade alone, and what they are capable of enabling in the future, continues to underscore the company’s prowess in this field, with little sign that it is falling behind on either the trend or the technology’s advancement.

2. Advertising

Google’s cutting-edge AI developments can also be found deeply integrated into the DNA of its advertising business. Take Performance Max (“PMax”), for example, which was introduced in 2021. The ad format utilizes AI/ML to optimize ad placements for advertisers, thus improving reach and performance, as well as the economics of advertising. PMax’s value proposition is simple – advertisers can easily access Google’s ad inventory, and create ad campaigns by inputting their desired goals (e.g., budget, location, language, etc.), and Google will do the rest by distributing the ad accordingly across channels spanning “YouTube, Display, Search, Discover, Gmail, and Maps.” In addition to simplicity of usage, PMax is also smart – the format will help optimize ad placements over time and attract new customers that advertisers might not have otherwise reached, while also considering advertisers’ cost per action (“CPA”) and return on ad spend (“ROAS”) goals to drive placements accordingly.

And Bard stands to complement this model over the longer-term, especially if generative AI queries start to displace traditional search over time (discussed further in the sections below). Specifically, with conversational AI services gaining traction over time, the incremental engagement garnered will inevitably attract ad dollars away from traditional search platforms. With Bard now in testing and potential future deployment, Google is well on track to ensure adequate monetization and market share recapture if the shift in search trends does materialize over the longer-term.

3. Cloud

Known as the third largest public cloud provider, GCP still trails rivals Azure and AWS (AMZN) by wide margins. The unit is also not yet profitable, unlike rival platforms that have been benefiting from lucrative margins in the field for years. Yet, GCP appears to have fared better than its larger peers in recent quarters, posting consistent double-digit y/y growth in the 30%-range while the broader industry is warning of deceleration. And continued integration of AI-enabled solutions, as well as offerings catered to AI workloads, will be critical to sustaining the market share gains required for GCP’s trajectory to profitability.

In addition to BigQuery, GCP’s flagship AI/ML-enabled analytics tool for handling increasingly complex workloads, which was discussed in our previous coverage on the stock, Google’s continued vertical integration of in-house developed data center processors alongside its strides made in generative AI (e.g., LaMDA, Bard, etc.) will also help to reinforce the segment’s growth and scalability. Specifically, generative AI workloads are expected to further expand the total addressable market (“TAM”) in cloud computing over coming years. In addition to the LLMs that require substantial computing power (discussed here), subsequent applications built on them will also generate incremental demand for capacity over time. And the introduction of Bard and LaMDA – especially if Google models Microsoft’s strategy in monetizing OpenAI’s LLMs – will be key to attracting additional enterprise cloud spending generated from the incremental cloud capacity needed to support deployment of generative AI solutions. Not only will the distribution of access to Bard / LaMDA to developers generate an incremental revenue stream for GCP, it will likely also drive demand for adjacent cloud services and enable further scale towards profitability for the unit.

Generative AI: Threat or Opportunity?

With OpenAI’s ChatGPT accumulating more than 100 million users in just several months after its open access, it is only fair that Google’s investors are concerned about whether the company’s moat is at risk of being breached. Specifically, the rapid adoption of ChatGPT by day-to-day users and integration of OpenAI’s GPT models into existing platforms (e.g., Bing, Office 365, Dynamics 365, Salesforce Einstein GPT, Snapchat, etc.) have led to concerns over erosion – or even displacement, altogether – of Google’s market leadership in online search, which would put its core advertising business at risk. There are also worries about the impact of generative AI deployment on the company’s gross margins – OpenAI founder Sam Altman has recently commented that it costs on average “single-digit cents” per prompt on ChatGPT, while industry forecasts suggest generative AI queries could cost as much as 2x to 10x of traditional queries.

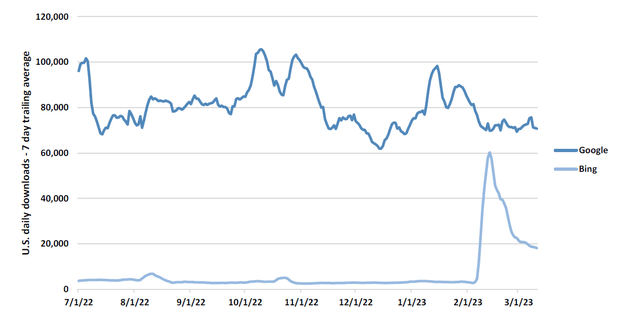

But the latest frenzy over generative AI appears to be more of an opportunity than a threat – especially for Google, as it has already spent much of the past decade preparing for the arrival of this very moment. In terms of market share erosion or displacement, Google has yet to experience any substantial impact on Search’s traffic or ad performance as a direct result of ChatGPT’s introduction. Recent data shows that daily Google downloads in the U.S. steadily ran up towards almost 100,000 in the weeks after ChatGPT’s debut in November 2022, before consolidating around 70,000 in recent months. Google’s download stats have not budged either since Bing’s debut of its ChatGPT-infused service in February, while the rival search platform’s spike in downloads as a result of the frenzy failed to sustain through the month.

Google vs. Bing Downloads (RBC Capital Markets, with data from Sensor Tower)

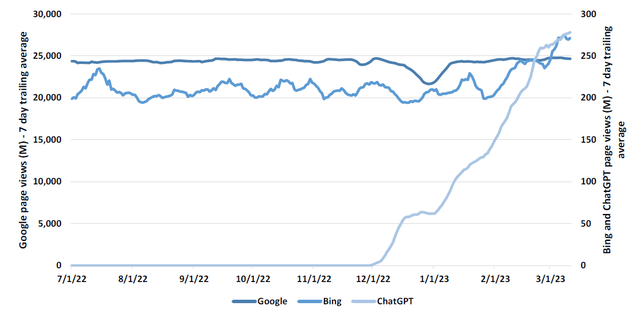

Visits to Google have also remained at about the 25 billion mark for daily average page views, with the exception of an expected seasonal dip during the December holiday season, despite incremental traffic observed at Bing and ChatGPT.

Google vs. Bing Web Page Traffic (RBC Capital Markets, with data from SimilarWeb)

Taken together, it appears the frenzy over generative AI queries has yet to displace traditional search on Google or erode its leading market share. This is largely thanks to Google’s solid foundation in commanding more than 95% share of online queries (versus Bing’s ~2%), bolstered by its AI prowess supporting efficiency of the platform behind the scenes. A complete shift in user preference to a direct query and single response search format will also take time – this is akin to the relatively little traffic on Google’s “I’m feeling lucky” option, which takes users to the first query response, as opposed to options in list format.

While the extent to which generative AI queries might cannibalize demand for traditional queries in the longer-term remains uncertain, the recent introduction of Bard – and potentially other solutions enabled by Google’s LLMs, like LaMDA and PaLM – essentially mitigates the ensuing concerns over substantial market share erosion or displacement. And if generative AI queries proceed as an incremental development to traditional search, Bard is also readily available to help Google capture share in that arena over the long-run.

With regard to gross margins, while generative AI queries currently prove more expensive than traditional queries due to the substantial compute intensity required to process them, Google’s sprawling market reach shows potential in driving a scale advantage capable of offsetting some of the said headwinds. Meanwhile, vertical integration of GCP alongside Google’s generative AI developments will also help to reduce related costs. As discussed in our previous coverage, Google’s in-house developed “Tensor Processing Units” have been key to driving GCP’s reputation in the AI/ML community. Specifically, the latest “Cloud TPU v4” is catered to handling large-scale complex workloads like LLMs and other transformer models in generative AI, offering up to 80% faster speeds in training them at less than half the cost of its predecessor, Cloud TPU v3:

Cloud TPU minimizes the time-to-accuracy when you train large, complex neural network models…that would have taken weeks to train on other hardware…Cloud TPU provides low-cost performance per dollar at scale for various ML workloads. And now Cloud TPU v4 gives customers 2.2x and ~1.4x more peak FLOPs per dollar vs Cloud TPU v3.

Source: cloud.google.com.

This will not only help Google scale its deployment of generative AI solutions like Bard and offset some of the burden that related compute intensity will place on its profit margins, but also potentially attract additional customers to migrate their incremental generative AI / transformer workloads to GCP as well. Google’s continued integration of other AI-enabled solutions (e.g., BERT, PMax, etc.) across its offerings has also contributed to a consistent track record of margin expansion in recent years, which further supports confidence in the company’s ability to overcome the incremental cost pressures ensuing from the deployment of Bard and other future generative AI solutions.

And as mentioned in the earlier section, Google has been investing aggressively in the development of generative AI over the past decade. This is further corroborated by the swift introduction and deployment of Bard following the viral sensation of ChatGPT, underscoring that the company has the tools in place to include Google users in its AI journey. Taken together with consistent top-line growth (ex-macro challenges in the near-term), it is unlikely that Google will have to shell out material incremental capex spend as a percentage of revenue in the foreseeable future, thus preserving the cash flow expansion required to sustain valuation upside.

The Bottom Line

While Microsoft, supported by OpenAI, has been first to debut mass-market usability of generative AI, it is certainly not the first to have invested in related developments, nor the only one with deployment-ready services up its sleeve. With Google being a close competitor capable of benefiting from the same, if not more, monetization strategies for generative AI, the latest momentum in the nascent technology serves as an opportunity for the company to establish a fresh trajectory of next-generation growth. And the swift deployment of Bard is expected to reinforce traction on Google’s developments in this subfield of AI, and draw attention to the multitude of new growth engines and incremental revenue streams enabled by related offerings down the road through cloud, search, advertising, and potentially other verticals.

With the stock now also trading at a discount to its historical levels and relative to its mega-cap internet peers – likely dragged by both looming macroeconomic headwinds and investors’ mispricing of the potential threats and opportunities facing Google’s future regarding generative AI developments – we remain optimistic about Alphabet Inc.’s longer-term prospects.

Disclosure: I/we have a beneficial long position in the shares of GOOG either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
