Summary:

- Nvidia Corporation and Advanced Micro Devices, Inc. are fabless chip designers whose stocks have become popular during the recent AI frenzy.

- I rate both Nvidia and AMD as Holds at current valuations.

- The AI frenzy has pushed both stocks to huge multiples, so I do not recommend buying, but these are great businesses that I do not recommend selling either.

- Both of these companies are releasing next-generation chips specifically designed for AI computing requirements.

- Despite AMD’s innovative next-gen chip, it doesn’t look set to dethrone Nvidia just yet.


Opening Discussion

Both NVIDIA Corporation (NASDAQ:NVDA) and Advanced Micro Devices, Inc. (NASDAQ:AMD) have built their businesses around fabless chip design and both are vying to ride the AI (Artificial Intelligence) wave.

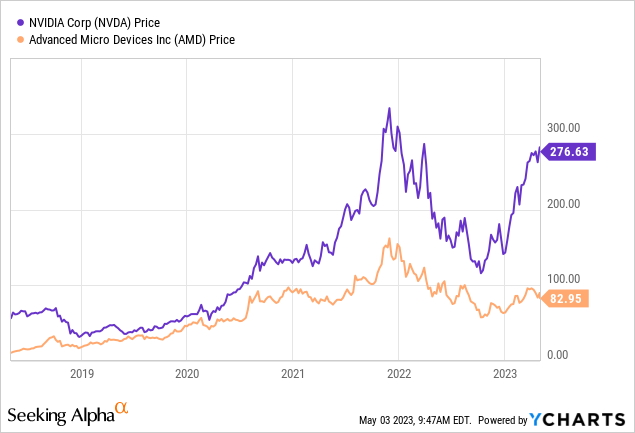

A look at price performance shows Nvidia as the clear victor, with AMD lagging by quite some margin despite being a close competitor as recently as 2020.

When looking at other financial metrics, we can see why: Nvidia has a significantly better net margin than AMD and a dominant share of the GPU market, where demand is being propelled by the AI boom and looks set to soar.

Valuations

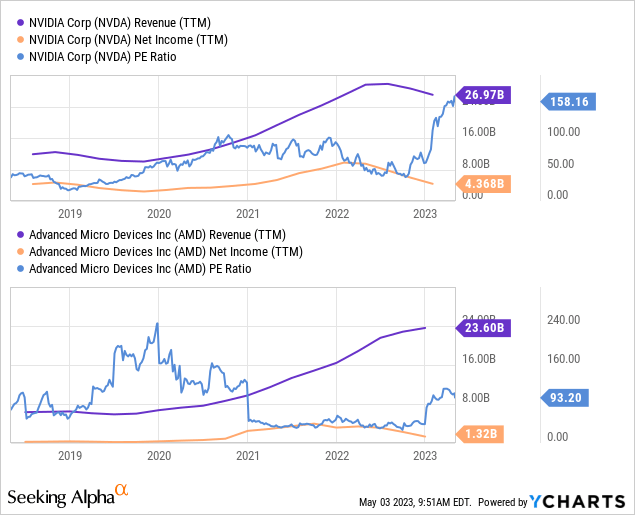

Both Nvidia and AMD are trading at questionable valuations. The recent run-up in NVDA has yielded a TTM P/E of about 160, while AMD comes in at a TTM P/E of about 107. Stocks bought at valuations this high seldom turn out to be profitable trades or long-term investments, so I recommend extreme caution in entering positions in either of these companies at current prices. Multiples are likely to contract at any hint of headwinds, as in AMD's recent slump on mixed demand signals.

Though NVDA has hung a bit tougher, it's still down. The current NVDA valuation will only yield a profitable investment over a 5-to-10-year period if there is near-perfect execution by management and a near-perfect macro environment, both of which are unlikely. Although NVDA management has been exceptional to date, the macro environment, and Taiwan risk in particular, poses a material threat to Nvidia's business model.
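To see why the bar is so high, here is a rough sketch of the arithmetic, not a forecast. If the P/E multiple contracts over the holding period, earnings growth has to make up for both the contraction and the return the investor wants. The exit P/E of 30 and the 10% target annual return below are illustrative assumptions, not figures from this analysis, and the function name `required_eps_growth` is hypothetical.

```python
def required_eps_growth(pe_now, pe_exit, years, target_return):
    """Annual EPS growth needed to earn `target_return` per year
    if the P/E multiple moves from `pe_now` to `pe_exit` over `years`.

    Price = P/E * EPS, so:
        (1 + r)^n = (pe_exit / pe_now) * (1 + g)^n
    Solving for g gives the expression returned below.
    """
    if pe_now <= 0 or pe_exit <= 0 or years <= 0:
        raise ValueError("P/E values and years must be positive")
    return (1 + target_return) * (pe_now / pe_exit) ** (1 / years) - 1


# Assumed inputs: TTM P/E of about 160 today (from the text above),
# a hypothetical exit P/E of 30, and a hypothetical 10% target return.
for years in (5, 10):
    g = required_eps_growth(pe_now=160, pe_exit=30, years=years, target_return=0.10)
    print(f"{years} years: EPS must grow about {g:.0%} per year")
```

Under these assumed inputs, earnings would need to compound at roughly 30% a year for a full decade just to deliver a 10% annual return, and faster still over five years. Different exit multiples change the number, but not the basic shape of the problem.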

My recommendation is to Hold both AMD and NVDA. AMD and NVDA stock prices will be acutely sensitive to any news about Taiwan other than the best-case scenario (no Chinese invasion). For that reason, I am opting to stay on the sidelines for now, perhaps waiting for Taiwan drama to present a generational buying opportunity in Nvidia. If the Taiwan rhetoric cools off a bit, I will begin dollar-cost averaging into Nvidia, which I view as the superior option between these two businesses. As long as the P/E is anywhere near its current level, I will not be making significant moves into Nvidia, but I remain open to buying bits and pieces at a time.

GPUs and AI

OpenAI's ChatGPT is the recent innovation in AI that has taken the internet by storm. By now, most people have either heard of or used ChatGPT themselves, and many have been extremely impressed with the results. ChatGPT is a neural network that specializes in conversational language, dubbed a large language model ("LLM"). LLMs work in much the same way any other computer program does: by transforming inputs into outputs. In an LLM, as in other neural networks, the program receives a set of inputs (a text prompt) and applies a series of weights to those inputs, which then yields the output (a text-based response).

This process requires an immense amount of data to move between the processor and RAM (Random Access Memory). Traditional CPUs are not well equipped for this level of computing, which is why Nvidia, the clear leader in discrete GPUs with a market share in excess of 80%, has been the subject of immense hype and frenzy recently. If CPUs are like sports cars transporting small amounts of data really quickly, GPUs are like dump trucks transporting huge amounts of data slowly. In a more general sense, CPUs perform 1x1 unit computations (scalar), while GPUs perform 1xN unit computations (for linear algebra nerds, that actually means (1xN)*(Nx1) matrix multiplication).

In other words, ChatGPT will receive a 1xN or NxN unit input matrix, multiply it by an Nx1 or NxN unit weighting matrix, and transform the result into the output. This is exactly the kind of workload GPUs are built for, and it is the reason Nvidia has garnered so much hype recently.
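The matrix arithmetic described above can be sketched in a few lines of plain Python. This is a toy illustration only: the numbers are made up, the helper names `dot` and `matmul` are hypothetical, and a real model chains many such multiplications with other operations in between.

```python
def dot(row, col):
    """(1xN) * (Nx1): N multiply-adds that produce a single number."""
    if len(row) != len(col):
        raise ValueError("row and column must have the same length")
    return sum(x * w for x, w in zip(row, col))


def matmul(a, b):
    """(MxN) * (NxP): M*P independent dot products.

    Because no dot product depends on another, they can all be
    computed at the same time, which is what parallel hardware exploits.
    """
    columns = list(zip(*b))  # transpose b so each column is easy to read
    return [[dot(row, col) for col in columns] for row in a]


inputs = [[1.0, 2.0, 3.0]]        # 1xN input (made-up values)
weights = [[0.5], [-1.0], [2.0]]  # Nx1 weights (made-up values)
output = matmul(inputs, weights)  # 1x1 result
print(output)
```

The point of the sketch is the independence of the dot products: a CPU core works through them one after another, while a GPU spreads them across thousands of simpler cores.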

AMD and Intel Corporation (INTC) are the only other notable competitors, but with Intel prioritizing chip fabrication, AMD looks to be the only company positioning itself as a serious competitor to Nvidia in the AI-hardware space. Therefore, the focus of this article will be on Nvidia’s and AMD’s AI products, a comparison of the specifications, and who is likely to capture market share over time.

The Next Generation of Chips

Which Chip Will Win?

From a hardware standpoint, AMD's Instinct MI300 looks to pack in more computing power at around the same energy efficiency. Purely from a specs standpoint, the MI300 looks to be a better and cheaper option than the Nvidia H100. Despite these strengths, the H100 has the backing of Nvidia's software stack, built on its CUDA platform, which blows AMD's ROCm out of the water. Although some companies like OpenAI are opting to build their own software stacks, not every company will have the expertise or resources to do this. So the decision will come down to which vendor has the best full-stack solution (hardware and software), which is a crown Nvidia has worn since 2006 and looks set to keep wearing.

Further, Nvidia has scale and network effects. Nvidia GPUs have over 80% market share, and many companies have already built and optimized their platforms on CUDA, which increases switching costs. For example, Adobe has many of its products built on CUDA and designed for Nvidia GPUs, so it can't easily switch away from Nvidia products. This stickiness will be a tailwind for Nvidia throughout the coming AI boom and could result in AMD being hurt more by weakening demand than Nvidia.

Technical Comparison

Nvidia H100

The H100, based on the Hopper architecture, began shipping from OEMs (Original Equipment Manufacturers) around October 2022 and will mostly be sold to cloud service providers for over $30,000.

The H100 uses an industry-leading 4nm node, packs in 80 billion transistors (compared to the A100's 54 billion), and utilizes tensor cores. Transistors control the flow of electrical current in chips; in general, more transistors means more compute power. Tensor cores are more powerful than typical GPU cores and are specialized for NxN matrix multiplication. AI applications typically require NxN matrix multiplication for the transformation of inputs to outputs, so as AI innovation continues, tensor cores will be critical to scalability.
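As a rough mental model of what "specialized for NxN matrix multiplication" means, the sketch below breaks a large multiplication into small tiles and applies one multiply-accumulate step (D = A*B + C) per tile, which is the style of operation tensor cores perform in hardware. This is plain Python for illustration, not how the H100 is programmed; the 2x2 tile size and the function names `tile_mma`, `get_tile`, and `tiled_matmul` are assumptions chosen for readability.

```python
TILE = 2  # illustrative tile size; real hardware tile shapes differ


def tile_mma(a, b, c):
    """One multiply-accumulate on TILE x TILE tiles: returns a*b + c."""
    return [
        [c[i][j] + sum(a[i][k] * b[k][j] for k in range(TILE)) for j in range(TILE)]
        for i in range(TILE)
    ]


def get_tile(m, row, col):
    """Cut the TILE x TILE block of m whose top-left corner is (row, col)."""
    return [r[col:col + TILE] for r in m[row:row + TILE]]


def tiled_matmul(a, b):
    """Multiply two NxN matrices (N a multiple of TILE) tile by tile."""
    n = len(a)
    if n % TILE != 0:
        raise ValueError("matrix size must be a multiple of TILE")
    out = [[0.0] * n for _ in range(n)]
    for row in range(0, n, TILE):
        for col in range(0, n, TILE):
            acc = [[0.0] * TILE for _ in range(TILE)]
            # Accumulate partial products along the shared dimension.
            for k in range(0, n, TILE):
                acc = tile_mma(get_tile(a, row, k), get_tile(b, k, col), acc)
            for i in range(TILE):
                out[row + i][col:col + TILE] = acc[i]
    return out
```

Each call to `tile_mma` stands in for work that dedicated hardware completes as a single operation, which is where the speedup over general-purpose cores comes from.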

LLMs like ChatGPT require huge sets of training data and a lot of time and power to train. To accelerate training, NVIDIA Hopper includes a Transformer Engine, which by Nvidia's figures can speed training by as much as 9X and inference throughput by as much as 30X.

Nvidia also offers DGX Cloud, a cloud-based infrastructure and software service for training AI models that includes many pre-trained models. This service is likely to gather steam as more businesses and startups begin developing their own AI models to suit their needs. DGX Cloud uses H100 chips, so startups that may not have the cash flow to shell out $30,000 for a single computing chip can opt for this subscription-based service to tap H100 power and access pre-trained models. It is worth noting, though, that DGX Cloud also carries a hefty price tag of roughly $37,000/month. DGX Cloud also includes a free license to NVIDIA AI Enterprise, a suite of AI development tools and deployment software, making it a really good option for startups or businesses that cannot afford to host their own supercomputing servers.

AMD Instinct MI300

The Instinct MI300 is expected to be shipped to customers before 2024. The specifications of this chip are breathtaking:

The MI300 is a monster device, with nine [of Taiwan Semiconductor Manufacturing Company Limited’s] (TSM) 5nm chiplets stacked over four 6-nm chiplets using 3D die stacking, all of which in turn will be paired with 128GB of on-package shared HBM (High Bandwidth Memory) memory to maximize bandwidth and minimize data movement. We note that NVIDIA’s Grace/Hopper, which we expect will ship before the MI300, will still share 2 separate memory pools, using HBM for the GPU and much more DRAM (Dynamic Random Access Memory) for the CPU. AMD says they can run the MI300 without DRAM as an option, just using the HBM, which would be pretty cool, and very fast.

At 146B transistors, this device will take a lot of energy to power and cool; I've seen estimates of 900 watts. But in this high end of AI, that may not matter; NVIDIA's Grace-Hopper Superchip will consume about the same, and a Cerebras Wafer-Scale Engine consumes 15kW. What matters is how much work that power enables.

Conclusion

Despite its strong performance in recent years and its re-entry into the discussion of chip powerhouses, Advanced Micro Devices, Inc. does not look set to dethrone Nvidia Corporation just yet. Nvidia shouldn't rest on its laurels, though, and it isn't. The tech sector is characterized by one thing: rapid innovation at scale. Nvidia will need to continue innovating to keep its edge and maintain its stranglehold on the GPU market, and if it can, NVDA will continue growing for years to come as society delves further and further into the age of AI.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.