Summary:

- The danger to data center hardware companies in the AI boom is the cannibalization scenario: GPU compute eating CPU compute.

- GPU servers cost at least 10x more and take up at least double the space in a data center rack. Cloud providers with finite budgets will have to make choices.

- If they choose GPU, that’s not just bad for Intel Corporation and AMD, but all the data center hardware companies not named Nvidia Corporation.

- There is a ton of investment going into disrupting Nvidia and this dynamic. That doesn’t mean Nvidia will be disrupted any time soon.


Nvidia Is Taking All The Money

There is a meme out there that the artificial intelligence, or AI, data center boom, centered around Nvidia Corporation’s (NASDAQ:NVDA) H100 data center GPUs, will provide an uplift to the entire data center hardware ecosystem — CPUs, memory, storage and network. Indeed, GPU servers tend to max out all of these.

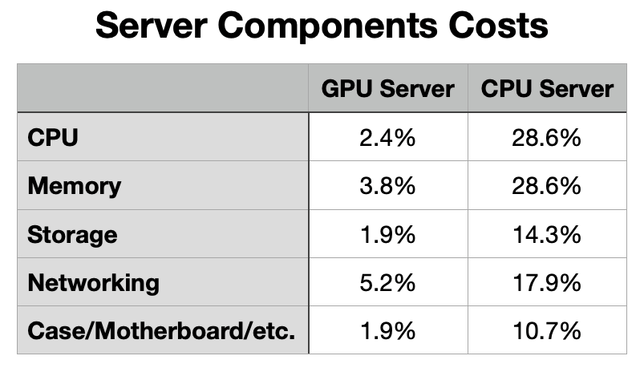

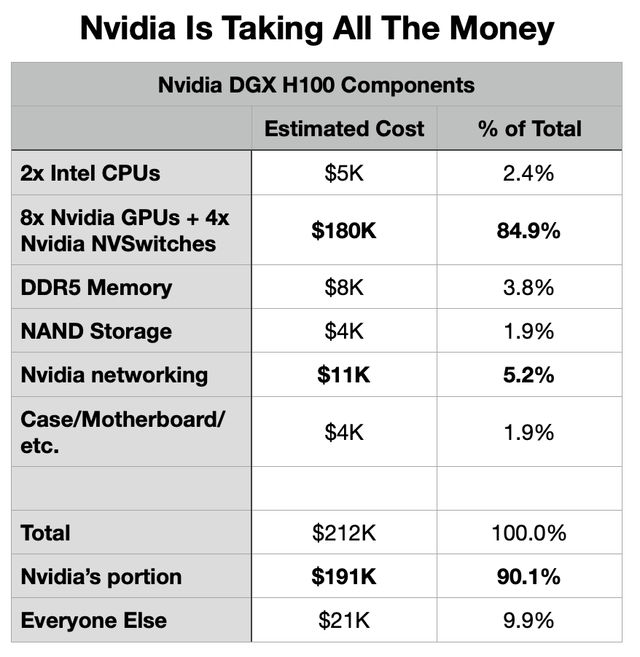

Nvidia’s gold standard GPU server, the DGX H100, really maxes everything out. Let’s look at some estimated component costs:

Not including Nvidia’s substantial markup (Author estimates)

The DGX H100 uses two of Intel Corporation’s (INTC) most expensive CPUs, and Intel still gets only 2.4% of the component costs. Amazon’s (AMZN) AWS, for example, is balking at these servers. AWS has lots of experience building its own servers and data centers, so it can skip the Nvidia markup. It may also choose not to max out everything like Nvidia did, skimping a little on CPU, memory, networking or storage, but that may open up bottlenecks. In any case, it is still stuck with that $180k Nvidia line item.

Think of a top-end CPU server as the same items, except without that Nvidia line. For the price of 1 DGX H100, a hyperscaler can buy 10 or more really good CPU servers. The component cost allocations are very different.

Hyperscalers do not have unlimited capital budgets, and they have a lot of choices to make in the months and years ahead. On the CPU side, ARM data center chips are displacing x86 chips from Intel and Advanced Micro Devices, Inc. (AMD), led by AWS and Alibaba’s (BABA) cloud. But the bigger issue for Intel and AMD is that 10-to-1 (or more) price ratio and the tradeoffs hyperscalers are going to have to make.

Moreover, DGX H100 servers are giant, taking up 8 rack “units.” A CPU server can be anywhere from 1-4 units. This means only 6 DGX H100s can fit in an industry-standard 48-unit rack, displacing 12-48 CPU servers. GPU servers may not only displace CPU servers in budgets, but in physical footprint.
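The displacement arithmetic above can be sketched in a few lines of Python. This is a rough illustration, not a capacity-planning model: the 48-unit rack, the 8-unit DGX H100, the 1-4 unit CPU server range and the 10-to-1 price floor all come from the article, while the function names and the 2-unit midpoint are assumptions added for illustration.

```python
# Figures taken from the article; names are illustrative assumptions.
RACK_UNITS = 48               # rack height used in the article
DGX_H100_UNITS = 8            # rack units per DGX H100
CPU_SERVER_UNITS = (1, 2, 4)  # article gives a 1-4 unit range; 2 is an assumed midpoint
PRICE_RATIO = 10              # article's floor: 1 DGX H100 costs as much as 10+ CPU servers


def servers_per_rack(server_units: int, rack_units: int = RACK_UNITS) -> int:
    """Whole servers of a given height that fit in one rack."""
    return rack_units // server_units


def cpu_servers_displaced_by_space(cpu_units: int, gpu_units: int = DGX_H100_UNITS) -> int:
    """CPU servers that would fit in the space one GPU server occupies."""
    return gpu_units // cpu_units


def cpu_servers_displaced_by_budget(gpu_servers: int, price_ratio: int = PRICE_RATIO) -> int:
    """CPU servers the same money would buy, using the price ratio as a floor."""
    return gpu_servers * price_ratio


if __name__ == "__main__":
    dgx_per_rack = servers_per_rack(DGX_H100_UNITS)
    print(f"DGX H100s per {RACK_UNITS}-unit rack: {dgx_per_rack}")
    for units in CPU_SERVER_UNITS:
        print(
            f"{units}U CPU servers: {servers_per_rack(units)} per rack, "
            f"{cpu_servers_displaced_by_space(units)} displaced per DGX H100 by space"
        )
    print(
        f"One rack of DGX H100s costs at least as much as "
        f"{cpu_servers_displaced_by_budget(dgx_per_rack)} CPU servers"
    )
```

On these numbers, a full rack of 6 DGX H100s takes the space of 12-48 CPU servers but the budget of at least 60, so the budget constraint binds harder than the space constraint unless the CPU servers are all 1-unit boxes.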

If the AI investment boom keeps going, expensive and space-hogging GPU compute will be displacing CPU compute.

The evidence is that it is already happening.

Nvidia Eats CPU Compute

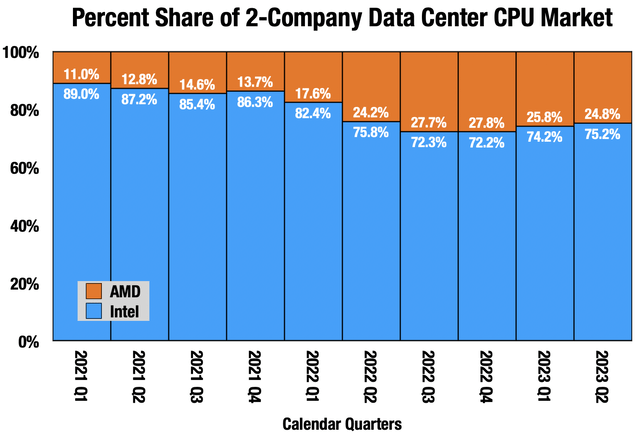

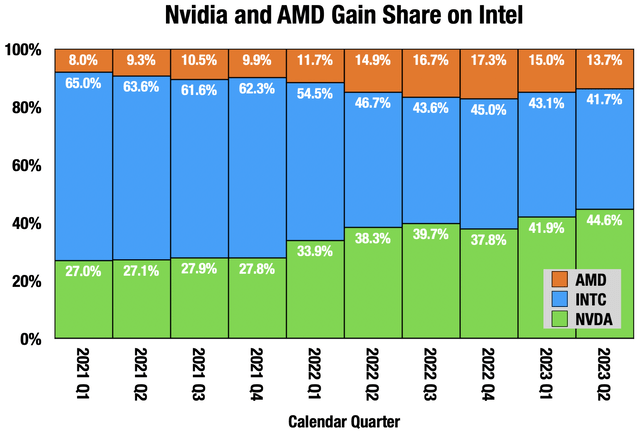

Looking at the x86 data center CPU companies, we see that AMD gained a lot of market share in 2022, though Intel clawed some of that back in H1 2023. But the story is not great here, and I’ve been surprised by the weakness in data center CPUs, because cloud demand isn’t going away.

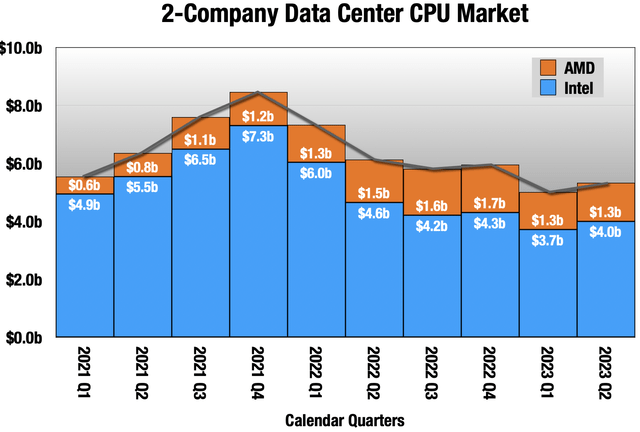

I thought we would be in recovery here by 2023, but it took another leg down in H1 instead. That’s because GPU compute is eating up cloud capital budgets, displacing CPU servers. Let’s add in Nvidia:

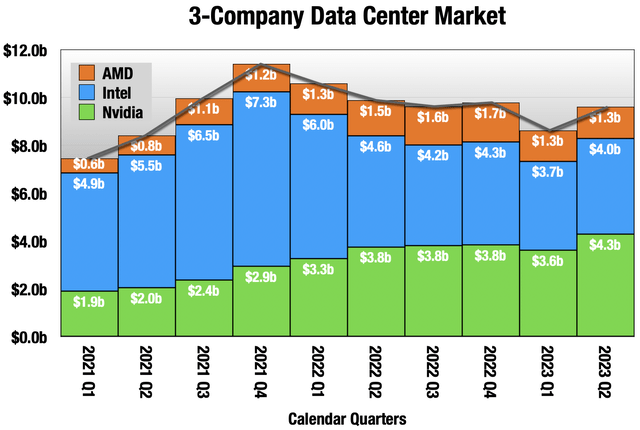

The lines on those charts look very different. Let’s switch to market share:

Nvidia quarters 2 months ahead, e.g. 4/30 vs 6/30 (Company quarterly reports)

In the most recent reporting for each company, Nvidia was at almost 45% of total data center revenue. Once they report next week (earnings expected post-market August 23rd), it should be a majority. Intel is getting squeezed on both sides of the equation, even though they have the DGX H100 CPU contract. That should tell you what you need to know.

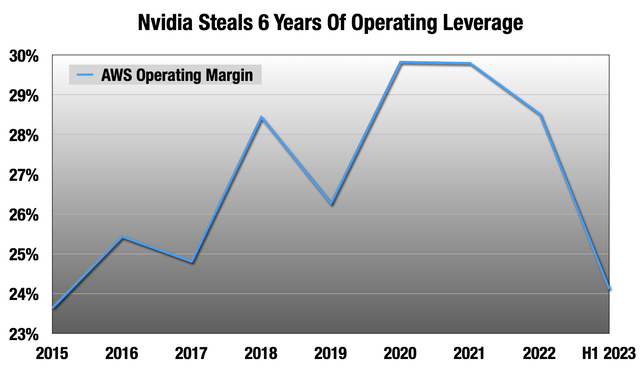

Nvidia taking all the money is not just affecting other data center hardware companies. They are stealing margin from their own customers. AWS gives us the cleanest look at “cloud” at scale, by which I mean just rentable compute and storage.

AWS operating margin hit a record 32% in H1 2022, and it’s been downhill from there. Microsoft’s (MSFT) Intelligent Cloud segment doesn’t give us as clean a look (they mix it with legacy software), but they are also seeing years of leverage lost to Nvidia in the same time frame. The cloud companies have a lot of change in the air right now, finite capital budgets, and shrinking margins. They will have to weigh the added growth from the AI investment boom against those pressures.

Will GPU Keep Cannibalizing CPU?

Through 2023 at a minimum, I don’t see how it doesn’t. But something has to give here. The Nvidia tax is starting to bite everyone in the data center ecosystem. They are taking all the money.

There are two ways this ends:

- The AI investment boom dies. AI has had repeated investment hype cycles dating back to the 1950s, and this could be another one.

- Nvidia is disrupted, and the hardware cost structure for model training and inference declines dramatically. There is a massive incentive throughout tech for this to happen, and a lot of capital being poured into this effort. It does not mean it will happen any time soon. Nvidia’s moat is the software they have been developing since 2005, the deepest part being CUDA.

It’s sort of Nvidia against everyone, but it’s a very delicate dance. For example, the cloud companies would love to be hosting less expensive AI hardware. AWS and Google (GOOGL) already do, and Microsoft is working on it. But customers want Nvidia, despite the very high cost to rent, because of that software moat. Nvidia GPUs have been the AI default since 2012 and that is not changing tomorrow. While supply of H100s is tight, the cloud providers have to play nice with Nvidia to make sure they get enough.

At the same time, they are all desperately trying to stop paying the Nvidia tax. There are battle lines formed, but no one will admit there is a war going on.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of MSFT either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

