Summary:

- The new AI world launched by ChatGPT is one of massive costs. Costs increase exponentially with model size.

- At the root of that is the expense of Nvidia data center GPUs. They are ripe for disruption, but that doesn’t mean it will happen any time soon.

- These trends favor picks-and-shovels plays and large incumbents with deep pockets: hardware infrastructure and foundational model APIs.

- A look at 3 AI cloud services: Microsoft Azure, Google Cloud, and Oracle Cloud. Microsoft looks like the surest thing, but Google has the largest opportunity as a potential Nvidia disruptor with their TPU v4.


A Cost Explosion

We are in an era of rolling hype cycles: crypto, metaverse, and now generative AI. Companies are racing to implement generative AI features in existing software, with mixed success. As I write this, iOS App Store reviews for Snapchat (SNAP) have been dominated by 1-star reviews since they pushed an update with their “MyAI” chatbot. The Snappers are displeased.

So where is the opportunity in the current gold rush atmosphere? As with the original California Gold Rush, the winners are likely to be large incumbent picks-and-shovels plays. The introduction of large generative AI models has revolutionized more than just the use cases – it has exploded the costs.

At the root of this is the size of these new models, and the Nvidia (NVDA) A100 and new H100 data center GPUs, the chips that do all the very heavy lifting (H100 production is still ramping, so the A100 remains dominant for now). Say you want to do something on the scale of GPT-3, 175 billion parameters, and run it in production. A good starter kit to get it trained relatively quickly and scale usage is 10k of those GPUs, which will run around $100 million, if you can get them. They are going on secondary markets for as high as 4 times as much. If you wanted to match GPT-4, probably close to a trillion parameters, $300 million is probably more like it, and that’s just for the GPUs. These costs are before any other hardware – CPUs, memory, storage, network – and the substantial costs of running all these GPUs. 10k H100 GPUs running 24/7 would use 31 gigawatt-hours a year, about the same consumption as 2800 average US homes. Assuming the data center is located in a very low power cost region ($0.07 a kilowatt-hour), that’s still over $2 million a year, just to power the GPUs.
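The power figures above can be checked with a quick back-of-the-envelope sketch. The GPU count and the $0.07 rate come from the text; the 350 watts per GPU is an assumption (roughly the rated draw of a PCIe H100), picked because it reproduces the 31 gigawatt-hour figure.

```python
# Back-of-the-envelope check of the GPU power figures above.
GPU_COUNT = 10_000           # from the text
WATTS_PER_GPU = 350          # ASSUMPTION: approximate per-GPU draw, not stated in the text
HOURS_PER_YEAR = 24 * 365    # running 24/7
PRICE_PER_KWH = 0.07         # very low power cost region, from the text


def annual_energy_kwh(gpu_count: int, watts_per_gpu: float) -> float:
    """Energy used by GPUs running around the clock for a year, in kilowatt-hours."""
    return gpu_count * watts_per_gpu * HOURS_PER_YEAR / 1_000


energy_kwh = annual_energy_kwh(GPU_COUNT, WATTS_PER_GPU)
power_bill = energy_kwh * PRICE_PER_KWH

print(f"Energy: {energy_kwh / 1e6:.1f} GWh per year")
print(f"Power bill: ${power_bill / 1e6:.2f} million per year")
```

Under that assumption the GPUs alone draw a little under 31 gigawatt-hours a year, which at $0.07 a kilowatt-hour is a bit over $2.1 million. A higher per-GPU wattage would push both numbers up proportionally.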

Some recent startup data points:

- Stability AI raised $100 million in late 2022 at a $1 billion valuation, and they have already burnt through that, with a lot going to their cloud bill. Their commitments to AWS (AMZN) are $75 million. They are out looking for another round at a $4 billion valuation.

- Anthropic is looking to raise $5 billion over the next two years. Their next model will cost $1 billion over 18 months.

- OpenAI is the champion fundraiser, with $10 billion over several years from Microsoft (MSFT), on the heels of $1 billion they already burned through, and recently another $300 million from others. Even so, OpenAI is not going to build GPT-5 any time soon, because who could afford such a thing?

As these models scale in size, the cost really explodes. Take this exchange I just had with New Bing in Creative mode:

Prompt: Please write a poem about the Federal Reserve Open Market Committee and their interest rate decision on May 3, 2023. Please mention 3 committee members by name. You can choose any rhyming scheme you would like, but please make it rhyme, and keep the rhyming pattern consistent.

I will spare you Bing’s response, but it was an intro sentence, and then a 24-line poem, more or less what I asked for. Let’s look at the costs of that silly exchange, were Microsoft paying the full API rate, which they are not.

- At the GPT-3.5 rate, that query and response costs $0.001, or $3.1 billion at the rate of the roughly 3 trillion search queries Google gets a year (that’s a very conservative guess – the last we know is 1.2 trillion in 2012).

- At the more expensive of the two GPT-4 rates, that’s $0.058 for the exchange, or $173 billion at the rate of 3 trillion Google searches a year. The less expensive GPT-4 API is half as much, but even that is 28 times more expensive than GPT-3.5.
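The scaling in the two bullets above is simple multiplication, sketched below. The per-exchange costs are the rounded figures from the text, so the results land near, not exactly on, the $3.1 billion and $173 billion quoted, which came from unrounded costs. The function and variable names are just for illustration.

```python
# Scale a per-exchange API cost up to a year of search-scale traffic.
QUERIES_PER_YEAR = 3e12  # rough guess at annual Google searches, from the text

# Per-exchange costs in US dollars, as rounded in the text.
COST_PER_EXCHANGE = {
    "GPT-3.5": 0.001,
    "GPT-4 (more expensive rate)": 0.058,
}


def annual_cost(cost_per_exchange: float, queries: float = QUERIES_PER_YEAR) -> float:
    """Total yearly cost if every query cost the same as the sample exchange."""
    return cost_per_exchange * queries


for model, cost in COST_PER_EXCHANGE.items():
    print(f"{model}: about ${annual_cost(cost) / 1e9:,.0f} billion per year")
```

The point is less the exact totals than the ratio: a per-exchange difference measured in cents turns into a difference of well over $100 billion a year at search scale.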

In one way, AI has upended the assumption about software that has existed since the 1980s, that as you scale, unit cost plummets. With AI as you scale up in model size, costs increase dramatically.

Absent a disruption of Nvidia’s dominance with something cheaper or more efficient, the new cost structure of these giant models advantages large incumbents and their investment partners, and owners of infrastructure: hardware, cloud, and application programming interfaces to foundational models like GPT.

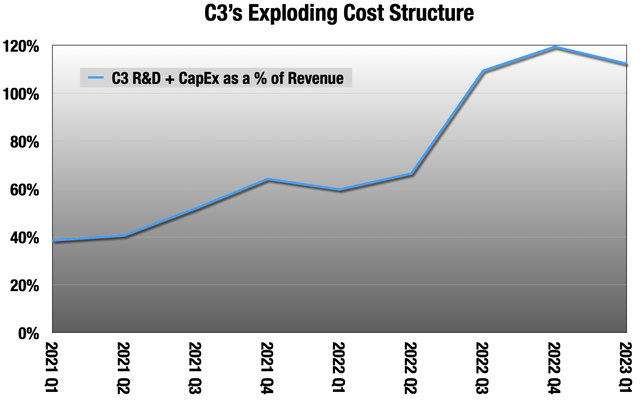

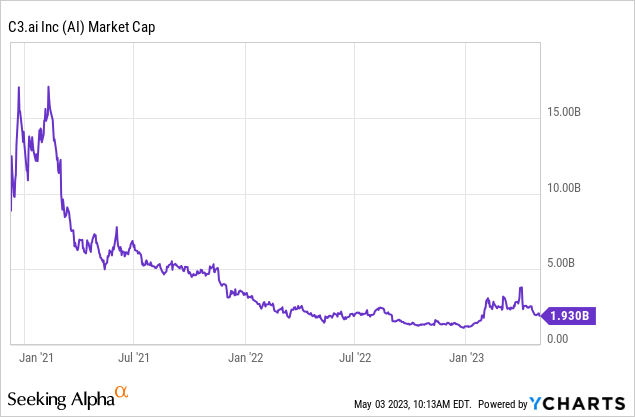

Take C3 (AI) for example. Besides having the ticker everyone wants right now, they would seem to be a huge beneficiary in software infrastructure. They make tools that let companies take their own data and build machine learning models and predictions with it. But, the costs:

It is even coming for the operating margins of the cloud companies we are talking about:

Microsoft quarterly earnings reports

I don’t know that I have ever seen anything as ripe for disruption as Nvidia’s dominance in AI compute hardware. This does not mean it will happen any time soon, but the list of companies with a large vested interest in cutting Nvidia’s legs out from under it is wide and deep now. Until then, it is picks-and-shovels, with large deep-pocketed incumbents greatly advantaged.

We’re here to talk about one part of that, cloud providers. We will be looking at Microsoft Azure, Google Cloud (GOOG) and Oracle Cloud (ORCL). Yes, no AWS.

Why No AWS?

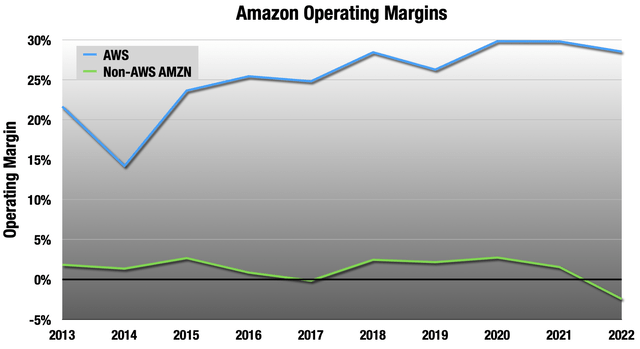

I still consider AWS the best general purpose cloud, and it is the one where I am a customer. AWS invented the modern cloud, and it is a great high margin business that grows fast. The problem is that it is still attached to the rest of Amazon, which made up 84% of their revenue last quarter.

Like two different companies. The non-AWS margin in Q1 was -0.6% (Amazon annual reports)

Non-AWS Amazon is a tiny-margin mess that Jeff Bezos left for Andy Jassy, who is still trying to figure it all out. He just killed their fitness tracker, and there are more shoes to drop. Until they can match Walmart’s (WMT) 4%-5% retail operating margin, I am not interested.

Microsoft Azure

Of the three cloud providers we are talking about, Azure looks like the smallest opportunity but also the surest thing. Here we need to separate Azure, which is what we are talking about, from the rest of Microsoft Cloud. Much of their business here is really software revenue, now sold as cloud-based managed services instead of being self-hosted and administered as in the past. Azure is the backbone to all that, but it’s important to separate out the issues.

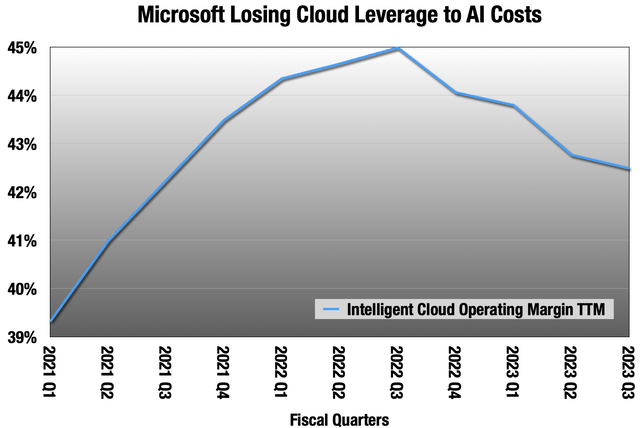

More than any other company, Microsoft is plunging headlong into integrating generative AI into existing software products. This will be expensive and will shift margin profiles, as we already see in their Intelligent Cloud segment, where Azure lives. But the bigger risks are in these models themselves, and in integrating them into mission-critical software before they are production-ready.

I call this “Zillow Risk:” giving too much responsibility to an AI before it is ready. Zillow (Z) had a wealth of current and historical data with which to build a model that would buy and sell houses. The mistake they made was letting it buy enough houses right away that they could lose $420 million when things went wrong. The model had never seen a pandemic housing market, and it was out there buying billions of dollars’ worth of homes in the middle of one.

Microsoft has a reputation as a maker of solid, if unspectacular, enterprise software. Their primary customers are the heads of IT departments, and the safest path for these people is choosing Microsoft as their vendor. Most actual users will tell you they prefer Slack to Teams, but Teams still makes more sense to IT managers.

But the GPT models undergirding all of this push are a black box that gives uncertain results. It’s amazing how often they answer questions correctly, but they are also very prone to making stuff up in a very convincing fashion. These fabrications are called “hallucinations.” A much more colloquial term is “bullshit.” If customers begin to rely on these features and later regret it because of hallucinations, this could undercut Microsoft’s considerable reputation built over decades.

So they have to navigate this very carefully; I have a lot of faith in CEO Satya Nadella, one of the best of his generation in my opinion, but he is walking through a minefield.

But on the pure cloud portion of this, Azure, it is much more straightforward. Azure began this way behind in AI cloud infrastructure. But the partnership with OpenAI became a crash course in building AI infrastructure to meet OpenAI’s considerable needs as they were building DALL-E, GPT-3, and GPT-4. I think OpenAI API usage still dominates their AI cloud infrastructure, and they are going to have to keep building out to meet demand.

As I pointed out, this is crushing their operating leverage, which had been steadily growing. This came up during the recent earnings call, and Nadella’s response was essentially, We’re a hyperscaler and we’re going to hyperscale our way out of this. I’m more skeptical. I see Nvidia stealing some of their margin, and until Nvidia is disrupted somehow, I think there is a good news-bad news tradeoff for AI cloud providers. The AI gold rush will increase top line growth, but they will have to accept a lower operating margin as a tradeoff. So, mostly good news at the bottom line.
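To make the good news-bad news tradeoff concrete, here is a small sketch of when faster revenue growth outweighs a thinner margin. Every number in it is hypothetical and chosen only for illustration; none of them are Microsoft's actual figures.

```python
def operating_income(revenue: float, margin: float) -> float:
    """Operating income is revenue times operating margin."""
    return revenue * margin


def breakeven_growth(old_margin: float, new_margin: float) -> float:
    """Revenue growth needed for operating income to stay flat after a margin drop."""
    return old_margin / new_margin - 1


# HYPOTHETICAL inputs, for illustration only (not from any company's reports).
old_revenue = 100.0   # arbitrary units
old_margin = 0.40     # operating margin before the AI buildout
new_margin = 0.36     # lower margin once expensive GPU capacity is added
growth = 0.25         # revenue growth driven by AI demand

before = operating_income(old_revenue, old_margin)
after = operating_income(old_revenue * (1 + growth), new_margin)

print(f"Operating income before: {before:.1f}, after: {after:.1f}")
print(f"Break-even revenue growth: {breakeven_growth(old_margin, new_margin):.1%}")
```

With these made-up inputs, operating income rises from 40 to 45 even though the margin falls four points. Any growth above roughly 11% more than pays for the margin hit, which is the sense in which the tradeoff is mostly good news at the bottom line.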

Microsoft is also developing their own AI chip.

Azure’s AI infrastructure is currently clogged up by ChatGPT and the OpenAI APIs that run on it. They don’t offer a ton of options – just limited GPU configurations, and no AI accelerators. There is still a lot of work to be done here on building the infrastructure for customers who are not OpenAI, and a lot of lessons learned along the way. I’m pretty sure they understand all of this.

But given the execution under Nadella, I have a lot of faith that they will get their infrastructure up to speed, and that their sales team will sell the heck out of it to all their existing customers, which includes 95% of the Fortune 500. This is a core corporate competency – making IT managers feel safe in choosing Microsoft.

Disclosure: I am a Microsoft shareholder since 2016, a couple of years after Nadella took over. I’ll remain a shareholder for the foreseeable future mostly based off of their enterprise execution under Nadella.

Google Cloud

Google Cloud has the most to gain here. No one has spent more time and money on AI than Google. For years, they were the sugar daddy for AI research. But that does not guarantee success. Famously, the Xerox (XRX) Palo Alto Research Center [PARC] invented the modern office – PCs with graphical interfaces, the mouse, ethernet, and laser printing – but Xerox never made a dime off it. Xerox just donated PARC to a nonprofit.

Google is already using AI all over their consumer products – Pixel, Android, Search, ad-serving, YouTube – pretty much all of it. Also, no one has more AI infrastructure options for cloud customers than Google Cloud, offering a wide range of options matching CPUs, Nvidia GPUs and AI accelerators. Google calls their accelerator a Tensor Processing Unit, or TPU. Google built the first TPU in 2015 because they had run into a wall using the hardware that was available to them a decade ago, including from Nvidia. Version 1 was for internal use only, but now TPU versions 2 through 4 are available as cloud services. They have the largest opportunity here: to leap from an also-ran to a peer of AWS and Azure, and to gain an identity as the AI cloud king.

In a recent paper, Google showed TPU v4 outperforms the Nvidia A100 in the crucial performance-per-watt metric by anywhere from 55% to 225% depending on the workload. At Google Cloud, TPU v4 costs 45% less to rent, and works faster than a comparable A100 instance. This economy does not come without a price – AI accelerators operate at a lower level of precision than GPUs, because they are doing a workaround to the floating point matrix math at the heart of this that makes it so computationally expensive. But a lot of research shows that outside of scientific applications, high levels of precision are not needed in generative AI training and prediction for good results. TPU and other AI accelerators aren’t right for every AI workload, but the majority of mining in this gold rush can be done on TPUs.
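A rough way to see what the rental discount means per job is sketched below. The 45% discount is from the text; the speedup values are hypothetical placeholders, since the text only says the TPU instance "works faster" without giving a figure.

```python
RENTAL_DISCOUNT = 0.45  # TPU v4 rents for 45% less than a comparable A100 instance (from the text)


def relative_cost_per_job(rental_discount: float, speedup: float) -> float:
    """Cost of a job on the cheaper chip as a fraction of the baseline cost.

    speedup is how many times faster the job finishes (1.0 means the same speed).
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return (1 - rental_discount) / speedup


# HYPOTHETICAL speedups, for illustration only; 1.0 is the conservative floor.
for speedup in (1.0, 1.2, 1.5):
    ratio = relative_cost_per_job(RENTAL_DISCOUNT, speedup)
    print(f"speedup {speedup:.1f}x -> job costs {ratio:.0%} of the A100 baseline")
```

Even with no speed advantage at all, the same job would cost 55% of the baseline, and any real speedup compounds on top of that because the instance is rented for less time.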

TPUs and other AI accelerators from AMD/Xilinx (AMD), Qualcomm (QCOM), and Intel (INTC) may be the thing that disrupts the Nvidia AI hardware juggernaut. The biggest hindrance is that AI software tends to be bespoke implementations that are very platform-specific. The software was built by researchers for their own purposes, and everyone has become reliant on Nvidia’s CUDA libraries. Now that these things are scaling to commercial production, the software landscape is a fragmented mess. Startup Modular is working on that problem, but until there is an industry-wide solution that makes models platform-independent, Nvidia retains a wide moat.

But Google has the largest opportunity among the cloud providers, to go from an also-ran in cloud to one of the main players, and the king of AI compute. Nvidia retains a wide moat so long as the software remains fragmented and their GPUs remain the default AI workhorse, but something like Modular’s solutions could drain it.

To me, this is Google’s big opportunity in AI right now, not messing with their most important product, Search, by adding a chatbot to it because Microsoft did. Bing was already widely disliked, so it would be hard for it to suffer reputational damage. But Google Search is used by billions of people, who trust it to deliver accurate results. Integrating a chatbot could undermine that trust. So far, Google has wisely kept Bard separate at bard.google.com and off the Search page, unlike Microsoft and Bing.

Can they execute? Part of why I’m comfortable with Microsoft is their execution under Nadella. Execution at Google has not been nearly as good. Over the years, Google has launched and killed 281 products, with 4 more going down this year. Besides Search, everything that makes a profit at Google was acquired – the third-party network, YouTube and Android.

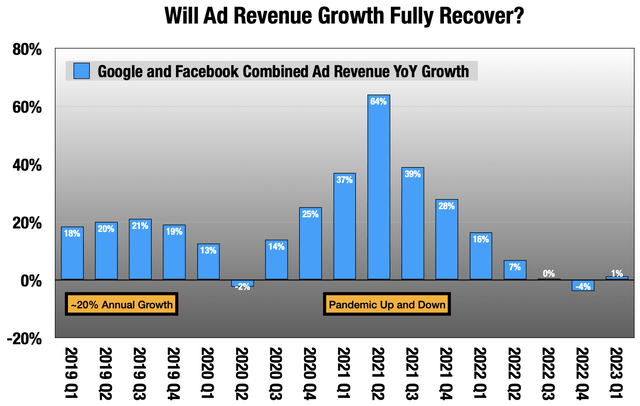

Google also has headwinds elsewhere:

I believe we are past the high-growth period in digital advertising, and 15%-20% YoY quarters will no longer be the norm.

Google and Facebook quarterly earnings reports

Will that fully recover? We of course don’t know, but the ad business does not grow at 20% a year, and digital can only steal from TV and other mediums for so long.

Google also faces a number of regulatory and antitrust threats. The most dangerous in my opinion is the recently filed US v Google. This is a case from the Department of Justice and several states that seeks to break Google’s third-party ad network, originally DoubleClick, off from the rest. Google owns the biggest ad exchange, and also is the biggest participant on that exchange. It’s like if NASDAQ (NDAQ) also had the largest hedge fund. I think this case has a decent likelihood of success.

I don’t own Google, but I will be watching their cloud division closely. They just showed their first very thin operating profit there last quarter (they have been underpricing to grow fast), which I’ll take as a good omen.

Oracle Cloud

Oracle Cloud is another also-ran like Google, mostly competing on price right now, with a big opportunity to move up the ladder here. They have nice AI infrastructure built only around Nvidia setups, and they probably need to add accelerators at some point. Oracle’s advantage is that they host a ton of large databases in their cloud. Machine learning runs on data, and Oracle is the database company. So the big opportunity is selling add-on machine learning services to their existing database customers, helping them turn their data into models and predictions.

I like a combination of Oracle and C3 as a merger, especially now that C3’s valuation makes a lot more sense than it once did.

This would be a great way to quickly bootstrap these services to existing customers.

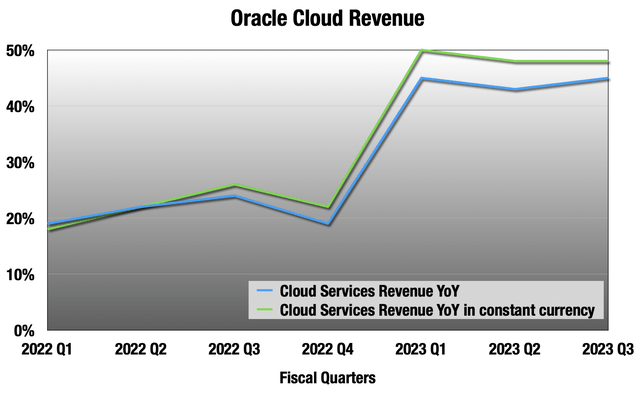

Oracle only began breaking out pure cloud revenue from their license revenue this fiscal year, ending May. So we only have 7 quarters of this, but it is certainly encouraging:

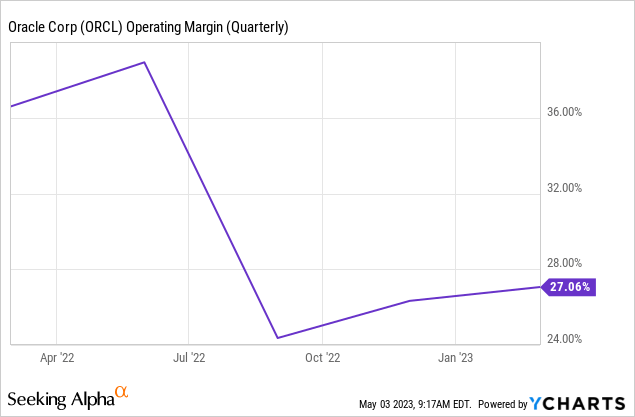

We don’t know about margins here, because for that they still combine it with software licensing revenue, a very different sort of margin profile. I want to see if they can keep up that cloud growth without destroying margin, which they are already having issues with:

I don’t own Oracle, but I will be watching those margins closely, along with any new machine learning services they roll out. They already offer machine learning services for hosted databases, but this is a limited service, and they need to make it more robust before they can compete on more than just price, which is the same challenge Google faces. A C3 merger could bootstrap that.

Summing Up

- The new AI world launched by ChatGPT is one of massive costs. Costs increase exponentially with model size, as exemplified by the wide delta in API prices between GPT-3.5 and GPT-4.

- The costs are driven by Nvidia’s data center GPUs, the workhorses behind all this. The most important aspect of Nvidia’s moat is the fragmented software landscape.

- I don’t know that I have ever seen anything as ripe for disruption as Nvidia’s data center GPUs. This doesn’t mean it will happen any time soon, but there is now a huge vested interest in cutting Nvidia’s legs out from under it.

- In a gold rush environment, the winners are typically picks-and-shovels plays. In this case, that means hardware infrastructure and foundational models with APIs.

- Until Nvidia is disrupted, these trends all favor large incumbents with deep pockets and their investment partners.

- Until Nvidia is disrupted, cloud providers will see a tradeoff of higher growth rates for lower operating margin. This is still mostly good news for the bottom line.

- Microsoft Azure started out behind in their cloud infrastructure, but their partnership with OpenAI forced them to catch up quickly. I think OpenAI still dominates their infrastructure, and they need to continue to invest here, including adding AI accelerators. They have repeatedly proven under Nadella that they can execute these sorts of moves. It doesn’t hurt that all OpenAI APIs are hosted there.

- Google Cloud has a robust infrastructure that includes their own AI accelerator, the TPU. Now on the 4th version, these are a potential Nvidia disruptor, and Google has a very large opportunity here to start competing on more than just price.

- Oracle Cloud also has a unique opportunity, but not as large as Google’s. They host a ton of databases on their cloud, and adding on robust machine learning tools coupled with hardware is their opportunity here. They offer this sort of service, but it needs more development. A quick way to bootstrap that would be a C3 merger.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of MSFT, QCOM either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
