Summary:

- In a previous article, we had discussed how the tables may finally be turning in favor of Amazon’s AWS relative to Microsoft Azure.

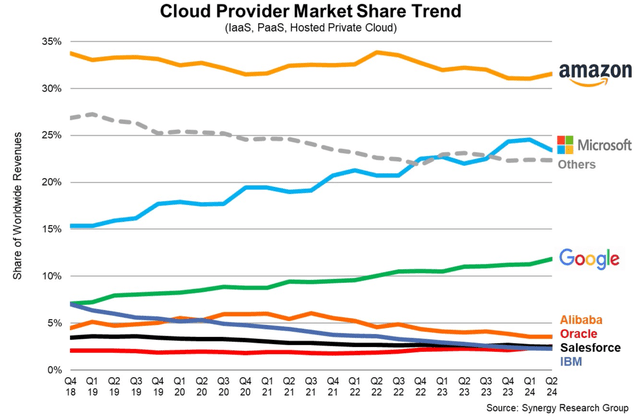

- In Q2 2024, AWS finally gained market share along with Google Cloud, while Azure lost share.

- Amazon’s differentiated strategy to win this AI revolution is paying off, buoyed by the growing prominence of Meta’s open-source models, at the expense of Microsoft-OpenAI’s GPT models and Google’s Gemini.

- Despite AWS gaining ground over Azure, growing evidence shows Google Cloud’s leadership over AWS on the AI custom chips front, with Google possessing a much larger market share.

- While cloud market terminologies can be perplexing, we discuss AWS’ potential to win this AI revolution in a simplified manner, enabling investors to more easily grasp the underlying growth story.

Thos Robinson

At the beginning of this AI revolution, investors were concerned that Amazon’s (NASDAQ:AMZN) AWS was lagging considerably behind rivals Microsoft (MSFT) Azure and Google (GOOG) Cloud, being the only major cloud provider without a popular flagship model to boast. However, this narrative has started to turn around, with AWS doubling down on the growing prominence of open-source models, at the expense of closed-source models like Microsoft-OpenAI’s GPT series and Google’s Gemini. Amazon is also well ahead of Microsoft in the custom chips race, although Google appears to be the clear leader on this front. Nonetheless, the bull case for AWS remains intact, sustaining the ‘buy’ rating on the stock.

In the previous article on Amazon, we discussed the impact of generative AI deployment on the e-commerce side of the business. More specifically, we covered how rivals Google and Meta Platforms are making much more significant progress on leveraging the power of generative AI to innovate new valuable ad solutions that are already delivering results, and strengthening their ability to facilitate more and more e-commerce activity through their platforms. On the other hand, the growth rate of Amazon’s advertising segment underwhelmed investors following the last earnings report. The e-commerce giant’s new generative AI-powered Rufus chatbot still needs to prove its advertising potency, while it simultaneously risks aggravating a conflict-of-interest issue on the Amazon Marketplace.

Nonetheless, while the e-commerce side still makes up the bulk of Amazon’s top-line revenue, AWS constitutes the majority of its operating income, contributing 69% in Q2 2024. Hence, the bull case for the stock is considerably driven by the cloud computing side of the business at the moment, as the major Cloud Service Providers [CSPs] are evidently the ‘picks and shovels’ of this AI revolution.

In fact, in the preceding article from June 2024, we covered how the tables may finally be turning in favor of AWS amid the rise of open-source models, which threatens Microsoft Azure’s initial edge in the AI race. Over the past few months, Amazon has been proving its ability to capitalize on this golden opportunity.

The Rise Of Open-Source Models Is A Gift To AWS

Investors were awestruck by the solid growth delivered by AWS last quarter, with CFO Brian Olsavsky proclaiming on the Q2 2024 Amazon earnings call:

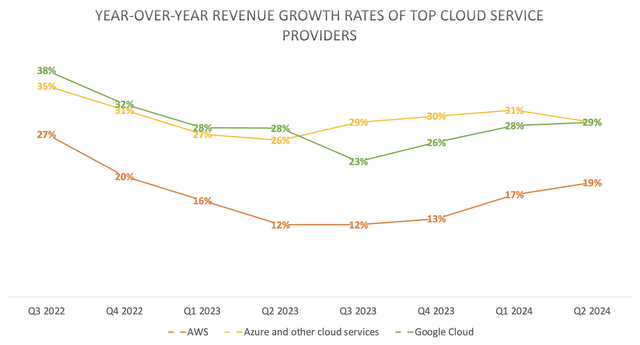

our AWS segment, revenue is $26.3 billion, an increase of 18.8% year-over-year, excluding the impact of foreign exchange. AWS now has an annualized revenue run rate of more than $105 billion.

Nexus Research, data compiled from company filings

A revenue growth rate of 19% is remarkable given the sheer size of AWS relative to its closest rivals, at roughly 2-3 times the size of Google Cloud.
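The run-rate figure in the CFO's quote can be reproduced with simple arithmetic. The sketch below assumes the common convention of multiplying the latest quarter by four; the call does not spell out the method, and the function name is purely illustrative.

```python
# AWS revenue for Q2 2024 as quoted on the earnings call, in billions of USD.
Q2_2024_AWS_REVENUE_BN = 26.3


def annualized_run_rate(quarterly_revenue_bn: float) -> float:
    """Annualize one quarter of revenue by multiplying by four.

    Assumption: this is the usual run-rate convention, not a method
    confirmed by Amazon.
    """
    return quarterly_revenue_bn * 4


run_rate_bn = annualized_run_rate(Q2_2024_AWS_REVENUE_BN)
print(f"Annualized run rate: ${run_rate_bn:.1f}B")  # Annualized run rate: $105.2B
```

The result of about $105.2 billion is consistent with the "more than $105 billion" cited on the call.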

At the beginning of this AI revolution, Wall Street perceived Amazon as a laggard in the absence of an exclusive, powerful Large Language Model [LLM] of its own. Microsoft sprinted ahead with its opportune partnership with OpenAI, which granted it exclusive access to the popular GPT series, while Google fought back with the revelation of its Gemini model, on which it had been working for years.

However, in a previous article downgrading MSFT, we covered how open-source models are swiftly catching up in performance with the proprietary models sold by Microsoft-OpenAI and Google, citing:

studies revealing how open-source models, such as Meta’s Llama models, are catching up quickly in terms of performance, while the rate of performance improvements for OpenAI’s closed-source models is slowing

Then in July 2024, Meta Platforms decided to open-source its most powerful LLM to date, Llama 3.1, and notable third-party distributors of the model are already lauding its performance advancements.

This generation of Llama models finally bridges the gap between OSS and commercial models on quality. Llama 3.1 is a breakthrough for customers wanting to build high quality AI applications, while retaining full control, customizability, and portability over their base LLM.

– Ali Ghodsi, CEO & Co-Founder, Databricks (emphasis added)

For context, “OSS” stands for ‘Open-Source Software’, while “commercial models” refers to closed-source models (or proprietary models) like OpenAI’s GPT and Google’s Gemini.

As the name suggests, an open-source model can be offered by any software vendor that decides to distribute it through its platform. All the major cloud providers are already offering access to Llama 3.1, as are other technology enablers like Nvidia, Databricks, Snowflake, and Dell Technologies. So essentially, there is no exclusivity advantage for any single provider here.

Furthermore, in the previous article on AWS, we alluded to how Amazon can catch up in the AI race amid the growing prominence of open-source models.

Amazon should strive to offer the highest-quality tools for helping enterprises use/customize open-source models for developing their own applications.

In fact, offering the best deployment tools and services is increasingly becoming a differentiating strength for AWS relative to Azure and Google Cloud, with Meta Platforms CEO Mark Zuckerberg citing Amazon as one of the key distribution partners thanks to its exceptional offerings.

Amazon, Databricks, and NVIDIA are launching full suites of services to support developers fine-tuning and distilling their own models.

– CEO Mark Zuckerberg in his open letter alongside the release of Llama 3.1

On top of this, Zuckerberg decided to give a special shout-out to AWS on the Q2 2024 Meta Platforms earnings call:

“And part of what we are doing is working closely with AWS, I think, especially did great work for this release.”

AWS has been working on offering top-quality deployment services for some time now, for both open-source as well as closed-source models like Anthropic’s Claude series.

In fact, while Llama 3.1 was only released in Q3 2024 (on July 23rd to be more specific), AWS’s market share edged up in Q2 2024, from around 31% to 32%. Over the same period, Azure’s market share contracted from around 25% to 23%.

Admittedly, a gain or loss of market share over a single quarter is not sufficient evidence of a longer-term trend, and share is likely to keep shifting back and forth as the AI revolution evolves.

Moreover, on Microsoft’s last earnings call, the executives cited weaker-than-expected demand growth for non-generative AI workloads when explaining why Azure’s growth rate missed expectations last quarter. They assured investors that demand for generative AI compute remained strong and exceeded supply capacity across its data centers. So Microsoft still remains a formidable competitor on the AI front.

That being said, the persistent parallel demand for generative AI and non-generative AI workloads leads us to the other advantage AWS has over Azure.

The Custom Chips Race

The onset of the AI revolution has led to soaring demand for Graphics Processing Units (GPUs) that are capable of handling the complex computing tasks involved in generative AI workloads. As a result, all the major cloud providers have reported massive CapEx numbers as they spend heavily on these advanced chips, the chief beneficiary of which has been Nvidia (NVDA).

However, in a recent article upgrading AMD stock, we discussed extensive research findings showing that generative AI is not yet a silver bullet for all IT workloads, which sustains demand for the Central Processing Units (CPUs) that are more cost-effective for running traditional computing tasks. This aligns with what the major cloud providers are witnessing.

During the second quarter, we saw continued growth across both generative AI and non-generative AI workloads.

– Amazon CFO Brian Olsavsky on the Q2 2024 earnings call

Consequently, CSPs have also been purchasing CPUs from AMD and Intel to support these non-generative AI workloads. Of the three big cloud players, however, AWS leads the way in offering cloud customers its own in-house CPUs, known as the ‘Graviton’ series, which was introduced in November 2018. By comparison, Azure and Google Cloud entered the space much later, with Microsoft announcing its own ‘Cobalt’ CPU in November 2023, and Google introducing its custom ‘Axion’ CPUs in April 2024.

On the most recent earnings call, Amazon CEO Andy Jassy highlighted how AWS and its customers have benefitted from this ARM-based custom silicon relative to relying on third-party chips:

it’s hard to get that price performance from existing players unless you decide to optimize yourself for what you’re learning from your customers and you push that envelope yourself. And so we built custom silicon in the generalized CPU space with Graviton, which we’re on our fourth model right now. And that has been very successful for customers and for our AWS business as it saves customers about — up to about 30% to 40% price performance versus the other leading x86 processors that they could use.

The key takeaway here is that AWS possesses years of experience in encouraging cloud customers to migrate from cloud solutions powered by CPUs from AMD or Intel to its own in-house processors, learning from first-hand customer feedback and deployments.

For example, in its effort to persuade customers to switch from AMD/Intel CPUs to its own Graviton chips, Amazon created tools and services to ease the migration process, including the AWS Schema Conversion Tool and the AWS Application Migration Service.

This playbook and experience can now feed into Amazon’s push to induce customers to switch from Nvidia’s GPUs to its own custom AI accelerators for generative AI workloads. As discussed in previous articles, Amazon also boasts its own line of AI chips, including ‘Trainium’ for training workloads, and ‘Inferentia’ for inferencing workloads.

The value proposition of using in-house accelerators over third-party semiconductors lies in the deeper vertical integration with AWS’s servers and other infrastructure, yielding performance advancements and cost efficiencies thanks to greater control over the technology stack.

Trainium provides about 50% improvement in terms of price performance relative to any other way of training machine learning models on AWS.

…

With Inferentia, you can get about four times more throughput and ten times lower latency by using Inferentia than anything else available on AWS today.

– Matt Wood, VP of product at AWS

Furthermore, on the last earnings call, CEO Andy Jassy emphasized the growing demand for Amazon’s custom chips from its cloud customers, given the compute-cost benefits:

We have a deep partnership with NVIDIA and the broadest selection of NVIDIA instances available, but we’ve heard loud and clear from customers that they relish better price performance.

It’s why we’ve invested in our own custom silicon in Trainium for training and Inferentia for inference. And the second versions of those chips, with Trainium coming later this year, are very compelling in price performance. We’re seeing significant demand for these chips.

The differences in computing costs can be wide, as per the experience of AI start-up NinjaTech, which opted to use AWS’s custom silicon.

To serve more than a million users a month, NinjaTech’s cloud-services bill at Amazon is about $250,000 a month… running the same AI on Nvidia chips, it would be between $750,000 and $1.2 million
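To put the quoted figures in perspective, the short sketch below works out the implied cost multiple and savings. The dollar amounts come straight from the quote above; the helper names are illustrative, and the calculation assumes the two bills cover a like-for-like workload.

```python
# Monthly cloud bills quoted for NinjaTech, in USD.
AWS_CUSTOM_SILICON_MONTHLY_USD = 250_000
NVIDIA_MONTHLY_USD_LOW = 750_000
NVIDIA_MONTHLY_USD_HIGH = 1_200_000


def cost_multiple(alternative_usd: float, baseline_usd: float) -> float:
    """How many times more expensive the alternative is than the baseline."""
    return alternative_usd / baseline_usd


def savings_fraction(alternative_usd: float, baseline_usd: float) -> float:
    """Share of the alternative's cost saved by choosing the baseline."""
    return 1 - baseline_usd / alternative_usd


low_multiple = cost_multiple(NVIDIA_MONTHLY_USD_LOW, AWS_CUSTOM_SILICON_MONTHLY_USD)
high_multiple = cost_multiple(NVIDIA_MONTHLY_USD_HIGH, AWS_CUSTOM_SILICON_MONTHLY_USD)
low_savings = savings_fraction(NVIDIA_MONTHLY_USD_LOW, AWS_CUSTOM_SILICON_MONTHLY_USD)
high_savings = savings_fraction(NVIDIA_MONTHLY_USD_HIGH, AWS_CUSTOM_SILICON_MONTHLY_USD)

print(f"Nvidia-based bill: {low_multiple:.1f}x to {high_multiple:.1f}x the AWS chip bill")
print(f"Implied savings: {low_savings:.0%} to {high_savings:.0%}")
```

On these numbers, running on Nvidia chips would cost roughly 3.0x to 4.8x as much, implying savings of about 67% to 79% from using Amazon’s custom silicon.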

The significant cost benefits and growing demand for Amazon’s in-house chips should ease the tech giant’s reliance on Nvidia GPUs going forward. That would lower future CapEx pressures and allow more top-line revenue dollars to flow down to the bottom line, improving profitability for shareholders.

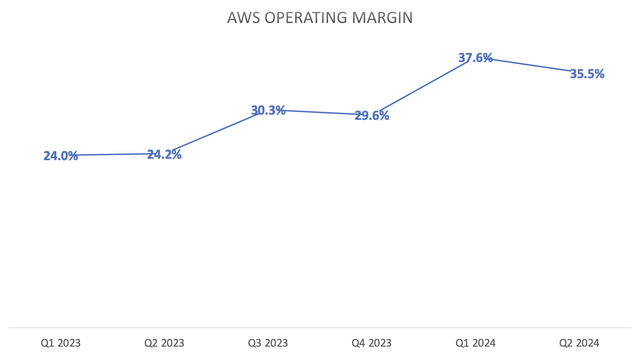

In fact, AWS has already been delivering operating profit margin expansion for the past several quarters now, amid its cost control initiatives.

Nexus Research, data compiled from company filings

In Q2 2024, the AWS operating margin stood at 35.5%, more than triple the 11% operating margin of Google Cloud (which also includes income from Google Workspace). Microsoft does not break out the operating margin for Azure specifically, and instead reports numbers for the ‘Intelligent Cloud’ segment as a whole, which includes both Azure and its traditional on-premises server business. Last quarter, Intelligent Cloud delivered an operating margin of 43%.

Nonetheless, for the largest cloud provider, with an annual revenue run rate of $105 billion, sustaining an operating margin of 35-40% while still growing top-line revenue at around 20% is impressive, and it cements AWS’s position as the top cloud provider with a durable moat around the business.

Risks And Counterarguments To The Bull Case

AWS is undoubtedly making progress in inducing its cloud customer base to use Amazon’s in-house chips over Nvidia’s GPUs. However, as enterprises have become increasingly accustomed to using Nvidia-powered cloud solutions for accelerated workloads, it has become that much harder for AWS to convince them to migrate to its in-house chips, a fact acknowledged by AWS executive Gadi Hutt.

Customers that use Amazon’s custom AI chips include Anthropic, Airbnb, Pinterest and Snap. Amazon offers its cloud-computing customers access to Nvidia chips, but they cost more to use than Amazon’s own AI chips. Even so, it would take time for customers to make the switch, says Hutt.

– The Wall Street Journal (emphasis added)

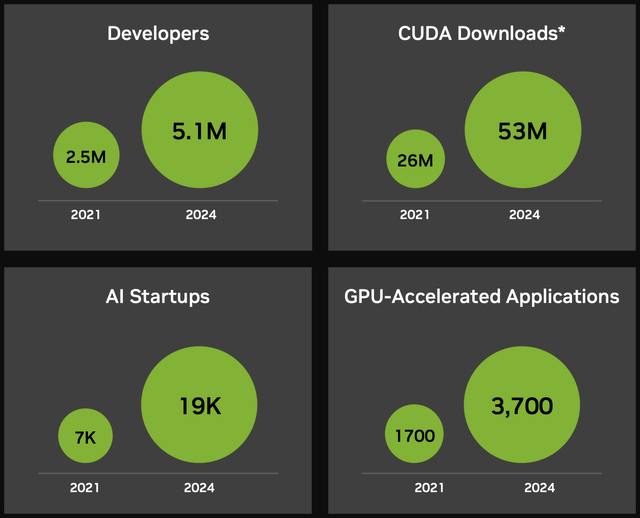

This is testament to the extensive capabilities of Nvidia’s CUDA platform, which is the software layer accompanying its popular GPUs, and is what enables developers to program the chips for specified workloads. Nvidia has been cultivating a software ecosystem around its hardware devices for almost two decades now, boasting 5.1 million developers and 3,700 GPU-accelerated applications that extend the usability of its chips for a variety of workloads across different sectors and industries.

CUDA platform statistics (Nvidia)

So in order for Amazon’s in-house chips to meaningfully challenge Nvidia’s GPUs, AWS will also need to establish a flourishing software ecosystem around its hardware.

The fact that Amazon is already witnessing more and more customers opt for Trainium/Inferentia is certainly a development in the right direction, as it encourages enterprises’ software developers, as well as independent third-party developers, to build out tools, services and applications that continuously enhance the value proposition of its chips.

However, Nvidia’s GPUs aren’t the only chips that Amazon’s custom silicon is competing against, with other cloud rivals also designing their own semiconductors. Microsoft Azure is less of a concern here, given that it announced its own line of ‘Maia Accelerators’ only in November 2023, well behind AWS, which has been offering its Trainium chips since 2021 and introduced its Inferentia chips in 2019.

The real competitive threat is Google Cloud, which started using its own line of Tensor Processing Units (TPUs) for internal workloads in 2015, and began offering TPU-powered cloud instances to third-party enterprises in 2018.

Keep in mind that Google was much better prepared for this AI revolution, being the tech giant that invented the ‘Transformer’ architecture that enabled the creation of generative AI applications. Correspondingly, Google also built competent TPUs that would be capable of processing such complex workloads, and in fact had already been training its own Gemini model on these custom chips internally.

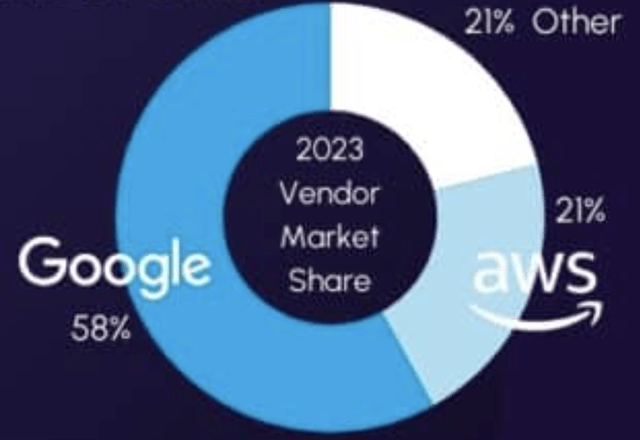

Consequently, Google Cloud is ahead of AWS when it comes to offering in-house silicon to power generative AI workloads, and subsequently also estimated to possess a considerably larger slice of market share (58%) in the custom chips space among cloud providers as per a 2023 report.

Estimated custom cloud AI chips market share (Source: The Futurum Group)

Note: the market share figures above only relate to AI workloads in cloud data centers, not including non-AI workloads.

Furthermore, notable technology giants have also opted for Google’s TPUs over Amazon’s custom chips lately, as discussed in a recent article covering Google Cloud’s strengths:

Consider the fact that Apple and Google are also competitors in the smartphone market, not so much in terms of iPhone vs Google’s Pixel (which holds marginal market share), but more so on the operating system level, iOS vs Android, as well as the imbedded software apps running on these devices.

Keeping this in mind, Apple could have used the Trainium chips offered by Amazon’s AWS for training their AI model, but instead relied on competitor Google’s TPUs, which speaks volumes about the value proposition of Google Cloud’s custom silicon over AWS’s GPUs

Additionally, Salesforce has been a well-publicized AWS customer for years. However, the software giant opted for rival Google Cloud’s TPUs to train its own AI models, and Google Cloud seems to have successfully locked in Salesforce to use subsequent generations of its chips.

We’ve been leveraging Google Cloud TPU v5p for pre-training Salesforce’s foundational models that will serve as the core engine for specialized production use cases, and we’re seeing considerable improvements in our training speed. In fact, Cloud TPU v5p compute outperforms the previous generation TPU v4 by as much as 2X. We also love how seamless and easy the transition has been from Cloud TPU v4 to v5p using JAX.

– Erik Nijkamp, Senior Research Scientist at Salesforce on Google Cloud TPUs (emphasis added)

The point is, AWS has been losing massive deals to Google Cloud due to its competitor’s superior silicon offerings. So while the progress Amazon is making in inducing more and more customers to use its in-house chips is encouraging, the tech giant still faces a tough battle on this front.

Amazon Stock Valuation

AMZN is currently trading at just over 39x forward earnings, while MSFT trades at almost 33x and GOOG hovers around 21x.

From this perspective, Amazon stock may seem too expensive relative to the other major cloud players. However, it is essential to also take into consideration the anticipated pace of earnings growth for each company.

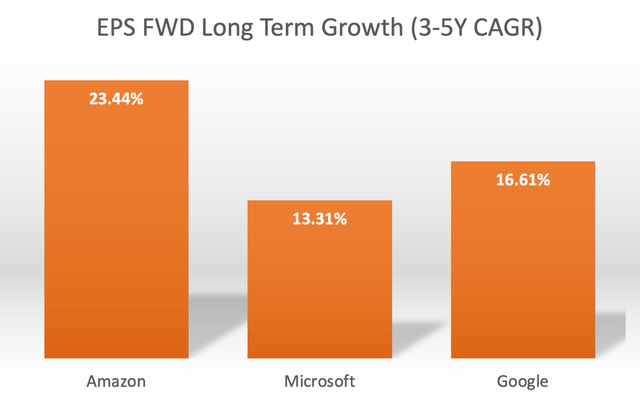

Nexus Research, data compiled from Seeking Alpha

Amazon’s expected EPS FWD Long-Term Growth (3-5Y CAGR) is 23.44%, notably higher than the anticipated earnings growth rates for Microsoft and Google.

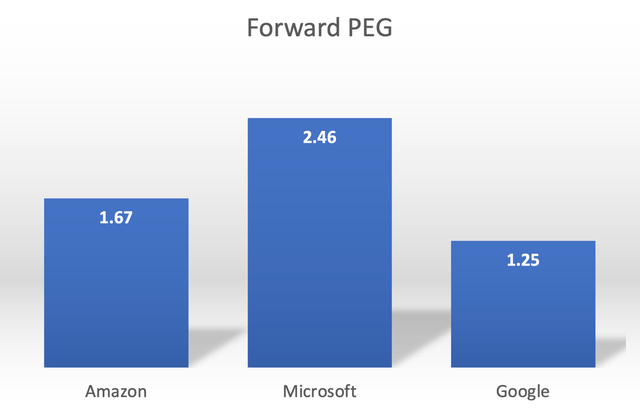

Now, adjusting each stock’s forward PE multiples by the expected EPS growth rates would give us the Forward Price-Earnings-Growth (Forward PEG) ratios.

Nexus Research, data compiled from Seeking Alpha

For context, a Forward PEG ratio of 1x would imply a stock is trading roughly around fair value. While AMZN still remains relatively more expensive than GOOG on a Forward PEG basis, the stock is clearly much cheaper than arch-rival MSFT.
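As a rough illustration of the Forward PEG calculation, the sketch below applies it to the AMZN figures quoted in the text. The forward P/E is rounded to 39 (the article says "just over 39x"), so the output is approximate; the function name is illustrative, and the growth rates for MSFT and GOOG are not stated in the text, so they are left out rather than guessed.

```python
def forward_peg(forward_pe: float, eps_growth_pct: float) -> float:
    """Forward PEG = forward P/E divided by expected EPS growth (in percent)."""
    if eps_growth_pct <= 0:
        # The ratio is not meaningful for flat or shrinking earnings.
        raise ValueError("expected EPS growth must be positive")
    return forward_pe / eps_growth_pct


# AMZN inputs from the article: ~39x forward earnings (rounded, an assumption)
# and 23.44% expected long-term EPS growth (3-5Y CAGR).
amzn_forward_pe = 39.0
amzn_eps_growth_pct = 23.44

amzn_peg = forward_peg(amzn_forward_pe, amzn_eps_growth_pct)
print(f"AMZN Forward PEG: {amzn_peg:.2f}x")  # AMZN Forward PEG: 1.66x
```

A result of roughly 1.66x sits above the 1x fair-value reference point, which is why the comparison with peers on the same basis matters more than the absolute level.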

Microsoft’s OpenAI edge is fading away, while Amazon is savvily capitalizing on the growing preference for open-source models. Simultaneously, AWS holds a sizeable lead over Azure in offering in-house chips, which should make it less dependent on Nvidia’s GPUs going forward and create more room for profit margin expansion.

AWS’s promising growth prospects sustain the ‘buy’ rating on the stock.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
