Summary:

- Amazon stock is on track to end the year up around 50%, and the bull run should continue into 2025 thanks to its powerful cloud segment.

- Amid the ongoing AI revolution, AWS remains the main driver of the stock price, as "picks and shovels" providers are the earliest beneficiaries of the growing demand for computing power.

- The line-up of incredible announcements at last week's "AWS re:Invent" conference has fortified the bull case for Amazon stock.


About one year ago, as we were approaching the end of 2023, I upgraded Amazon (NASDAQ:AMZN) stock to a "buy" rating, given early indicators that AWS was strengthening its competitive position in the AI race. AMZN is on track to end 2024 around 50% higher, as the tech giant did indeed gain its footing in the AI race this year.

In the previous article on Amazon, we delved into some of AWS' key strategies to fight back against Microsoft's (MSFT) Azure and Google Cloud (GOOG)(GOOGL) in this AI race. These included making it easier to switch between models on Amazon Bedrock, as well as leveraging its ability to offer more cost-effective cloud computing solutions through its suite of in-house AI chips.

Then, last week, Amazon held its "AWS re:Invent" conference from December 2 to 6, where it made some ground-breaking announcements that not only bolstered AWS' competitive positioning but also addressed some key risks to the bullish narrative that I have been highlighting over the past year. So I thought I should give an update on Amazon stock as we head into 2025.

New models, new chips

Firstly, a key risk I have been highlighting in the past several articles on Amazon is that it is the only major cloud provider without its own proprietary, industry-leading AI model available exclusively on AWS. Sure, it tried to make some headway with its Titan models in April 2023, but those barely moved the needle compared to the popularity of OpenAI's GPT series models, to which Microsoft Azure has exclusive access, and Google Cloud's own Gemini models.

Now, 18 months later, Amazon has leveraged its internal AI deployment experience and knowledge across its various business lines to design its latest multimodal AI models, the Nova series.

“Inside Amazon, we have about 1,000 generative AI applications in motion, and we’ve had a bird’s-eye view of what application builders are still grappling with,” said Rohit Prasad, SVP of Amazon Artificial General Intelligence. “Our new Amazon Nova models are intended to help with these challenges for internal and external builders, and provide compelling intelligence and content generation while also delivering meaningful progress on latency, cost-effectiveness, customization, Retrieval Augmented Generation, and agentic capabilities.”

Having entered the game earlier, both Microsoft Azure and Google Cloud had been architecting their AI systems and platforms so that they run their proprietary AI models as cost-efficiently as possible. Google Cloud in particular had the lead here, with its Vertex AI platform and Tensor Processing Units (TPUs) optimized to run its Gemini models, resulting in performance enhancements and cost savings that could then be passed on to cloud customers to gain market share over AWS.

However, Amazon is finally catching up on this front too.

“In addition to better price performance from AWS’ custom silicon, the company introduced the Amazon Nova family of foundation models which operate at a lower cost on Bedrock relative to leading peers,” Devitt said. “Amazon Nova Micro, Amazon Nova Lite, and Amazon Nova Pro are at least 75% less expensive than the best performing models within their respective intelligence classes and run faster in Bedrock than competing models.”

The exclusivity of these models, which yield both performance and cost advantages on AWS, should indeed enable Amazon to defend its cloud market share from rivals. According to data from Synergy Research Group, AWS maintained a 32% market share in Q3 2024, well ahead of both Microsoft Azure and Google Cloud, which held 20% and 13%, respectively.

Furthermore, AWS also introduced its latest Trainium3 chips, designed for training larger AI models, although they will only become available in late 2025. But perhaps the biggest custom chip-related announcement came from Apple (AAPL) director Benoit Dupin, who touted Apple's use of Amazon's in-house CPUs and AI accelerators for its own AI workloads.

He noted that Apple has been using AWS for over a decade in its products and services including iPads, Siri, and App Store.

The company has also used AWS’ Inferentia and Graviton chips for search services, which have led to around 40% efficiency gains in machine learning workloads.

Apple is also exploring using Amazon’s new AI chip Trainium 2 for pre-training its models, and expects to see up to 50% improvement in efficiency.

– Seeking Alpha News reporting on comments by Benoit Dupin, Apple’s senior director of machine learning, at the AWS re:Invent conference

For context, "Graviton" refers to Amazon's custom CPUs, while Trainium and Inferentia refer to its custom AI accelerators (Amazon's alternatives to GPUs) for training AI models and running inference on them, respectively.

Now, this is a big home run for AWS, as it validates the in-house chips as a viable alternative to Nvidia's (NVDA) products. In the previous few articles discussing the custom chips race between the major cloud providers, I had highlighted that Google still remained ahead of Amazon on this front, which was affirmed by the revelation in July 2024 that Apple had used Google Cloud's TPUs to train its AI models for Apple Intelligence.

But now that Apple has confirmed that it is also using AWS' custom silicon for its AI workloads, this not only subdues the competitive threat from Google Cloud but also cements AWS' wide lead over Microsoft Azure on this front. It has been about one year since Azure introduced its own custom AI chip, the "Maia" accelerator, yet the cloud rival has still not been able to boast a list of customers deploying it for AI workloads, which reflects just how difficult it is to succeed in this area. This makes AWS' success in the custom chips space all the more notable.

Apple's use of Amazon's in-house silicon should help the cloud giant encourage more enterprise customers to opt for its custom chips over Nvidia's and AMD's GPUs. Even more importantly, it should spur the development of a software ecosystem around its in-house chips.

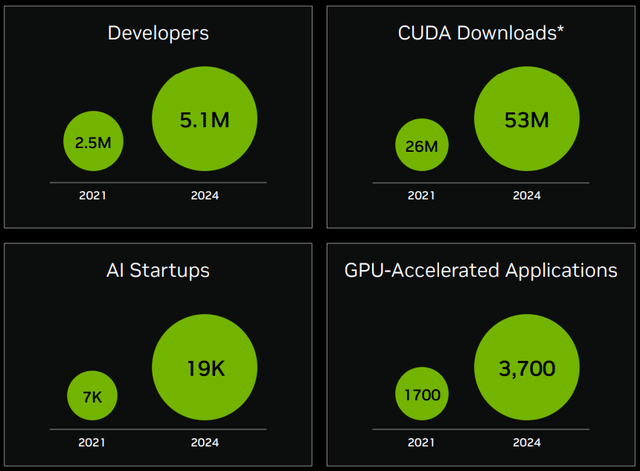

Moreover, the reason why Nvidia GPUs are so highly performant and sought-after is due to the accompanying CUDA software package that greatly extend the capabilities of its silicon for various types of workloads across industries.

Nvidia’s software ecosystem statistics (Nvidia)

Hence, to enhance the competitiveness of their custom chips, AWS and Google Cloud have also been striving to encourage third-party developers to build software that accentuates the value proposition of these chips.

This is why Amazon has been striking special deals with companies like Anthropic, whereby the start-up is contractually required to use AWS' in-house chips in exchange for large investments and cloud computing credits. AWS is essentially striving to induce greater software development around its own chips.

So now, in addition to AI start-ups, Apple's adoption of Amazon's Trainium/Inferentia series could help spur the much-needed network effect around these chips. More third-party developers would be enticed to build software around the custom chips that broadens their usability. That in turn would attract even more customers to opt for AWS' in-house silicon, which would keep encouraging third-party developers to build even more monetizable software extensions, and so the virtuous cycle would continue.

All in all, this should reduce reliance on Nvidia’s GPUs going forward, allowing more AWS revenue to flow down to the bottom line, boosting earnings per share for shareholders.

AWS’ new masterstroke strategy

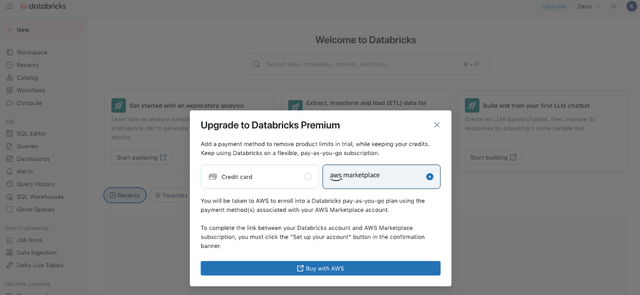

The progress on the AI models and custom chips fronts certainly bolsters the bull case for AMZN, but AWS took its "offense" strategy one step further last week with the introduction of "Buy with AWS".

This approach is akin to Amazon's "Buy with Prime" offering on the e-commerce side of the business, which we covered in depth in a previous article. "Buy with Prime" enables third-party merchants (web stores outside of Amazon.com) to give customers the option to buy products with their Amazon Prime membership benefits (including cheap and fast delivery).

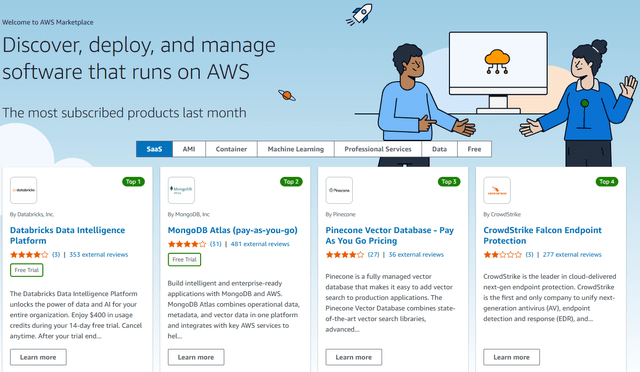

Now, with regard to "Buy with AWS", it is important to first understand that each of the major cloud providers also runs a marketplace where third-party software vendors can sell their services to that provider's cloud customer base.

The easy availability and integration of such third-party software services, such as data management & analytics offerings from Databricks and MongoDB, or cybersecurity services from CrowdStrike and Fortinet, help augment the value proposition of cloud platforms.

Amid the ongoing AI revolution, the competition between the cloud providers to attract more third-party software vendors to their platforms has intensified, as the hyperscalers try to ensure that the latest generative AI innovations from across the software industry are available through their platforms.

Note that many of these software vendors will be listed across the marketplaces of all the major cloud providers, not just one. This broad availability and lack of exclusivity can blunt any "differentiator" advantages for the cloud providers.

Consequently, ease of integration between a cloud provider and a third-party software vendor has become a big competitive factor. This is exactly what Amazon's "Buy with AWS" targets: software vendors listed on the AWS Marketplace will also be able to show potential customers a "Buy with AWS" button on their own websites, outside of AWS.

Example of “Buy with AWS” button on Databricks website (AWS blog)

This is an absolute genius masterstroke, and here's why. When corporate customers are considering subscribing to new software services that meet their business needs in the era of AI, the prominent visibility of AWS as a unified purchase option should induce enterprises to integrate the new service with their AWS workloads before they even consider integrating through Microsoft Azure or Google Cloud. Or, if they are not yet cloud customers, it keeps AWS top of mind when they consider migrating to the cloud, or adopting a multi-cloud approach beyond Azure and Google Cloud.

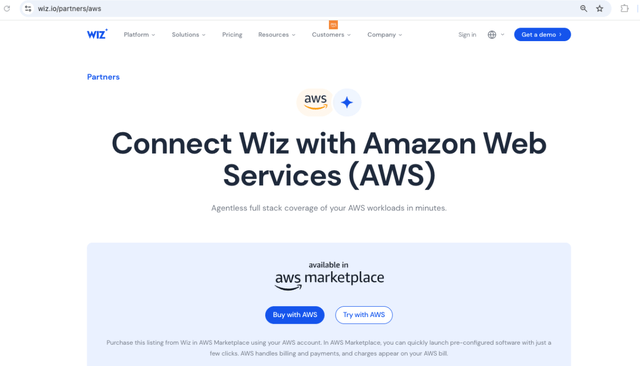

Example of “Buy with AWS” on Wiz website (AWS blog)

Essentially, it should lead to greater stickiness of AWS, as customers are more likely to stay loyal to a platform where they have most of their third-party software vendor linkages, and subsequently where most of their workloads reside.

It’s a savvy move to sustain AWS’ market share amid intensifying competition from its top two rivals.

We have buyers who are not as familiar with us who are very familiar with AWS … It validates Workday as a potential provider.

– Matthew Brandt, Workday’s senior vice president of global partners in an interview with CNBC

Amazon is essentially lending AWS' greater brand recognition to smaller software vendors that want to sell their services to more and more enterprises. That is one of the key motives behind third-party companies wanting to offer the option to "Buy with AWS" on their websites.

Now, certain investors would argue that any advantages through this strategy will not last because Microsoft Azure and Google Cloud will certainly follow suit and offer their own customized checkout options on third-party software vendors’ websites.

However, keep in mind that it's not just a case of creating a button that can be added to third-party websites. Arranging the back-end integrations and setting up the applicable contract agreements can take time and effort.

So while Azure and Google Cloud work to catch up on this front, AWS is beautifully set up to entangle customers more deeply into its ecosystem as these enterprises seek new software services going into 2025.

In fact, the market is already looking forward to 2025 as a year of “AI agents”, and more importantly, as a year when companies will start to increasingly adopt new-age, AI-powered applications, and AWS is now strongly positioned to capitalize on this potential wave.

Risks

Amazon is undoubtedly making commendable advancements in capitalizing on the AI revolution, though it is also important to consider the forces working against AWS' progress.

Building powerful, large-scale clusters of numerous chips has become a key frontier in the AI race to enable faster and more effective training of AI models. At the AWS re:Invent conference, the tech giant boasted how it is making progress in this arena.

Trn2 UltraServers—a completely new EC2 offering—use NeuronLink interconnect to connect four Trn2 servers together into one giant server. With new Trn2 UltraServers, customers can scale up their generative AI workloads across 64 Trainium2 chips.

– Amazon

However, combining 64 Trainium2 chips is a drop in the bucket relative to the size of the clusters that Nvidia is building in partnership with customers like Oracle.

Oracle announced the world’s first Zettascale AI Cloud computing clusters that can scale to over 131,000 Blackwell GPUs to help enterprises train and deploy some of the most demanding next-generation AI models.

– CEO Jensen Huang, Nvidia Q3 FY2025 earnings call (emphasis added)

So the fact remains that Nvidia's technology is still the best option for training increasingly larger and more complex AI models.

Furthermore, while the deployment of Amazon’s custom silicon by Apple and AI start-ups should help cultivate a growing software ecosystem, note that catching up to Nvidia on this front remains a steep uphill battle, with the semiconductor giant boasting an ecosystem of 5.1 million developers for its CUDA software platform.

These third-party developers are continuously advancing the capabilities of Nvidia’s GPUs, including on the inference side.

Our large installed base and rich software ecosystem encourage developers to optimize for NVIDIA and deliver continued performance and TCO improvements. Rapid advancements in NVIDIA's software algorithms boosted Hopper inference throughput by an incredible 5 times in one year and cut time to first token by 5 times. Our upcoming release of NVIDIA NIM will boost Hopper inference performance by an additional 2.4 times.

– CEO Jensen Huang, Nvidia Q3 FY2025 earnings call

Given Nvidia's strengthening lead, underpinned by continuous innovation, AWS will very likely have to keep purchasing chips from Nvidia for the foreseeable future as it builds out new data centers for the AI age. This means more large-scale capex cutting into profit margins in the years ahead, until Amazon's own custom silicon gains wider adoption across its cloud customer base.

The investment case for Amazon

AMZN is currently trading at around 44x forward earnings. Now, even though the Forward PE ratio tends to be one of the most popular valuation metrics, I prefer the Forward Price-Earnings-Growth (Forward PEG) ratio, which divides a company's Forward PE multiple by its expected earnings growth rate.

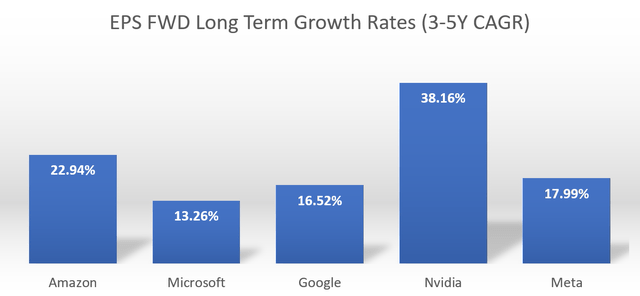

Nexus Research, data compiled from Seeking Alpha

Among the mega-cap tech companies that are currently expected to be the biggest winners of generative AI, AMZN has the second-highest projected EPS growth rate at 22.95%, behind only NVDA, which remains the king of AI.

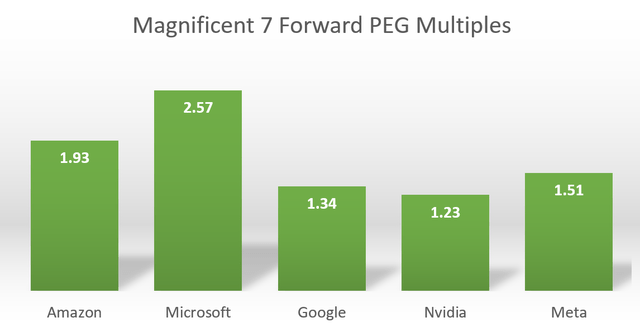

Now, when we adjust each company’s Forward PE ratio by their anticipated EPS growth rates, we obtain the following Forward PEG multiples.

Nexus Research, data compiled from Seeking Alpha
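To make the Forward PEG adjustment concrete, here is a minimal Python sketch that divides a Forward PE multiple by a projected EPS growth rate expressed in percent, which is the usual convention. The helper names `forward_peg` and `implied_forward_pe` are hypothetical, and the inputs are the rounded AMZN figures quoted above (around 44x forward earnings and 22.95% projected EPS growth). Using the rounded 44x gives roughly 1.92x, slightly below the 1.93x shown in the table, which suggests the unrounded Forward PE is a touch above 44x.

```python
def forward_peg(forward_pe: float, eps_growth_pct: float) -> float:
    """Return Forward PE divided by projected EPS growth (in percent).

    Hypothetical helper for illustration. PEG is not meaningful when
    projected growth is zero or negative, so those inputs are rejected.
    """
    if eps_growth_pct <= 0:
        raise ValueError("Forward PEG is undefined for non-positive growth")
    return forward_pe / eps_growth_pct


def implied_forward_pe(peg: float, eps_growth_pct: float) -> float:
    """Back out the Forward PE implied by a given PEG and growth rate."""
    return peg * eps_growth_pct


if __name__ == "__main__":
    # Rounded AMZN inputs quoted in the article.
    amzn_peg = forward_peg(44.0, 22.95)
    print(f"AMZN Forward PEG: {amzn_peg:.2f}x")  # prints "AMZN Forward PEG: 1.92x"

    # Forward PE consistent with the 1.93x PEG shown in the table.
    pe = implied_forward_pe(1.93, 22.95)
    print(f"Implied Forward PE: {pe:.1f}x")  # prints "Implied Forward PE: 44.3x"
```

A PEG near 1x is the rough "fair value" benchmark discussed next; the ratio is only as reliable as the growth estimate in its denominator.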

A Forward PEG multiple of 1x would imply that a stock is trading around its fair value, but it’s not unusual for popular tech stocks to trade at rich premiums to their fair value.

NVDA remains one of the cheapest AI winners thanks to its higher projected EPS growth rate, as other tech companies are expected to continue purchasing its highly sought-after chips over the next few years.

Now, in terms of the major cloud players, MSFT remains the most expensive, while GOOG is the cheapest. However, keep in mind that Alphabet shares are trading at such a discount relative to the other hyperscalers because of serious concerns around its core Search business amid intensifying antitrust scrutiny.

Now, AMZN shares are certainly not cheap at 1.93x Forward PEG, but they are still valued more attractively than MSFT at 2.57x. At the beginning of this AI revolution, Microsoft Azure was perceived to be in the leading position thanks to its opportune partnership with OpenAI.

However, considering the substantial progress AWS has been making in terms of capitalizing on the AI revolution, which we covered throughout this article, and given the valuation discrepancy between the top two cloud companies, AMZN shares continue to be more appealing than MSFT.

Amazon stock sustains a “buy” rating.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.