Summary:

- AI was a bright spot in the latest corporate earnings season, yet reference to the technology was missing entirely during Amazon’s latest earnings update.

- Instead, management focused on optimization, which aligned with damage control efforts amid ongoing company-specific and macro challenges to cloud and e-commerce.

- The following analysis will discuss all that Amazon is doing in AI, and why optimization will be complementary to those efforts, making the current focus a key long-term competitive advantage.


AI has been at the heart of the message from almost all tech companies, big and small, as many rush to showcase their capabilities in a field that has gone viral in the past several months. Yet reference to the technology was absent from Amazon’s (NASDAQ:AMZN) latest earnings call, despite its market-leading footprint in the public cloud-computing services critical to keeping AI developments and use cases, like the many generative AI-enabled chatbots available today, online. Instead, the tech giant has doubled down on optimization, for both its customers and its internal operations, to compensate for the deceleration observed across AWS and diminishing margins in Amazon.com e-commerce in the face of deteriorating macroeconomic conditions and intensifying competition.

But Amazon has not missed a beat in AI. While mega-cap peers like Microsoft (MSFT), Google (GOOG / GOOGL) and Meta Platforms (META) have been capitalizing on the momentum with announcements of big strides in generative AI, Amazon remains well invested in related future growth opportunities. AI (alongside other bright spots like double-digit growth in digital advertising amid the cyclical industry downturn) did not get the “air-time” it deserved during Amazon’s latest earnings call, which was dominated by the outline of near-term challenges and related mitigation efforts in AWS and e-commerce. The company’s foray in the arena remains competitive and critical nonetheless.

Taken together with its AI investments, Amazon’s increased focus on restoring margin expansion continues to set up attractive longer-term upside potential from current levels. That focus is likely to have a more evident impact on earnings in the second half of the year via ongoing efforts such as cloud optimization and cost-cutting initiatives across all segments, as well as the eventual return of cyclical tailwinds. While we remain incrementally cautious on near-term weakness in the stock due to acute macroeconomic headwinds still facing the bulk of Amazon’s core operations, ongoing optimization efforts remain favourable to the company’s free cash flow generation and will likely reinforce the accrual of value at the underlying business through the year, which should propel renewed upside when market confidence returns.

Amazon and AI

Generative AI has been the talk of the town in recent months, with the acronym “AI” mentioned more than 400 times in the latest earnings season (or 200+ times in tech), more than doubling the volume of related references observed in past corporate discussions. But Amazon, the market leader in the cloud-computing services critical to training and deploying large language models and other AI workloads behind use cases like chatbots, refrained from mentioning the technology: AI, or artificial intelligence, was not mentioned even once during its latest fourth-quarter earnings call.

Instead, Amazon traded in AI for optimization. Variations of the term – which refers to the growing industry-wide chorus on “doing more with less” and preserving profit margins amid tightening financial conditions – were mentioned eight times, with expanded discussion scattered throughout the latest earnings update:

Enterprise customers continued their multi-decade shift to the cloud while working closely with our AWS teams to thoughtfully identify opportunities to reduce costs and optimize their work…Starting back in the middle of the third quarter of 2022, we saw our year-over-year growth rates slow as enterprises of all sizes evaluated ways to optimize their cloud spending in response to the tough macroeconomic conditions. As expected, these optimization efforts continued into the fourth quarter…Our customers are looking for ways to save money, and we spend a lot of our time trying to help them do so…As we look ahead, we expect these optimization efforts will continue to be a headwind to AWS growth in at least the next couple of quarters.

Source: Amazon 4Q22 Earnings Call Transcript

But a deeper dive into AWS’ focus in recent years reveals that the investments and efforts going into AI and optimization are not mutually exclusive at the company. Instead, AI has long been pervasively applied across Amazon’s core businesses, not just AWS, and has played a critical role in improving cost and performance for both its internal operations and the enterprise cloud spending segment.

Alexa

Alexa, Amazon’s well-known smart assistant, marks one of the earliest applications of generative AI in day-to-day settings and interactions. Since its debut as a smart-home assistant via devices like the Echo smart speakers, Alexa’s presence has expanded to in-vehicle infotainment systems as well as home security devices like Ring. Despite Amazon’s devices unit being “a major target of the company’s recent layoffs”, Alexa engagement with customers continues to increase at a double-digit rate of as much as 30% in the last 12 months. This led the unit’s head, Dave Limp, to reaffirm earlier this year his team’s commitment to further building out Amazon’s AI foray via implementation in Alexa and related devices.

The continued build-out of Alexa’s AI-enabled capabilities – especially considering the recent awareness generated over generative AI-enabled conversational services’ application in day-to-day interactions – remains a critical vertical in our view in helping users stay connected with Amazon while also driving greater adjacent growth across its ecosystem of offerings over the longer-term. This is consistent with Amazon’s recent introduction of “Create with Alexa”, an AI-enabled tool built into the assistant that can help parents and children create AI-generated stories with a unique narrative, graphics, and music.

This is just one of many day-to-day interactive use cases of the large language models (“LLMs”) that power Alexa, as well as chatbots like OpenAI’s ChatGPT. Further underscoring Amazon’s competitive positioning in the AI arms race via its retail product Alexa is the company’s development of the “Alexa Teacher Model 20B” (“AlexaTM 20B”) last year. Built with 20 billion parameters, the LLM can improve Alexa’s current processing and dialogue capabilities by “supporting multiple languages, including Arabic, Hindi, Japanese, Tamil and Spanish”. As discussed in our most recent coverage on LLMs, a larger model does not necessarily mean greater effectiveness. In AlexaTM 20B’s case, the LLM has demonstrated outperformance on “linguistic tasks” such as summarizing and translating texts against significantly larger rival models such as GPT-3.5, which currently powers ChatGPT, and Google’s PaLM. AlexaTM 20B, currently Alexa AI’s largest model, is available for customer access via “SageMaker JumpStart”, AWS’ hub of open-source models used to train different AI/ML-enabled use cases (further discussed in later sections).

Amazon.com

Similar to how Google’s BERT has been working behind the scenes for years to optimize billions of daily search results, Amazon has also leveraged LLMs to facilitate multi-modal search at scale on its e-commerce platform. Many of the models powering the AI-enabled search functions on Amazon.com stem from Amazon’s “Search M5” unit.

This body of researchers has long been developing, testing and deploying ML models critical to powering search on Amazon.com and benefiting customers on the platform. AWS also plays a critical role in the work of Search M5. Having to “simultaneously train thousands of models with more than 200 million parameters each”, Search M5 relies heavily on “Amazon EC2”, the public cloud compute service on which many depend to scale their workloads and IT infrastructure off-premises. Other AWS services leveraged by Search M5 include Amazon S3, Amazon FSx, and AWS Batch, which together help “store, launch, run, and scale feature-rich and high-performing file systems in the cloud”. Not only does Search M5 highlight the prevalence of AI integration across Amazon’s offerings to its day-to-day customers, but it also underscores the flexible and reliable technology offered by AWS in enabling AI development and integration across the enterprise cloud spending segment.

AWS

Based on the foregoing analysis, it is clear that not only is Amazon competitive in the development of LLMs and other complex models that power a multitude of AI-enabled applications, but its cloud-computing arm also plays a critical role in supporting the development and deployment of said technologies. In addition to Amazon S3, Amazon FSx, and AWS Batch discussed in the earlier section, which are cloud-based data storage, management and launch solutions critical in scaling the development of ML models and related applications, AWS also offers Amazon SageMaker, AWS Trainium, and AWS Inferentia, which are all specifically designed to facilitate “building, training, and deployment” of ML models:

- Amazon SageMaker: As mentioned in the earlier section, SageMaker provides a hub of open-source ML models and resources that customers can access for building, training and deployment “into a production-ready hosted environment”. The service is not limited to LLMs, unlike rival Microsoft’s “Azure OpenAI Service” (discussed here) or Meta Platforms’ “LLaMA” (discussed here), and can instead facilitate the entire process from building to training and deployment of any ML model.

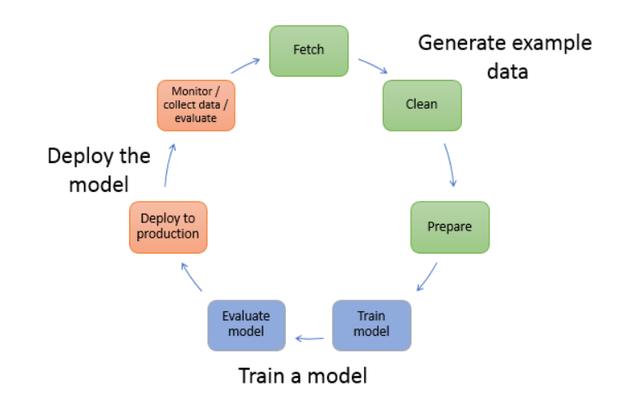

ML model workflow (docs.aws.amazon.com)

- AWS Trainium: AWS Trainium is hardware, rather than cloud-based software, designed by Amazon’s cloud-computing unit specifically for “accelerating deep learning training”. For instance, the Amazon EC2 Trn1 instance “deploys up to 16 AWS Trainium accelerators to deliver a high-performance, low-cost solution for [deep learning] training in the cloud”: there is a smaller instance type that runs on a single Trainium chip, and a larger instance type that runs on 16 Trainium chips. Trainium-based EC2 instances can reduce training costs by as much as 50% compared against rival accelerators like Nvidia’s (NVDA) GPUs for certain models. This is because Trainium can facilitate “analysis, experimentation and exploration on the cheaper single-chip instance”. The same endeavour run on the Amazon EC2 P4d instances, which deploy eight Nvidia A100 GPUs, would be comparatively more expensive, given that a single Trainium chip would have sufficed for the job. This makes AWS Trainium a key factor in ensuring Amazon offers not just one, but a wide range of efficient cloud options fitted for every kind of DL / ML model training.

- AWS Inferentia: Similar to Trainium, Inferentia is hardware specifically designed to optimize costs and performance for DL applications, in this case for inference. Inferentia accelerators currently power the Amazon EC2 Inf1 instances, capable of delivering “up to 2.3x higher throughput and up to 70% lower cost per inference than comparable GPU-based Amazon EC2 instances”. The newest Inferentia2 accelerators can deliver “up to 4x higher throughput and up to 10x lower latency” compared to their predecessor, which complements increasing cloud optimization demands from customers as AI / ML models become increasingly complex. Training on Trainium and inference on Inferentia can both be handled via AWS Neuron, a software development kit (“SDK”) that can “help developers deploy models” on EC2 instances powered by either chip without any major adjustments to existing workflows.

- Graviton3: This is Amazon’s latest generation of its in-house designed Arm-based CPU. Graviton3-based EC2 instances are optimized for ML workloads, offering “3x better performance compared to AWS Graviton2 processors”.

Taken together, AWS remains a powerhouse of critical services and hardware needed to enable the development and deployment of high-performance generative AI solutions in a cost-optimized and reliable environment, which brings us to the next point in discussing why Amazon’s focus on cloud optimization and recent momentum in AI are not mutually exclusive.

Optimization and AI

Optimization and AI development are not mutually exclusive, but rather complementary to one another. According to founder and CEO of OpenAI, Sam Altman, each prompt on ChatGPT currently costs “single-digit cents” on average, which makes further optimization of configurations and scale of use critical to ensuring the sustainability of the service. Meanwhile, Latitude, an early adopter of OpenAI’s GPT LLMs in its game “AI Dungeon”, disclosed it was “spending nearly $200,000 a month on OpenAI’s so-called generative AI software and AWS in order to keep up with millions of user queries it needed to process each day” during the game’s peak demand in 2021. Even with its recent switch to comparable generative AI software offered by start-up “AI21 Labs”, Latitude’s monthly bill for sustaining the technology behind its game remains in the $100,000 range, making it critical to start charging players a subscription fee “for more advanced AI features to help reduce the cost”. Microsoft’s recent implementation of ChatGPT into its Bing search engine is also estimated to require “at least $4 billion of infrastructure to serve responses” to all users, while its continued build-out of the computing power needed to support OpenAI’s developments is likely to have cost north of “several hundred million dollars” on top of its existing multi-billion-dollar investment into the AI company.
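To see why per-query costs matter at scale, the figures above can be run through some rough arithmetic. This is a sketch only: the function names, the 30-day month, the two-million-queries-per-day volume (the source says only “millions”), and the five-cent prompt cost with one million prompts per day (a point inside the “single-digit cents” range) are our assumptions, not reported figures.

```python
DAYS_PER_MONTH = 30  # assumption: simple 30-day month


def cost_per_query(monthly_bill_usd: float, queries_per_day: float) -> float:
    """Average cost of one query implied by a monthly bill and daily volume."""
    return monthly_bill_usd / (queries_per_day * DAYS_PER_MONTH)


def monthly_bill(cost_per_query_usd: float, queries_per_day: float) -> float:
    """Monthly bill implied by a per-query cost and a daily volume."""
    return cost_per_query_usd * queries_per_day * DAYS_PER_MONTH


# Latitude's reported peak bill; the daily query volume is a hypothetical placeholder.
latitude_unit_cost = cost_per_query(200_000, queries_per_day=2_000_000)

# Hypothetical chatbot: 5 cents per prompt, 1 million prompts per day.
chatbot_bill = monthly_bill(0.05, queries_per_day=1_000_000)

print(f"Implied cost per query: ${latitude_unit_cost:.4f}")
print(f"Implied monthly bill: ${chatbot_bill:,.0f}")
```

Under these placeholder inputs, a $200,000 monthly bill works out to a fraction of a cent per query, while a few cents per prompt scales into a seven-figure monthly bill, which is why shaving unit costs at every layer matters.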

Despite the hype and momentum around AI-enabled conversational services in recent months, they remain costly not only to develop, but also to maintain. As discussed in the earlier section, neither the computing power required to run complex LLMs nor the hardware behind it is cheap.

The high cost of training and “inference” — actually running — large language models is a structural cost that differs from previous computing booms. Even when the software is built, or trained, it still requires a huge amount of computing power to run large language models because they do billions of calculations every time they return a response to a prompt. By comparison, serving web apps or pages requires much less calculation. These calculations also require specialized hardware…Most training and inference now takes place on graphics processors, or GPUs, which were initially intended for 3D gaming, but have become the standard for AI applications because they can do many simple calculations simultaneously. Nvidia makes most of the GPUs for the AI industry, and its primary data center workhorse chip costs $10,000.

Source: CNBC News

The only way to achieve scale is if every component across the supply chain – from chipmakers to hyperscalers and the LLM application operators – can optimize efficiency from both a cost and performance perspective. And Amazon is addressing the exact demand across the board – while it continues to prioritize optimization across its core businesses, said efforts continue to complement both the industry’s and its own opportunities in AI developments by ensuring their sustainability over the longer-term.

The Bottom Line

The impending macroeconomic challenges are not exclusive to Amazon, despite its core e-commerce and cloud-computing businesses’ direct exposure to their impact. Although growth is likely to remain subdued this year due to the increasingly prominent reality of deteriorating macroeconomic conditions, management’s continued efforts in bolstering profit margins and investing in longer-term opportunities that address customers’ growing demand for optimization will likely be key to restoring upside potential once market confidence returns. Macro challenges aside, longer-term secular growth opportunities remain, with “90% to 95% of the global IT spend [remaining] on-premises” and yet to migrate to the cloud, and more than 80% of commerce still offline, both of which Amazon’s core commerce and cloud-computing moats are poised to benefit from further. Continued efforts in optimizing its offerings for customers in both e-commerce and cloud are expected to be a key competitive advantage in ensuring sustained growth and demand despite both units’ sprawling market share.

From a valuation perspective, Amazon appears to be trading at a discount on both a relative and absolute basis. The stock currently trades at approximately 11x estimated EBITDA, which is below that of peers with similar growth and profitability profiles. Admittedly, much of the discount is reflective of acute near-term macroeconomic challenges facing Amazon, as well as other company-specific headwinds such as ongoing inefficiencies in productivity that have likely been further complicated by wage inflation struggles.

But considering Amazon’s moats in core secular growth trends and the incremental competitive advantage in optimization discussed in the foregoing analysis, even bringing the stock’s valuation in line with peers at about 15x (+35% upside potential, or $130 apiece) would be sufficient to account for near-term macro and company-specific challenges in our view, with room for further upside when cyclical tailwinds return. We believe the current market set-up continues to create entry opportunities that will make Amazon an attractive buy-and-hold long-term investment to capitalize on its “unmatched scale and advantage” in key secular verticals spanning e-commerce, cloud, and potentially digital ads and AI within the foreseeable future.
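The re-rating arithmetic behind that upside figure can be checked with a short sketch. It assumes the share price scales one-for-one with the EBITDA multiple, which ignores net debt and any change in EBITDA estimates. The function names are ours, and the inputs are the rounded figures quoted above.

```python
def upside_from_rerating(current_multiple: float, target_multiple: float) -> float:
    """Fractional price upside if the multiple re-rates and EBITDA is unchanged."""
    return target_multiple / current_multiple - 1.0


def implied_current_price(target_price: float, upside: float) -> float:
    """Share price today implied by a target price and a fractional upside."""
    return target_price / (1.0 + upside)


# Inputs from the text: roughly 11x today, about 15x for peers, $130 target, +35% upside.
upside = upside_from_rerating(current_multiple=11.0, target_multiple=15.0)
price_now = implied_current_price(target_price=130.0, upside=0.35)

print(f"Upside from 11x to 15x: {upside:.1%}")
print(f"Implied current share price: ${price_now:.2f}")
```

Moving from exactly 11x to 15x gives an upside of about 36%, consistent with the rounded +35% given that the current multiple is only “approximately” 11x, and a $130 target at +35% implies a share price of about $96 today.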

Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
