Summary:

- Certain investors believe that AWS is behind Microsoft Azure and Google Cloud in the AI race, but this pessimism seems overblown.

- Amazon has several strengths to leverage to stay competitive in the AI race.

- Encouraging customers to migrate from cloud services powered by Nvidia’s chips to those powered by Amazon’s own chips will be a challenge.


Amazon’s AWS pioneered cloud computing, which helped it cement its leadership position in the cloud industry. However, with the onset of the generative AI revolution, rivals such as Microsoft Azure and Google Cloud are catching up on the strength of their powerful large language models, and industry observers fear that AWS may continue losing market share to these competitors. Amazon is fighting back to defend its leadership position: AWS has several strengths to leverage, but it also faces numerous challenges in defending its crown. Nexus Research assigns a ‘hold’ rating to the stock.

Microsoft Azure and Google Cloud currently offer the leading large language models, GPT-4 and PaLM 2, respectively. Amazon has introduced its own large language model, Titan, but its size and training method remain undisclosed.

Nevertheless, Amazon is striving to catch up not only by relying on its own model but also by offering third-party models through its Bedrock AI service, including models from “companies like Anthropic, Stability AI, AI21 Labs, Cohere”. Keep in mind, though, that Microsoft Azure and Google Cloud will also be offering third-party models, so this strategy does not necessarily give AWS a competitive edge.

Microsoft Azure is considered the key competitive threat to AWS after the software giant struck gold with its investment in OpenAI. However, unlike AWS and Google Cloud, Microsoft has not yet developed its own chips for cloud computing and is heavily reliant on Nvidia’s industry-leading GPUs. AWS also offers access to NVIDIA H100 GPUs, but Amazon CEO Andrew Jassy stated that the company already offers its own AI chips:

“Customers are excited by Amazon EC2 P5 instances powered by NVIDIA H100 GPUs to train large models and develop generative AI applications. However, to date, there’s only been one viable option in the market for everybody and supply has been scarce.

That, along with the chip expertise we’ve built over the last several years, prompted us to start working several years ago on our own custom AI chips for training called Trainium and inference called Inferentia that are on their second versions already and are a very appealing price performance option for customers building and running large language models. We’re optimistic that a lot of large language model training and inference will be run on AWS’ Trainium and Inferentia chips in the future.”

Some investors believe that AWS is behind Microsoft Azure and Google Cloud in the AI race, but this pessimism is overblown. Unlike Microsoft, AWS was already designing its own AI chips before the ChatGPT-induced generative AI hype began. While Nvidia’s H100 GPUs currently remain the best silicon available for training AI models and running inference on them, Amazon will strive to encourage customers to shift more of their workloads to its own Trainium and Inferentia chips, and it has a head start over Microsoft Azure in doing so.

These AI chips are not AWS’s first venture into chip design. For years, the tech giant has also offered its Graviton CPU, aimed at general-purpose computing in AWS. On the last earnings call, CEO Andrew Jassy said:

“a few years ago, we heard consistently from customers that they wanted to find more price performance ways to do generalized compute. And to enable that, we realized that we needed to rethink things all the way down to the silicon and set out to design our own general purpose CPU chips.

Today, more than 50,000 customers use AWS’ Graviton chips and AWS Compute instances, including 98 of our top 100 Amazon EC2 customers, and these chips have about 40% better price performance than other leading x86 processors. The same sort of reimagining is happening in generative AI right now.”
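To put the roughly 40% price-performance figure into cost terms, assume that price performance means work done per dollar (an assumption, since the quote does not define the metric). Under that reading, a 40% improvement implies the same workload costs about 29% less:

```latex
\text{relative cost per unit of work} = \frac{1}{1 + 0.40} \approx 0.714
\quad\Rightarrow\quad \text{cost saving} \approx 1 - 0.714 \approx 28.6\%
```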

The fact that Amazon already uses its own silicon is encouraging, but the Trainium and Inferentia AI chips are not as powerful as Nvidia’s H100 GPUs. Because Azure, lacking chips of its own, relies more heavily on Nvidia’s GPUs, and can also offer the industry-leading large language model, it is currently perceived to be better positioned to attract cloud customers across industries that are looking to transform their businesses for the AI revolution.

Furthermore, encouraging customers to migrate from cloud services powered by Nvidia’s chips to those powered by Amazon’s own chips will be a challenge. First, Amazon will need to advance its AI chips so that they compete better against Nvidia’s industry-leading GPUs on price performance.

Additionally, the competitiveness of Nvidia’s GPUs is reinforced by the accompanying CUDA software platform and the broader software ecosystem that has developed around its chips. This ecosystem leads third-party developers to build more and more AI applications tailored to Nvidia’s chips, which continually increases the appeal of Nvidia’s GPUs to customers. Nvidia has thus built a strong moat around its AI solutions through extensive network effects, keeping both developers and customers locked into its ecosystem.

Nonetheless, Amazon’s many years as the market leader in the cloud industry have produced an extensive partner ecosystem around AWS, which Amazon will strive to leverage by encouraging third-party developers to build applications around its AI cloud services and chips.

In addition, even if Trainium and Inferentia chips are not as powerful as Nvidia’s, AWS could still offer better price performance through customization. By designing its own chips, Amazon can tailor Trainium and Inferentia to the specific needs of its own cloud services. This tighter integration enables Amazon to optimize performance and reduce latency for its customers.

As for how AWS will try to encourage greater adoption of Trainium and Inferentia chips across its cloud customer base, the strategies it used to drive migration to its Graviton CPU could offer some clues.

A key issue that could discourage customers from migrating is the need to modify or rewrite their applications to run on AWS’s chips, which can be a significant switching cost, especially for larger applications. To ease the switch to Graviton, Amazon has worked to ensure that many popular applications and software packages are compatible with Graviton, reducing the need for customers to modify or rewrite their applications. On top of this, Amazon provides tools and services to help customers migrate their applications to Graviton, including the AWS Application Migration Service and the AWS Schema Conversion Tool. Amazon is likely to deploy a similar strategy to ease migration from Nvidia’s GPUs to Trainium and Inferentia chips for training and inference workloads.

If Amazon can successfully encourage migration to its own chips in the AI revolution, AWS would benefit in several ways. The first is the customization benefit covered earlier: because the chips are tailored to its own cloud solutions, AWS can offer better performance and lower latency. Furthermore, designing its own chips allows Amazon to innovate and develop new features and capabilities that may not be available with off-the-shelf chips like those from Nvidia and AMD. This can help Amazon further differentiate its cloud services from competitors and provide additional value to customers. Together, these advantages could help AWS attract cloud customers, drive top-line revenue growth and defend its leadership position.

The use of in-house chips also brings cost-efficiency benefits. By designing and deploying its own chips, Amazon can avoid some of the cost of purchasing Nvidia’s chips, which carry lofty price tags. Moreover, thanks to the customization benefits mentioned above, cloud services powered by its own chips can be delivered more cost-efficiently. Lower costs, in turn, would allow Amazon to offer more competitive pricing to its customers.

Financial guidance and performance

On the last earnings call, when an analyst asked when executives expect AI to start contributing to AWS growth, management declined to provide specifics:

“Brent Thill

Andy, just on AI monetization. Can you just talk to when you think you’ll start to see that flow into the AWS business? Is that 2024? Do you feel like the back half, you’ll start to see that as a bigger impact for the business?

Andrew Jassy

…I think when you’re talking about the big potential explosion in generative AI, which everybody is excited about, including us, I think we’re in the very early stages there. We’re a few steps into a marathon in my opinion. I think it’s going to be transformative, and I think it’s going to transform virtually every customer experience that we know… But it’s very early. And so I expect that will be very large, but it will be in the future.”

By comparison, Microsoft has been able to provide guidance on AI-related growth for Azure, stating on its earnings call that for Q1 FY24 it expects:

“In Azure, we expect revenue growth to be 25% to 26% in constant currency, including roughly 2 points from all Azure AI Services.”
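Subtracting the roughly 2 points attributed to Azure AI Services gives a rough sense of the guidance excluding AI. This is simple subtraction; because Microsoft says “roughly”, the split is only approximate:

```latex
\text{Azure growth ex-AI} \approx (25\% \text{ to } 26\%) - 2 \text{ points} = 23\% \text{ to } 24\%
```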

Amazon will need to offer more material growth expectations going forward to sustain investor optimism around AWS’s AI prospects.

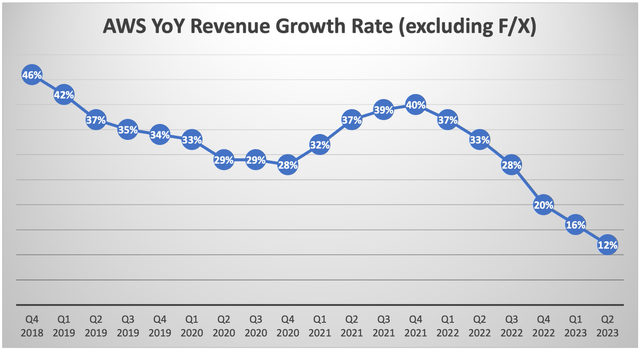

AWS revenue growth has decelerated markedly over the last several years, mirroring the trend at its largest rivals.

While the AI revolution is expected to reignite revenue growth across all cloud providers, there are massive upfront costs to be dealt with first. Management did offer guidance on capex going forward, including AI-related investments:

“Looking ahead to the full year 2023, we expect capital investments to be slightly more than $50 billion compared to $59 billion in 2022. We expect fulfilment and transportation CapEx to be down year-over-year, partially offset by increased infrastructure CapEx to support growth of our AWS business, including additional investments related to generative AI and large language model efforts.”
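The headline figures in this guidance imply that total capex falls by a little under $9 billion, or at most about 15%, even as AWS infrastructure spending rises; the quote does not break out how much of the total is AI-related:

```latex
\frac{\$59\text{B} - \$50\text{B}}{\$59\text{B}} = \frac{9}{59} \approx 15.3\%
\quad (\text{an upper bound, since guidance is ``slightly more than'' } \$50\text{B})
```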

Amazon’s investment in scaling AWS’s AI infrastructure will pressure near-term profit margins. The tech giant will need to invest both in third-party solutions, such as Nvidia’s H100 chips, to serve customers’ current AI needs with industry-leading hardware, and in advancing its Trainium and Inferentia chip designs.

On top of that, Amazon will also strive to build a more competitive large language model to serve as the foundation for its customers’ AI application development. As CEO Andrew Jassy noted, “to develop these large language models, it takes billions of dollars and multiple years to develop”, which means investors can expect large-scale capital expenditure for the foreseeable future.

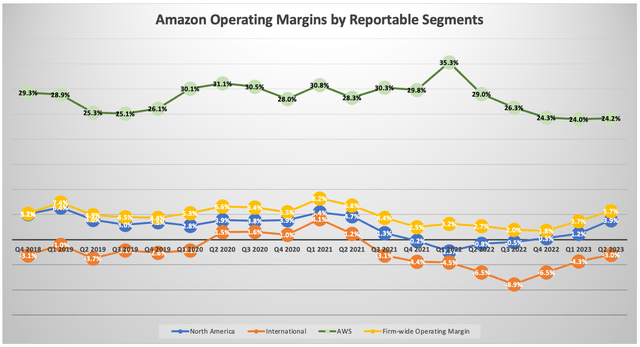

Massive AI-related capex will pressure AWS’s profit margins in the near term. However, as AWS infrastructure scales and better serves customers’ growing demand for AI services, the resulting top-line growth should support profit margins over the long term, depending on how well AWS competes against Microsoft Azure and Google Cloud.

According to Seeking Alpha data, Amazon stock currently trades at an expensive valuation of over 65x forward earnings. This valuation also reflects Amazon’s e-commerce business, as AWS made up only about 16% of total revenue in Q2 2023. However, because AWS is Amazon’s most profitable segment by a wide margin, it contributes a major share of the company’s profits and is therefore a significant driver of its forward earnings multiple.

Given that Amazon will undertake heavy capex over the next year, which will pressure its profit margins, Nexus Research believes this is a lofty price to pay for the stock.

Consider also that 65x forward earnings is significantly more expensive than the multiples of Amazon’s top cloud rivals: Microsoft trades at over 30x and Google at almost 24x. Therefore, while AWS could prove to be a strong player in the era of AI, Nexus Research believes investors can get better exposure to AI-related cloud computing growth through these more cheaply valued rivals. Nexus Research assigns a ‘hold’ rating to the stock.
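Using the rounded multiples cited for Amazon, Microsoft and Google, Amazon’s valuation premium and the corresponding forward earnings yields (the inverse of the forward P/E) work out roughly as follows. These are approximations, since each multiple is itself rounded:

```latex
\begin{aligned}
\text{Premium: } & \frac{65}{30} \approx 2.2\times \text{ (Microsoft)}, \quad \frac{65}{24} \approx 2.7\times \text{ (Google)} \\
\text{Forward earnings yield: } & \frac{1}{65} \approx 1.5\%, \quad \frac{1}{30} \approx 3.3\%, \quad \frac{1}{24} \approx 4.2\%
\end{aligned}
```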

Analyst’s Disclosure: I/we have a beneficial long position in the shares of MSFT, NVDA either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.