Summary:

- Advanced Micro Devices, Inc. has made impressive progress in its ability to capitalize on the AI opportunity ahead.

- While Nvidia continues to dominate the Data Center market, AMD is leveraging a key weapon to challenge its chief rival’s dominance.

- We are upgrading AMD stock to a “Buy” rating.

David Becker

With the AI revolution in full swing, Advanced Micro Devices, Inc. (NASDAQ:AMD) is striving to prove that there can be more than one winner in the artificial intelligence (“AI”) race. While NVIDIA Corporation (NVDA) has proven to be the clear leader thanks to its industry-leading AI chip (or GPU), AMD’s own AI silicon “MI300” is also delivering notable sales growth. Offering cutting-edge chips is just one part of the growth story, though: AMD’s real weapon to challenge Nvidia’s dominance lies in its software.

In the previous article, we assigned a “hold” rating to AMD stock, as the company appeared to be falling behind in the AI race given how little its executives had to say about the attributes of their upcoming AI chip, as well as the uphill battle it faced in challenging Nvidia’s “CUDA” software moat.

In the last earnings release, though, AMD’s executives assured investors that there is strong demand for its line of “Instinct” AI accelerators, even raising the top-line guidance for data center AI GPUs. More importantly, CEO Lisa Su offered insights into the progress with the company’s ROCm software layer. We are upgrading AMD stock to a “Buy” rating.

AMD capitalizing on the AI revolution

On the Q4 2023 AMD earnings call, CEO Lisa Su raised the sales revenue guidance for its data center AI chips from $2 billion to $3.5 billion in 2024, and shared the progress they are making (emphasis added):

“Customer response to MI300 has been overwhelmingly positive.”

“Customer deployments of our Instinct GPUs continue accelerating, with MI300 now tracking to be the fastest revenue ramp of any product in our history, and positioning us well to capture significant share over the coming years based on the strength of our multi-generation Instinct GPU road map and open source ROCm software strategy.”

And this “open source ROCm software strategy” is indeed the big weapon AMD is leveraging to take on Nvidia.

AMD’s ROCm software

Let’s start by understanding the difference between closed source and open source software.

Nvidia’s popular CUDA software platform, which is used for optimizing the performance of its GPUs, is closed source.

This means that the source code of CUDA is not publicly accessible and is considered the intellectual property of Nvidia. Users typically have a license to use the software but cannot modify it.

On the other hand, AMD’s ROCm software platform is open source, which means that the source code is freely available for anyone to inspect, modify, and distribute.

While both the closed source and open source approaches allow third-party developers to build new software libraries and tools on top of these platforms, the open source approach is considered to allow greater collaboration from a much larger pool of developers thanks to its transparent nature, potentially leading to faster identification and implementation of innovative ideas.

However, given the well-established stature of the CUDA platform (existing since 2006) and Nvidia’s leadership position in GPUs, the semiconductor giant has successfully built a flourishing developer community around its closed source platform. And thanks to Nvidia’s massive installed base of several hundred million GPUs, it continues to attract more and more developers to the platform, with the company revealing that it had 4.7 million developers by the end of 2023.

AMD, on the other hand, introduced the ROCm software platform in 2016, a decade after Nvidia’s CUDA launched, and made it open source. To date, however, the CUDA ecosystem remains larger than ROCm’s.

Now, both the closed source and open source software approaches have their pros and cons. But from the perspective of data center customers, one of the biggest disadvantages of CUDA’s closed source nature is that it induces “vendor lock-in.” In other words, customers become highly dependent on both Nvidia’s software and hardware, because the software libraries and tools are built for Nvidia’s GPUs and have limited compatibility with competitors’ hardware.

This translates into weaker bargaining power for these data center customers when they seek to buy new chips and other hardware equipment from Nvidia, as they become increasingly tied into Nvidia’s software ecosystem.

In order to avoid this, data center customers strive to diversify their supplier base as much as possible.

Recognizing customers’ discontent with the closed source nature of Nvidia’s CUDA platform creating a “vendor lock-in” risk, AMD has made ROCm open source. Developers and users can modify ROCm to optimize it for different hardware architectures, allowing the software to be compatible with both AMD’s GPUs and competitors’ GPUs, and thereby supporting customers’ needs for supplier diversification.

This serves as a key selling point and product differentiator from Nvidia’s AI solutions.

AMD is moving aggressively to augment the functionalities of ROCm to serve generative AI workloads. The company is making impressive progress in encouraging large data center customers, as well as rising AI companies, to adopt AMD’s GPUs and ROCm software for their AI workloads, as CEO Lisa Su shared on the Q4 2023 AMD earnings call:

“The additional functionality and optimizations of ROCm 6 and the growing volume of contributions from the Open Source AI Software community are enabling multiple large hyperscale and enterprise customers to rapidly bring up their most advanced large language models on AMD Instinct accelerators.

For example, we are very pleased to see how quickly Microsoft was able to bring up GPT-4 on MI300X in their production environment and roll out Azure private previews of new MI300 instances aligned with the MI300X launch. At the same time, our partnership with Hugging Face, the leading open platform for the AI community, now enables hundreds of thousands of AI models to run out of the box on AMD GPUs and we are extending that collaboration to our other platforms.”

Additionally, Meta Platforms, Inc. (META) is also a big customer of AMD’s “MI300X,” and amid its launch in December 2023, analysts at Raymond James highlighted that:

“Meta’s comment that MI300X based OCP accelerator achieved the “fastest design to deployment in its history” suggests that AMD’s software is maturing fast.”

So AMD is making substantial progress in building out its ROCm software platform to augment the value of its AI chips.

Furthermore, it is worth noting that while ROCm’s open source nature theoretically avoids vendor lock-in for customers, there could still be some form of dependence on AMD GPUs via ROCm utilization.

ROCm’s core development team is from AMD. This means optimizations for ROCm are likely prioritized for AMD hardware, potentially leading to better performance on AMD GPUs, and potentially less efficient performance on other vendors’ GPUs.

The performance of ROCm software on non-AMD chips will depend on the quality of software developed by the open source software community.

While AMD will try to lure data center customers into using its GPUs and ROCm platform for AI workloads by touting its “open source” nature, it would be prudent to assume that AMD will also seek to lock customers into its ecosystem by prioritizing software optimization for its own GPUs.

Building a sticky software ecosystem is essential for AMD to achieve recurring demand for new advanced editions of its AI chips, as the company executes its hardware product roadmap over time.

In fact, on the earnings call, CEO Lisa Su shared a very important piece of insight (emphasis added):

“The point I will make is our customer engagements right now are all quite strategic, dozens of customers with multi-generational conversations. So, as excited as we are about the ramp of MI300 and, frankly, there’s a lot to do in 2024. We are also very excited about the opportunities to extend that into the next couple of years out into that ’25, ’26, ’27 timeframe. So, I think, we see a lot of growth.”

In other words, customers are not only purchasing AMD’s MI300, but also already engaging with the company regarding the supply of future generations of AMD’s AI chips. Lisa Su also added that:

“you can be assured that we’re working very closely with our customers to have a very competitive roadmap for both training and inference that will come out over the next couple of years”

These tight relationships are likely to extend to the development of the ROCm software platform to ensure customers remain engaged with the AMD ecosystem. And as the value proposition of AMD’s AI chips grows with the training/inferencing functionality added through ROCm, it should drive pricing power for AMD as the company rolls out its next-generation chips. Lisa Su even hinted at this strengthening pricing power when explaining the company’s $400 billion market size estimate for Data Center AI GPUs, claiming that “there’s some ASP [Average Selling Price] uplift in there.”

The overarching point is, AMD is making noteworthy progress in building out the ROCm platform to compete against Nvidia’s CUDA platform, and subsequently augment the value proposition of its AI chips to better compete against Nvidia’s industry-leading GPUs.

Risks for AMD

The uphill battle is getting steeper: Nvidia’s developer ecosystem continues to grow rapidly, with the company revealing in February 2024 that by the end of 2023, total CUDA software downloads had accumulated to 48 million, with the developer community growing to 4.7 million.

That’s up from the 45 million CUDA downloads and 4 million developers that Nvidia had shared in an investor presentation back in October 2023. Hence, Nvidia’s CUDA software moat continues to deepen at a rapid pace.
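To put those ecosystem figures in perspective, the short sketch below computes the percentage change between the two data points Nvidia disclosed. The function name is our own, and the inputs are the rounded figures quoted above, so the results are approximate. We do not annualize the change, since the exact measurement dates behind the two disclosures are not stated.

```python
def pct_change(old: float, new: float) -> float:
    """Simple percentage change from `old` to `new`."""
    return (new - old) / old * 100


# Rounded figures quoted in this article (in millions).
downloads_growth = round(pct_change(45.0, 48.0), 1)   # cumulative CUDA downloads
developers_growth = round(pct_change(4.0, 4.7), 1)    # CUDA developers

print(f"CUDA downloads grew by about {downloads_growth}%")
print(f"CUDA developers grew by about {developers_growth}%")
```

On these rounded inputs, the developer count grew noticeably faster than cumulative downloads over the same window.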

On the other hand, while AMD CEO Lisa Su spoke encouragingly about the growing software community around ROCm, the company has not revealed any statistics to back up the claims of positive momentum, suggesting that it is still far behind its chief rival.

Furthermore, Nvidia’s massive installed base of several hundred million GPUs across global data centers, and its well-established CUDA platform with tens of millions of downloads, create a more compelling marketplace for third-party developers. These developers can build their own commercial services on top of the platform to help Nvidia’s GPU customers with their training/inferencing workloads, and generate substantial incomes to build long-lasting businesses of their own.

Hence, despite AMD’s “open source” software strategy with ROCm to attract as many developers as possible, the company still fights an uphill battle against Nvidia’s growing software moat.

Customers producing their own chips and software: Data center customers like Microsoft Azure have their own AI chips and software to run AI training and inferencing workloads, and will increasingly strive to reduce their reliance on third-party suppliers.

So while it is encouraging that AMD’s customers are contributing to the development of ROCm, there is simultaneously a growing risk that these customers prioritize the development of a software ecosystem around their own hardware. This could undermine the level of sales growth that AMD is able to deliver going forward.

AMD Financial Performance & Valuation

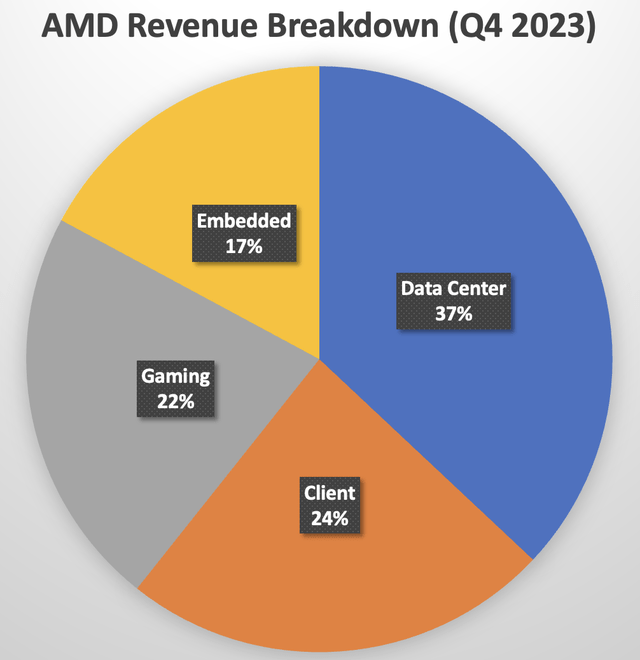

As we compare AMD against Nvidia amid the AI race, it is important to note that the “Data Center” segment represented 83% of Nvidia’s total revenue last quarter, while only accounting for 37% of AMD’s company-wide revenue.

Nexus, data compiled from company filings

While the AI revolution should eventually benefit every segment, during this phase of the AI revolution, it is the “Data Center” segment that will see the most revenue and profit growth, which is a market that Nvidia currently dominates.

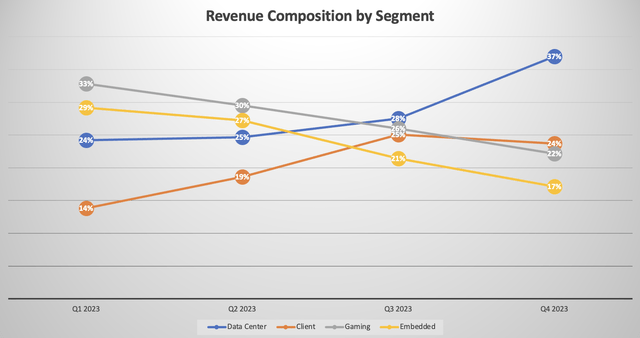

Nonetheless, as AMD increasingly capitalizes on the AI opportunity in the “Data Center” through its line of “Instinct” AI GPUs, the segment will inevitably grow as a proportion of total revenue, as it has already done over the past several quarters.

Nexus, data compiled from company filings

On the last earnings call, CFO Jean Hu shared that:

“Gross margin was 51%, flat year-over-year, with higher revenue contribution from the Data Center and the Client segments offset by lower Embedded segment revenue.

…

the major driver is going to be the Data Center business is going to grow much faster than other segments. That mix change will help us to expand the gross margin nicely.”

Indeed, as “Data Center” becomes a larger portion of the revenue mix, it should help drive gross margins higher. At present, though, Nvidia boasts a higher gross margin of 76%.

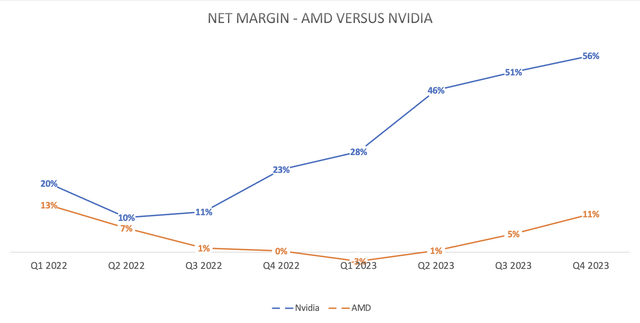

Furthermore, in terms of net profitability, Nvidia has been able to deliver incredible profit margin expansion relative to AMD over the past year, thanks to its enviable pricing power for its AI chip “H100,” reportedly selling for around $40,000 per GPU.

Nexus, data compiled from company filings

On the positive side, Nvidia’s high price tags enable AMD to price its own chips more aggressively as well, with Citi analyst Christopher Danely estimating that:

“It appears as if Microsoft and Meta are the largest customers for the MI300, … with the average selling price at roughly $10,000 for Microsoft and $15,000 or more for other customers”

This strong pricing power is only likely to continue strengthening as customers become increasingly tied to AMD’s ecosystem via the ROCm software layer, as discussed earlier, which should be conducive to profit margin expansion going forward.

Nevertheless, while the AI-driven growth prospects fueled a stock price rally of around 127% last year regardless of weakness in its other segments, AMD stock may not be able to continue rallying as strongly if the other units continue to be weak.

In fact, on the last earnings call, CFO Jean Hu offered the following guidance:

“Directionally, for the year, we expect 2024 Data Center and the Client segment revenue to increase driven by the strengths of our product portfolio and the share gain opportunities. Embedded segment revenue to decline and the Gaming segment revenue to decline by a significant double-digit percentage.”

This mixed guidance disappointed the market. The “Embedded” and “Gaming” segments continue to be important components of AMD’s total revenue. Hence, declines in these segments will undermine the overall financial performance of AMD this year, which is a risk investors should keep in mind.

Now, in terms of AMD stock’s valuation, it currently trades at a forward P/E of around 58x, which is well above its 5-year average of around 43x, and significantly more expensive than Nvidia stock, which trades at around 36x.

That said, the forward P/E is not necessarily the best valuation metric, as it doesn’t take into consideration how fast earnings are expected to grow. If companies are anticipated to experience faster EPS growth going forward compared to previous years, as is the case with these semiconductor stocks amid the AI revolution, it could be perfectly reasonable for the market to assign higher forward P/E multiples to these securities relative to historical valuation trends.

This is why the forward Price-Earnings-Growth [PEG] ratio offers a more comprehensive measure of valuation. The ratio is calculated by dividing a stock’s forward P/E by its expected earnings growth rate over a certain period of time.

A forward PEG ratio of 1 would imply that the stock is trading at fair value. Popular technology stocks, however, most often trade well above that level, as the market tends to assign a premium to these securities based on factors such as product strength/competitive differentiation and the total market size opportunity ahead.

AMD currently has a forward PEG of 1.20, which is below its 5-year average of 1.46, but still more expensive than Nvidia, which trades at a forward PEG of 1.04.
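Because the forward PEG is simply the forward P/E divided by the expected earnings growth rate, the formula can be rearranged to back out the growth rate implied by the two multiples quoted above. The sketch below does this; the function name is our own, and the inputs are the approximate figures from this article, so the outputs are illustrative rather than exact. The period over which that growth is expected is not specified here.

```python
def implied_growth_pct(forward_pe: float, forward_peg: float) -> float:
    """Rearrange PEG = (P/E) / growth to get growth = (P/E) / PEG, in percent."""
    if forward_peg <= 0:
        raise ValueError("forward PEG must be positive")
    return forward_pe / forward_peg


# Approximate (forward P/E, forward PEG) pairs quoted in this article.
stocks = {"AMD": (58.0, 1.20), "NVDA": (36.0, 1.04)}

implied = {
    ticker: round(implied_growth_pct(pe, peg), 1)
    for ticker, (pe, peg) in stocks.items()
}

for ticker, growth in implied.items():
    print(f"{ticker}: implied expected EPS growth of roughly {growth}%")
```

On these inputs, AMD’s higher forward P/E is paired with a higher implied earnings growth rate than Nvidia’s, which is why the gap between the two stocks narrows considerably on a PEG basis.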

Nonetheless, at a forward PEG of 1.20, the stock trades closer to fair value than it has historically, and that is despite the promising growth prospects amid the AI revolution. Therefore, we are upgrading AMD stock to a “Buy,” given the company’s improving AI-driven growth prospects.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
