Summary:

- Advanced Micro Devices, Inc.’s RSI of 40.32 signals the stock is neither overbought nor oversold, while a declining volume price trend suggests weakening buying pressure. The stock trades at around $140, below the average target of $160.

- Analysts project Q2 revenue of $5.74 billion, up 7% YoY, with significant growth in the data center segment and AI chip market.

- AMD’s data center revenue hit a record $2.3 billion in Q1, driven by AI chips, and is set to grow further, while an AI-driven PC upgrade cycle offers additional upside.

- With 192 GB of HBM3 memory and 304 CDNA 3 compute units, AMD’s MI300X excels at handling large AI models and high batch-size workloads, and offers better cost efficiency than Nvidia’s H100 at very small and very large batch sizes.


Investment Thesis

Since our last coverage in April, Advanced Micro Devices, Inc. (NASDAQ:AMD) has reached our initial target price of $152 and is on track toward our ambitious year-end target of $275 despite facing resistance at $186.

However, recent market volatility has introduced new challenges. The semiconductor sector experienced a significant sell-off following reports that the Biden administration was considering tighter export restrictions to China.

Additionally, former President Trump’s remarks that Taiwan, the largest chip producer, should pay for its own defense have further fueled concerns. Over the longer run, AMD’s innovative products and improved profitability drove a six-year stock surge, although the shares slumped in late 2021 amid a downturn in PC sales.

AMD’s shares then rebounded last year, fueled by excitement over its AI chips for data centers and potential profits from an AI-driven PC upgrade cycle. Following a 24% correction, AMD now trades at an attractive entry point for long-term investors, reinforcing our strong buy rating ahead of its earnings report.

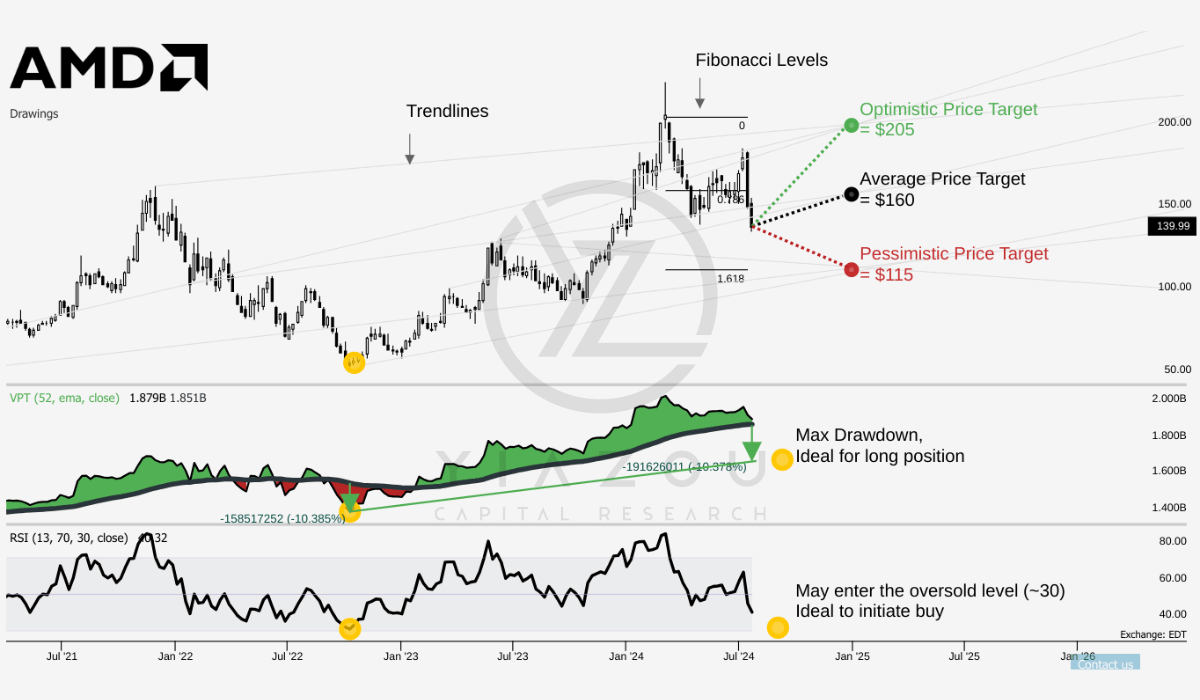

Poised for a Swing – $160 Average Target

AMD is currently trading at $139.99. The average price target for 2024 is $160, with an optimistic target of $205 and a pessimistic target of $115. These targets are derived from short-term trends projected over Fibonacci levels (0.786, 0, and 1.618), and the spread between them points to a wide range of potential price movements.
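The article does not spell out how the Fibonacci levels map to the three targets. As a rough illustration, one common convention places level 0 at the start of a price swing and level 1 at its end, with a ratio such as 0.786 falling inside the swing and 1.618 extending beyond it. The Python sketch below uses that convention with a hypothetical $100 to $200 swing; TrendSpider’s actual anchoring and AMD’s swing points are not given, so it will not reproduce the $115, $160, and $205 targets. The function names are our own.

```python
# Fibonacci ratios mentioned in the article, plus 1.0 (the end of the swing) for reference.
FIB_RATIOS = (0.0, 0.786, 1.0, 1.618)


def fib_level(swing_start: float, swing_end: float, ratio: float) -> float:
    """Price at `ratio` of the swing: 0 -> swing_start, 1 -> swing_end, >1 -> extension beyond swing_end."""
    return swing_start + ratio * (swing_end - swing_start)


def fib_levels(swing_start: float, swing_end: float, ratios=FIB_RATIOS) -> dict[float, float]:
    """Map each ratio to its projected price, rounded to cents."""
    return {r: round(fib_level(swing_start, swing_end, r), 2) for r in ratios}


if __name__ == "__main__":
    # Hypothetical swing from $100 to $200, not AMD's actual anchor points.
    for ratio, price in fib_levels(100.0, 200.0).items():
        print(f"{ratio:>5}: ${price:,.2f}")
```

The same formula works for a downswing: passing `swing_start=200.0, swing_end=100.0` projects the 1.618 extension below the swing low instead of above the high.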

The Relative Strength Index (RSI) stands at 40.32, which suggests AMD is neither overbought nor oversold. The RSI line is trending downward, reflecting bearish momentum, and there are no bullish or bearish divergences, meaning momentum is not deviating significantly from the price trend. For a long setup, the RSI would need to fall to around 30, the conventional oversold threshold, which would signal a potential buying opportunity.
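For readers unfamiliar with the indicator, here is a minimal Python sketch of the standard 14-period RSI with Wilder’s smoothing and the conventional 30/70 thresholds. Charting platforms such as TrendSpider may differ in details like seeding and smoothing, so this illustrates the technique rather than reproducing the 40.32 reading; `wilder_rsi` and `classify_rsi` are hypothetical names.

```python
def wilder_rsi(closes: list[float], period: int = 14) -> list[float]:
    """RSI with Wilder's smoothing; returns one value per bar after the first `period` price changes."""
    if len(closes) <= period:
        raise ValueError("need more closing prices than the RSI period")

    # Split each bar-to-bar change into a non-negative gain and a non-negative loss.
    gains, losses = [], []
    for prev, curr in zip(closes, closes[1:]):
        change = curr - prev
        gains.append(max(change, 0.0))
        losses.append(max(-change, 0.0))

    def to_rsi(avg_gain: float, avg_loss: float) -> float:
        if avg_gain == 0 and avg_loss == 0:
            return 50.0  # flat prices: treated as neutral (a convention, not a standard)
        if avg_loss == 0:
            return 100.0
        rs = avg_gain / avg_loss
        return 100.0 - 100.0 / (1.0 + rs)

    # Seed with simple averages over the first `period` changes.
    avg_gain = sum(gains[:period]) / period
    avg_loss = sum(losses[:period]) / period
    values = [to_rsi(avg_gain, avg_loss)]

    # Wilder smoothing: new_avg = (prev_avg * (period - 1) + latest) / period
    for gain, loss in zip(gains[period:], losses[period:]):
        avg_gain = (avg_gain * (period - 1) + gain) / period
        avg_loss = (avg_loss * (period - 1) + loss) / period
        values.append(to_rsi(avg_gain, avg_loss))
    return values


def classify_rsi(value: float, oversold: float = 30.0, overbought: float = 70.0) -> str:
    """Label an RSI reading using the conventional 30/70 thresholds."""
    if value <= oversold:
        return "oversold"
    if value >= overbought:
        return "overbought"
    return "neutral"
```

Under these thresholds, a reading of 40.32 classifies as neutral; the long setup described above corresponds to the reading dropping to the oversold band.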

The volume price trend (VPT) is also trending downward. At 1.88 billion, it sits slightly above its moving average of 1.85 billion. Because VPT accumulates volume weighted by each day’s percentage price change, a falling reading means volume has been heavier on down days than on up days, which could be a sign of weakening buying pressure. The long setup threshold is at 1.92 million. This is based on a potential max drawdown (in line with the historical pattern) below the moving average.
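A minimal Python sketch of how VPT and its moving average are typically computed follows. The function names and the moving-average type (a simple trailing average) are assumptions; the article does not say which average TrendSpider uses.

```python
def volume_price_trend(closes: list[float], volumes: list[float]) -> list[float]:
    """Cumulative VPT: each bar adds its volume weighted by that bar's percentage price change."""
    if len(closes) != len(volumes) or not closes:
        raise ValueError("closes and volumes must be non-empty and the same length")
    vpt = [0.0]  # the starting level is arbitrary; changes and crossovers are what matter
    for i in range(1, len(closes)):
        pct_change = (closes[i] - closes[i - 1]) / closes[i - 1]
        vpt.append(vpt[-1] + volumes[i] * pct_change)
    return vpt


def simple_moving_average(values: list[float], window: int) -> list[float]:
    """Trailing simple moving average; the first output lines up with values[window - 1]."""
    if window <= 0 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [sum(values[i - window + 1 : i + 1]) / window for i in range(window - 1, len(values))]
```

Because VPT is cumulative from an arbitrary starting point, its absolute level (such as 1.88 billion) depends on where the charting tool begins the series; the comparison with its moving average is the more informative signal.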

Author (trendspider.com)

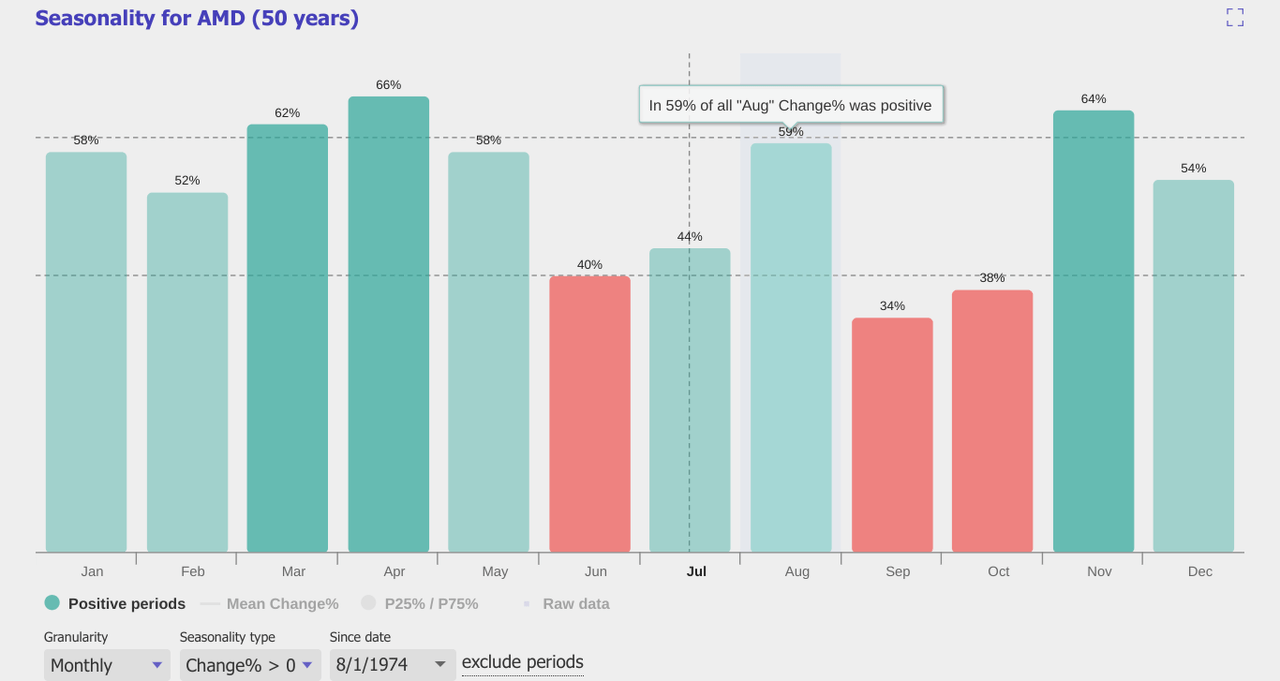

Lastly, five years of monthly seasonality data suggest a 59% chance of a positive return for an investment made in August.

Will AI Boom Propel Data Center Growth and Boost Investor Confidence?

AMD will post second-quarter earnings on Tuesday after the close. Investors will look for hints of record data center growth and solid guidance in the face of an ongoing artificial intelligence boom. Here’s what to expect from AMD’s coming earnings report.

Analysts expect AMD to post revenue of $5.74 billion, up 7% year-over-year (YoY), and net income of $343.08 million, or 21 cents a share. That would be up drastically from last year’s second quarter, when AMD reported net income of $27 million, or 2 cents a share.
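The consensus figures can be sanity-checked with simple arithmetic. The sketch below backs out the prior-year revenue implied by 7% growth, the share count implied by net income and EPS, and the net income multiple versus last year. Because the 7% and 21-cent figures are rounded, the outputs are approximations; the helper names are our own.

```python
def implied_prior(current: float, growth_rate: float) -> float:
    """Prior-period value implied by a current value and a YoY growth rate (0.07 = 7%)."""
    return current / (1.0 + growth_rate)


def implied_share_count(net_income: float, eps: float) -> float:
    """Share count implied by net income divided by earnings per share."""
    return net_income / eps


# Consensus estimates and prior-year actuals quoted above, in USD.
Q2_REVENUE_EST = 5.74e9
Q2_NET_INCOME_EST = 343.08e6
Q2_EPS_EST = 0.21
Q2_PRIOR_NET_INCOME = 27e6

prior_revenue = implied_prior(Q2_REVENUE_EST, 0.07)            # about $5.36 billion
shares = implied_share_count(Q2_NET_INCOME_EST, Q2_EPS_EST)    # about 1.63 billion shares
net_income_multiple = Q2_NET_INCOME_EST / Q2_PRIOR_NET_INCOME  # about 12.7x

if __name__ == "__main__":
    print(f"Implied Q2 2023 revenue: ${prior_revenue / 1e9:.2f}B")
    print(f"Implied share count: {shares / 1e9:.2f}B")
    print(f"Net income multiple vs. last year: {net_income_multiple:.1f}x")
```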

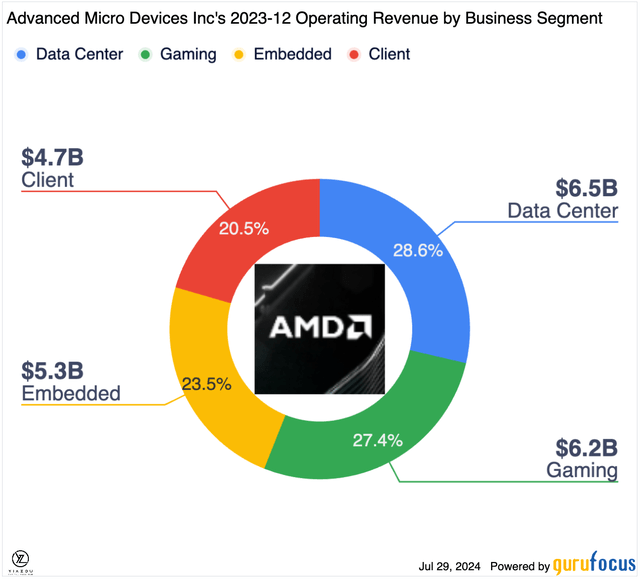

One key metric will be AMD’s performance in the data center segment. In Q1, the segment posted record revenue of $2.3 billion, up 80% YoY, driven by strong sales of the MI300 chips used in AI data centers. Analysts expect the segment to bring in $2.79 billion in the second quarter, which would likely set another quarterly record.

AI-Driven Prospects

The AI boom has reshaped AMD’s market position. The stock surged on May 17 after a report that Microsoft Corp. intends to offer its Azure cloud computing customers a platform of AMD AI chips as an alternative to NVIDIA Corporation (NVDA). At Computex last month, AMD announced an annual update cycle for its Instinct AI accelerators, with the next generation, the MI325X, due later this year.

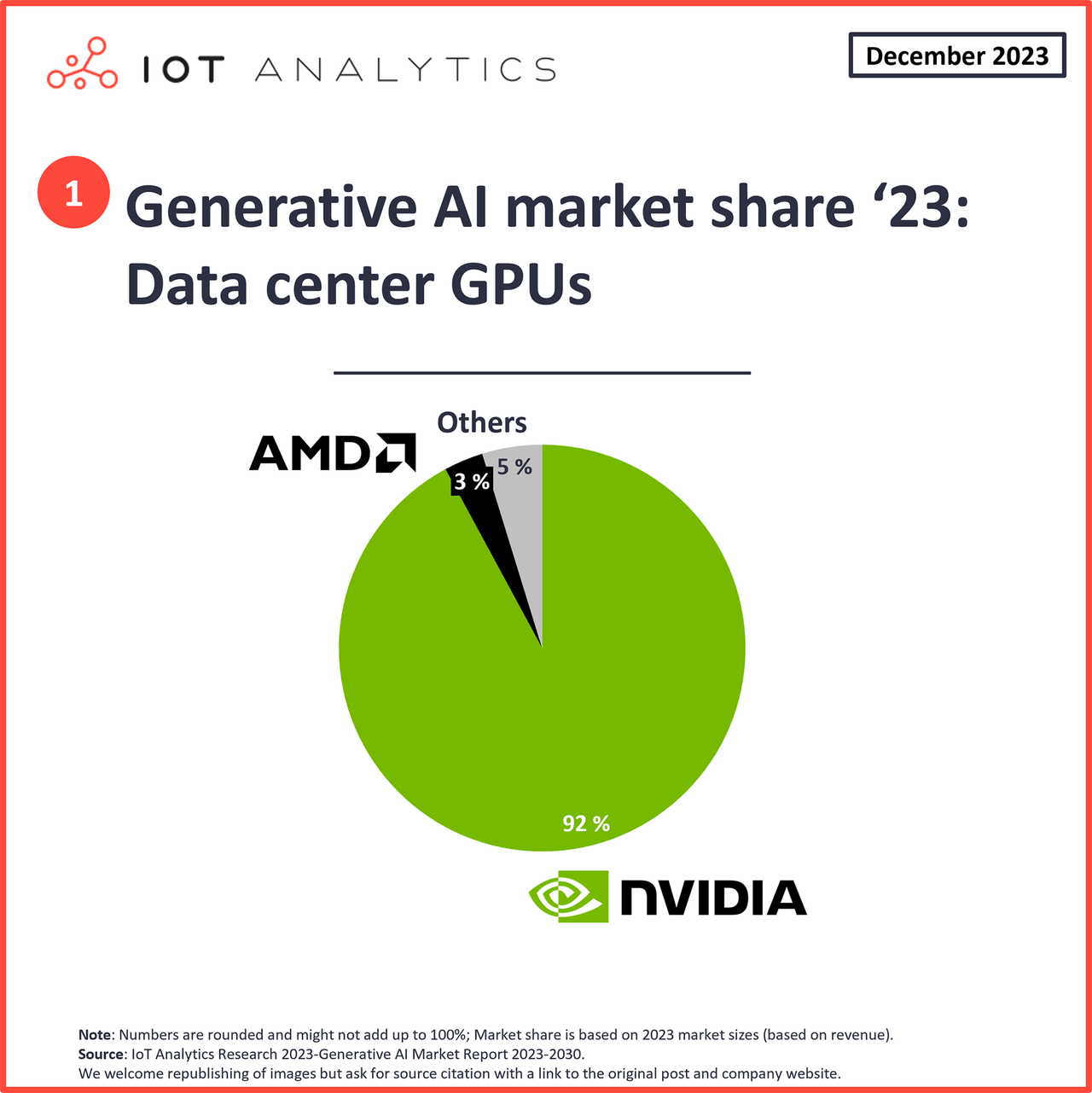

AMD Challenges Nvidia’s AI Dominance with Strategic Moves and Cutting-Edge Innovation

AMD is in hot pursuit of Nvidia, the dominant player in AI chips. The company posted record data center revenue of $2.3 billion, up 80% YoY, backed by robust sales of its EPYC server processors and Instinct accelerators. That growth indicates AMD could capture a meaningful share of the potentially massive AI chip market. The Instinct MI300X GPU and the company’s AI-focused acquisitions continue to position AMD for growth in the enterprise and data center markets, offering robust alternatives to Nvidia’s products.

AMD has also made several strategic acquisitions to fortify its AI capabilities. The roughly $665 million acquisition of Silo AI, the largest private AI lab in Europe, is set to bolster AMD’s AI software capabilities and help it deliver end-to-end AI solutions. This deal complements the $1.9 billion acquisition of Pensando Systems and the $49 billion acquisition of Xilinx, which further rounded out AMD’s data center and AI portfolios. Together, these deals underline AMD’s commitment to growth and new initiatives, positioning it as a stronger challenger to Nvidia.

Ultimately, what makes AMD competitive against Nvidia is its capacity for technological innovation and its strategic partnerships. Notably, its chiplet architecture, especially in the MI300 series, provides flexibility and scalability, allowing AMD to iterate quickly on product development. This architecture gives AMD a cost-effective way to drive performance improvements and address a range of applications. In addition, AMD’s focus on open-source software development provides a critical advantage in an AI market where adaptability and customization are essential.

AMD MI300X Excels at Large Batch Sizes, Nvidia H100 Dominates Mid-Size Batches

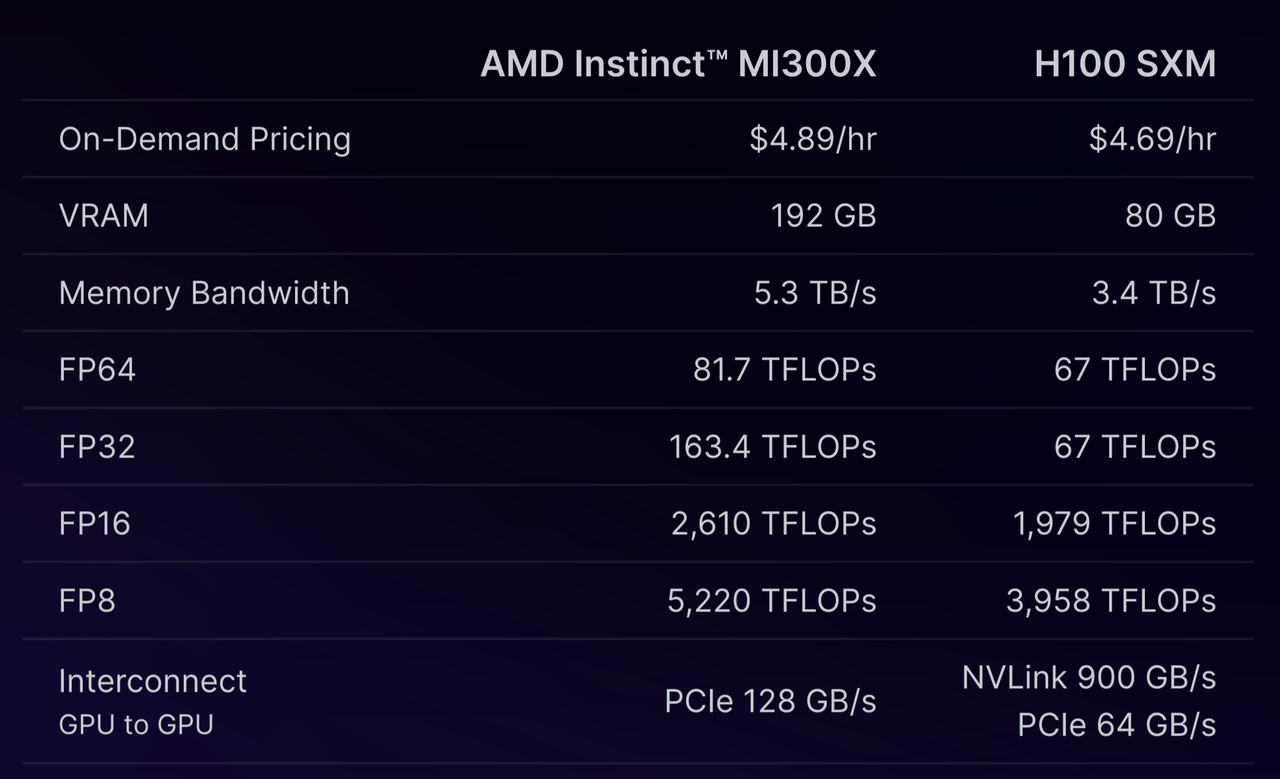

Nvidia has long dominated the AI training and inference market, but AMD is making significant strides with its MI300X GPU. Designed to compete with Nvidia’s H100, the MI300X boasts stronger on-paper specifications, but real-world results paint a more nuanced picture.

AMD’s MI300X offers 192 GB of HBM3 memory and 304 CDNA 3 compute units, which allows it to hold larger AI models on a single GPU. This is advantageous for workloads that need extensive memory capacity and processing power. In contrast, the Nvidia H100 SXM, built on the Hopper architecture, pairs 80 GB of HBM3 with fourth-generation Tensor Cores and a Transformer Engine that accelerate AI training and inference.
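To see why capacity matters, a back-of-the-envelope check of whether a model’s weights fit on one card helps. The sketch assumes roughly 46.7 billion total parameters for Mixtral 8x7B (the model used in the benchmarks discussed below), 16-bit weights, 80 GB for the H100 SXM, and a hypothetical 90% headroom factor that leaves room for activations and cache; in practice, deployments may also use quantization or multiple GPUs.

```python
# Assumed inputs (not from the article): H100 SXM memory of 80 GB; MI300X memory is from the article.
ACCELERATOR_MEMORY_GB = {"MI300X": 192.0, "H100 SXM": 80.0}


def weight_footprint_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for model weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits_on_one_gpu(num_params: float, bytes_per_param: float, gpu_memory_gb: float,
                    headroom: float = 0.9) -> bool:
    """True if the weights fit in `headroom` of GPU memory (hypothetical 10% reserve for everything else)."""
    return weight_footprint_gb(num_params, bytes_per_param) <= gpu_memory_gb * headroom


if __name__ == "__main__":
    params = 46.7e9  # assumed total parameter count for Mixtral 8x7B
    bytes_fp16 = 2   # 16-bit weights
    need = weight_footprint_gb(params, bytes_fp16)
    for name, mem in ACCELERATOR_MEMORY_GB.items():
        fits = fits_on_one_gpu(params, bytes_fp16, mem)
        print(f"{name}: weights need ~{need:.1f} GB of {mem:.0f} GB -> fits: {fits}")
```

Under these assumptions, the 16-bit weights (about 93 GB) fit comfortably on one MI300X but not on a single 80 GB H100.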

Additionally, the MI300X’s architecture is well suited to high-performance computing tasks such as physics simulations, including fluid dynamics, which depend on processing complex calculations quickly. Nvidia’s H100, with its Tensor Cores, also accelerates simulation workloads, enabling faster calculations.

Both chips are headless data center accelerators without display outputs, so interactive graphics work such as sculpting or animation playback is not their target market; their strengths lie in the AI and compute workloads discussed here.

Recent benchmarks comparing the MI300X and H100 SXM in AI inference workloads, specifically on Mistral AI’s Mixtral 8x7B LLM, provide valuable insights. The MI300X outperformed the H100 SXM at both small and large batch sizes (1, 2, 4, 256, 512, 1024), though it lagged at medium batch sizes. The larger VRAM of the MI300X becomes advantageous at higher batch sizes, handling larger workloads more efficiently.
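One reason memory capacity matters more at large batch sizes is the attention key/value (KV) cache, which grows linearly with batch size times sequence length on top of the fixed weight footprint. The rough estimate below assumes Mixtral-8x7B-like attention settings (32 layers, 8 key/value heads, head dimension 128, 16-bit cache entries) and a hypothetical 1,024-token sequence per request; actual usage depends on the serving stack and real sequence lengths.

```python
def kv_cache_gb(batch_size: int, seq_len: int, num_layers: int = 32, num_kv_heads: int = 8,
                head_dim: int = 128, bytes_per_value: int = 2) -> float:
    """Approximate KV-cache size in decimal GB for a decoder-only transformer.

    The leading factor of 2 covers keys and values. Defaults are assumed
    Mixtral-8x7B-like attention settings with 16-bit cache entries.
    """
    bytes_per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value
    return bytes_per_token * batch_size * seq_len / 1e9


if __name__ == "__main__":
    seq_len = 1024  # hypothetical prompt + output length per request
    for batch in (1, 4, 256, 1024):
        print(f"batch {batch:>4}: ~{kv_cache_gb(batch, seq_len):.1f} GB of KV cache")
```

With these assumptions, a batch of 1,024 requests at 1,024 tokens each needs roughly 137 GB for the cache alone, which illustrates why the larger VRAM pool pays off at the top of the batch-size range.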

Moreover, cost comparisons using RunPod’s Secure Cloud pricing reveal the MI300X’s cost-effectiveness at smaller and very high batch sizes. At a batch size of 1, the MI300X costs $22.22 per million tokens, compared to the H100 SXM’s $28.11. This trend continues at batch sizes of 2 and 4. However, at medium batch sizes, the H100 SXM proves more economical.
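Per-token cost figures like these come from combining an hourly rental price with measured throughput. The article does not list RunPod’s hourly rates or the measured tokens per second, so the sketch below takes them as inputs; the $3.00/hour and 100 tokens/s values in the usage lines are hypothetical. It also shows that the reported batch-size-1 figures put the MI300X about 21% cheaper per token.

```python
def cost_per_million_tokens(hourly_price_usd: float, tokens_per_second: float) -> float:
    """Rental cost to generate one million tokens at a sustained throughput."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_price_usd / tokens_per_hour * 1_000_000


def relative_savings(cheaper_cost: float, pricier_cost: float) -> float:
    """Fraction saved by choosing the cheaper option."""
    return (pricier_cost - cheaper_cost) / pricier_cost


if __name__ == "__main__":
    # Batch-size-1 figures reported above: MI300X $22.22 vs H100 SXM $28.11 per million tokens.
    print(f"MI300X saving at batch 1: {relative_savings(22.22, 28.11):.1%}")
    # Hypothetical inputs: a $3.00/hour GPU sustaining 100 tokens/s.
    print(f"${cost_per_million_tokens(3.00, 100):.2f} per million tokens")
```

Because cost scales with price divided by throughput, a GPU with a higher hourly rate can still win on cost per token if its throughput advantage is large enough, which is why the ranking can flip across batch sizes.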

Serving benchmarks, which assess real-world capabilities such as request throughput, token processing times, and inference latency, highlight the strengths of both GPUs. The MI300X offers lower latency and consistent performance under higher loads, whereas the H100 SXM maintains robust throughput and cost-efficiency at mid-range batch sizes.
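To make these serving metrics concrete, here is a minimal sketch that derives request throughput, token throughput, and nearest-rank latency percentiles from per-request timing records. The `RequestRecord` format and function names are hypothetical, and the benchmark cited above may define its metrics differently (for example, counting prompt tokens or measuring time to first token).

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    start_s: float      # request start time, in seconds
    end_s: float        # request completion time, in seconds
    output_tokens: int  # tokens generated for this request


def nearest_rank_percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0-100) of a non-empty list."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(records: list[RequestRecord]) -> dict[str, float]:
    """Aggregate serving metrics over the benchmark's wall-clock window."""
    if not records:
        raise ValueError("need at least one request record")
    wall_s = max(r.end_s for r in records) - min(r.start_s for r in records)
    if wall_s <= 0:
        raise ValueError("benchmark window must have positive duration")
    latencies = [r.end_s - r.start_s for r in records]
    total_tokens = sum(r.output_tokens for r in records)
    return {
        "request_throughput_rps": len(records) / wall_s,
        "token_throughput_tps": total_tokens / wall_s,
        "p50_latency_s": nearest_rank_percentile(latencies, 50),
        "p99_latency_s": nearest_rank_percentile(latencies, 99),
    }


if __name__ == "__main__":
    # Hypothetical timings for three overlapping requests.
    demo = [RequestRecord(0.0, 1.2, 150), RequestRecord(0.1, 2.0, 220), RequestRecord(0.5, 2.4, 180)]
    print(summarize(demo))
```

Throughput rewards keeping the GPU busy with many concurrent requests, while the latency percentiles capture how long individual users wait, which is the trade-off the two GPUs resolve differently.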

In short, the AMD MI300X stands out at very low and very high batch sizes, where its larger VRAM lets it handle extensive workloads more effectively, while the Nvidia H100 SXM excels at medium batch sizes, making it a better fit for applications where those sizes are prevalent.

Finally, the choice between the MI300X and H100 SXM depends on specific workload requirements, balancing throughput and latency. Future benchmarks, particularly with other popular open-source models, will continue to illuminate the capabilities of these powerful GPUs.

Concluding Thoughts

AMD’s MI300X shows strong potential to compete with Nvidia’s H100, particularly in handling large-scale AI models and high batch-size workloads. Its stronger specifications, such as 192 GB of HBM3 memory and more compute units, give it an edge in cost efficiency at low and high batch sizes.

However, Nvidia’s H100 excels at medium batch sizes, offering better throughput and cost efficiency there, along with Tensor Cores and a Transformer Engine built to accelerate AI workloads. Despite the MI300X’s hardware advantages, Nvidia’s dominant CUDA platform remains a significant hurdle.

Undoubtedly, Nvidia still dominates, but AMD’s strategic moves and technological advancements position it to mount a serious challenge soon. With its flexible chiplet architecture and key acquisitions, AMD is better placed than most peers to respond to the evolving requirements of AI applications, and its strong momentum in AI chips makes it a formidable rival to Nvidia.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of AMD, NVDA either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.