Summary:

- The Vision Pro headset is far better than competitors such as the Meta Quest Pro, but it still won’t be enough to win over consumers, in my view.

- Even a technology powerhouse like Apple couldn’t turn a HoloLens-style augmented reality system into a cool consumer product, but augmented reality, rather than mixed reality, is the true destination.

- Vision Pro is, as the name suggests, for professional users, mostly Apple developers.

- Investor takeaway: near-term sales of Vision Pro should not be a concern.


Apple (NASDAQ:AAPL) unveiled its Vision Pro mixed reality headset to much fanfare during CEO Tim Cook’s Worldwide Developers Conference keynote. We were told that Vision Pro represents a new “era of spatial computing”, but the price tag of $3,499 will keep most consumers away. And although Apple didn’t want to admit it, the Vision Pro falls well short of the augmented reality glasses that were supposed to do everything Microsoft’s (MSFT) HoloLens does, but without making users look (and feel) like they’ve joined the Borg Collective. Try as Apple might, there was really no way to make the Vision Pro seem cool. But investors should keep in mind that Vision Pro is just a waypoint towards the objective system.

The Vision Pro headset is better than competitors, but too pricey

The Apple mixed reality headset, dubbed Vision Pro, was much as rumors had described it, except that it wasn’t nearly as svelte as digital artists had imagined.

The rumor:

An artist’s conception of the Apple mixed reality headset prior to launch. (WCCFTech)

The reality:

The Vision Pro (VP) uses external stereoscopic cameras to create a 3D image of the user’s external environment. The VP can combine this with internally generated 3D objects to create a “mixed reality” experience or go into full virtual reality mode.

In many ways, Vision Pro is Apple’s most complex and sophisticated computing device ever. It is fully self-contained with its own operating system, now known as visionOS, based on iOS and iPadOS. And it can even run iPadOS apps in virtual screens that appear suspended in mid-air.

Apple uses a “better than 4K” microOLED screen for each eye. This provides more than double the number of pixels per screen of the Meta Quest Pro, which also is mixed reality capable. This is still not quite Retina Display caliber, but it greatly sharpens the image, an advantage everyone has noticed.
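The pixel comparison can be checked with some quick arithmetic. The sketch below is only illustrative: the figures are assumptions taken from the companies’ published specifications rather than from this article (Apple’s stated total of 23 million pixels across both displays, and Meta’s stated 1800 x 1920 per eye for the Quest Pro), and the variable names are my own.

```python
# Assumed published specifications; none of these figures appear in the article.
VISION_PRO_TOTAL_PIXELS = 23_000_000   # Apple's stated total across both displays
QUEST_PRO_PER_EYE = (1800, 1920)       # Meta's stated per-eye resolution
UHD_4K = (3840, 2160)                  # a standard 4K UHD panel, for reference


def pixel_count(resolution):
    """Return the number of pixels in a (width, height) resolution."""
    width, height = resolution
    return width * height


# Split Apple's combined figure evenly between the two eyes.
vision_pro_per_eye = VISION_PRO_TOTAL_PIXELS / 2

ratio_to_quest_pro = vision_pro_per_eye / pixel_count(QUEST_PRO_PER_EYE)
ratio_to_4k = vision_pro_per_eye / pixel_count(UHD_4K)

print(f"Vision Pro vs. Quest Pro, per eye: {ratio_to_quest_pro:.1f}x")
print(f"Vision Pro per eye vs. a 4K panel: {ratio_to_4k:.1f}x")
```

Under these assumed figures the per-eye ratio comes out at roughly 3.3, comfortably above the “more than double” threshold, and each eye’s display holds more pixels than a 4K panel.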

A Meta Quest Pro user in mixed reality mode. (Meta)

Apple has applied its silicon expertise to equip the Vision Pro with an M2 chip as well as a new sensor processor dubbed the R1. Together they reduce motion lag to 12 milliseconds.

Apple’s eye tracking is accurate enough that it is used in the Vision Pro as a selection mechanism. One can target an app just by looking at it on the Vision Pro’s Home screen and launch it with a finger pinch. The Home screen is very much like that of an iPad, except that it is overlaid on a view of the external environment:

Eye tracking is also used for “foveated rendering”, which means that the parts of the image that are in the peripheral vision of the user are rendered with less detail, thus saving rendering time and reducing latency.
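To make the idea of foveated rendering concrete, here is a minimal sketch of how a renderer might pick a resolution for each part of the image based on its distance from the gaze point. Everything in it is hypothetical: the eccentricity bands, the scale factors, and the function names are my own illustration, not Apple’s implementation or values.

```python
import math

# Hypothetical bands: (maximum angle from the gaze point in degrees,
# fraction of full resolution rendered inside that band).
BANDS = [(5.0, 1.0), (15.0, 0.5), (float("inf"), 0.25)]


def resolution_scale(pixel, gaze, pixels_per_degree):
    """Return the render-resolution fraction for a pixel, given the gaze point.

    Uses a small-angle approximation: angular distance is taken to be the
    pixel distance divided by the display's pixels per degree.
    """
    dx = pixel[0] - gaze[0]
    dy = pixel[1] - gaze[1]
    eccentricity_deg = math.hypot(dx, dy) / pixels_per_degree
    for max_deg, scale in BANDS:
        if eccentricity_deg <= max_deg:
            return scale
    return BANDS[-1][1]


def shaded_fraction(width, height, gaze, pixels_per_degree, step=16):
    """Estimate the share of full-resolution shading work that remains."""
    total = 0.0
    count = 0
    for y in range(0, height, step):
        for x in range(0, width, step):
            scale = resolution_scale((x, y), gaze, pixels_per_degree)
            total += scale * scale  # work scales with area, so scale squared
            count += 1
    return total / count


# Hypothetical 3000 x 3000 display at 30 pixels per degree, gaze at the center.
fraction = shaded_fraction(3000, 3000, (1500, 1500), 30)
print(f"Shading work relative to full resolution: {fraction:.0%}")
```

The point of the sketch is the shape of the saving: only a small disc around the gaze point is rendered at full detail, so most of the frame costs a fraction of the work, which is where the reduction in rendering time comes from.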

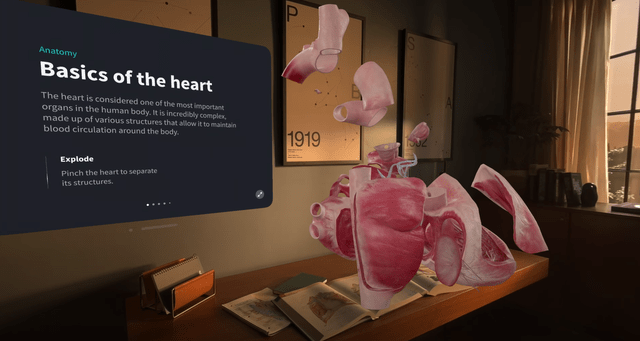

Its stereoscopic 3D display enables effects and use cases beyond what iPad or iPhone can provide. This is the “spatial computing” aspect. Virtual screens and 3D objects can be superimposed on the screen view of the local environment:

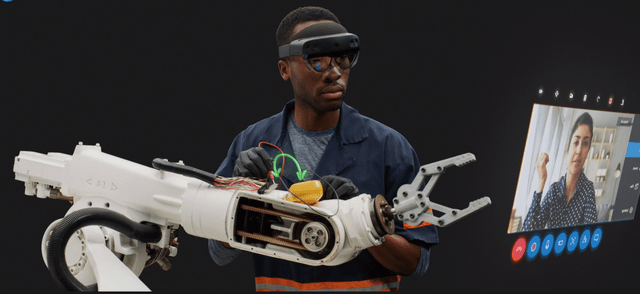

With Vision Pro, users can engage in virtual meetings with presentation material floating in a virtual screen:

But for all its technical advances, it must be acknowledged that the Vision Pro is probably not going to meet with great sales success. The price tag alone assures that. So why did Apple choose to release this system, and who is it really for?

Vision Pro falls short of the augmented reality glasses Apple has been working on

When Apple bought Akonia Holographics in 2018, it was assumed that Akonia’s tech would be folded into an augmented reality headset along the lines of HoloLens.

According to a Reuters report:

Akonia said its display technology allows for “thin, transparent smart glass lenses that display vibrant, full-color, wide field-of-view images.” The firm has a portfolio of more than 200 patents related to holographic systems and materials, according to its website.

In the smart glasses approach, the glasses allow direct viewing of the external world, but overlaid with a visual display. Microsoft’s HoloLens takes this a step further by using sensors and image processing to create the illusion that virtual objects are anchored in the real world. This is augmented reality.
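The anchoring illusion comes down to re-expressing a fixed world position in the wearer’s frame of reference every time the head moves. The sketch below shows the idea in a simplified top-down 2D form. The coordinate conventions and the function name are my own assumptions for illustration; real systems track full 3D position and orientation from their sensors.

```python
import math


def world_to_view(point, head_pos, head_yaw_rad):
    """Express a world-anchored point in the head's frame of reference.

    Top-down 2D convention (an assumption for this sketch): world
    coordinates are (x, z); yaw 0 means the head faces +z, and positive
    yaw turns the head to the right, toward +x.
    Returns (right, forward) distances relative to the head.
    """
    dx = point[0] - head_pos[0]
    dz = point[1] - head_pos[1]
    right = dx * math.cos(head_yaw_rad) - dz * math.sin(head_yaw_rad)
    forward = dx * math.sin(head_yaw_rad) + dz * math.cos(head_yaw_rad)
    return right, forward


# A virtual object anchored 2 meters in front of the wearer's starting pose.
anchor = (0.0, 2.0)

# Facing straight ahead: the object is dead center, 2 meters away.
print(world_to_view(anchor, (0.0, 0.0), 0.0))

# After the head turns 90 degrees to the right, the same object must be
# drawn 2 meters to the wearer's left to appear fixed in the room.
print(world_to_view(anchor, (0.0, 0.0), math.pi / 2))
```

The object’s world position never changes; only its position in the wearer’s view does, and it moves opposite to the head. Redrawing it at that updated position every frame is what makes it appear pinned to the room.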

Mixed reality headsets use video cameras built into the headset to provide a view of the external world. Virtual objects and data are then easier to merge digitally on the headset display.

Theoretically, self-contained mixed reality headsets like the Vision Pro should allow moving around and interacting with the real world, but there are limitations. The field of view of the headset cameras is limited, and the image on the display screen may be spatially distorted.

Apple has done a great job of addressing these limitations, but there are still good reasons why you wouldn’t want to wear your Vision Pro about town. It’s bulky and heavy, and quite a bit more obtrusive than a pair of glasses. The look reminds me of Futurama’s Bender, especially with the external eye display turned on.

Following the Akonia acquisition, there have been reports of various snags and hiccups with the display technology. Apple has apparently decided to offer a mixed reality headset that provides the “experience” of augmented reality glasses, down to the external display of the user’s eyes.

That Apple went to such lengths is not just Apple worshipping at the altar of “user experience”, although Apple does do that. The Vision Pro clearly shows where Apple wants to go, and where it expects to be eventually: lightweight, unobtrusive AR glasses that consumers are comfortable wearing just about anywhere.

Apple introduced Vision Pro as just the first in a line of “spatial computing” devices, thereby confirming that the AR glasses are still in the works. But even Apple couldn’t overcome all the technical barriers involved in shrinking a HoloLens-style augmented reality system down to a pair of lightweight glasses.

These barriers include the display technology and processing. For the AR glasses, Apple will need to shift from OLED displays to microLED displays. MicroLED displays can be more compact and higher resolution than OLED, but they’ve proved difficult to make. Apple has been working on microLED for years, since it acquired LuxVue in 2014. I described this research in an article for members of my investing group in 2018.

Processing is another area where the technology isn’t quite there yet. Apple’s use of the M2 is understandable, since it was the best available processor, but the M2 is fabricated on TSMC’s (TSM) N5P process, an improved version of its “5 nm” N5 node. Apple needs processors that are even more energy efficient, and that probably requires TSMC’s next generation N3 process, which is just starting to ramp production. Apple’s next generation iPhone is expected to use N3 this year.

So, with three key areas (the holographic optics, the microLED display, and processing) still somewhat immature, Apple found itself unable, in my view, to create a cool, wearable alternative to HoloLens.

Vision Pro is mainly for Apple developers

But Apple is close enough, I believe, that it wanted to get developers started working on augmented reality apps that would be 100% compatible with the AR glasses to come. And that’s what stands out about Vision Pro. It provides all the underpinning technology and application programming interfaces (APIs) for developers to start developing AR apps that will carry over perfectly to the glasses.

This came through very clearly in the Platforms State of the Union, which is an introduction to new programming models and digital technologies that Apple provides for developers every year at WWDC. It’s like the keynote, but with less hype and more substance.

The section on visionOS provides an overview of the new operating system and the underlying APIs. One key takeaway is that Apple has been building towards the release of an augmented reality device for years. Apple has provided ARKit APIs that allow the creation of augmented reality experiences for iPhone and iPad, using the cameras and 3D sensors of these devices to combine virtual objects with the external environment on the device’s display.

The key difference is that developers now have stereoscopic 3D displays and sensors, but the programming APIs remain much the same. The other key takeaway is that Vision Pro, for all of its limitations and compromises, provides essentially the same sensor and display functionality as the objective AR glasses.

From a developer standpoint, programs for Vision Pro will work the same on the AR glasses. The basic reason for this is that visionOS does the hard work of embedding virtual objects in the real-world view for the developer. The OS provides the sensing of user inputs such as eye movements and hand gestures automatically as well.

Even though Apple seems to be pitching this as a consumer device, that’s really not the target audience in the near term. The target audience is primarily Apple developers who want to get an early start on the new field of “spatial computing”.

The other main constituency for the new device will be professional users who can benefit from the unique capabilities of “spatial computing” in the course of their work. That includes game developers and 3D content creators, as well as professional designers and engineers. Microsoft likewise turned to professional users when it found little consumer interest in HoloLens.

Investor takeaways: don’t worry about Vision Pro sales

As a consumer product, Vision Pro doesn’t make much sense for Apple, and I question the wisdom of marketing it as such. When it doesn’t sell in large numbers, there will be an army of Apple naysayers ready to shout, “we told you so!”

As a stepping stone to the objective system for developers, Vision Pro makes a lot of sense, especially if the objective system is only a year or two away. Apple needs to get developers started on the new platform, and to that end, Vision Pro doesn’t need to be pretty. It just needs to work.

And it does work. Now there’s still the deeper question of whether people want to be immersed in a “spatial computing” environment. Virtual reality and the concept of an immersive 3D interface to computers have been the subject of science fiction for decades. It makes for great entertainment, but do people really want it in their day-to-day lives?

Apparently Apple believes they do, or will. But I doubt that belief is founded on the Vision Pro. Most users still find wearing a computer on their face a little off-putting. Apple, in its usual secrecy, has denied us a look at the objective system, the cool AR glasses you would actually want to wear.

But that objective system must exist, or else Vision Pro would make no sense whatsoever. So investors don’t need to worry about Vision Pro’s undoubtedly minuscule sales potential. The true Next Big Thing is still to come. I remain long Apple and rate it a Buy.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of AAPL, MSFT either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.
