Summary:

- Meta stock has recently corrected along with the other mega-cap tech stocks.

- Meta’s masterstroke strategy positions the company well to dominate the AI era alongside Nvidia, and creates enhanced revenue growth opportunities for investors.

- Nvidia CEO Jensen Huang has even described building Nvidia’s AI Foundry service around Meta’s key AI technology, furthering Meta’s ambition to dominate the AI era.

- Ironically, the two tech giants that are currently best positioned to make their AI technology the standard across the industry, Meta and Nvidia, are the cheapest Magnificent 7 stocks.

- Meta stock is being upgraded to a ‘buy’ rating.


Meta Platforms (NASDAQ:META) stock corrected by around 16% in July. While it has since recovered some ground, it is still 8.5% off its high. The stock market, and particularly the Magnificent 7 stocks, is going through a period of intense volatility, which is creating buying opportunities in long-term AI winners. With open-source models gaining prevalence among enterprises, Meta’s decision to open-source Llama is a masterstroke that lets the company gain control over its own AI narrative and create new avenues of advertising growth over the long term, which supports an upgrade of the stock to a ‘buy’ rating.

In the previous article, we discussed Meta’s unique advantage over advertising rivals Google (GOOG) and Amazon (AMZN) thanks to the popularity of its messaging apps like WhatsApp and Messenger. We delved into Meta’s revenue growth opportunities from using generative AI to automate business messaging between consumers and merchants/advertisers on its platforms.

In this article, we will primarily be delving into how Meta’s decision to open-source Llama should benefit long-term investors. We will also cover how open-sourcing Llama advances Meta’s endeavor to enable businesses to run their own generative AI-powered assistants through its various social media apps, extending its lead over competitors and driving revenue growth for shareholders. Just as Nvidia’s (NVDA) GPUs have become prevalent for running AI workloads across the industry, Meta’s Llama is positioned to become the most prevalent AI model powering all sorts of generative AI applications.

Meta’s masterstroke strategy to open-source Llama

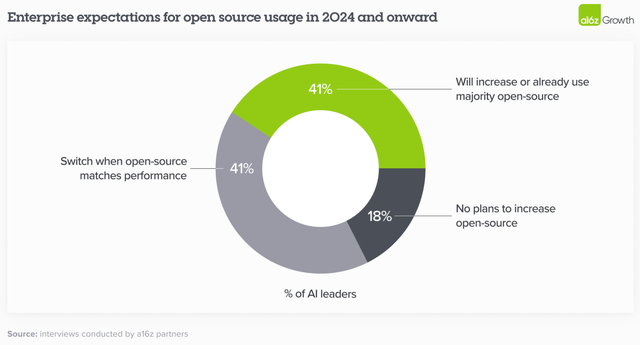

Venture capital giant Andreessen Horowitz (also abbreviated as ‘a16z’ by the company itself) released research findings earlier this year that uncovered how enterprises are adopting generative AI in their businesses. Perhaps the most important point made by a16z was that enterprises are increasingly preferring open-source models over closed-source models:

We estimate the market share in 2023 was 80%–90% closed source, with the majority of share going to OpenAI. However, 46% of survey respondents mentioned that they prefer or strongly prefer open source models going into 2024…. In 2024 and onwards, then, enterprises expect a significant shift of usage towards open source, with some expressly targeting a 50/50 split—up from the 80% closed/20% open split in 2023.

Andreessen Horowitz

Meta is the only mega-cap tech giant to open-source its flagship model, the Llama series, with CEO Mark Zuckerberg proclaiming on the Q2 2024 Meta earnings call:

I think we are going to look back at Llama 3.1 as an inflection point in the industry where open source AI started to become the industry standard, just like Linux is.

Meta’s Llama model is available through all the major cloud providers, and essentially serves as a threat to several of the top proprietary models out there, namely Microsoft-OpenAI’s GPT model series, and Google’s Gemini. In fact, in an open letter that Mark Zuckerberg wrote alongside the release of Llama 3.1, he professed that:

Developers can run inference on Llama 3.1 405B on their own infra at roughly 50% the cost of using closed models like GPT-4o, for both user-facing and offline inference tasks.

Hence, Meta is coming out swinging at the Microsoft Azure OpenAI Service, as well as Google Cloud.

Furthermore, the threat that Meta’s open-source Llama model poses to cloud providers does not stop there.

In a recent article, we delved deeply into Nvidia’s software ambitions: building AI factories for enterprises (private data centers for running AI workloads) and making “NVIDIA AI Enterprise” the core operating system for all AI workloads, built into servers made by Original Equipment Manufacturers (OEMs) like Dell, HP and Super Micro Computer. This essentially reduces the need for enterprises to migrate to the cloud to take advantage of generative AI.

Now Meta has partnered with both Nvidia and Dell to make Llama 3.1 available to enterprise customers that want to run their own AI factories for on-premises computing.

To get a sense of the extent to which this broadens Meta’s reach to enterprises, consider this statistical insight from CEO Andy Jassy on Amazon’s Q2 2024 earnings call:

about 90% of the global IT spend is still on premises. And if you believe that equation is going to flip, which I do, there’s a lot of growth ahead of us in AWS as the leader

However, with Meta and Nvidia (as well as OEMs like Dell) forming such a powerful partnership to offer enterprises all that they need to run AI workloads privately on-premises, cloud providers may find it harder to attract these enterprise customers onto their own platforms and to encourage use of their own proprietary models (particularly in the case of Microsoft Azure and Google Cloud). So while the cloud giants see a massive total addressable market ahead, with about 90% of global IT spend still on-premises, the truth is that a notable portion of this market could stay on-premises and build generative AI applications that run on open-source models like Meta’s Llama.

Regardless of whether businesses choose to deploy generative AI applications through cloud platforms or on-premises computing solutions, Meta is savvily making its models available through both avenues, similar to how Nvidia has successfully been selling its GPUs to both cloud service providers and to on-premises clients.

In fact, at the SIGGRAPH conference last week, Nvidia CEO Jensen Huang discussed how his company has been leveraging Meta’s open-source model to build services around it:

I think that Llama is genuinely important. We built this concept called an AI Foundry around it so that we can help everybody build. You know, a lot of people, they have a desire to build AI. And it’s very important for them to own the AI because once they put that into their flywheel, their data flywheel, that’s how their company’s institutional knowledge is encoded and embedded into an AI. So they can’t afford to have the AI flywheel, the data flywheel somewhere else. So open source allows them to do that. But they don’t really know how to turn this whole thing into an AI and so we created this thing called AI Foundry. We provide the tooling, we provide the expertise, Llama technology, we have the ability to help them turn this whole thing, into an AI service. And, and then when we’re done with that, they take it, they own it. The output of it is what we call a NIM. And this NIM, this neuro micro NVIDIA Inference Microservice, they just download it, they take it, they run it anywhere they like, including on-prem. And we have a whole ecosystem of partners, from OEMs that can run the NIMs to, GSIs like Accenture that that we train and work with to create Llama-based NIMs and pipelines and now we’re off helping enterprises all over the world do this. I mean, it’s really quite an exciting thing. It’s really all triggered off of the Llama open-sourcing.

Essentially, as more and more companies around the world decide to use Nvidia’s GPUs and infrastructure, it potentially creates opportunities for greater adoption of Meta’s Llama as well.

Meta’s LLMs could potentially become even more prevalent than Nvidia’s expensive GPUs, given the fact that Llama is open-source and freely available for use.

However, this also means that Meta does not directly generate revenue from the use of its models in enterprises’ generative AI applications.

In fact, it is the partner companies that will make money by offering tools and services that help enterprise customers modify and customize the Llama models for their own use cases, as CEO Mark Zuckerberg shared in his open letter:

Beyond releasing these models, we’re working with a range of companies to grow the broader ecosystem. Amazon, Databricks, and NVIDIA are launching full suites of services to support developers fine-tuning and distilling their own models. Innovators like Groq have built low-latency, low-cost inference serving for all the new models. The models will be available on all major clouds including AWS, Azure, Google, Oracle, and more. Companies like Scale.AI, Dell, Deloitte, and others are ready to help enterprises adopt Llama and train custom models with their own data. As the community grows and more companies develop new services, we can collectively make Llama the industry standard and bring the benefits of AI to everyone.

Now, this broadening partner ecosystem indeed enables Meta to make Llama the most prominently used model across businesses and industries.

The real question, though, is how Meta’s shareholders will benefit from this open-sourcing strategy.

Meta’s monetization opportunities through open-sourcing Llama

Meta Platforms’ main business remains selling ad space on its various apps, and the company strives to offer rich generative AI-powered services that strengthen the advertising potency of those apps going forward. Zuckerberg also suggested the possibility of “people being able to pay for, whether it’s bigger models or more compute or some of the premium features” on the Q1 2024 Meta earnings call.

The point is, Meta wants to offer the best generative AI features and experiences through its social media apps, and Zuckerberg explained on the last earnings call how the open-source approach can help the company achieve this vision:

For Meta’s own interests, we’re in the business of building the best consumer and advertiser experiences. And to do that, we need to have access to the leading technology infrastructure and not get constrained by what competitors will let us do.

…

the reason why open sourcing this is so valuable for us is that we want to make sure that we have the leading infrastructure to power the consumer and business experiences that we are building. But the infrastructure, it’s not just a piece of software that we can build in isolation. It really is an ecosystem with a lot of different capabilities that other developers and people are adding to the mix, whether that’s new tools to distill the models into the size that you want for a custom model, or ways to fine-tune things better or make inference more efficient or all different other kinds of methods that we haven’t even thought of yet, the silicon optimizations that the silicon companies are doing, all the stuff. It is an ecosystem.

And this open-source strategy is already working to encourage third-party developers to expand the capabilities of the Llama model, as The Wall Street Journal reported recently:

Within days of the launch of Llama 3 in April, the open-source community improved the model so that it could take in 20 times as many tokens at a time than when it launched. A token is a unit of text, like a word or a punctuation mark.

This significantly enhances Llama’s ability to process longer/larger prompts from users.

Furthermore, Meta is even going as far as hiring a team that helps third-party developers build upon Llama, as Zuckerberg shared in his open letter:

We’re building teams internally to enable as many developers and partners as possible to use Llama, and we’re actively building partnerships so that more companies in the ecosystem can offer unique functionality to their customers as well.

This strategy should grant Meta valuable insights into broader consumer and enterprise usage trends for generative AI applications powered by its own models, which would indeed feed into its own R&D efforts, with Zuckerberg mentioning on the last earnings call:

we are planning what’s going to be in Llama 4 and Llama 5 and beyond based on what capabilities we think are going to be most important for the road map that I just laid out for having the breadth of utility that you’re going to need in something like Meta AI, making it [so that] businesses and creators and individuals can stand up any kind of AI agents that they want

Essentially, this open-sourcing approach gives Meta greater control over the AI technology upon which it builds its own social media services and experiences, and upon which the broader developer community worldwide builds as well.

In fact, in his open letter, Zuckerberg emphasized the importance of this control and freedom to innovate by citing the lost opportunities during the last technology wave, the smartphone era of the past decade, in which Apple (AAPL) wielded tight control through its closed ecosystem:

One of my formative experiences has been building our services constrained by what Apple will let us build on their platforms. Between the way they tax developers, the arbitrary rules they apply, and all the product innovations they block from shipping, it’s clear that Meta and many other companies would be freed up to build much better services for people if we could build the best versions of our products and competitors were not able to constrain what we could build. On a philosophical level, this is a major reason why I believe so strongly in building open ecosystems in AI

So when you hear Meta’s executives proclaim the benefits of open-sourcing their models, but are confused over how it will generate revenue and profits for the company given that they don’t charge developers for the deployment of Llama, think instead about Meta’s ability to more freely innovate and roll out new social media features that drive engagement and create new surfaces for ad monetization.

Zuckerberg clearly feels that his company lost out on too many product monetization opportunities over the past decade. So now by striving to make “Llama the industry standard”, and by running the company that potentially builds the most prevalent open-source model, Meta could enjoy much greater product innovation freedom, conducive to new and greater sources of ad revenue for shareholders.

Meta’s leadership in automated business messaging

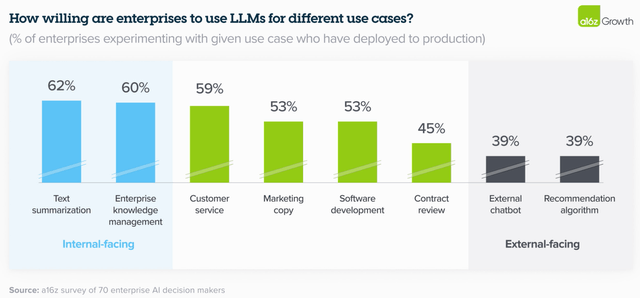

One key area where innovation and advancements will be necessary will be consumer-facing AI applications.

Survey-based research by Andreessen Horowitz found that businesses are much less willing to deploy generative AI models for customer-facing assistants than they are for internal use cases, fearing the risk of chatbots giving bad answers or even hallucinating.

Andreessen Horowitz

Nonetheless, as the industry strives to constantly improve the quality and capabilities of their models to make them fit for customer-facing applications, Meta seems to have a lead here over rivals.

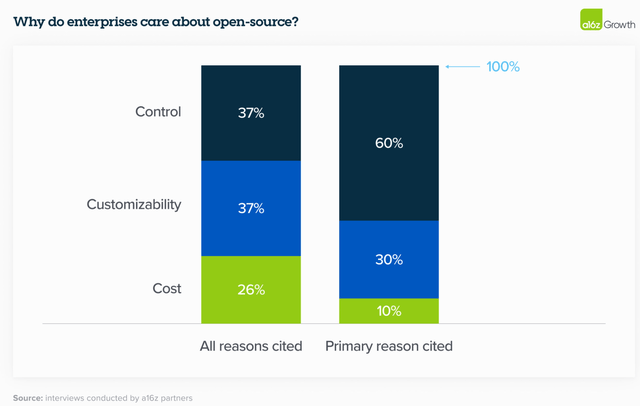

Aside from cost advantages, open-source models also yield other key benefits over closed-source models. As part of Andreessen Horowitz’s survey, when enterprises were asked why they preferred open-source models, the results revealed that:

Control (security of proprietary data and understanding why models produce certain outputs) and customization (ability to effectively fine-tune for a given use case) far outweighed cost as the primary reasons to adopt open source.

Andreessen Horowitz

Hence, because Meta’s open-source model allows developers to customize it at their discretion to fit their organizations’ needs and gain better control over what answers their chatbots produce in response to consumer queries, Llama is more likely to be adopted by developers worldwide for consumer-facing applications, relative to closed-source LLMs like OpenAI’s GPT series and Google’s Gemini.

Now here’s how this could benefit Meta’s own social media apps.

Keep in mind that Meta’s entire business revolves around consumer-facing services, facilitating communication and connections between people. A key aspect of this has been messaging apps like WhatsApp and Messenger, and the company has been increasingly focused on driving commerce activities through these apps over the past several years.

This has manifested in Meta having a particular lead over rivals Google and Amazon in facilitating communications between merchants and customers through offering “click-to-message” advertising.

Instagram ‘Click-to-message’ ad (Instagram)

This has been growing to become a great additional source of revenue. While Meta does not often disclose how much revenue it generates from its business messaging features, in early 2023 it was reported that:

The company announced in February 2023 that click-to-message ads had reached $10 billion a year in sales, and in April, Meta said that its Family of Apps other revenue was $380 million for the quarter, driven primarily by WhatsApp Business Platform.

Moreover, on earnings calls, Meta’s executives have spoken extensively about automating these business messaging features through generative AI-powered chatbots, enabling businesses to produce their own customized assistants that would respond on their behalf to consumers interested in advertised products.

The social media giant has been testing this feature with businesses over the past few quarters, with Meta CFO Susan Li sharing on the earnings call back in April 2024 that:

we’re also learning a lot from these tests to make these AIs more performant over time as well. So we’ll be expanding these tests over the coming months and we’ll continue to take our time here to get it right before we make it more broadly available.

Perfecting these customer-facing AI assistants will indeed take time, as merchants will want to make absolutely sure that the chatbots represent their businesses competently and can effectively sell their products/services, without any embarrassing hallucination incidents.

And this is where Meta’s open-source strategy can benefit the company’s own products and services. Recognizing that open-source models are likely to be the preferred choice for powering consumer-facing applications, independent developers, as well as developers from businesses across industries, will be contributing to improving the efficacy of Meta’s Llama for deployment in customer-facing applications.

And as Llama’s capabilities continue to expand and the quality of its responses continues to improve thanks to the work of third-party developers, Meta will be able to leverage these contributions to also enhance the automated business messaging features in its own social media apps. And as mentioned earlier, with Meta building dedicated teams that constantly interact with the third-party developer community, the company will gain valuable insights into how enterprises’ use cases are evolving to serve end-consumers better through generative AI applications, which Meta can subsequently incorporate into advancing the capabilities of its next-generation models, namely Llama 4, Llama 5, and so on.

In fact, on the last earnings call, CFO Susan Li already cited progress with facilitating better business messaging automations with the replacement of Llama 2 with Llama 3:

While we are in the early stages, we continue to expand the number of advertisers we are testing with and have seen good advances in the quality of responses since we began using Llama 3.

The point is, with Wall Street eager to see generative AI applications that can help companies boost top-line revenue growth, as opposed to just boosting cost efficiency, Meta seems to be in the lead in terms of providing the open-source technology that can most effectively facilitate consumer-facing commerce. As a result, it is also likely to sustain its lead over rivals Google and Amazon in facilitating communications between advertisers and shoppers through its social media apps in this new AI era.

Risks facing the bull case for Meta

Open-sourcing could undermine Meta’s edge in AI: Having understood the advantages of open-sourcing for Meta, you may be wondering whether this would enable competitors and new social media start-ups to produce applications using a model that Meta has spent considerable resources to build. Rivals would be aware of the underlying model that is powering Meta’s own social media features, and could take advantage of the same open-source contributions that Meta is seeking to benefit from.

Zuckerberg’s response to this risk is that no other competitor has the breadth of social media apps and services that Meta offers. That breadth yields a rich trove of user data that can be fed into its models and algorithms to create better experiences, and that can’t be matched by its competitors, as he highlighted on the earnings call back in February 2024:

we typically have unique data and build unique product integrations anyway, so providing infrastructure like Llama as open source doesn’t reduce our main advantage.

Nonetheless, while Meta is seeking to build its automated business messaging ad features for deployment through its own social media apps, there is still the risk of enterprises building Llama-based assistants and deploying them through their own websites or other digital platforms, given the free availability of Llama through the major cloud providers and other tech-focused vendors. Consequently, Meta could miss out on ad revenue opportunities created by its own technology. The main way to mitigate this risk is by sustaining the daily and monthly user engagement on its social media apps, encouraging businesses to deploy their generative AI-powered advertising/marketing campaigns primarily through Meta’s platforms, as opposed to alternative avenues.

Competing models: While Llama 3.1 is undoubtedly a powerful AI model, it still lacks the multimodal capabilities that OpenAI’s GPT-4o and Google’s Gemini boast. While Mark Zuckerberg has already hinted at future Llama models possessing more multimodal and agentic capabilities, the absence of these features from Llama 3.1 could undermine the extent to which enterprises utilize and contribute to Llama.

Moreover, aside from Llama, there are other open-source models from start-ups like Mistral AI and Cohere that are deployed by enterprises worldwide. Additionally, while we discussed Nvidia inducing greater adoption of Meta’s Llama model through its own AI foundries/factories, note that Nvidia also offers access to other models like Mistral and Stable Diffusion XL. The point is, enterprise adoption of other open-source models could limit the extent to which Meta builds a burgeoning developer ecosystem to continuously expand Llama’s capabilities. That being said, Meta is a much better-known and more established tech giant than these up-and-coming start-ups, which could certainly help the company attract more third-party developers to constantly enhance Llama.

Regulatory Risks: While Meta’s ambition to “make Llama the industry standard” in the AI era could certainly help it overcome the system policies of other major tech giants like Apple, opening the door to greater innovation and monetization possibilities, there is still the risk of regulators undermining Meta’s revenue growth opportunities.

In Europe, the company already faces scrutiny over utilizing users’ data for training its AI models, limiting Meta’s ability to leverage this data. In the US, Meta continues to use posts and photos that users share publicly on its social media apps, to train its AI models. However, any regulatory crackdowns in the US and around the globe relating to data privacy laws could undermine Meta’s ability to build more powerful AI models and algorithms.

Furthermore, Meta also faces other legal headwinds, with the Federal Trade Commission seeking to bar the company from monetizing the data of users under the age of 18, amid intensifying concerns around children’s safety and cases of child sexual exploitation through its social media apps. In the interest of transparency, this is one of the key reasons I don’t personally own the stock, given the alleged ill effects of social media on society. Meta Platforms as a company certainly does not intend for its apps to be used for such purposes, although it does need to do more to eliminate the unintended harms of its apps on users, which lawmakers are reportedly pushing the company to do. On that note, any developments showing that Meta has successfully eradicated such instances from its platforms could certainly change my view on owning the stock, even if that means having to buy the shares at a higher valuation. But for now, I will continue to share my research on the company’s AI strategies, to help investors better understand the growth opportunities ahead.

Meta financial performance and valuation

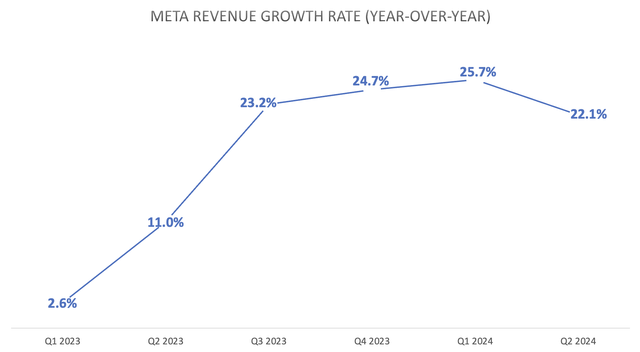

Meta Platforms impressed Wall Street by delivering revenue growth of 22% last quarter, as the market was expecting growth of less than 20%. Meta proved that its years of investment in core AI infrastructure (made even before the generative AI revolution started) are paying off in the form of improved advertising potency of its social media apps.

Nexus Research, data compiled from company filings

On the last earnings call, CFO Susan Li highlighted that:

In Q2, the total number of ad impressions served across our services and the average price per ad both increased 10%… Pricing growth was driven by increased advertiser demand in part due to improved ad performance.
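As a rough cross-check on the figures in this quote, the sketch below treats ad revenue as impressions multiplied by average price per ad, so that the two growth rates compound. That decomposition and the function name `combined_growth` are illustrative assumptions, not Meta’s reporting methodology, and the 10% inputs are the rounded figures from the call.

```python
def combined_growth(volume_growth: float, price_growth: float) -> float:
    """Growth in (volume x price) when each factor grows by the given rate.

    Assumes revenue = impressions * average price per ad, which is a
    simplification used here for illustration only.
    """
    return (1 + volume_growth) * (1 + price_growth) - 1


# Rounded Q2 figures quoted above: impressions +10%, price per ad +10%.
implied_ad_growth = combined_growth(0.10, 0.10)
print(f"Implied ad revenue growth: {implied_ad_growth:.1%}")
```

Under these assumptions the implied growth is about 21%, close to the 22% revenue growth cited earlier. The small gap may simply reflect rounding in the two inputs and revenue from outside advertising.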

With Meta delivering returns on investments from its CapEx from prior years, executives are now seeking to win confidence from investors that its heavy investments in new AI infrastructure to run its generative AI features will also pay off in a few years, with CEO Mark Zuckerberg mentioning on the last earnings call:

We are planning for the compute clusters and data we’ll need for the next several years. The amount of compute needed to train Llama 4 will likely be almost 10 times more than what we used to train Llama 3, and future models will continue to grow beyond that. It’s hard to predict how this trend — how this will trend multiple generations out into the future. But at this point, I’d rather risk building capacity before it is needed rather than too late, given the long lead times for spinning up new inference projects.

And as we scale these investments, we’re of course, going to remain committed to operational efficiency across the company.

This indeed signals much higher CapEx ahead.

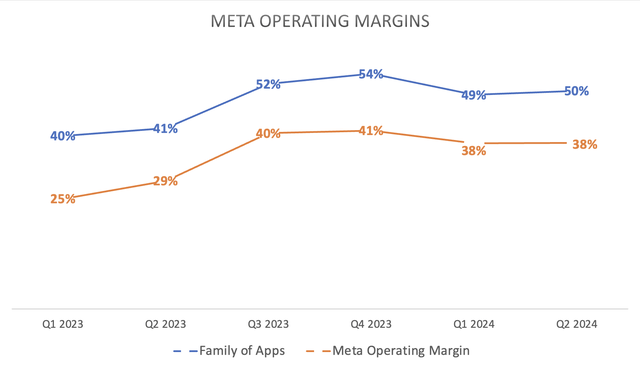

Up till now, Meta has been maintaining a company-wide operating margin close to 40%.

Nexus Research, data compiled from company filings

However, CFO Susan Li did warn on the last earnings call that higher depreciation costs will weigh on profitability going forward, amid the increased expenditure on AI data centers and servers:

we expect infrastructure costs will be a significant driver of expense growth next year. As we recognize depreciation and operating costs associated with our expanded infrastructure footprint.

Nonetheless, with Meta’s savvy strategy of open-sourcing its model and fostering a broad developer ecosystem around it, Llama is well-positioned to become the leading LLM. That would realize Zuckerberg’s ambition of possessing the leading AI infrastructure, conducive to the best generative AI features and experiences across Meta’s social media apps, which should ultimately translate into new and greater sources of advertising revenue for shareholders.

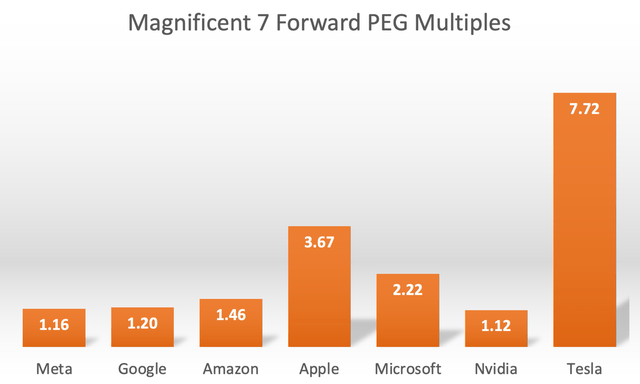

Yet despite the company’s stronger positioning in the AI era (relative to the smartphone era, with mobile operating systems controlled by Apple/Google), META has not been rewarded with a commensurate valuation multiple by the market, with the stock still trading at 22.55x forward earnings.

Moreover, adjusting the forward PE multiple by the expected future earnings growth rate to arrive at the forward Price-Earnings-Growth [PEG] ratio reveals that the stock is one of the cheapest of the Magnificent 7, trading at 1.16x, well below its 5-year average of 1.52x.

Nexus, data compiled from Seeking Alpha
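To make the PEG figures above concrete, here is a minimal sketch that assumes the common definition of forward PEG: forward P/E divided by expected annual earnings growth, expressed in percent. The growth horizon behind the 1.16x figure is not stated in the article, so the function names and the back-solved growth rate are illustrative only.

```python
def forward_peg(forward_pe: float, growth_pct: float) -> float:
    """Forward PEG = forward P/E / expected annual EPS growth (in percent)."""
    if growth_pct <= 0:
        raise ValueError("PEG is not meaningful for non-positive growth")
    return forward_pe / growth_pct


def implied_growth_pct(forward_pe: float, peg: float) -> float:
    """Back out the growth rate (in percent) implied by a P/E and a PEG."""
    return forward_pe / peg


# Figures quoted in the article: 22.55x forward earnings, 1.16x forward PEG.
growth = implied_growth_pct(22.55, 1.16)
print(f"Implied expected earnings growth: {growth:.1f}% per year")
print(f"Round-trip PEG check: {forward_peg(22.55, growth):.2f}x")
```

Under that definition, the two quoted multiples imply expected earnings growth of roughly 19% a year.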

It is ironic that the two tech giants that are currently best positioned to make their AI technology the standard across the industry, Meta Platforms and Nvidia, are the ones that are trading at the cheapest valuation multiples on a Forward PEG basis.

The key difference between the two AI winners is that Nvidia is positioned to immediately generate revenue from the sales of its GPUs and other adjacent data center hardware, while setting itself up to earn recurring software revenue as ‘NVIDIA AI Enterprise’ comes built into the complete AI supercomputers it sells.

On the other hand, Meta is currently not generating any upfront revenue from the deployment of its Llama model by enterprises across industries. Instead, its pay-off is expected at a later stage of this AI revolution, with greater control over its own AI narrative opening the door to greater product innovations and monetization opportunities.

Amid the recent rotation out of the mega-cap tech stocks, investors are concerned about further downside.

However, given the company’s proven track record of delivering returns on investments, and with the stock trading at such a cheap multiple of 1.16x Forward PEG, long-term investors should already start buying the dip in META, hence the upgrade to a ‘buy’ rating.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
