Summary:

- One analyst estimates Nvidia Corporation has a total addressable market of $2.4 trillion.

- Nvidia’s dominance in AI and parallel computing supports aggressive growth assumptions.

- Risks include potential tariffs on Taiwan-made chips, which could impact Nvidia’s margins and pricing strategy.

- NVDA stock is a buy for aggressive growth investors.


I have given NVIDIA Corporation (NASDAQ:NVDA) a Hold recommendation the last three times I wrote about the stock, mainly because its valuation requires aggressive assumptions about the company’s future revenue, earnings, and free cash flow growth. The market may eventually become reluctant to push the stock much higher, especially if the company’s fundamentals falter. Although Nvidia has the best AI software, accelerator, and networking solutions, strong market demand for open-source alternatives could eventually reduce its market share, revenue growth, and margins.

However, the company should continue posting strong results over the next one or two years. Nvidia’s Blackwell and its potential successor, Rubin, have an estimated one- to two-year lead over competitors. The company also has other competitive advantages, such as its CUDA platform and dominant InfiniBand networking technology, that should keep it on top of the AI infrastructure market for quite some time. So, there are reasons to invest in the stock today.

This article will discuss the soaring demand for AI infrastructure and how Nvidia is meeting that demand with its new Blackwell graphics processing unit (“GPU”). It will also review the company’s third-quarter fiscal year (“FY”) 2025 results, valuation, and risks, and explain why I upgraded the stock to a Buy for aggressive growth investors.

Soaring demand for AI infrastructure and Blackwell

Throughout 2024, a growing number of analysts and investors have questioned whether businesses will keep spending on AI infrastructure that so far delivers little return on investment. A Goldman Sachs Group, Inc. (GS) newsletter stated:

Tech giants and beyond are set to spend over $1tn on AI CapEx [capital expenditure] in coming years, with so far little to show for it. So, will this large spend ever pay off? MIT’s Daron Acemoglu and GS’ Jim Covello are skeptical, with Acemoglu seeing only limited US economic upside from AI over the next decade and Covello arguing that the technology isn’t designed to solve the complex problems that would justify the costs, which may not decline as many expect.

If Daron Acemoglu and Jim Covello prove correct, there is a real risk that an AI bubble forms and pops. As a result, analysts and investors closely watch Nvidia’s earnings reports for signs of declining AI-chip demand. Experts are currently debating whether computer scientists can improve the large language models (“LLMs”) powering generative AI applications enough to “solve the complex problems that would justify the costs.”

On Nvidia’s third-quarter FY 2025 earnings call, Cantor Fitzgerald analyst C.J. Muse asked Nvidia Chief Executive Officer (“CEO”) Jensen Huang, “I guess just a question for you on the debate around whether scaling for large language models have stalled.” Scaling LLMs means increasing their size, training data, and computing power to improve their capabilities. Muse’s question refers to the debate over whether making LLMs ever larger still yields benefits worth the costs. In effect, Muse was fishing for early signs of a drop-off in demand for Nvidia’s chips: if scaling LLMs has reached the point of diminishing returns, demand for Nvidia’s chips to train and run them could weaken.

CEO Huang responded to the question by saying:

A foundation model pre-training scaling is intact and it’s continuing. As you know, this is an empirical law, not a fundamental physical law, but the evidence is that it continues to scale.

Foundation model pre-training is the initial training stage of an LLM, in which the model is fed massive amounts of text to gain general knowledge about language and context. Huang’s response means that, from his vantage point, computer scientists can still build and train larger LLMs. CEO Huang also said the following on the third-quarter earnings call (emphasis added):

What we’re learning, however, is that it’s [model pre-training] not enough. We’ve now discovered two other ways to scale. One is post-training scaling. Of course, the first generation of post-training was reinforcement learning human feedback, but now we have reinforcement learning AI feedback and all forms of synthetic data, generated data that assists in post-training scaling. And one of the biggest events and one of the most exciting developments is Strawberry, ChatGPT o1, OpenAI’s o1, which does inference time scaling, what’s called test time scaling.

Post-training scaling techniques, which include reinforcement learning from human feedback (people rate the model’s outputs) and reinforcement learning from AI feedback (other AI systems provide the feedback), improve a model’s performance after the pre-training phase. Reinforcement learning from AI feedback is newer, faster, and more scalable than relying on human feedback. Synthetic data is data generated by “AI factories” that computer scientists use to supplement real-world data.

Inference-time scaling, which Huang notes is also called test-time scaling, improves a model’s answers by giving it more computation at the moment it responds to a query rather than during training. In plain terms, the AI model spends more time “thinking” before it answers. Huang cited OpenAI’s o1, reportedly developed under the codename Strawberry, as an example. OpenAI’s website indicates that ChatGPT Plus subscribers can use o1 within ChatGPT; as far as I can tell, there is no product officially named “ChatGPT o1.”

These three ways of scaling LLMs have contributed to healthy demand for Nvidia’s AI infrastructure, especially Blackwell, its latest GPU accelerator, which offers a significant performance improvement over earlier chips like the H100. Chief Financial Officer (“CFO”) Colette Kress cited the following statistic on the third-quarter earnings call: “Just 64 Blackwell GPUs are required to run the GPT-3 benchmark compared to 256 H100s or a 4 times reduction in cost.” That performance improvement has created enormous demand for Blackwell chips. CFO Kress said on the earnings call, “Blackwell demand is staggering, and we are racing to scale supply to meet the incredible demand customers are placing on us.” CEO Huang said the following about Blackwell on the call (emphasis added):

Blackwell production is in full steam. In fact, as Colette mentioned earlier, we will deliver this quarter more Blackwells than we had previously estimated. And so the supply chain team is doing an incredible job working with our supply partners to increase Blackwell, and we’re going to continue to work hard to increase Blackwell through next year. It is the case that demand exceeds our supply and that’s expected as we’re in the beginnings of this generative AI revolution as we all know. And we’re at the beginning of a new generation of foundation models that are able to do reasoning and able to do long thinking and of course, one of the really exciting areas is physical AI, AI that now understands the structure of the physical world.

Blackwell is currently supply-constrained, and the company expects demand to exceed supply for several quarters into FY 2026, which investors were excited to hear. Demand exceeding supply should help Nvidia maintain its high margins well into the next fiscal year.

Company fundamentals

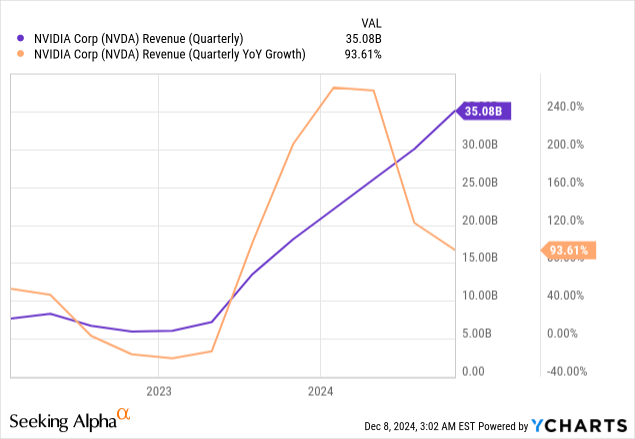

Nvidia’s third-quarter FY 2025 total revenue increased approximately 94% year over year to $35.08 billion, beating management’s prior guidance of $32.5 billion and analysts’ estimate of $33.13 billion.

CFO Kress said the following about revenue:

“All market platforms posted strong sequential and year-over-year [revenue] growth, fueled by the adoption of Nvidia accelerated computing and AI.”

Let’s look at the market that drives most of the company’s revenue growth: Data Center.

NVIDIA Third Quarter FY 2025 Investor Presentation.

Data Center revenue, which includes compute, networking products, and services, grew 17% sequentially and 112% year over year to $30.8 billion. Data Center compute revenue rose 22% sequentially and 132% year over year to $27.6 billion, and networking revenue grew 20% year over year to $3.1 billion. In the highlights section of the above image, the company states that it is the largest inference platform. Investors should note this, because AI inference chips are where competitors are most likely to make inroads against Nvidia. Competitors gaining share in inference chips would be an early sign that Nvidia’s dominance in AI chips is eroding.
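Growth rates can be inverted to recover the comparison-period figures, which is a quick way to sanity-check the segment numbers above. The minimal Python sketch below uses only the rounded figures quoted in this section, so its results are approximate; the helper name `prior_value` is hypothetical.

```python
def prior_value(current, growth_pct):
    """Back out the comparison-period value from a current value and its growth rate.

    Dividing by (1 + g) undoes a growth rate g, given here in percent.
    """
    return current / (1 + growth_pct / 100)


data_center = 30.8  # Q3 FY 2025 Data Center revenue, $ billions
compute = 27.6      # Data Center compute revenue, $ billions
networking = 3.1    # networking revenue, $ billions

prior_quarter = prior_value(data_center, 17)  # implied Q2 FY 2025 Data Center revenue
prior_year = prior_value(data_center, 112)    # implied Q3 FY 2024 Data Center revenue

print(f"Implied prior-quarter Data Center revenue: ${prior_quarter:.2f}B")
print(f"Implied prior-year Data Center revenue: ${prior_year:.2f}B")
# Compute plus networking should roughly equal the segment total.
print(f"Compute + networking: ${compute + networking:.1f}B vs. reported ${data_center}B")
```

The $0.1 billion gap between compute plus networking and the reported segment total most likely reflects rounding in the published figures.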

NVIDIA Third Quarter FY 2025 Investor Presentation.

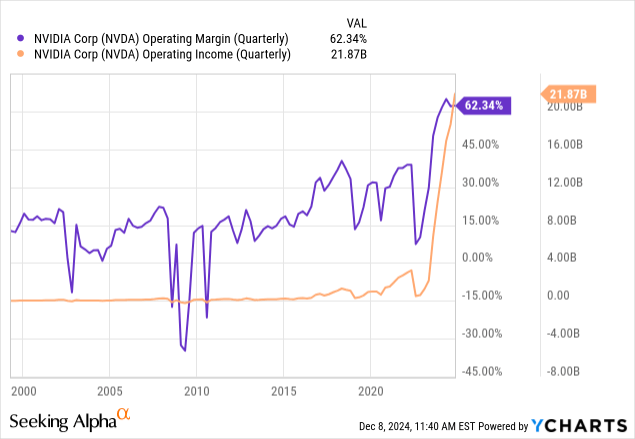

A shift toward higher-margin Data Center sales increased GAAP (Generally Accepted Accounting Principles) gross margin by 0.6 points year over year to 74.6%. Sequentially, GAAP gross margin fell 0.5 points as sales shifted from higher-margin H100 systems to newer, lower-margin products. Generally, older products with established manufacturing processes have lower costs and higher margins.

The third-quarter FY 2025 operating margin rose 4.85 points year over year to 62.34%. On the surface, Nvidia’s gross and operating margins are excellent and best in class. However, bearish investors note that both peaked in the first quarter of FY 2025 (April 2024), at 78.4% and 64.9%, respectively. Although the company has a valid explanation for the modest decline from those peaks (the rapid growth of lower-margin Blackwell chips), investors dislike falling margins because they can signal that competitors are eroding a company’s pricing power.
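Margin changes here are quoted in percentage points, which is not the same as a percent change. The minimal Python sketch below shows the difference using only the margins cited above; the prior-year margins are implied by subtraction rather than taken from Nvidia’s filings, and the helper name is hypothetical.

```python
def point_and_relative_change(old_pct, new_pct):
    """Return (change in percentage points, relative change in percent)."""
    points = new_pct - old_pct
    relative = points / old_pct * 100
    return points, relative


# Prior-year (Q3 FY 2024) margins implied by the point changes quoted above.
gross_prior_year = 74.6 - 0.6
operating_prior_year = 62.34 - 4.85

gm_pts, gm_rel = point_and_relative_change(gross_prior_year, 74.6)
op_pts, op_rel = point_and_relative_change(operating_prior_year, 62.34)

print(f"Gross margin: +{gm_pts:.2f} pts, +{gm_rel:.2f}% relative")
print(f"Operating margin: +{op_pts:.2f} pts, +{op_rel:.2f}% relative")

# Distance from the Q1 FY 2025 peaks (78.4% gross, 64.9% operating).
print(f"Gross margin vs. peak: {74.6 - 78.4:.1f} pts")
print(f"Operating margin vs. peak: {62.34 - 64.9:.2f} pts")
```

A 0.6-point gain on a 74% base is under a 1% relative improvement, while the 4.85-point operating-margin gain is roughly an 8% relative improvement.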

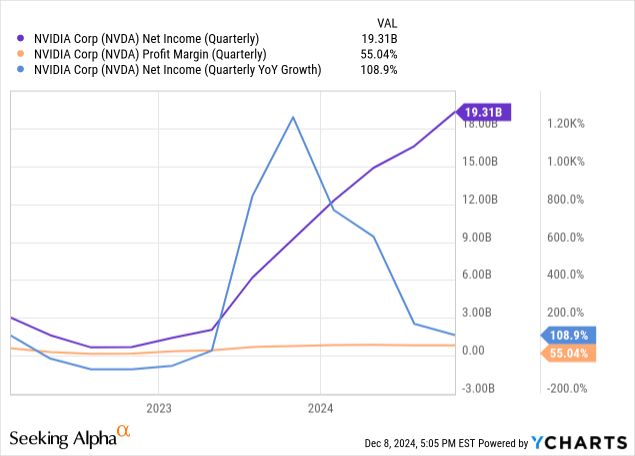

Nvidia’s GAAP net income grew 109% year over year to $19.309 billion, a net profit margin of 55.04%. Third-quarter FY 2025 weighted average shares declined by 166 million year over year to 24.77 billion. GAAP diluted earnings per share (“EPS”) rose 111% over the prior-year quarter to $0.78, beating analysts’ estimates by $0.08.
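The margin and EPS figures follow directly from net income, revenue, and share count. The minimal Python check below uses the rounded numbers in this section, assuming the weighted average share count is the diluted count, so the last digit may differ slightly from reported values.

```python
net_income = 19.309     # Q3 FY 2025 GAAP net income, $ billions
revenue = 35.08         # Q3 FY 2025 revenue, $ billions
diluted_shares = 24.77  # weighted average shares, billions (assumed diluted)

net_margin = net_income / revenue  # share of each revenue dollar kept as profit
eps = net_income / diluted_shares  # earnings per share

print(f"Net profit margin: {net_margin:.2%}")
print(f"Diluted EPS: ${eps:.2f}")
```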

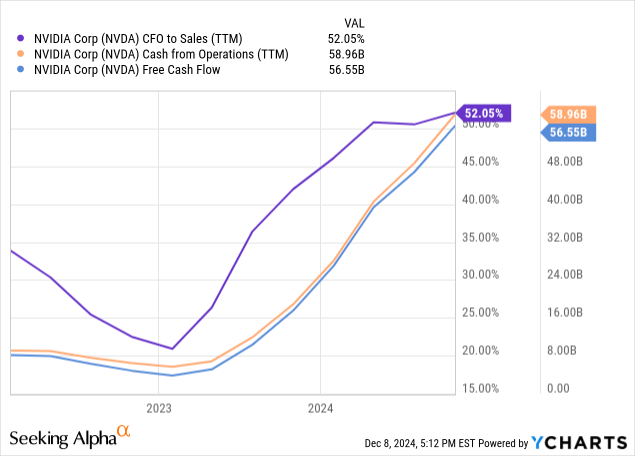

The company’s trailing 12-month (“TTM”) operating cash flow to sales was 52%, a fantastic figure indicating that the company converts every $1 of sales into $0.52 of operating cash flow. Its TTM operating cash flow was $58.96 billion, and its TTM free cash flow (“FCF”) was $56.55 billion.
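These cash-flow ratios can be reproduced from the TTM figures. The Python sketch below uses the TTM revenue of $113.27 billion cited later in this article and assumes FCF is defined as operating cash flow minus capital expenditures, so the gap between the two approximates TTM capex.

```python
ttm_revenue = 113.27  # TTM revenue, $ billions
ttm_ocf = 58.96       # TTM operating cash flow, $ billions
ttm_fcf = 56.55       # TTM free cash flow, $ billions

ocf_to_sales = ttm_ocf / ttm_revenue  # operating cash generated per $1 of sales
fcf_margin = ttm_fcf / ttm_revenue    # free cash flow per $1 of sales
implied_capex = ttm_ocf - ttm_fcf     # assumes FCF = operating cash flow - capex

print(f"Operating cash flow to sales: {ocf_to_sales:.1%}")
print(f"FCF margin: {fcf_margin:.2%}")
print(f"Implied TTM capital expenditures: ${implied_capex:.2f}B")
```

Under that assumption, capital spending of roughly $2.4 billion is small relative to operating cash flow, which is why FCF and operating cash flow are so close.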

The company returns much of its cash flow to investors. CFO Kress said on the earnings call, “In Q3, we returned $11.2 billion to shareholders in the form of share repurchases and cash dividends.”

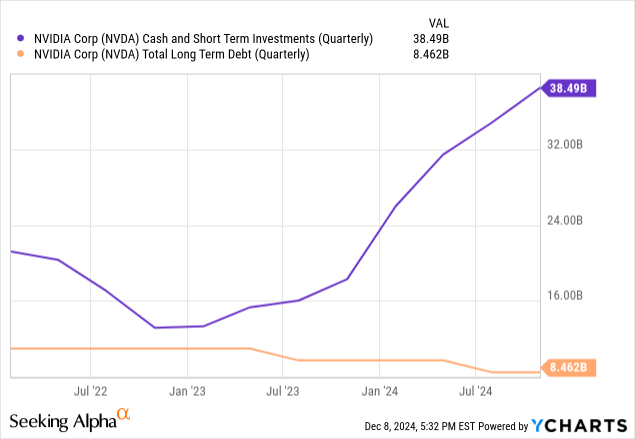

At the end of its third quarter of FY 2025, the company had $38.49 billion in cash and short-term investments and $8.46 billion in long-term debt. Nvidia’s debt-to-EBITDA ratio of 0.11 is one of the lowest in its history, which means it could quickly repay its outstanding debt. Its debt-to-equity ratio of 0.16 is another sign of a solid balance sheet.
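The claim that Nvidia could quickly repay its debt can be checked against the balance-sheet figures alone. This minimal Python sketch uses only the two numbers above and ignores other liabilities, so it is a rough liquidity check rather than a full leverage analysis.

```python
cash = 38.49           # cash and short-term investments, $ billions
long_term_debt = 8.46  # long-term debt, $ billions

net_cash = cash - long_term_debt  # cash left after hypothetically repaying all long-term debt
coverage = cash / long_term_debt  # how many times cash covers long-term debt

print(f"Net cash: ${net_cash:.2f}B")
print(f"Cash covers long-term debt {coverage:.1f}x")
```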

NVIDIA Third Quarter FY 2025 Investor Presentation.

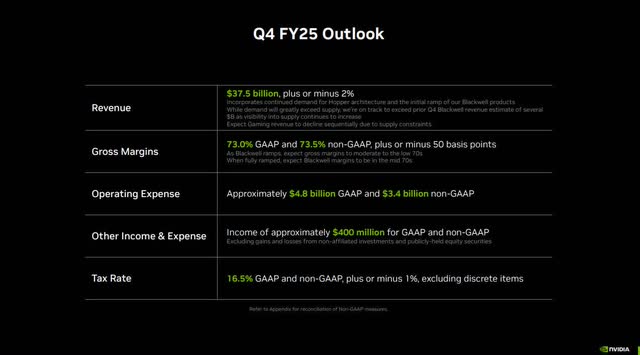

Nvidia forecasts fourth-quarter FY 2025 revenue of $37.5 billion, plus or minus 2%, ahead of analysts’ pre-earnings forecast of $36.44 billion.
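Translating “plus or minus 2%” into dollars shows how the guidance range compares with consensus. A minimal Python sketch using only the figures above:

```python
midpoint = 37.5    # Q4 FY 2025 revenue guidance, $ billions
tolerance = 0.02   # plus or minus 2%
consensus = 36.44  # analysts' pre-earnings forecast, $ billions

low = midpoint * (1 - tolerance)
high = midpoint * (1 + tolerance)

print(f"Guidance range: ${low:.2f}B to ${high:.2f}B")
print("Low end above consensus:", low > consensus)
```

Even the bottom of the range, about $36.75 billion, sits above the $36.44 billion consensus.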

CFO Colette Kress gave the following commentary about gross margins and operating expenses (emphasis added):

GAAP and non-GAAP gross margins are expected to be 73% and 73.5%, respectively, plus or minus 50 basis points. Blackwell is a customizable AI infrastructure with several different types of Nvidia-build chips, multiple networking options, and for air and liquid-cooled Data Centers. Our current focus is on ramping to strong demand, increasing system availability, and providing the optimal mix of configurations to our customer. As Blackwell ramps, we expect gross margins to moderate to the low-70s. When fully ramped, we expect Blackwell margins to be in the mid-70s. GAAP and non-GAAP operating expenses are expected to be approximately $4.8 billion and $3.4 billion, respectively. We are a data center scale AI infrastructure company. Our investments include building data centers for development of our hardware and software stacks and to support new introductions.

In the above commentary, she warns investors that gross margins could keep dropping as lower-margin Blackwell chips ramp. So, investors shouldn’t automatically assume that competition is eroding the company’s margins if gross margins fall into the low 70s.

Valuation

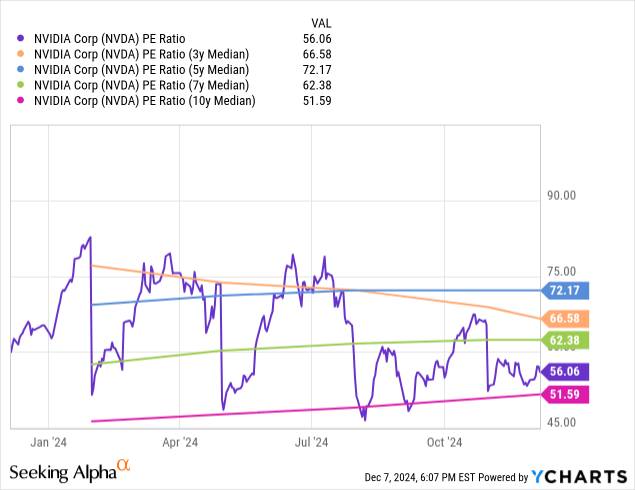

Nvidia’s price-to-earnings (P/E) ratio is 56.06, below its three-, five-, and seven-year medians but above its ten-year median. I believe the three- and five-year medians are the most relevant, since the company’s monstrous performance only occurred over the last several years, after OpenAI’s ChatGPT showed the market the possibilities of generative AI. If the stock traded at its three-year median P/E today, the stock price would be $169.11, an 18.72% rise over its December 6 closing price of $142.44.
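The three-year-median target can be reverse-engineered: price divided by P/E gives the implied trailing EPS, and the target price divided by that EPS gives the median P/E behind it. The article does not state the median itself, so the value computed below is an inference, assuming trailing EPS is held constant.

```python
price = 142.44            # December 6, 2024 closing price
pe_now = 56.06            # current P/E ratio
price_at_median = 169.11  # price at the three-year median P/E, per the article

ttm_eps = price / pe_now                       # implied trailing EPS
implied_median_pe = price_at_median / ttm_eps  # median P/E implied by the target price
upside = price_at_median / price - 1           # percentage gain to the target

print(f"Implied trailing EPS: ${ttm_eps:.2f}")
print(f"Implied three-year median P/E: {implied_median_pe:.1f}")
print(f"Upside: {upside:.2%}")
```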

Nvidia’s one-year forward price/earnings-to-growth (“PEG”) ratio is 0.64. The market generally regards a PEG ratio below 1.0 as a sign of undervaluation. If the stock traded at a one-year forward PEG ratio of 1.0 (fair value), the stock price would be $221.50, roughly a 55% rise over the December 6 closing price.
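Because PEG is the P/E ratio divided by expected growth, holding forward EPS and growth fixed makes PEG proportional to price, so the fair-value price is simply price × (target PEG / current PEG). The minimal Python sketch below (the helper name is hypothetical) shows that the rounded 0.64 gives about $222.56, and that the article’s $221.50 presumably reflects an unrounded PEG near 0.643.

```python
def price_at_target_peg(price, peg, target_peg=1.0):
    """PEG = (P/E) / growth; with EPS and growth held fixed, PEG scales linearly with price."""
    return price * target_peg / peg


price = 142.44  # December 6, 2024 closing price

fair_from_rounded = price_at_target_peg(price, 0.64)  # using the rounded PEG
implied_peg = price / 221.50                          # PEG consistent with the article's $221.50
upside = 221.50 / price - 1

print(f"Price at PEG 1.0 (rounded PEG 0.64): ${fair_from_rounded:.2f}")
print(f"Unrounded PEG implied by $221.50: {implied_peg:.3f}")
print(f"Upside to $221.50: {upside:.1%}")
```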

Let’s analyze Nvidia using a reverse discounted cash flow (“DCF”) analysis. This DCF uses a terminal growth rate of 4% because the company should continue growing its cash flow steadily, well above the market average, after the ten-year analysis period. I use a discount rate of 9.5%, which represents the opportunity cost of investing in Nvidia and reflects slightly less than a moderate level of risk. This reverse DCF uses unlevered FCF.

Reverse DCF

| Item | Value |
|---|---|
| Free cash flow, TTM as reported for Q3 FY 2025 (millions) | $56,546 |
| Terminal growth rate | 4% |
| Discount rate | 9.5% |
| Years 1-10 growth rate | 20.2% |
| Stock price (December 6, 2024, closing price) | $142.44 |
| Terminal FCF value | $368.547 billion |
| Discounted terminal value | $2,477.961 billion |
| FCF margin | 49.92% |

If Nvidia can maintain a 50% FCF margin for the next ten years, the company must grow revenue 20.2% annually to justify the December 6 closing price. Nvidia’s revenue has grown around 31% annually over the last ten years. My previous article on Nvidia explained why I believe the company can achieve at least 20% annual revenue growth over the next ten years. It may achieve even higher growth.
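The reverse DCF can be approximated with a textbook two-stage model. The Python sketch below is an illustration under stated assumptions, not the calculator the author used: the helper names `dcf_value` and `implied_growth` are hypothetical, FCF is modeled as a constant margin on revenue, a Gordon-growth terminal value is discounted from the end of year ten, and enterprise value is approximated using the weighted average share count from the earnings section in place of shares outstanding. Because DCF tools differ in conventions such as terminal-value timing, its output may not exactly match the 20.2% in the table.

```python
def dcf_value(base_revenue, fcf_margin, growth,
              discount_rate=0.095, terminal_growth=0.04, years=10):
    """Present value of a two-stage DCF in which FCF = fcf_margin * revenue.

    Revenue grows at `growth` for `years` years. A Gordon-growth terminal value,
    based on the following year's FCF, is discounted from the end of year `years`.
    """
    value = 0.0
    revenue = base_revenue
    for year in range(1, years + 1):
        revenue *= 1 + growth
        value += fcf_margin * revenue / (1 + discount_rate) ** year
    terminal_fcf = fcf_margin * revenue * (1 + terminal_growth)
    terminal_value = terminal_fcf / (discount_rate - terminal_growth)
    return value + terminal_value / (1 + discount_rate) ** years


def implied_growth(target_value, base_revenue, fcf_margin, low=-0.5, high=1.0, **kwargs):
    """Bisection search for the growth rate at which the DCF value equals target_value."""
    for _ in range(200):
        mid = (low + high) / 2
        if dcf_value(base_revenue, fcf_margin, mid, **kwargs) < target_value:
            low = mid
        else:
            high = mid
    return (low + high) / 2


# Inputs from this article, in $ billions unless noted.
ttm_revenue = 113.27
price = 142.44
shares = 24.77  # weighted average shares (billions), a stand-in for shares outstanding
cash, debt = 38.49, 8.46
# Unlevered FCF is compared with enterprise value: equity value minus cash plus debt.
enterprise_value = price * shares - cash + debt

for margin in (0.4992, 0.40, 0.35):
    g = implied_growth(enterprise_value, ttm_revenue, margin)
    print(f"FCF margin {margin:.0%}: implied revenue growth {g:.1%} per year")
```

Lowering the assumed FCF margin raises the revenue growth needed to justify the same price, which is the trade-off the article explores with its 40% and 35% margin scenarios.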

A Seeking Alpha article discussing William Blair’s initiation of coverage on Nvidia included a quote from Sebastien Naji:

“This vertical approach to computing is packaged into Nvidia’s DGX product, which has greatly expanded its [total addressable market] beyond the traditional GPU market (roughly $100 billion TAM) to the broader semiconductor market ($800 billion) and cloud services markets ($1.6 trillion).”

If that statement proves valid, the company has a total addressable market of $2.4 trillion ($800 billion in semiconductors plus $1.6 trillion in cloud services). Despite Nvidia’s market dominance, its current TTM revenue of $113.27 billion represents only a 4.7% share of that addressable market. Given the size of the opportunity and the high demand for AI infrastructure, the company has the potential to grow revenue faster than 20% annually over the next ten years.
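A quick check of the market-share arithmetic, plus one illustrative scenario. The Python sketch below uses only figures quoted above. The ten-year scenario is hypothetical and holds the TAM flat, a simplifying assumption, since the market itself would presumably grow.

```python
# TAM figures from William Blair's Sebastien Naji, in $ billions.
semiconductor_tam = 800
cloud_services_tam = 1_600
ttm_revenue = 113.27  # Nvidia TTM revenue, $ billions

# The $2.4 trillion total adds only these two figures, so the ~$100B GPU
# market is treated as part of the broader semiconductor market.
total_tam = semiconductor_tam + cloud_services_tam
current_share = ttm_revenue / total_tam

# Hypothetical scenario: 20% annual growth for ten years against a TAM held flat.
revenue_year_10 = ttm_revenue * 1.20 ** 10
share_year_10 = revenue_year_10 / total_tam

print(f"Total addressable market: ${total_tam / 1000:.1f} trillion")
print(f"Current share: {current_share:.1%}")
print(f"Year-10 revenue at 20% growth: ${revenue_year_10:.0f}B ({share_year_10:.0%} of today's TAM)")
```

Even after a decade of 20% growth, revenue of roughly $700 billion would amount to under a third of today’s estimated TAM.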

The biggest question is how long the company can maintain an FCF margin of 50% against significant competition. According to a William Blair article announcing the initiation of coverage on Nvidia, senior associate Sebastien Naji said (emphasis added):

Nvidia has a long history of designing parallel computing systems to handle complex processing tasks. In the past, this positioned Nvidia as a leader in niche markets like gaming, automotive, visualization, and high-performance computing. The rising AI tide has catapulted parallel computing to the forefront of the tech industry and has driven massive demand for the company’s GPUs and parallel computing stack. Part of this is related to Nvidia’s technical aptitude—we estimate it has a one- to two-year lead over competitors in AI accelerator performance. The other key driver is the company’s system-level approach with DGX/CUDA, which allows Nvidia to layer in critical IP from top to bottom of the IT stack.

Nvidia is dominant in two AI markets: training and inference. That dominance may allow Nvidia to maintain an FCF margin of around 50% for the next several years. Although it faces significant competition in training, that competition comes mostly from one player, Advanced Micro Devices, Inc. (AMD). Even if AMD catches up, Nvidia would be in a duopoly in which it should be able to maintain high margins on training chips.

Most of Nvidia’s competition will come in the AI inference chip market. Competitors there include large publicly traded players like Broadcom Inc. (AVGO) and QUALCOMM Incorporated (QCOM), smaller private players like Cerebras Systems, Graphcore Limited, Groq, and SambaNova Systems, and non-traditional chipmakers like Amazon.com, Inc. (AMZN) and Meta Platforms, Inc. (META). The AI inference chip market could quickly become commoditized, which would make it challenging for Nvidia to maintain high margins on inference chips. However, if you believe in the company’s innovative culture and its reputation for superior products, Nvidia may be able to maintain a high FCF margin for quite some time, just not at 50%.

If Nvidia’s FCF margin averages a still-aggressive 40% over the next ten years, the company would need to grow revenue 23.3% annually over the same period. If it averages a 35% FCF margin, the company would need 25.1% annual revenue growth. I believe these growth rates are within Nvidia’s reach, and I think the stock is within a fair valuation range.
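Because each margin and growth pair is meant to justify the same share price, the pairs should produce broadly similar cash flows by year ten. The minimal Python check below compounds the TTM revenue cited above at the article’s three required growth rates (simple compounding, not the author’s calculator).

```python
ttm_revenue = 113.27  # TTM revenue, $ billions

# (FCF margin, required annual revenue growth) pairs from the article's reverse DCF.
scenarios = [(0.4992, 0.202), (0.40, 0.233), (0.35, 0.251)]

year_10 = []  # (year-10 revenue, year-10 FCF) for each scenario
for margin, growth in scenarios:
    revenue_10 = ttm_revenue * (1 + growth) ** 10
    fcf_10 = margin * revenue_10
    year_10.append((revenue_10, fcf_10))
    print(f"margin {margin:.0%}, growth {growth:.1%}: "
          f"year-10 revenue ${revenue_10:,.0f}B, year-10 FCF ${fcf_10:,.0f}B")
```

Year-10 FCF lands in a narrow band of roughly $356 billion to $372 billion across the three scenarios, but the required year-10 revenue climbs from about $713 billion to over $1 trillion as the margin falls, a useful figure to compare against the $2.4 trillion TAM estimate.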

The above analysis rests on many assumptions, so investors should not count on the company achieving those exact numbers. Additionally, investor sentiment goes a long way toward determining where a stock actually trades.

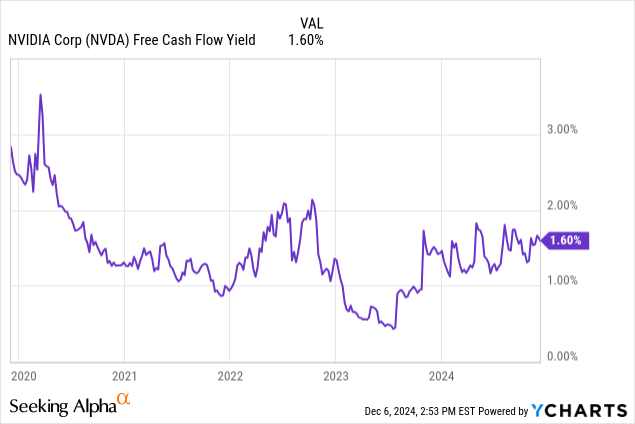

I sometimes use FCF yield (FCF divided by market capitalization) to loosely value a stock. Generally, an FCF yield at the top of its trading range signals undervaluation, while an FCF yield at the low end of its trading range signals overvaluation. Over the last several years, the market has likely overvalued Nvidia when it traded at an FCF yield below 1% and undervalued it at an FCF yield above 2%. At the current FCF yield of 1.6%, the market likely values the stock fairly.
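Because FCF yield is FCF divided by market capitalization, holding FCF and the share count fixed makes the yield inversely proportional to the share price. The minimal Python sketch below (helper name hypothetical) translates the 1% and 2% yield thresholds into prices; the market-cap check uses the weighted average share count as a stand-in for shares outstanding, which is an approximation.

```python
def price_at_yield(price, current_yield, target_yield):
    """Price at which the FCF yield would equal target_yield.

    FCF yield = FCF / market cap, so with FCF and share count held fixed,
    the yield is inversely proportional to the share price.
    """
    return price * current_yield / target_yield


price = 142.44   # December 6, 2024 closing price
ttm_fcf = 56.55  # TTM free cash flow, $ billions
shares = 24.77   # weighted average shares, billions (stand-in for shares outstanding)

approx_yield = ttm_fcf / (price * shares)
print(f"Approximate FCF yield: {approx_yield:.2%}")

for target in (0.01, 0.02):
    p = price_at_yield(price, 0.016, target)
    print(f"Price at a {target:.0%} FCF yield: ${p:.2f} ({p / price - 1:+.0%} vs. Dec. 6 close)")
```

All else equal, the 2% threshold the author prefers for long-term buyers corresponds to a share price roughly 20% below the December 6 close.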

I believe Nvidia trades at least at fair value, and the stock could have some upside over the next year as the company ramps Blackwell production to meet market demand.

Risks

I typically don’t bring politics into the discussion, but the company does face uncertainty from the president-elect’s stated policy of raising tariffs on Taiwanese-manufactured chips. Higher tariffs could have many negative repercussions, such as reduced global trade, disrupted supply chains, higher production costs that hurt Nvidia’s margins, and possibly higher prices that could lower demand for AI chips.

Investors should remain aware that the company has a high customer concentration risk. Some of its top customers (cloud service providers) have developed in-house solutions. For instance, Alphabet Inc. (GOOGL, GOOG) has developed an in-house AI accelerator, the Tensor Processing Unit (“TPU”), that can partially replace Nvidia’s technology. Other in-house solutions are similar: they can replace Nvidia’s technology only in specific applications. Still, multiple customers partially replacing its GPUs could eventually reduce Nvidia’s market share and revenue growth. The company’s FY 2024 10-K states (emphasis added):

We receive a significant amount of our revenue from a limited number of customers within our distribution and partner network. Sales to one customer, Customer A, represented 13% of total revenue for fiscal year 2024, which was attributable to the Compute & Networking segment. With several of these channel partners, we are selling multiple products and systems in our portfolio through their channels. Our operating results depend on sales within our partner network, as well as the ability of these partners to sell products that incorporate our processors. In the future, these partners may decide to purchase fewer products, not to incorporate our products into their ecosystem, or to alter their purchasing patterns in some other way. Because most of our sales are made on a purchase order basis, our customers can generally cancel, change or delay product purchase commitments with little notice to us and without penalty. Our partners or customers may develop their own solutions; our customers may purchase products from our competitors; and our partners may discontinue sales or lose market share in the markets for which they purchase our products, all of which may alter partners’ or customers’ purchasing patterns.

Nvidia is currently at the top of its game, and the market shows little concern about customer concentration or rising competition. Still, these risks could hurt the company in the long term. Investors should keep them in mind when using long-term valuation methods like the reverse DCF in this article, which uses a ten-year timeline.

Nvidia stock is a buy

In my last article on the company, I said:

“Longer-term buy-and-hold investors may be better off waiting for a more significant sell-off to bring the FCF [yield] over 2% or investing in other companies with a better risk versus reward ratio.”

I still believe that waiting for an FCF yield above 2% before considering a purchase is advisable for most long-term investors. Despite the stock’s impressive performance over the last several years and its seemingly invincible competitive position, the company is vulnerable to competitors taking away just enough market share to shrink margins or slow revenue growth below what analysts and investors need to justify its valuation. Risk-averse investors should remain cautious about buying at the current valuation.

However, Nvidia has enough upside over the next year that an aggressive growth investor can buy it for the short term (a year) or until they observe signs of the company’s competitive advantages fraying. I am upgrading the stock to a buy, but only for investors who understand the risks and will take the time to monitor the company’s results closely.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.