Summary:

- Google Cloud is running circles around Microsoft Azure in terms of in-house chips and is still ahead of AWS on this front, which is translating into market share gains.

- Thanks to its leadership in custom TPUs, Google has been successfully accelerating revenue growth, seizing more market share, and expanding profit margins all at the same time, which is remarkable.

- While the bears were caught off-guard by the 6-percentage-point jump in Google Cloud’s profit margin, it is likely not surprising for investors who comprehensively understand Alphabet’s savvy AI strategies beneath the surface.


Aside from the major chip players, the three big cloud providers are also perceived to be the ‘picks and shovels’ of this AI revolution. Currently, Alphabet Inc.’s (NASDAQ:GOOG) (NASDAQ:GOOGL) Google Cloud increasingly appears to be the best positioned to capitalize on generative AI, thanks to possessing the most comprehensive technology stack, from its own custom chips all the way up to its own natively multimodal AI models. This is translating into market share gains coupled with accelerating profit margin expansion.

In the previous article, we discussed the growing risks around Google’s core Search business. While Google’s new “AI Overviews” still doesn’t fully embrace the back-and-forth chatting experience that rivals are offering, OpenAI has been stepping up its encroachment into Alphabet’s territory by extending into broader Search functionalities. Alphabet is certainly in a vulnerable position given growing antitrust woes targeting its monopoly over the Search and digital advertising markets.

The threats to Google’s Search business certainly cannot be overlooked given that it still makes up 56% of the tech giant’s top-line revenue. It was the key reason for the downgrade of the stock to a ‘hold’ rating in the last article.

While GOOG remains a ‘hold’, the company is making remarkable progress in its cloud business.

Google Cloud’s strengthening market position

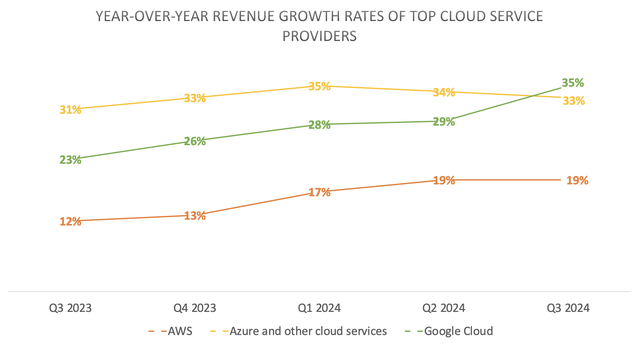

With its Q3 2024 earnings release, Alphabet surprised the market: Google Cloud revenue grew 35%, or 6 percentage points faster than the expected 29% growth rate.

Nexus Research, data compiled from company filings

Turning to the Google Cloud segment, which continued to deliver very strong results this quarter. Revenue increased by 35% to $11.4 billion in the third quarter, reflecting accelerated growth in GCP across AI infrastructure, generative AI solutions and core GCP products. Once again, GCP grew at a rate that was higher than cloud overall.

– CFO Anat Ashkenazi, Alphabet Q3 2024 earnings call (emphasis added).

While Microsoft Corporation’s (MSFT) Azure saw its growth rate slow down to 33%, and Amazon.com, Inc.’s (AMZN) AWS’ growth plateaued at 19%, Google Cloud witnessed accelerating growth. Keep in mind that the Google Cloud segment consists of both Google Cloud Platform (GCP) and the Google Workspace business (the productivity suite competing with Microsoft’s Office 365 apps). So with CFO Anat Ashkenazi disclosing that “GCP grew at a rate that was higher than cloud overall”, it means that GCP’s actual growth rate was even higher than the 35% reported for the segment as a whole.
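The reasoning above is a weighted-average argument: the segment's 35% growth is a blend of GCP and Workspace growth, weighted by each business's share of prior-year revenue. The sketch below backs out the GCP growth rate implied by a given mix. Alphabet does not disclose the GCP/Workspace split or Workspace's growth rate, so every scenario value here is a hypothetical assumption used purely for illustration; only the 35% blended figure comes from the earnings call.

```python
def implied_gcp_growth(blended_growth, gcp_share, workspace_growth):
    """Solve blended = share * gcp + (1 - share) * workspace for GCP growth.

    gcp_share is GCP's share of the segment's prior-year revenue.
    """
    if not 0 < gcp_share <= 1:
        raise ValueError("gcp_share must be in (0, 1]")
    return (blended_growth - (1 - gcp_share) * workspace_growth) / gcp_share


BLENDED_GROWTH = 0.35  # Google Cloud segment growth, per the Q3 2024 call

# HYPOTHETICAL scenarios (not disclosed by Alphabet):
# (GCP share of prior-year segment revenue, Workspace growth rate)
scenarios = [(0.70, 0.20), (0.80, 0.25), (0.75, 0.30)]

for share, workspace in scenarios:
    gcp = implied_gcp_growth(BLENDED_GROWTH, share, workspace)
    print(f"GCP share {share:.0%}, Workspace growth {workspace:.0%} "
          f"-> implied GCP growth {gcp:.1%}")
```

Whenever Workspace grows more slowly than the blended rate, the implied GCP growth rate lands above 35%, which is all the CFO's remark lets us conclude.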

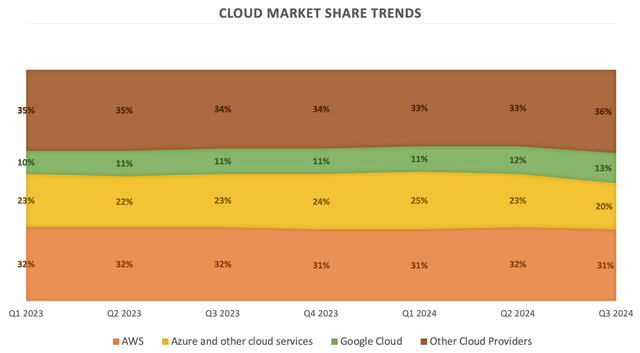

As a result, Google Cloud once again gained market share last quarter, now standing at 13%. Google Cloud’s market share has grown by 2 percentage points so far in 2024. Meanwhile, AWS’s market share continues to hover in the 31-32% range, while Microsoft Azure’s market share gains are showing signs of reversal.

Nexus Research, data compiled from Synergy Research Group

It is important to note here that in Q3 2024, Microsoft adjusted the composition of its Azure revenue by taking out SaaS-based revenues. This is why Azure saw such a notable market share drop from 23% to 20% last quarter.

While statistics from Synergy Research Group are often cited by analysts covering the cloud market, it is essential to keep in mind that these figures are just estimates after all. Given the discrepancies in how each tech company reports cloud revenue, it can be difficult to accurately determine each hyperscaler’s exact market share.

That being said, Google Cloud is undoubtedly making significant progress in catching up to its two largest rivals, thanks to its AI prowess. While GCP may still be behind in market share, it holds a remarkable lead when it comes to offering custom silicon that is specifically designed for processing AI workloads, namely its Tensor Processing Units (TPUs).

I just spent some time with the teams on the road map ahead. I couldn’t be more excited at the forward-looking road map, but all of it allows us to both plan ahead in the future and really drive an optimized architecture for it. And I think because of all this, both we can have best-in-class efficiency, not just for internal at Google, but what we can provide through cloud, and that’s reflected in the growth we saw in our AI infrastructure and GenAI services on top of it. So I’m pretty excited about how we are set up.

– CEO Sundar Pichai, Alphabet Q3 2024 earnings call.

Google’s leadership role in the AI innovation race and its first-party workloads (i.e., Gemini models powering first-party services like AI Overviews and Google Maps, as well as advancing Performance Max capabilities for advertisers) allow the company to design its TPUs and plan its technology stack with foresight about how this AI era will evolve. This builds cost and performance advantages into the stack, starting all the way down at the silicon.

As a result, a growing number of enterprises are choosing Google’s TPUs to power their own AI models, including Apple Inc. (AAPL), Salesforce, Inc. (CRM) and Lightricks.

We’ve been leveraging Google Cloud TPU v5p for pre-training Salesforce’s foundational models that will serve as the core engine for specialized production use cases, and we’re seeing considerable improvements in our training speed. In fact, Cloud TPU v5p compute outperforms the previous generation TPU v4 by as much as 2X. We also love how seamless and easy the transition has been from Cloud TPU v4 to v5p using JAX. We’re excited to take these speed gains even further by leveraging the native support for INT8 precision format via the Accurate Quantized Training (AQT) library to optimize our models.

– Erik Nijkamp, Senior Research Scientist at Salesforce, on working with Google Cloud (emphasis added).

So not only has Google Cloud successfully induced a list of noteworthy tech companies to opt for its custom TPU-powered computing instances, but it has also been making progress in locking in customers from one generation to the next by continuously easing the transition process.

Meanwhile, chief rival Microsoft Azure, which had been perceived to be leading this AI race during the early innings, is still struggling to induce cloud customers to utilize its custom chips over Nvidia’s GPUs, as discussed in my recent Microsoft article:

one year after the introduction of its own Maia GPUs, Microsoft still doesn’t have a customer deployment list to boast about, while Amazon and Google’s chips already benefit from a virtuous network effect that continues to attract more and more enterprises

Furthermore, on the last earnings call, Google’s executives explained how persistent performance advancements at the custom silicon layer are also resulting in greater cost efficiencies for processing its in-house Large Language Models, enabling Google Cloud to pass on these cost benefits to enterprise customers.

On the TPUs, if you look at the Flash pricing we have been able to deliver externally, I think – and how much more attractive it is compared to other models of that capability, I think probably that gives a good sense of the efficiencies we can generate from our architecture. And so – and we are doing the same that for internal use as well. The models for search, while they keep going up in capability, we’ve been able to really optimize them for the underlying architecture, and that’s where we are seeing a lot of efficiencies as well.

– CEO Sundar Pichai, Alphabet Q3 2024 earnings call.

For context, “Flash” refers to Google’s Gemini 1.5 Flash model that was introduced earlier this year.

So the company’s TPU-driven cost efficiencies are already paying off in the form of more competitive pricing strategies for its Gemini models, enabling it to attract more customers to Google Cloud and take market share from AWS and Azure in 2024.

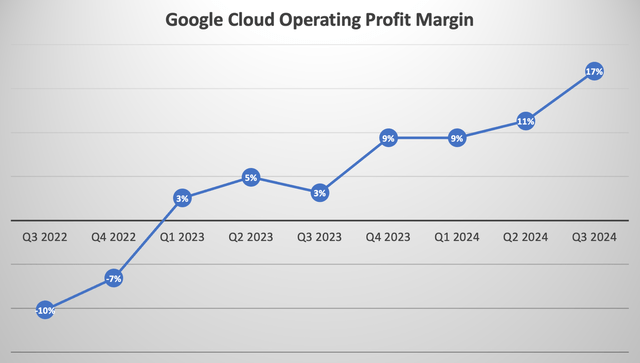

In fact, Google’s custom chip advancements are not only enabling competitive pricing strategies, but are also yielding internal cost efficiencies that are conducive to profit margin expansion.

Google Cloud’s operating income increased to $1.9 billion and its operating margin increased to 17%. The operating margin expansion was driven by strong revenue performance across cloud AI products, core GCP, and Workspace, as well as ongoing efficiency initiatives.

– CFO Anat Ashkenazi, Alphabet Q3 2024 earnings call.

Nexus Research, data compiled from company filings

Now the extent to which Google Cloud is passing on the TPU-driven cost efficiencies to customers relative to how much is being absorbed internally remains undisclosed. Regardless, the tech giant has successfully been able to accelerate top-line revenue, seize more market share, and expand profit margins all at the same time, which is absolutely remarkable.

Moreover, keep in mind that the company’s custom chip advantage isn’t the only cost-efficiency initiative driving profitability. A previous article from earlier this year offered a simplified deep-dive into another crucial technique Google has deployed to drive down the cost of running AI models, called ‘compute-optimal scaling’. So while the bears may have been caught off-guard by the jump in Google Cloud’s profit margin from 11% to 17% last quarter, it should not be surprising for investors who have been taking the time to comprehensively understand the incredible AI innovation progress the company has been making beneath the surface.
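As a quick sanity check on the margin figures quoted above, the sketch below recomputes the operating margin from the rounded revenue and operating income numbers given on the earnings call, and separates the change in percentage points from the relative change. The inputs are the rounded figures from this article, so the results are approximate; the variable names are illustrative only.

```python
def operating_margin(operating_income, revenue):
    """Operating margin as a fraction of revenue."""
    return operating_income / revenue


# Rounded Q3 2024 figures quoted from the earnings call (in $ billions)
revenue_bn = 11.4
operating_income_bn = 1.9

margin = operating_margin(operating_income_bn, revenue_bn)
print(f"Operating margin from rounded figures: {margin:.1%}")

# Margins cited in the article for the prior and latest quarter
prior_margin = 0.11
current_margin = 0.17

# Absolute change, expressed in percentage points
point_change = (current_margin - prior_margin) * 100
# Relative change versus the prior-quarter margin
relative_change = (current_margin - prior_margin) / prior_margin

print(f"Change in margin: {point_change:.0f} percentage points")
print(f"Relative increase in margin: {relative_change:.0%}")
```

The distinction matters when reading margin commentary: a move from 11% to 17% is a gain of about six percentage points, but a much larger increase relative to the starting margin.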

Risks to Google Cloud’s growth prospects

Google Cloud certainly holds a wide lead over its rivals in the custom chips space, but AWS has also been making noteworthy progress on this front, increasingly striking deals with AI-centric companies like Anthropic and Databricks to induce greater utilization of its custom silicon.

As customers approach higher scale in their implementations, they realize quickly that AI can get costly. It’s why we’ve invested in our own custom silicon in Trainium for training and Inferentia for inference. The second version of Trainium, Trainium2 is starting to ramp up in the next few weeks and will be very compelling for customers on price performance. We’re seeing significant interest in these chips, and we’ve gone back to our manufacturing partners multiple times to produce much more than we’d originally planned.

– CEO Andy Jassy, Q3 2024 Amazon earnings call (emphasis added).

The fact that Amazon is having to procure more and more supply of its in-house chips in response to customer demand reflects the impressive strides that AWS is making in the AI race, fighting back against Google Cloud’s advancements and market share gains.

And then we have Microsoft Azure, which is way behind its two main competitors on the custom silicon front, and has been conceding market share this year.

Nonetheless, despite being notably larger than Google Cloud, Azure has still been delivering 30%+ growth rates over the past several quarters, as it is ahead when it comes to generative AI-powered coding assistants.

Microsoft GitHub Copilot was the overwhelming choice used for AI-based auto-programming tools for developers at 65%.

– Seeking Alpha News on a UBS report surveying 130 companies.

Expediting developers’ workloads by simplifying the coding process has been key to encouraging enterprises to migrate to the cloud platforms in this AI revolution, and Azure has been leading on this front.

The investment case for Google stock

GOOG currently trades at around 22x forward earnings (non-GAAP), well below its 5-year average of 25-26x. However, the Forward Price-Earnings-Growth (Forward PEG) multiple is a more thorough valuation metric, as it adjusts the Forward P/E ratio for projected earnings growth.

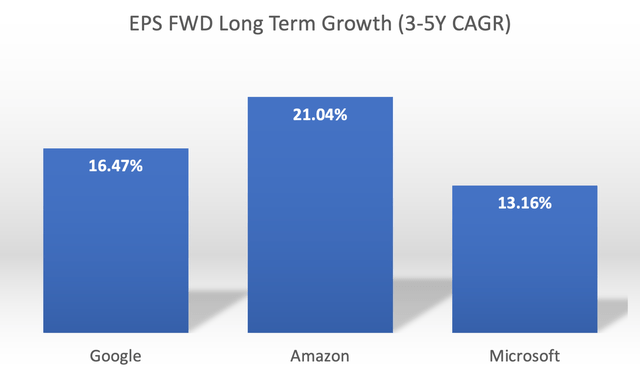

Nexus Research, data compiled from Seeking Alpha

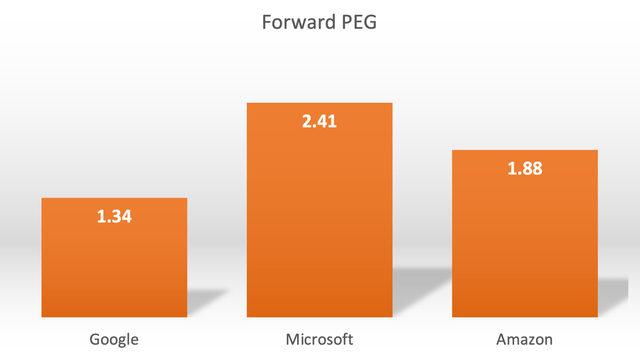

Out of the top three cloud providers, AMZN has the highest expected EPS growth rate, MSFT has the lowest, and GOOG sits in between. Adjusting each stock’s Forward P/E multiple for its anticipated EPS growth rate gives us the following Forward PEG ratios.

Nexus Research, data compiled from Seeking Alpha

Out of the hyperscalers, GOOG is the cheapest at a Forward PEG ratio of 1.34x. However, it is important to remember that these stocks also trade on the growth prospects of their other business units.
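For readers who want to reproduce the metric, the Forward PEG ratio is conventionally the Forward P/E divided by the expected annual EPS growth rate expressed in percent. The sketch below applies that standard definition to the two GOOG figures stated in the text (roughly 22x Forward P/E and a 1.34x Forward PEG) and backs out the growth rate they imply. That implied growth rate is a derived approximation from rounded inputs, not a figure quoted from the source data.

```python
def forward_peg(forward_pe, eps_growth_pct):
    """Forward PEG = Forward P/E divided by expected EPS growth (in percent)."""
    if eps_growth_pct <= 0:
        raise ValueError("PEG is only meaningful for positive expected growth")
    return forward_pe / eps_growth_pct


def implied_growth_pct(forward_pe, peg):
    """Invert the PEG formula to recover the EPS growth rate (in percent)."""
    if peg <= 0:
        raise ValueError("peg must be positive")
    return forward_pe / peg


# Figures stated in the article (both rounded)
goog_forward_pe = 22.0
goog_forward_peg = 1.34

# Derived, approximate: the EPS growth rate consistent with those two figures
growth = implied_growth_pct(goog_forward_pe, goog_forward_peg)
print(f"Implied expected EPS growth: {growth:.1f}% per year")

# Round trip: plugging the implied growth back in recovers the PEG
print(f"Forward PEG check: {forward_peg(goog_forward_pe, growth):.2f}x")
```

A lower PEG means investors are paying less per unit of expected earnings growth, which is the sense in which GOOG screens as the cheapest of the three.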

GOOG is trading at such a discounted valuation because the market doubts whether Alphabet can maintain its Search engine market share amid intensifying competition from rising AI start-ups like OpenAI and Perplexity, while also facing regulatory scrutiny from the Department of Justice targeting Google’s practices to sustain market dominance.

While there are strong reasons to stay bullish on Google Cloud, there are also plenty of reasons to be cautious about its core Search advertising business. Therefore, despite the stock trading at a relatively cheap valuation, GOOG remains a ‘hold’.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
