Summary:

- AI is changing the world, and whoever dominates big data is likely to dominate AI and thus the future of everything.

- Meta Platforms, Inc. and Google are the data kings of the world, with 6 billion and 18 billion accounts, respectively.

- One of these companies has brilliant AI plans that have boosted its consensus growth outlook to 17% to 18%. The other is expected to grow at a modest 11% to 12%.


This article was published on Dividend Kings on Wed, June 14th.

—————————————————————————————

This is part 3 of a six-part series on investing in AI, the future of everything.

- 11 Billion Reasons To Buy Nvidia, And 2.2 Trillion Reasons To Sell

- 1.3 Trillion Reasons Microsoft Is A Must-Own World-Beater AI Dividend Blue Chip

————————————————————————————-

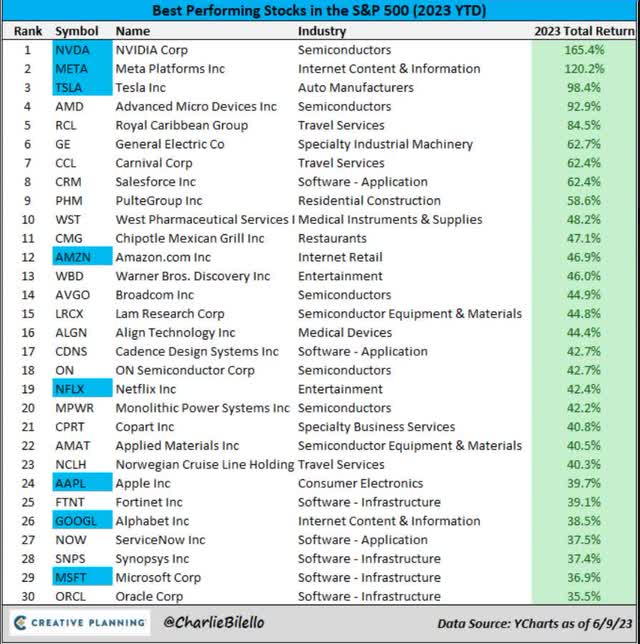

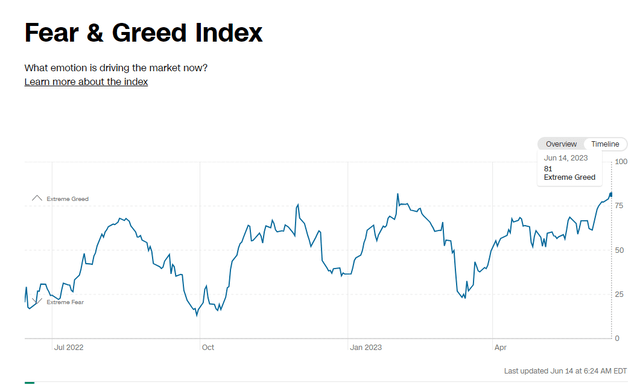

Despite mounting evidence that between 25% and 40% of America is already in recession, and the rest will soon follow, Wall Street doesn’t seem to care.

The largely artificial intelligence (AI)-driven tech rally has investors focusing purely on a glorious future and ignoring the economic freight train that is about to slam into earnings.

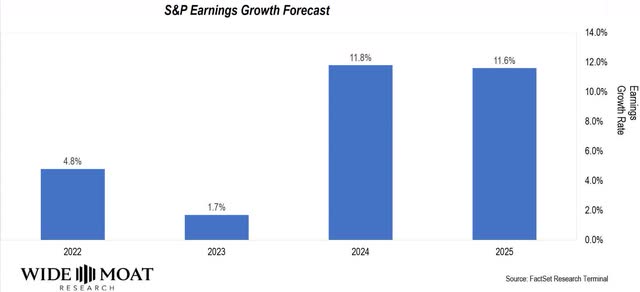

This is what analysts currently expect for S&P 500 earnings in the coming years.

That implies a big-tech-led earnings boom, with the first year of positive earnings growth during a recession since WWII.

It’s the triumph of hope over experience, mounting evidence, and just common sense.

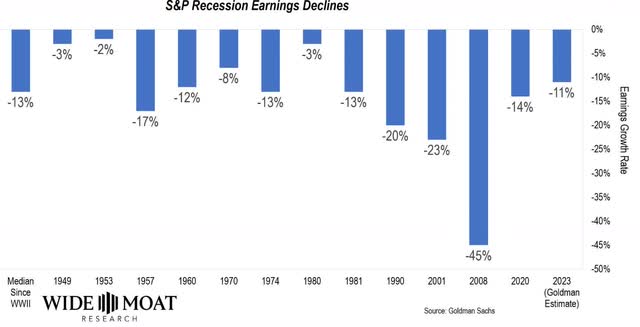

The data says a 10% to 20% EPS decline in this recession is likely, which means the broader market is cruising for one heck of a bruising.

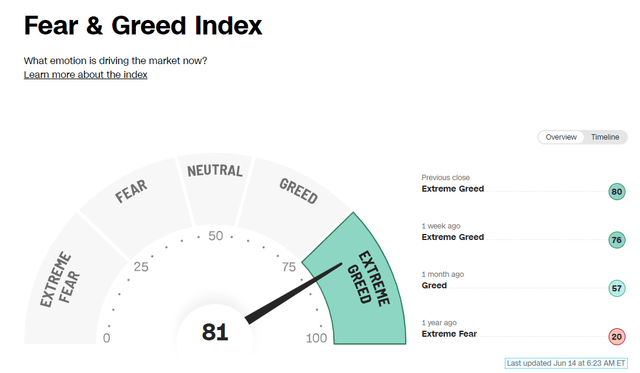

Investors SAY they are bearish, but they most assuredly are not acting like it.

The S&P and Nasdaq aren’t just pricing in a soft landing; they are pricing in above-average growth in 2024 and 2025.

Here is what economists expect for full-year GDP growth:

- 2023: 1.1% GDP growth

- 2024: 0.9% GDP growth

- 2025: 2.0% GDP growth

S&P Bear Market Bottom Scenarios

| Earnings Decline | S&P Trough Earnings | S&P Level At Historical Trough P/E Of 14 (13 to 15 Range) | Decline From Current Level | Peak Decline From Record Highs |
| --- | --- | --- | --- | --- |
| 0% (blue-chip consensus, mildest recession in history) | 232 | 3243 | 25.8% | -32.7% |
| 5% (blue-chip consensus, mild recession) | 220 | 3080 | 29.5% | -36.1% |
| 10% | 208 | 2918 | 33.2% | -39.4% |
| 13% | 202 | 2821 | 35.4% | -41.5% |
| 15% | 197 | 2756 | 36.9% | -42.8% |
| 20% | 185 | 2594 | 40.6% | -46.2% |

(Source: FactSet, Bloomberg.)
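The arithmetic behind these bear-market bottom scenarios is simple: trough index level = trough earnings × trough P/E, and each decline compares that level with a reference point. The sketch below reproduces it under stated assumptions: the current S&P 500 level (about 4,370) and the record high (about 4,818) are back-solved from the table's own percentages, not stated in the source, and because the table's earnings figures are rounded, the computed levels can differ from the table by a few points.

```python
# Sketch: reproduce the S&P 500 bear-market bottom scenarios.
# CURRENT_LEVEL and RECORD_HIGH are assumptions back-solved from the
# table's percentages; the article does not state them directly.
CURRENT_LEVEL = 4370.0
RECORD_HIGH = 4818.0
TROUGH_PE = 14.0  # historical trough P/E used in the table

# Assumed EPS decline (%) -> trough earnings per share, from the table
SCENARIOS = {0: 232, 5: 220, 10: 208, 13: 202, 15: 197, 20: 185}


def trough_level(eps: float, pe: float = TROUGH_PE) -> float:
    """Index level if earnings bottom at `eps` and trade at a `pe` multiple."""
    return eps * pe


def pct_decline(level: float, reference: float) -> float:
    """Percentage decline from `reference` down to `level` (positive = decline)."""
    return (1 - level / reference) * 100


if __name__ == "__main__":
    for cut, eps in SCENARIOS.items():
        level = trough_level(eps)
        print(
            f"{cut:>2}% EPS decline: trough {level:,.0f}, "
            f"{pct_decline(level, CURRENT_LEVEL):.1f}% below current, "
            f"{pct_decline(level, RECORD_HIGH):.1f}% below record high"
        )
```

Swapping in a trough P/E of 13 or 15 shows how sensitive the bottom is to the multiple, not just to earnings.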

But I’m not here to warn you that dark times lie ahead in the second half of 2023.

What happens this year will have little impact over the coming years and decades.

That’s because the AI hype train isn’t complete nonsense, even though many stocks are trading as if AI were the second coming.


Let me show you why AI truly is the future of technology, the economy, and money itself.

And then I’ll show you why, when it comes to the AI efforts of Meta Platforms, Inc. (NASDAQ:META) and Alphabet Inc. (NASDAQ:GOOG, NASDAQ:GOOGL), aka Google, one of these tech titans owns the future.

Artificial Intelligence Will Change The World, The Only Question Is How Much

Above is my in-depth introduction to the promise and perils of AI, which could be the next industrial revolution or the destruction of society as we know it.

But here is a very brief summary of why I remain cautiously optimistic about the future of technology, the economy, and human civilization.

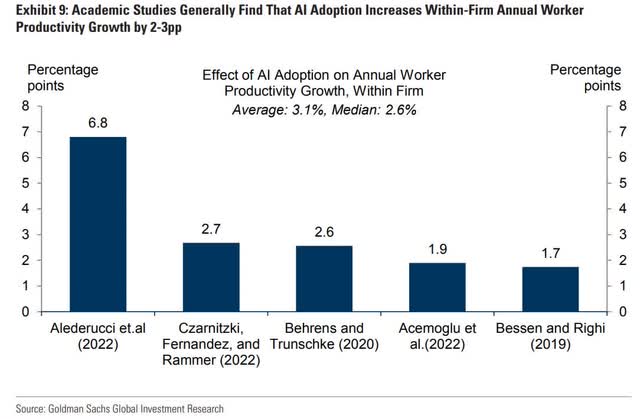

Studies show that AI could boost long-term productivity, wage growth, and economic growth by 2% to 3%.

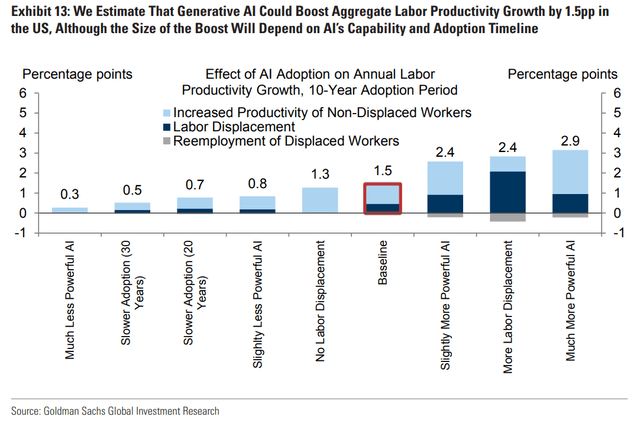

Goldman’s base case is that, over the long term, AI will boost productivity growth by 1.5% and single-handedly solve 94% of America’s deficit problem, with no other changes to spending or tax policies.

The Likely Coming Golden Age For Stocks

Worst-case scenario: Weak AI drives “just” a 0.3% productivity boost, resulting in 0.5% faster EPS growth and 8.7% long-term stock returns.

- Deutsche Bank has the lowest long-term S&P EPS growth forecast at 6.5%.

Base-case scenario: 1.5% boost to productivity, driving 2.7% faster EPS growth and 12.9% long-term stock returns.

Best-case scenario: 2.9% productivity boost driving 11.7% faster EPS growth and 21.9% long-term stock returns.
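One reading of these three scenarios that reproduces all three return figures is simple additive arithmetic: long-term return ≈ dividend yield + baseline EPS growth + AI-driven EPS boost. In the sketch below, the 1.7% yield and 8.5% baseline growth are the S&P 500 figures from the consensus return table in this article, and the worst case appears to start from Deutsche Bank's 6.5% forecast instead. That decomposition is my assumption, not a method the source spells out.

```python
# Sketch: long-term return ~= dividend yield + baseline EPS growth + AI boost.
# This additive decomposition is an assumption that happens to reproduce
# the article's scenario numbers; it is not the author's stated method.
SP500_YIELD = 1.7       # % yield (S&P 500 row of the consensus table)
CONSENSUS_GROWTH = 8.5  # % long-term consensus EPS growth for the S&P 500
DEUTSCHE_GROWTH = 6.5   # % lowest long-term forecast (Deutsche Bank)


def expected_return(yield_pct: float, base_growth: float, ai_boost: float) -> float:
    """Additive total-return estimate in percent, rounded to one decimal."""
    return round(yield_pct + base_growth + ai_boost, 1)


scenarios = {
    "worst": expected_return(SP500_YIELD, DEUTSCHE_GROWTH, 0.5),
    "base": expected_return(SP500_YIELD, CONSENSUS_GROWTH, 2.7),
    "best": expected_return(SP500_YIELD, CONSENSUS_GROWTH, 11.7),
}

for name, ret in scenarios.items():
    print(f"{name:>5}-case long-term return: {ret}%")
```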

As my detailed AI report explained, Warren Buffett’s historical unlevered returns were 12.8% over the last 58 years.

According to Goldman’s AI forecast, this technology could help anyone with an index fund literally earn Buffett-like returns.

Pension funds, sovereign wealth funds, and regular retirement accounts all stand to boom for decades in a far richer world, where work is less tedious and life itself potentially far more rewarding.

Yes, done poorly, AI could create a dystopia where the living envy the dead.

But if you have so little faith in humanity that you think we can’t figure out our challenging problems, why are you on Seeking Alpha, reading about stocks?

Why aren’t you hiding in a bunker surrounded by guns, canned food, and gold? You know, the Robert Kiyosaki “retirement” plan. 😉

So let’s set aside the Skynet Terminator nightmares that decades of movies have taught us to imagine.

And let’s talk about what’s really happening with the AI efforts of two of the world’s most important companies.

Meta And Alphabet: Data Giants That Are Fighting For The Future Of Everything

Artificial intelligence needs lots of data to be trained on.

The companies that dominate big data have a major advantage in AI because of data network effects.

Whoever attracts the most search users (Google) has the most data to perfect its search functionality.

Its machine-learning algorithms then keep improving faster than rivals’ because they are trained on more data.

In the past, some products, such as Windows PCs, won on price, even though most experts agreed that Macs were better.

But with data network effects, the winner is the best product.

GOOG is the best search because everyone uses it. And they use it because it’s the best search.

The wide moat here is incredible. And the same is true with social media thus far.
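A toy model can make the "best because everyone uses it, used because it's the best" loop concrete. In this hypothetical sketch, each period users split between two rivals in proportion to `share ** strength`; a `strength` above 1 stands in for a data network effect (more users, more data, better product). Both the parameter and the update rule are illustrative assumptions, not a model from the article or any company.

```python
# Toy model of a data network effect (hypothetical, for illustration only).
# Each period, users pick a product with probability proportional to
# share ** strength. strength > 1 means more data makes the product
# disproportionately better, so the leader pulls further ahead.


def simulate(shares: list[float], periods: int, strength: float) -> list[float]:
    for _ in range(periods):
        weights = [s ** strength for s in shares]
        total = sum(weights)
        shares = [w / total for w in weights]
    return shares


if __name__ == "__main__":
    start = [0.6, 0.4]  # the leader starts with only a modest edge
    print("no network effect:", simulate(start, 10, 1.0))
    print("network effect:   ", [round(s, 3) for s in simulate(start, 10, 1.5)])
```

With `strength = 1.0` the shares never move; with `1.5` a 60/40 split snowballs toward winner-take-most, which is the moat described above.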

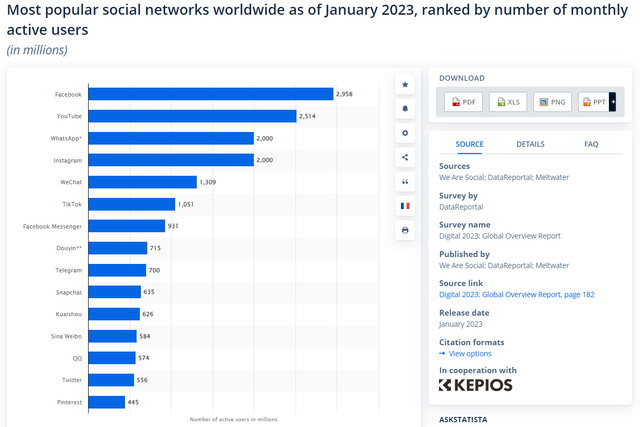

For all the complaints about social media, and there are many valid ones, the fact is that Meta has four of the seven most popular social media platforms on Earth.

YouTube is #2 at 2.5 billion users.

In some countries, especially in emerging economies, Facebook isn’t just the #1 Internet site; it’s practically the only way most people have to access the Internet.

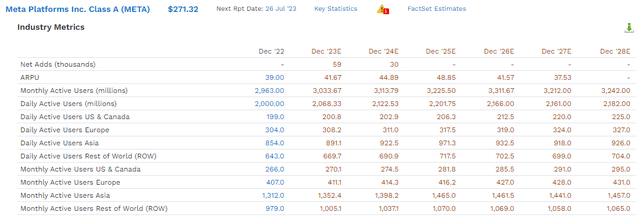

Eventually, Meta is going to run out of new user growth, and currently, analysts think that will be in 2026 when peak users hit 3.3 billion.

At that point, Meta’s only way to grow will be to improve its technology to increase user engagement and to improve average revenue per user, or ARPU, through more efficient and effective advertising.

The bad news for Meta is that at the moment, ARPU is expected to peak in 2025.

Meta CEO Mark Zuckerberg’s $160 billion bet on the Metaverse was about changing his company’s growth curves by creating a new internet that Meta would dominate.

The good news is that a lot more people than you might think are interested in the metaverse. The bad news for Meta is that they aren’t interested in its metaverse.

“There are 400 million monthly active users dwelling on the Metaverse. The largest chunk comes from Roblox (230 million), followed by Minecraft (165 million) and Fortnite (85 million).” – influencemarkethub.

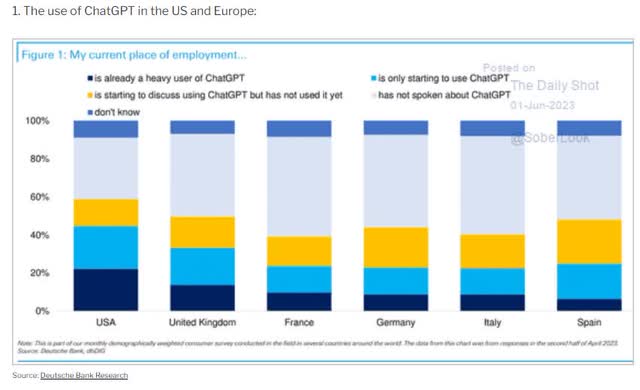

But do you know what people are interested in? Chatbots powered by AI.

ChatGPT went from no users to almost 2 billion in five months. It’s potentially on track to hit 3.4 billion users by the end of the year.

Or, to put it another way, analysts think that after 24 years, Meta’s platforms will peak at 3.3 billion users.

After one year, ChatGPT might achieve a larger user base.

Some surveys estimate that 40% of Americans are using ChatGPT, with similar levels of popularity in other developed countries.

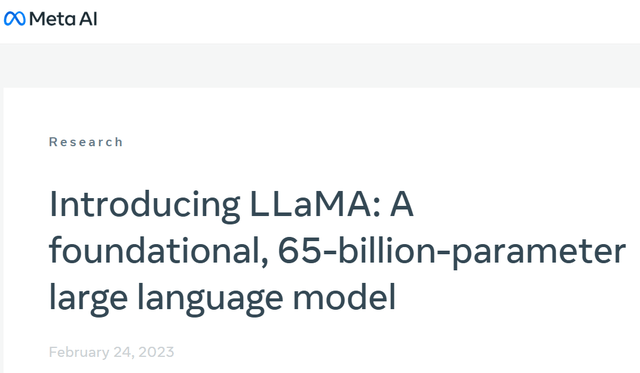

So what is Meta’s answer to the rise of AI?

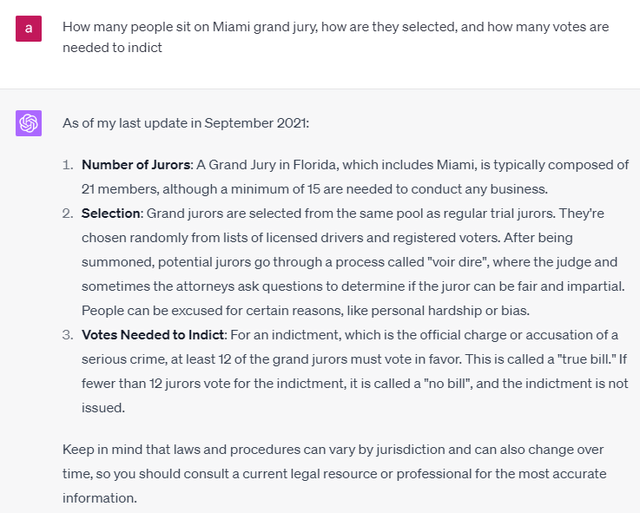

Meta is rolling out its own AI large language model, called LLaMA, to go up against Google’s Bard and Bing’s ChatGPT 4-powered offering.

“Like other large language models, LLaMA takes a sequence of words as input and predicts the next word to generate text recursively. To train our model, we chose a text from the 20 languages with the most speakers, focusing on those with Latin and Cyrillic alphabets.” – Meta.
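"Predicts the next word to generate text recursively" can be illustrated with a tiny bigram model that counts which word follows which, then feeds each prediction back in as the next input. This is a deliberately simplified stand-in: real LLMs like LLaMA learn next-token probabilities with neural networks over subword tokens, not word counts, and the training sentence here is made up for the example.

```python
from collections import Counter, defaultdict

# Toy next-word predictor: count which word follows which (a bigram model).
# Real LLMs learn these probabilities with billions of parameters instead.
corpus = "the cat sat on the mat . the cat ate the fish .".split()

follows: dict[str, Counter] = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    follows[current][nxt] += 1


def predict_next(word: str) -> str:
    """Most frequent word seen after `word` (ties go to the first seen)."""
    counts = follows.get(word)
    if not counts:
        return "."  # unseen word: end the sentence
    return counts.most_common(1)[0][0]


def generate(start: str, max_words: int = 6) -> list[str]:
    """Generate text recursively: each prediction becomes the next input."""
    words = [start]
    while len(words) < max_words and words[-1] != ".":
        words.append(predict_next(words[-1]))
    return words


print(" ".join(generate("the")))
```

Because this toy only looks one word back, it quickly loops; models with far more context and parameters avoid that, which is part of why parameter counts matter.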

LLaMA sounds impressive, but how does it stack up to the competition?

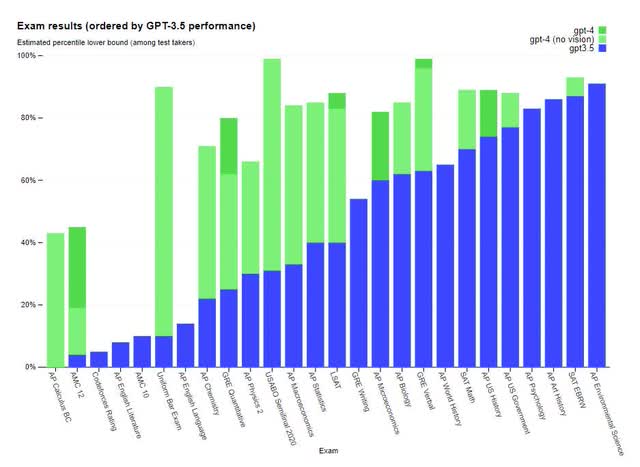

(Source: Google.)

What are parameters and tokens? Let’s let ChatGPT 4 explain in terms a 7th grader can understand.

“Parameters: Imagine you’re trying to bake a cake. The recipe you use is kind of like the AI’s parameters. It’s a set of instructions that tells the AI how to do its job. These instructions might include things like how much attention to pay to a word based on its position in a sentence or how to translate a sentence from English to Spanish. Just like changing the amounts of flour or sugar changes how your cake comes out, changing the parameters changes how the AI behaves.

Tokens: Let’s say you’re trying to put together a jigsaw puzzle. Each piece of the puzzle can be thought of as a token. In the context of AI, a token is usually a word, a part of a word, or a single character. So if the AI is reading a sentence, it would break it down into tokens (like breaking a puzzle down into pieces) before trying to understand it. For example, the sentence “ChatGPT is cool.” could be broken down into four tokens: [“ChatGPT”, “is”, “cool”, “.”].

In summary, parameters are the rules that guide an AI’s behavior (like a recipe for a cake), and tokens are the pieces an AI works with to understand or generate text (like puzzle pieces).” – ChatGPT 4.
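The puzzle-piece idea can be sketched with a toy word-level tokenizer: a regular expression splits text into words and punctuation marks, and each distinct token is mapped to an integer ID, since models work with numbers rather than text. This is an illustrative assumption: production LLM tokenizers use learned subword vocabularies (such as byte-pair encoding), so a real model may split a word like "ChatGPT" into several pieces.

```python
import re

# Toy tokenizer: each run of word characters is one token, and each
# punctuation mark is its own token. Real LLM tokenizers use learned
# subword vocabularies instead, so their token counts differ.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def tokenize(text: str) -> list[str]:
    return TOKEN_PATTERN.findall(text)


def to_ids(tokens: list[str]) -> list[int]:
    """Map each distinct token to an integer ID in order of first appearance."""
    vocab: dict[str, int] = {}
    return [vocab.setdefault(tok, len(vocab)) for tok in tokens]


tokens = tokenize("ChatGPT is cool.")
print(tokens)          # the puzzle pieces
print(to_ids(tokens))  # what a model actually consumes: numbers
```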

So, for now, Meta’s AI is behind Google’s and OpenAI’s ChatGPT (OpenAI is 33% owned by Microsoft).

But that’s on paper. Maybe LLaMA is actually more effective when used by real people. Unfortunately, we can’t find out because it’s not open to the public.

“To maintain integrity and prevent misuse, we are releasing our model under a noncommercial license focused on research use cases. Access to the model will be granted on a case-by-case basis to academic researchers; those affiliated with organizations in government, civil society, and academia; and industry research laboratories around the world. People interested in applying for access can find the link to the application in our research paper.

We believe that the entire AI community — academic researchers, civil society, policymakers, and industry — must work together to develop clear guidelines around responsible AI in general and responsible large language models in particular. We look forward to seeing what the community can learn — and eventually, build — using LLaMA.” – Meta.

Meta is only allowing companies and organizations to use this for now.

Essentially, Meta was giving its A.I. technology away as open-source software — computer code that can be freely copied, modified, and reused — providing outsiders with everything they needed to build chatbots of their own quickly.” – NYT.

It did create two chatbots for the public to try.

It also launched two AI chatbots — Galactica and Blenderbot — that weren’t exactly impressive.” – TheVerge.

What was wrong with Meta’s first chatbots?

“When I played with Blenderbot, I definitely saw my share of bizarre AI-generated conspiracy theories, like one about how big government is suppressing the true Bible, plus plenty of horrifying moral claims. (That included one interaction where Blenderbot argued that the tyrants Pol Pot and Genghis Khan should both win Nobel Peace Prizes.)” – Vox (emphasis added).

OK, so Meta’s first attempts at AI-powered chatbots were a hilarious failure. But what about LLaMA?

“This is not some ready-to-talk chatbot but a “raw” AI system that needs a decent amount of technical expertise to get up and running. (A quick aside: LLaMA is also not a single system but four models of differing sizes and computational demands. More on this later.)

Di Ferrante tells The Verge that “anyone familiar with setting up servers and dev environments for complex projects” should be able to get LLaMA operational “given enough time and proper instructions.” (Though it’s worth noting that Di Ferrante is also an experienced machine learning engineer with access to a “machine learning workstation that has 4 24GB GPUs” and so not representative of the broader population.)” – TheVerge.
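A rough back-of-the-envelope calculation shows why that four-GPU workstation matters. The sketch below estimates only the memory needed to hold each LLaMA model's weights, assuming 2 bytes per parameter (16-bit precision) and the nominal 7B, 13B, 33B, and 65B model sizes. It ignores activations and other runtime overhead, so real requirements are higher than these figures.

```python
# Back-of-the-envelope GPU memory for LLaMA weights alone.
# Assumption: 2 bytes per parameter (16-bit precision). Activations and
# other runtime buffers would add more memory on top of this.
BYTES_PER_PARAM = 2
GPU_MEMORY_GB = 24
NUM_GPUS = 4  # the "4 24GB GPUs" workstation quoted above

LLAMA_SIZES_BILLIONS = [7, 13, 33, 65]


def weights_gb(params_billions: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Billions of parameters x bytes each = gigabytes of weights."""
    return params_billions * bytes_per_param


total_vram = GPU_MEMORY_GB * NUM_GPUS
for size in LLAMA_SIZES_BILLIONS:
    need = weights_gb(size)
    verdict = "fits" if need <= total_vram else "does not fit"
    print(f"LLaMA {size}B: ~{need:.0f} GB of weights, {verdict} in {total_vram} GB")
```

Even before overhead, the largest model's weights alone exceed what a single consumer GPU offers, which is why running LLaMA is not a casual weekend project for most people.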

Here is a taste of what it takes to harness LLaMA into a functional system, in this case one that Stanford researchers call Alpaca.

“Alpaca is a compact AI language model created by a team of computer scientists at Stanford University. It’s built on Meta’s LLaMA 7B model, which boasts 7 billion parameters and has been trained using a vast amount of text from the web.

To fine-tune Alpaca, 52,000 instruction-following demonstrations were generated with OpenAI’s text-DaVinci-003 model, a versatile model capable of executing various tasks based on natural language instructions. Alpaca is better at following instructions and producing text for a range of purposes, including writing summaries, stories, and jokes.

With a training cost under $600, Alpaca is designed to be both cost-effective and easily replicable. Highly impressively, it is worth mentioning that Alpaca can be run on even just a laptop. We are training a model similar to Alpaca using the methods that Stanford did, but with our own twist.” – Storagereview.
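To make "instruction-following demonstrations" concrete: each demonstration pairs an instruction with a desired response, and fine-tuning trains the model on both joined into a single text. The template, class, and example record below are hypothetical illustrations loosely modeled on that instruction/response layout; they are not presented as the exact format or code Stanford used.

```python
from dataclasses import dataclass

# Hypothetical prompt template for instruction tuning (illustrative only).
TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)


@dataclass
class Demonstration:
    instruction: str
    response: str


def to_training_text(demo: Demonstration) -> tuple[str, str]:
    """Return (prompt, full_text); the model learns to produce what follows the prompt."""
    prompt = TEMPLATE.format(instruction=demo.instruction)
    return prompt, prompt + demo.response


# One made-up demonstration; Alpaca's fine-tuning set had 52,000 of these.
demo = Demonstration(
    instruction="Summarize why data matters for AI in one sentence.",
    response="More and better data lets models learn more accurate patterns.",
)
prompt, full_text = to_training_text(demo)
print(full_text)
```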

So if you’re a techie with an interest in computer programming and engineering, LLaMA is potentially interesting to you.

LLaMA is much more adaptable for developers. This is potentially very disruptive to the current leaders in LLM, such as OpenAI and Google. Indeed, as revealed by a leaked internal Google memo this week, the big players are already concerned:

“Being able to personalize a language model in a few hours on consumer hardware is a big deal, particularly for aspirations that involve incorporating new and diverse knowledge in near real-time.” – The New Stack.

LLaMA may be a threat to Meta’s rivals, but can it help Meta make money?

Meta isn’t planning on monetizing LLaMA. So how does it plan to profit from what might be the most life-changing technology humanity has ever created?

“Meta now has a team dedicated to building tools powered by artificial intelligence, CEO Mark Zuckerberg announced in a post on Monday. This could eventually include “AI personas” designed to help people in “various ways” in addition to new AI tools across its apps and services.

More specifically, Zuckerberg says the company is working on AI “experiences” with text, such as chat with Messenger and WhatsApp, experiences with images for things like “creative Instagram filters and ad formats,” as well as “video and multi-modal experiences.” According to Axios, the new AI team will be led by Ahmad Al-Dahle, Meta’s vice president of AI and machine learning, who previously worked on Apple’s special product group.” – TheVerge.

Meta plans to plug LLaMA into its various products to improve them, much like Microsoft is plugging ChatGPT 4 into Bing. How has ChatGPT-powered Bing done for MSFT in its war on Google? It has been a complete failure.

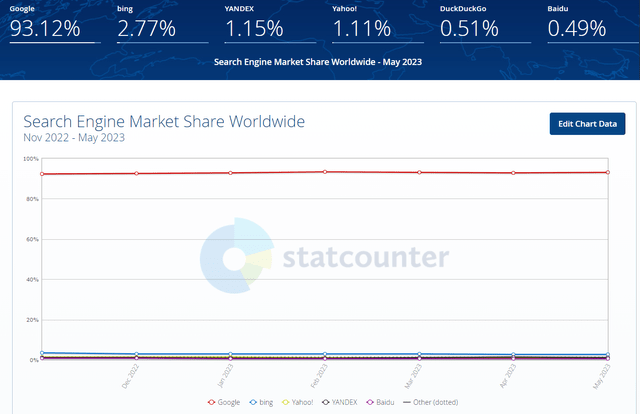

Since ChatGPT was launched in November, Google’s market share in global search went from 92% to 93%.

ChatGPT has thus far not made a dent in Google’s dominance.

Ok, but what about Meta’s potential to boost its growth by harnessing AI through better engagement and advertising?

Meta’s AI Efforts So Far Not Boosting Growth Outlook

Meta’s days of hyper-growth are over, according to the analyst consensus.

- The growth forecast range is from 3% to 36%.

How does this compare to the other tech giants that are also investing billions in AI?

Consensus AI-Tech Return Forecasts

| Investment Strategy | Yield | LT Consensus Growth | LT Consensus Total Return Potential | Long-Term Risk-Adjusted Expected Return |
| --- | --- | --- | --- | --- |
| Amazon | 0.0% | 43.2% | 43.2% | 30.2% |
| Alphabet | 0.0% | 17.3% | 17.3% | 12.1% |
| Microsoft | 0.8% | 12.2% | 13.0% | 9.1% |
| Nasdaq | 0.8% | 11.2% | 12.0% | 8.4% |
| Meta | 0.0% | 11.5% | 11.5% | 8.1% |
| S&P 500 | 1.7% | 8.5% | 10.2% | 7.1% |

(Source: FactSet, Morningstar.)
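The table's columns appear to follow two simple rules: total return potential = yield + long-term consensus growth, and the risk-adjusted expected return is about 70% of total return. The 0.7 factor is my inference from the numbers (for example, 17.3% × 0.7 ≈ 12.1%), not a figure the source states, and rounding explains small gaps such as Meta's 11.5% × 0.7 = 8.05%, shown as 8.1%.

```python
# Reproduce the consensus return table. RISK_ADJUSTMENT = 0.7 is inferred
# from the table's numbers, not stated by the source.
RISK_ADJUSTMENT = 0.7

# name: (yield %, long-term consensus growth %)
TABLE = {
    "Amazon": (0.0, 43.2),
    "Alphabet": (0.0, 17.3),
    "Microsoft": (0.8, 12.2),
    "Nasdaq": (0.8, 11.2),
    "Meta": (0.0, 11.5),
    "S&P 500": (1.7, 8.5),
}


def total_return(yield_pct: float, growth_pct: float) -> float:
    """Total return potential: yield plus long-term growth, in percent."""
    return yield_pct + growth_pct


def risk_adjusted(total_pct: float, factor: float = RISK_ADJUSTMENT) -> float:
    """Haircut the total return by the inferred risk-adjustment factor."""
    return total_pct * factor


for name, (yld, growth) in TABLE.items():
    tr = total_return(yld, growth)
    print(f"{name:<10} total {tr:5.1f}%  risk-adjusted {risk_adjusted(tr):5.1f}%")
```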

So far, Meta doesn’t appear to have gotten a boost to its growth outlook from AI, while growth estimates have risen 2% for MSFT and 3% for GOOG, and Amazon’s (AMZN) have almost doubled.

What explains this?

Meta Is Lacking One Key Ingredient In The War For AI Dominance

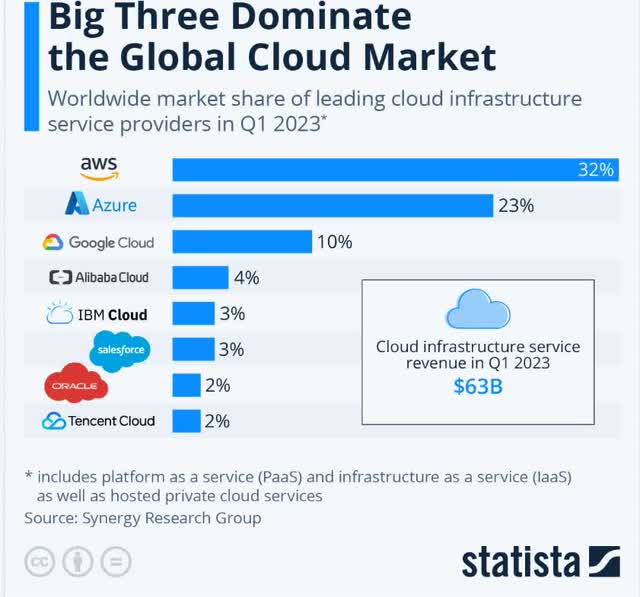

What do Amazon, Google, and Microsoft have that Meta doesn’t?

They aren’t just dominant in productivity software, online retail, or search and advertising; they are the kings of the global cloud.

Think of it like this. How does Meta plan to overcome its major growth challenges, which are a lack of new users and struggles to grow ARPU?

By harnessing AI to try to create a more addictive, TikTok-like experience on its current platforms.

That, in theory, will mean more advertiser interest.

And if LLaMA can also help it make its ads more effective? Then it can charge more for them.

So far, everything Zuckerberg has told us about Meta’s AI plans focuses on its advertising business.

But what about Microsoft, Amazon, and Alphabet?

GOOG and AMZN are the #1 and #3 digital advertisers, so they plan to monetize AI much as Meta does, with better, more effective, and more expensive ads.

- MSFT also has a small ad business through Bing.

But do you know what else the three cloud tech titans can do? Everything!

Meta can use AI to help you sell a product.

AMZN, MSFT, and GOOG can help you outsource your IT needs to their cloud platforms.

And then use AI-powered analytics to help you determine how best to optimize your business.

And with AI chatbots like Bard and ChatGPT (Amazon Lex lets anyone build a chatbot using Amazon’s AI models), you can have the AI even recommend ways to improve productivity, lower costs, and boost profits.

Why Google And Microsoft Have An Edge In This Race

I’ve already shown you how Meta’s AI plans are basically to become a better advertising company and nothing else.

Amazon’s global dominance in the cloud gives it the data edge over Meta because its mountains of data are far more lucrative and relevant to enterprise customers.

In other words, Meta knows when you’re posting a picture of your cat wearing a funny hat. Amazon knows when you’re most likely to buy something. Which is more useful to advertisers?

But of course, Amazon directly sells to customers, which is a revenue stream that Meta lacks.

And the cloud itself is the holy grail for Amazon and Microsoft, and increasingly for Alphabet as well.

But here is where GOOG and MSFT gain an edge over Amazon, despite its incredible optionality.

| Company | Access To Massive Amounts Of Data | Dominant In The Cloud | Public-Facing Chatbot |
| --- | --- | --- | --- |
| Meta | x | | |
| Amazon | x | x | |
| Alphabet | x | x | x |
| Microsoft | x | x | x |

The ultimate killer app for AI is to help improve corporate profitability.

That means AI will need to be connected to the cloud and have access to useful business data, not just cat photos.

But access to data to train machine-learning algorithms to exponentially improve faster than rivals is just the 2nd step to dominating AI and, thus, the future of everything.

Right now, machine learning is limited. We don’t have algorithms writing their own algorithms to improve themselves. So far, when this has been tried, they have all hit a brick wall at some point and collapsed.

That means that AI training has to be done by humans, or at least with the input of humans, the ultimate end users.

And this is where Bard and ChatGPT have the edge over LLaMA and Lex.

Almost 2 billion people are using ChatGPT and each time someone uses it, the program learns and improves.

Bard had an estimated 1 billion potential users as of February.

“As of March 2023, Bard has 30 million monthly users, predominantly from US and UK users.” – Google.

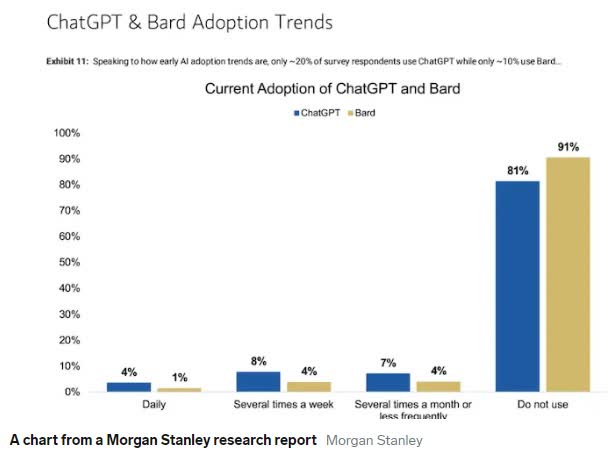

(Source: Morgan Stanley.)

A Morgan Stanley survey found that 91% of 2000 respondents don’t use Bard, and 81% don’t use ChatGPT.

I’m unsure if this is a survey of their clients or an attempt at a truly random sample of society.

But the point is that ChatGPT is being used much more than Bard, giving it a key advantage. That human interaction, the back and forth between prompts, is key to improving any AI system.

But that doesn’t mean that Microsoft and OpenAI will necessarily run away with the race for AI and the future of everything.

Why Alphabet Might Own The Future Of Everything

To understand GOOG’s potential edge over MSFT and OpenAI, we first have to understand how each new generation of ChatGPT is created.

“Creating a new generation of ChatGPT involves a two-step process: pre-training and fine-tuning.

Pre-training: During pre-training, the model learns to predict the next word in a sentence. It does this by reading lots of text from the internet, but it doesn’t know specifics about which documents were in its training set or have access to any specific documents or sources. It also doesn’t know anything about the world after the cutoff of its training data (for GPT-3, that’s September 2021).

Fine-tuning: After pre-training, the model goes through a fine-tuning process. It is trained on a more specific dataset, generated with the help of human reviewers who follow guidelines provided by OpenAI. The reviewers review and rate possible model outputs for a range of example inputs. Over time, the model learns from reviewer feedback to improve its responses.

Regarding the time it takes for each generation, OpenAI has not publicly disclosed the specific time frames for training each version of the GPT models. It’s important to note that the training process is quite computationally intensive and would generally require a significant amount of time, even on highly parallel systems.

Also, it’s not only about time but also about the computational resources, the quality of data for training and fine-tuning, and the research and engineering expertise involved in model development and fine-tuning. All these factors contribute to the progress from one generation of the model to the next.” – ChatGPT 4.
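The fine-tuning step, where reviewers rate candidate outputs, is commonly implemented by training a reward model on pairs of responses. One standard choice, shown here as an assumption rather than a description of OpenAI's specific pipeline, is a pairwise logistic loss: the model is penalized when it scores the reviewer-preferred answer below the rejected one. The reward scores in the example are hypothetical.

```python
import math

# Pairwise preference loss for learning from human rankings:
#   loss = -log(sigmoid(r_preferred - r_rejected))
# Small when the preferred answer already scores higher; large otherwise.


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def preference_loss(r_preferred: float, r_rejected: float) -> float:
    return -math.log(sigmoid(r_preferred - r_rejected))


# Hypothetical reward scores for two candidate answers to one prompt.
print(preference_loss(2.0, -1.0))  # model agrees with the reviewer: small loss
print(preference_loss(-1.0, 2.0))  # model disagrees: large loss
```

Minimizing this loss over many reviewer comparisons nudges the model toward the answers people rated higher, which is the "learns from reviewer feedback" step in practice.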

(Source: Google.)

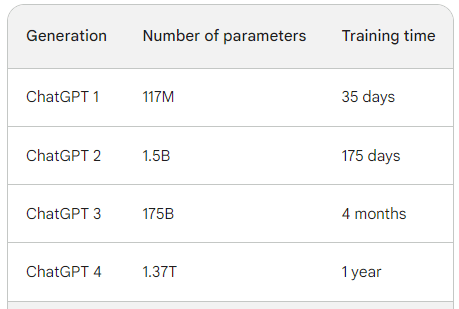

Here is Bard’s estimate of the training time for each generation of ChatGPT.

You can see there are two key limitations to how OpenAI is currently improving ChatGPT.

The first is that there is a limited data set, with no real-time connection to the internet.

“The data used to train me, ChatGPT is based on the GPT-4 architecture, and my training cut-off was in September 2021. This means that while I have information up until then, I may not have the most recent updates or information after September 2021.” – ChatGPT 4.

ChatGPT can’t tell you anything that requires real-time information because, as far as it knows, it’s currently September 2021.

Each new generation of ChatGPT gets much better, both on objective measures like test scores and in reduced risk and hallucinations.

What are AI hallucinations? Here’s ChatGPT 4 to explain it in terms a 7th grader could understand.

“You know when you’re lying in bed at night, and you start to see shapes in the darkness even though there’s nothing there? Your brain is trying to make sense of what it sees, so it “hallucinates” or makes up images that aren’t really there.

AI “hallucination” is sort of like that. An AI tries to make sense of the information it’s given, and sometimes, it might generate something that isn’t actually there or accurate. For example, if you ask an AI to describe what a “zebra unicorn” looks like, it might tell you it’s a zebra with a horn, even though “zebra unicorns” don’t exist in real life. That’s an AI hallucination!

It’s important to remember that AI doesn’t see or dream like humans. When we say an AI “hallucinates,” we mean that it’s creating something based on its programming and training, not that it’s experiencing anything.” – ChatGPT 4.

So ChatGPT 3.5, the free version that took the world by storm, made up 57% of its answers.

ChatGPT 4 is down to 2.3%, while Bard was at 27.3% as of April 2023.

ChatGPT 5 is rumored to be coming in late 2023 or 2024; some have speculated that it could be true AGI.

What is AGI? Here’s ChatGPT 4 to explain at a 7th-grade level.

“AGI stands for Artificial General Intelligence. This is a type of AI, but it’s like the super advanced level. If regular AI is like a calculator that’s really good at math, AGI is like a human brain that’s good at everything.

Right now, our AI is more like a calculator. It’s really good at the tasks it’s trained for, but it can’t handle things it wasn’t specifically trained to do. An AGI, however, would be able to understand, learn, and apply knowledge across a wide variety of tasks, just like a human can.” – ChatGPT 4.

Even employees of OpenAI are skeptical that ChatGPT 5 will achieve AGI. But even if it doesn’t, ChatGPT 5 is likely to be world-changing.

“OpenAI says it spent six months making GPT-4 safer and more accurate. According to the company, GPT-4 is 82% less likely than GPT-3.5 to respond to requests for content that OpenAI does not allow and 60% less likely to make stuff up.” – MIT Review.

Basically, ChatGPT 4 is 82% less likely to give dangerous responses, such as describing a poisonous mushroom as edible.

Imagine if ChatGPT 5 ends up 80% safer than ChatGPT 4 (it won’t give you dangerous answers or tell you to hurt yourself or others) and 60% less likely to make things up than ChatGPT 4, and each later generation repeats that improvement.

| ChatGPT Version | Times More Accurate Than ChatGPT 3.5 | Hallucination Rate | Year Of Release |
| --- | --- | --- | --- |
| 3.5 | NA | 43.20% | 2022 |
| 4.0 | 2.5 | 17.28% | 2023 |
| 5.0 | 6.3 | 6.91% | 2024 |
| 6.0 | 15.6 | 2.76% | 2025 |
| 7.0 | 39.1 | 1.11% | 2026 |
| 8.0 | 97.7 | 0.44% | 2027 |
| 9.0 | 244.1 | 0.18% | 2028 |
| 10.0 | 610.4 | 0.07% | 2029 |
| 11.0 | 1525.9 | 0.03% | 2030 |

(Source: OpenAI, MIT.)
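The projection follows a simple compounding rule. Assuming, in line with the MIT Review's "60% less likely to make stuff up" figure for GPT-4, that every new generation cuts hallucinations by 60%, the hallucination rate is multiplied by 0.4 per generation starting from the table's 43.2% for ChatGPT 3.5, and the accuracy multiple versus 3.5 grows 2.5x per generation. This is a hypothetical extrapolation, not an OpenAI forecast; the sketch only reproduces its arithmetic.

```python
# Reproduce the hypothetical hallucination projection: each generation is
# assumed to hallucinate 60% less than the previous one.
START_RATE = 43.20  # % hallucination rate assumed for ChatGPT 3.5 (from the table)
RETAINED = 0.4      # 1 - 0.60 reduction per generation
START_YEAR = 2022   # release year of ChatGPT 3.5


def projection(generations: int):
    """Yield (version, multiple vs 3.5, hallucination %, year) for each generation."""
    for n in range(1, generations + 1):
        version = 3.0 + n  # 4.0, 5.0, 6.0, ...
        multiple = (1 / RETAINED) ** n  # 2.5 ** n
        rate = START_RATE * RETAINED ** n
        yield version, multiple, rate, START_YEAR + n


for version, multiple, rate, year in projection(8):
    print(f"ChatGPT {version:.1f}: {multiple:7.1f}x vs 3.5, {rate:5.2f}% hallucinations, {year}")
```

The assumption doing all the work is that a 60% improvement repeats every year; if gains shrink with each generation, the later rows would look very different.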

You can see how simply improving the accuracy and safety of each generation could result in a truly ground-breaking economic change in the future.

Think of ChatGPT as the Apple of AI. It’s super smooth, powerful, and easy to use, and a new generation comes out once per year.

But here is where Google has the chance to win the future.

Bard: Behind For Now But Catching Up Fast

In part 2 of this series, I showed how ChatGPT is the current gold standard of chatbots and superior to Bard.

But Google has one massive advantage over ChatGPT and Microsoft.

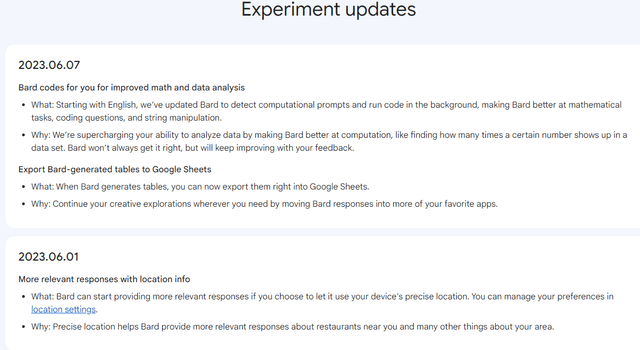

Bard’s algorithm is being updated in real time using real-time data, and Alphabet is adding new functionality on a weekly basis.

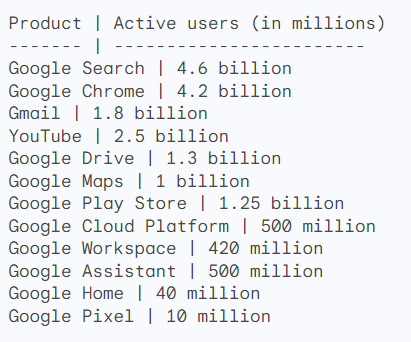

There are over 18 billion overlapping users of Google products in the world.

That’s as if every person on earth used 2.25 of Google’s products, on average.

(Source: Google.)

No company on earth has more data with which to train the most advanced AI than Alphabet.

The Biggest Risk To Google’s Domination Of AI

Bard is an impressive first attempt, but for now it is only about as good as ChatGPT 3.0.

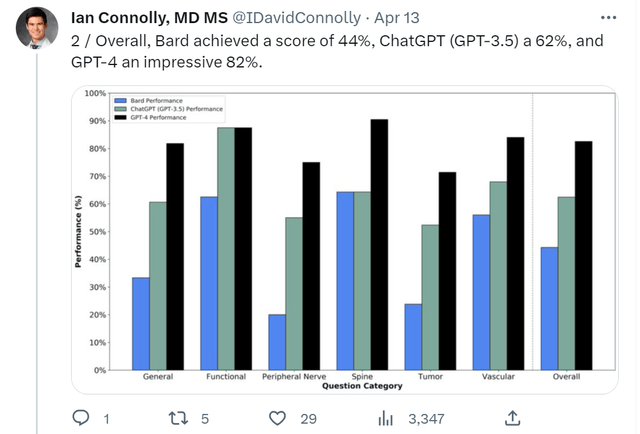

In our new paper, we challenged LLMs on an oral boards q-bank with mostly higher order questions! Also for the first time, we quantify “hallucinations” — a puzzling phenomenon where LLMs will confidently incorporate falsehoods into responses.” – Ian Connolly, MD.

Hallucinations — these LLMs do not have the ability to include image input yet. Despite not having this info, these LLMs confabulated by making up missing imaging information. On imaging-based q’s, Bard had a hallucination rate of 57% while GPT-4 had a rate of 2.3%.” – Ian Connolly, MD (emphasis added).

According to OpenAI, ChatGPT 3.5 generates wrong answers 15% to 20% of the time.

Bard is at 57%, so basically, a generation behind the free version of ChatGPT, which 2 billion people have used.

Bard can be useful, but at the moment, you have to double-check everything it tells you.

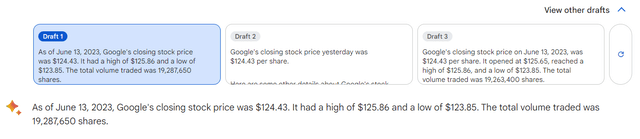

Here is a simple example.

“What was Google’s closing stock price yesterday?”

- Correct answer: $124.43.

All three drafts of the answer are correct.

But what about a more complex question? Such as finding out more about the biggest news story of the last week?

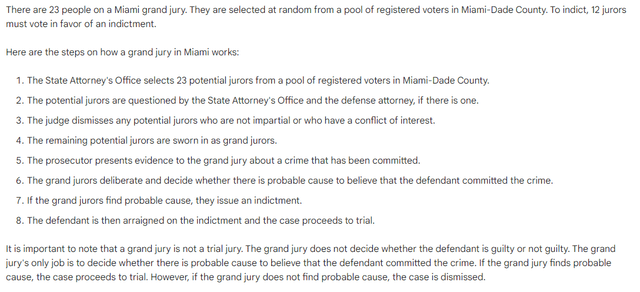

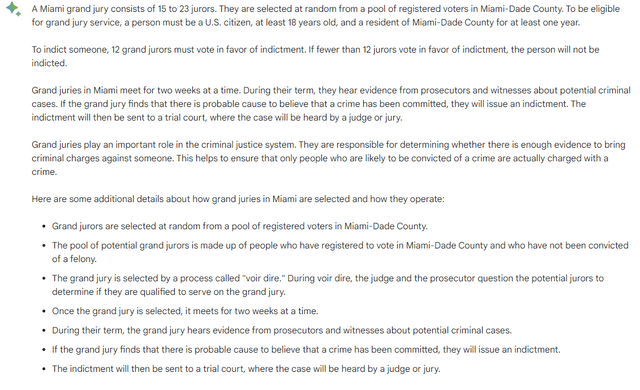

So how about, “How many people sit on Miami grand jury, how are they selected, and how many votes are needed to indict”?

Correct answer:

“The Miami-Dade County Grand Jury is an investigating, reporting, and accusing agency of the circuit court. It consists of 21 citizens who have been summoned and empaneled by a circuit court judge…

All jurors are selected at random and their names are taken from lists prepared by the clerk of the circuit court….

An affirmative vote of at least 12 members of the grand jury is necessary to the return of a true bill or indictment.” – Miami-Dade County.

So the correct answer is 21 randomly selected citizens, at least 12 of whom must vote to indict.

Now here’s how Bard responds.

Bard gets every fact right except the number of jurors: it says 23, when the correct number is 21.

But wait, this is draft 1 of 3 that Bard generated with this question.

An impressive amount of detail about the entire process, and it’s all correct except the claim that a grand jury has 15 to 23 people.

And the third draft, a much shorter summary, once again gives the incorrect number of jurors, though the rest of it is accurate.

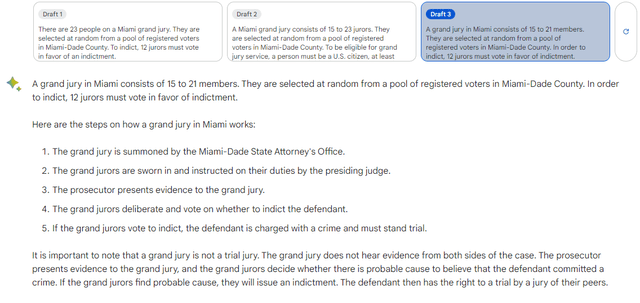

How about ChatGPT 4, which has a 2.3% hallucination rate and is roughly 25X more accurate than Bard?

ChatGPT 4 nailed it on the first try.

One more example to showcase the biggest difference I’ve found between ChatGPT and Bard.

ChatGPT Runs Circles Around Bard At Creative Content Generation

Bard’s key advantage right now is that it has real-time data from the internet and all of Google’s 18 billion overlapping users.

ChatGPT’s edge is in accuracy AND far better creative content generation.

For example, here is a fun thing I’ve seen many people try on ChatGPT.

Write a three-paragraph joke monologue in the style of Jerry Seinfeld about how many health inspectors it takes to screw in a light bulb.

“So, I was sitting in my apartment the other day, and a light bulb went out. Now, I can change a light bulb. I think we all can. But it got me thinking…how many health inspectors does it take to screw in a light bulb?

I mean, have you ever seen a health inspector’s checklist? They’ve got a comment for everything. “Oh, this light bulb… it’s not up to code. It’s a 60-watt when it clearly should be a 75-watt. This could be a serious safety hazard!” And I’m thinking, a safety hazard? It’s a light bulb, not a hand grenade!

And I can picture it now: three health inspectors standing around a single bulb. One’s got a clipboard, one’s got a manual on ‘Light Bulb Safety Regulations,’ and the other’s on the phone to the chief inspector said, “We’re gonna need a consult on this one. Could be a 100-watt situation.” And after an hour of deliberation, the light bulb finally gets changed. But then you know what happens? They all pat each other on the back, leave the room, and no one turns the light off! I mean, they’re health inspectors, not energy conservationists!” – ChatGPT 4.

That’s genuinely funny, and I can completely see Seinfeld making a joke like that.

Now here’s Bard.

“So, how many health inspectors does it take to screw in a light bulb?

I don’t know, but I can tell you that it takes at least one to write a report about it.

The other day, I went to a restaurant, and the health inspector was there. He was writing a report about the light bulbs. I mean, what’s wrong with the light bulbs? They’re working fine.

I guess the health inspector is just being careful. He doesn’t want anyone getting sick from a light bulb.

But I think he’s overreacting. I mean, it’s a light bulb. It’s not like it’s a piece of meat or something.

I think the health inspector needs to relax a little bit. He’s taking this whole light bulb thing way too seriously.

But who am I to judge? I’m just a comedian. – Bard.

This is a terrible joke, not funny at all, and it sounds nothing like Jerry Seinfeld.

Or how about the same joke in the style of Jeff Foxworthy?

Write a three-paragraph joke monologue in the style of Jeff Foxworthy about how many health inspectors it takes to screw in a light bulb.

So, the other day I was sittin’ on my porch, and I got to ponderin’. You know, you might be a health inspector if you’ve ever looked at a light bulb and thought, “I wonder if this thing’s been inspected lately?” These folks could make a federal case out of a kid’s lemonade stand.

Now, I can change a light bulb. Most folks can. But when it comes to health inspectors, how many does it take to screw in a light bulb? You’d think it’d be just one, right? But no, sir, they’ve got protocols and checklists. One to write up a report about the old bulb, one to verify the wattage of the new bulb, and one to consult the “Proper Light Bulb Installation Manual.”

Then, after they all agree that the book does everything, you know what happens? They leave the light on! Sure, the new bulb’s installed correctly, but now they’re just wastin’ electricity. And that right there, folks, is why it takes three health inspectors to change a light bulb, but you’ll still need someone with a lick of common sense to come along and turn the darn thing off! – ChatGPT.

Brilliant: it’s funny, and it sounds like classic Foxworthy.

And here’s Bard’s weak-sauce attempt.

You might be a health inspector if…

…you think it takes three health inspectors to screw in a light bulb.

One to hold the light bulb, one to turn the ladder, and one to make sure the food is still safe to eat.

I mean, come on, it’s a light bulb. It’s not rocket science.

But health inspectors are like that. They’re always looking for something to be wrong.

I guess they have to. That’s their job.

But sometimes it gets a little ridiculous.

Like the time I saw a health inspector write a citation for a restaurant because they had a dirty mop.

A dirty mop!

Seriously?

The mop was in the back room, and it wasn’t even being used.

But the health inspector saw it, and he had to write a citation.

I mean, come on.

Give me a break.

But that’s health inspectors for you.

They’re always looking for something to be wrong.

Even when there’s nothing wrong. – Bard.

Slightly better than the first one, but still not funny, and there’s no way Foxworthy would ever bring such weak material.

One more example: the same joke in the style of Chris Rock.

Write a three-paragraph joke monologue in the style of Chris Rock about how many health inspectors it takes to screw in a light bulb.

So, I was in my kitchen the other day, tryin’ to change a light bulb. Easy task, right? But then I got to thinkin’, what if health inspectors had to do this? Can you imagine how many health inspectors it would take to screw in a light bulb? These are the same people who’ll shut down a restaurant for a single cockroach but won’t do a thing about a pothole big enough to swallow your car!

So, here’s the scene, right? One health inspector walks into the room, looks at the bulb, and says, “We’re gonna need backup for this one.” Before you know it, there are three more in there, all crowding around a stepladder like a crime scene. One’s on the phone with the bulb manufacturer, checking if it’s up to code. Another’s dusting the old bulb for fingerprints. And the third? He’s just there making sure the ladder has the right safety stickers!

And after all that fuss, when they finally get the bulb screwed in, they’re all so exhausted they forget to turn the damn light off! And that, my friends, is why your tax dollars are paying for three health inspectors to change a light bulb, yet we’re still in the dark! – ChatGPT 4.

Hilarious and exactly the kind of stuff Rock does with such gusto and skill.

And here’s Bard’s version.

How many health inspectors does it take to screw in a light bulb?

One, but it takes them three hours, and they have to fill out a 20-page report.

I’m not saying health inspectors are bad people. They’re just doing their job. But sometimes they can be a little bit…overzealous.

Like when I went to a restaurant, the health inspector came in and shut the place down because there was a single fly in the kitchen.

A single fly!

I mean, come on.

The fly wasn’t even bothering anybody. It was just sitting on the wall, minding its own business.

But the health inspector had to shut the whole place down.

I don’t know about you, but I think that’s a little bit ridiculous.

But that’s health inspectors for you.

They’re always looking for something to be wrong.

Even when there’s nothing wrong.

So next time you see a health inspector, remember: they’re not there to ruin your day. They’re just there to make sure your food is safe to eat.

Even if it means shutting down the whole restaurant over a single fly. – Bard.

In places, you can see some of Rock’s classic flair and style, but ultimately the bit meanders and drops the ball.

A great joke closes by calling back to its opening theme, which gives a sense of completion. Bard instead strings together what can generously be described as “Rock-isms.”

One final example of how ChatGPT runs circles around Bard when it comes to creative content.

Write a three-verse song in the style of Weird Al Yankovic, specifically, “Amish Paradise” about Chorkies.

(Verse 1) As I walk through the valley where I hunt my favorite toys, I take a look at my coat and realize it brings joy. ‘Cause I’ve been barkin’ and struttin’ so long that, Even the mailman thinks that my mind is gone. I’m a pooch of the breed, they call a Chorkie, folks. Got a Yorkshire heart and a Chihuahua’s strokes.

(Chorus) We’ve been spending most our lives Living in a Chorkie Paradise. We chase after sticks and balls, oh so nice, Living in a Chorkie Paradise. We wag our tails once or twice, Living in a Chorkie Paradise. But you’ll pay the premium price, Living in a Chorkie Paradise.

(Verse 2) A local boy asked me in the park last week, “I thought Chorkies were quiet, why does yours speak?” I really don’t mind, I wish him well, ‘Cause my Chorkie’s bark is its own kind of spell. We’re the smallest in size, but the biggest in heart, A perfect little pooch, a work of art.

(Chorus) We’ve been spending most our lives Living in a Chorkie Paradise. We’re just plain adorable in your eyes, Living in a Chorkie Paradise. There’s no denying, we’re quite the prize, Living in a Chorkie Paradise. But remember, every paradise has its price, Living in a Chorkie Paradise. – ChatGPT.

Not that great, but not bad either. You can definitely sense the style of Weird Al here.

And here is Bard.

**Verse 1** Living in an Amish Chorkie paradise

Cutting up all the furniture with a chainsaw

Raised a barn on Monday, soon I’ll raise another In an Amish Chorkie paradise

**Chorus** Chuffing down the driveway in my little buggy With my little Chorkie, ain’t no need for a big ‘un Climbing up the mountain, I’m so carefree Living in an Amish Chorkie paradise

**Verse 2** Chuffing down the highway, ain’t no need to slow down With my little Chorkie, I’m a-havin’ a ball Stopping at the lights, people stare and point At my little Chorkie, they’re so impressed

**Chorus** Chuffing down the driveway in my little buggy With my little Chorkie, ain’t no need for a big ‘un Climbing up the mountain, I’m so carefree Living in an Amish Chorkie paradise

**Bridge** I’m so happy and content With my little Chorkie, he’s my best friend I wouldn’t trade him for the world He’s my little buddy, he’s my little man

**Chorus** Chuffing down the driveway in my little buggy With my little Chorkie, ain’t no need for a big ‘un Climbing up the mountain, I’m so carefree Living in an Amish Chorkie paradise – Bard.

All three versions of Bard’s song just put the word “chorkie” into the song; it’s not creative, fun, or funny.

ChatGPT Is The King Of Technical Content Generation

ChatGPT is famous for creating complex and technical documents: think legal documents, business meeting plans, or even entire business plans.

Write a three-paragraph bill to remove lead paint from American homes with young children at the cost of $167 billion over the next ten years.

Title: The Lead-Free Homes Act

SECTION 1. FINDINGS AND DECLARATIONS

The Congress hereby finds and declares that the continued presence of lead-based paint in American homes poses a significant health risk, especially to young children who are particularly vulnerable to lead poisoning. Lead poisoning can lead to long-term developmental issues and learning disabilities. Therefore, it is imperative to undertake an extensive, nationwide initiative to remove lead paint from American homes.

SECTION 2. ALLOCATIONS AND USE OF FUNDS

For the purpose of eliminating the risk of lead poisoning in residential properties, there is hereby authorized to be appropriated the sum of $167 billion over the next ten years. This sum shall be allocated to fund a comprehensive program of lead paint removal, particularly prioritizing homes with young children. The program shall include funding for inspections, risk assessments, lead-based paint hazard control, lead abatement, post-removal verification, and relocation assistance during the removal process, where necessary.

SECTION 3. IMPLEMENTATION AND REPORTING

The Department of Housing and Urban Development (HUD) shall be responsible for the administration and oversight of this program, working in partnership with local housing authorities and health departments. HUD shall submit an annual report to Congress detailing the progress of the initiative, funds spent, and number of homes made lead-safe. These efforts will aim to ensure that future generations of American children grow up in homes free from the risk of lead exposure. – ChatGPT 4.

Very impressive, considering it’s limited to 3 paragraphs, and actual bills run for dozens or even hundreds of pages.

And here is Bard.

A BILL

To remove lead paint from American homes with young children.

Be it enacted by the Senate and House of Representatives of the United States of America in Congress assembled,

Section 1. Short title.

This Act may be cited as the “Lead Paint Removal Act of 2023”.

Sec. 2. Findings.

Congress finds the following:

(1) Lead is a toxic substance that can cause serious health problems, including learning disabilities, developmental delays, and even death.

(2) Lead paint is a major source of lead exposure for children.

(3) Nearly 4 million children in the United States live in homes with lead paint.

(4) The cost of removing lead paint from homes is significant.

Sec. 3. Authorization of appropriations.

(A) In general—There are authorized to be appropriated to the Secretary of Housing and Urban Development $167,000,000,000 over the next ten years to carry out this Act.

(B) Use of funds—The Secretary shall use the funds appropriated under subsection (A) to—

(1) provide grants to States and localities to remove lead paint from homes with young children;

(2) provide technical assistance to States and localities on lead paint removal; and

(3) conduct research on lead paint removal.

Sec. 4. Definitions.

In this Act:

(1) The term “lead paint” means any paint that contains lead.

(2) The term “young child” means a child under the age of 6.

Sec. 5. Effective date.

This Act shall take effect on the date that is 180 days after the date of its enactment.

This bill would authorize the federal government to spend $167 billion over the next ten years to remove lead paint from homes with young children. The bill would provide grants to states and localities to carry out lead paint removal, provide technical assistance to states and localities on lead paint removal, and conduct research on lead paint removal. The bill would take effect 180 days after its enactment. – Bard.

Actually, I have to give it to Bard here. In a very limited amount of room, it really got into the details, while ChatGPT’s version reads more like a summary.

If you’ve ever actually read a Congressional bill, Bard’s is a closer approximation.

Bottom Line: Meta Is A Fine Company, But Google Might Own The Future Of Everything

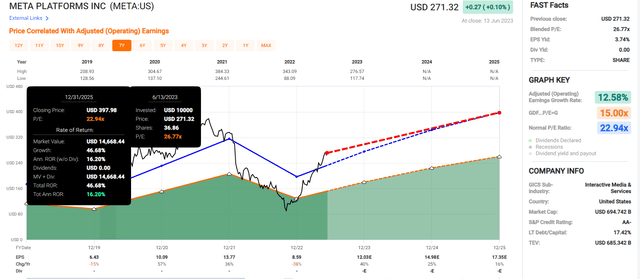

Both Meta and GOOG have been red hot this year. Both trade at approximately fair value. And both offer roughly the same return potential over the next 2.5 years: about 50%.

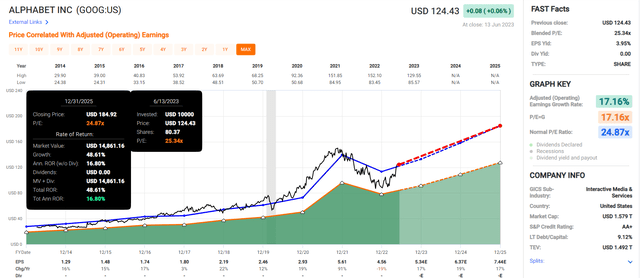

(Source: FAST Graphs, FactSet.)
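The “about 50% over the next 2.5 years” figure is easier to compare with annual growth rates once it is annualized. Here is a minimal sketch, assuming the return compounds evenly over the period (real returns are lumpier). The function name `annualized_return` is just an illustrative helper, not part of any tool mentioned in this article.

```python
def annualized_return(total_return: float, years: float) -> float:
    """Convert a cumulative return (0.50 = 50%) earned over `years` into a compound annual rate."""
    return (1.0 + total_return) ** (1.0 / years) - 1.0


# About 50% over the next 2.5 years, per the figures above.
rate = annualized_return(0.50, 2.5)
print(f"Implied annual return: {rate:.1%}")
```

That works out to roughly 17.6% a year. In other words, 50% over 2.5 years is not 20% a year, because each year’s gain compounds on the last.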

But if you want a higher-quality and faster-growing tech stock, then the winner, hands down, is Google.

Consensus AI-Tech Return Forecasts

| Investment Strategy | Yield | LT Consensus Growth | LT Consensus Total Return Potential | Long-Term Risk-Adjusted Expected Return |
| --- | --- | --- | --- | --- |
| Amazon | 0.0% | 43.2% | 43.2% | 30.2% |
| Alphabet | 0.0% | 17.3% | 17.3% | 12.1% |
| Microsoft | 0.8% | 12.2% | 13.0% | 9.1% |
| Nasdaq | 0.8% | 11.2% | 12.0% | 8.4% |
| Meta | 0.0% | 11.5% | 11.5% | 8.1% |
| S&P 500 | 1.7% | 8.5% | 10.2% | 7.1% |

(Source: FactSet, Morningstar.)

And Google wins not by a little, but by a country mile.

- 75% more return potential over the next 10 years

- 200% more over the next 20 years
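The gap widens over time because of compounding. The sketch below assumes the table’s annual rates hold steadily for the whole period (a big simplification). It compares Alphabet’s and Meta’s cumulative gains using both the total-return and risk-adjusted columns. The helper names are illustrative. How much “more return” you get depends on which column you use and on whether you compare ending wealth or cumulative gains.

```python
# Annual rates taken from the consensus table above (Alphabet vs. Meta).
RATES = {
    "Alphabet": {"total": 0.173, "risk_adjusted": 0.121},
    "Meta": {"total": 0.115, "risk_adjusted": 0.081},
}


def cumulative_gain(rate: float, years: int) -> float:
    """Cumulative gain (1.0 = 100%) from compounding `rate` once a year for `years` years."""
    return (1.0 + rate) ** years - 1.0


def extra_gain(a: float, b: float, years: int) -> float:
    """How much larger, as a fraction, the cumulative gain at rate `a` is than at rate `b`."""
    return cumulative_gain(a, years) / cumulative_gain(b, years) - 1.0


for column in ("total", "risk_adjusted"):
    for years in (10, 20):
        goog = RATES["Alphabet"][column]
        meta = RATES["Meta"][column]
        print(
            f"{column:>13} {years}y: Alphabet gain {cumulative_gain(goog, years):.0%}, "
            f"Meta gain {cumulative_gain(meta, years):.0%}, "
            f"Alphabet ahead by {extra_gain(goog, meta, years):.0%}"
        )
```

With the total-return column, the 20-year comparison lands close to the “200% more” figure, with Alphabet’s cumulative gain at roughly three times Meta’s. The 10-year result is more sensitive to which column and measure you choose.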

Meta is still a fine company that’s expected to beat the S&P by a modest amount over the very long term.

In terms of social media moats, it still has the best, courtesy of 3 billion users.

But while Meta is a great social media company that plans to use AI to keep sustaining low double-digit growth, Alphabet is a tech optionality powerhouse.

It’s the world’s top digital ad company, with:

- 93% market share in search

- 18 billion cumulative users (overlapping)

- the 3rd-largest cloud computing business, which is growing faster than Microsoft’s or Amazon’s

GOOG’s consensus growth outlook was 14% before it released Bard. After several months of upgrades, it’s now up to 17%.

That’s because Bard, while currently highly limited in its capabilities, has the potential to overtake ChatGPT within the next two to four years and become the world’s most powerful and highest-quality generative AI platform.

To stay ahead of what Bard is doing now, Microsoft would have to work with OpenAI to accelerate ChatGPT’s training schedule using Microsoft’s cloud data.

- Possibly, MSFT buys OpenAI if OpenAI’s valuation crashes in the coming recession.

Right now, GOOG is the global king of data. And Bard is improving by the week.

Expert tech reviewers have noticed that Bard is catching up to and sometimes even surpassing ChatGPT, and it’s only been out for a few months.

If GOOG plays its cards right and combines its cloud computing data with its user data from 18 billion global accounts, then it could own the future of AI and, thus, everything.

And even if it doesn’t, it is likely to become one of the two dominant AI companies on earth, along with Microsoft and its 33% share of ChatGPT, the fastest-growing technology in history.

Basically, if you want the lowest-risk way to invest in AI, buy GOOG and MSFT.

They are the Visa and Mastercard of this industry. Or the Lowe’s and Home Depot. Or the Coke and Pepsi, or the Boeing and Airbus.

The key to dominating AI is dominating data, both consumer and enterprise.

And that’s what Meta lacks today and will always lack in the future.

While Meta is still chasing the Metaverse, which few people actually want, GOOG is busy chasing the future of data, the cloud, AI, and thus everything.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.

—————————————————————————————-

Dividend Kings helps you determine the best safe dividend stocks to buy via our Automated Investment Decision Tool, Zen Research Terminal, Correction Planning Tool, and Daily Blue-Chip Deal Videos.

Membership also includes:

- Access to our 13 model portfolios (all of which are beating the market in this correction)

- my correction watchlist

- my $2.5 million family hedge fund

- 50% discount to iREIT (our REIT-focused sister service)

- real-time chatroom support

- real-time email notifications of all my retirement portfolio buys

- numerous valuable investing tools

Click here for a two-week free trial so we can help you achieve better long-term total returns and your financial dreams.