Summary:

- While most headlines lately have centered on Google’s Search business, investors may be overlooking the notable progress on the Google Cloud front.

- While the Cloud segment may appear harder to understand than the Search side, we break down, in simplified terms, the key growth opportunities behind the perplexing terminology.

- Once investors gain a clearer understanding of Google’s latest wins driving future revenue opportunities, it becomes easier to grasp the more powerful AI growth story hidden beneath the surface.


Google (NASDAQ:GOOG) has been facing a slew of negative headlines lately on the Search side of its business, including the growing competitive threat from OpenAI, the unfavorable antitrust ruling against its dominance of the Search engine market, and yet another antitrust trial underway relating to its alleged unfair advantage in the ad tech market.

In fact, in the previous article we discussed OpenAI’s entrance into the Search engine market with the launch of SearchGPT, which actually affirmed the notion that Search engines will remain important avenues for navigating the internet in this new AI era. We delved into Google’s most powerful advantages that should enable the tech giant to defend its moat from new rivals. However, Google’s growing antitrust woes could undermine these very advantages over rivals, and the market has pushed the stock into bear market territory, down around 22% from its all-time high.

Now, while the Search engine business remains the most important driver of the stock, the Google Cloud segment has also become a crucial determinant of share price performance, given that Cloud Service Providers [CSPs] are among the ‘picks and shovels’ of this AI revolution. On the company’s earnings calls, executives tend to offer high-level statistics on Google Cloud’s performance and progress. However, such statements can be insufficient for investors to make fully informed investment decisions. So in this article, we break down in simplified terms the key growth opportunities for Google Cloud beneath the surface, enabling investors to gain a firmer grasp of the underlying growth story, which other market participants may be missing. I am maintaining a ‘buy’ rating on the stock.

Google Cloud’s advantage with custom-made TPUs

Ever since this AI revolution started, Nvidia’s GPUs have been the most highly sought-after hardware devices, as they are the most capable of processing the complex computations involved in generative AI workloads. Google, along with the other major cloud providers, has reported staggering CapEx numbers as each spends heavily on loading up its data centers with these high-performance semiconductors. In Q2 2024, Google’s CapEx amounted to $13 billion, with no signs of slowing down.

At the same time, however, all the large cloud providers are now also designing their own chips. Google has the most experience on this front, having used its custom-made TPUs for internal workloads since 2015 and made them available through Google Cloud since 2018. Amazon’s (AMZN) AWS launched its Inferentia chips in 2019, and its Trainium chips were made available in 2021. Key rival Microsoft (MSFT) Azure, by contrast, came much later to the game, introducing its Maia accelerators in November 2023.

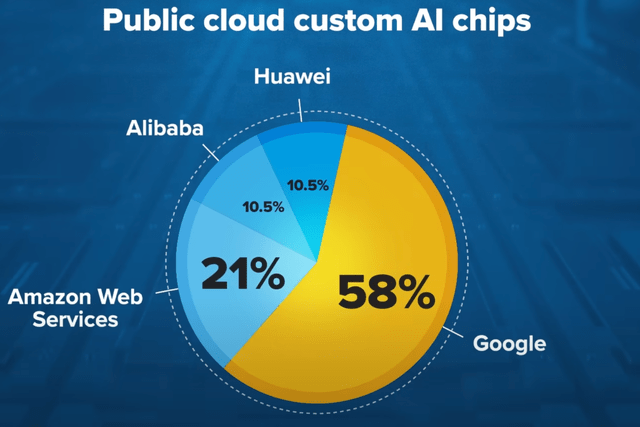

Google TPUs dominate among custom cloud AI chips, with 58% of the market, while Amazon comes in second at 21%.

– CNBC, The Futurum Group

However, offering in-house chips is just the first step, as the real battleground is software. Indeed, much of the superior performance of Nvidia’s GPUs is owed to the CUDA software layer accompanying the hardware.

CUDA is essentially the computing platform that enables software developers to program the GPUs for specific use cases across industries, including tasks like image/graph processing and physics simulations. Nvidia has been building this software platform for almost two decades now, and currently boasts 3,700 GPU-accelerated applications solving a wide range of business problems across sectors, as well as an ecosystem of 5.1 million developers globally.

For Google’s TPUs to meaningfully challenge Nvidia’s GPUs, it is vital to trigger a network effect around the platform: the more developers build applications, tools and services around the chips, the greater the value proposition of the hardware becomes, which encourages more cloud customers to use the TPUs. And the larger the installed base of cloud customers deploying Google’s custom chips for various workloads, the greater the incentive for third-party developers to build even more tools and services around the TPUs, since they can generate sufficient income by selling such services to this growing user base.

Now, in this endeavor to cultivate a network effect and subsequently a flourishing ecosystem around the TPUs, Google recently hit a home run with the revelation that Apple (AAPL) used Google’s TPUs to train its own generative AI models as part of ‘Apple Intelligence’.

To build the AI model that will operate on iPhones and other devices, Apple used 2,048 of the TPUv5p chips. For its server AI model, Apple deployed 8,192 TPUv4 processors. – Reuters

The benefits of such large-scale use of Google’s TPUs by the largest consumer electronics company in the world cannot be overstated. In fact, in a recent article discussing Nvidia’s CUDA moat, I covered how this development fortifies Google’s efforts to induce greater usage of its own chips.

This is a massive win for Google. Not just because it positions its chips as a viable alternative to Nvidia’s GPUs, but also because it could accelerate the development of a software ecosystem around its chips. In this process, third-party developers build more and more tools and applications around its TPUs that extend its capabilities, with Apple’s utilization serving as an affirmation that this hardware is worth building upon.

Such grand-scale use by one of the largest tech companies in the world should indeed help Google convince more enterprises to use its TPUs over Nvidia’s GPUs.

Consider the fact that Apple and Google are also competitors in the smartphone market, not so much in terms of iPhone vs Google’s Pixel (which holds marginal market share), but more so on the operating system level, iOS vs Android, as well as the embedded software apps running on these devices.

Keeping this in mind, Apple could have used the Trainium chips offered by Amazon’s AWS to train its AI models, but instead relied on competitor Google’s TPUs, which speaks volumes about the value proposition of Google Cloud’s custom silicon over AWS’s Trainium chips, and the wide lead it holds over Microsoft Azure on this front.

Apple even has the balance sheet and scale to design its own AI training chips in partnership with Broadcom (AVGO) or Marvell Technology (MRVL), but the need to move quickly in this AI race led it to partner with Google Cloud for its TPUs, a testament to Google’s first-mover advantage here and to just how well-positioned Google was for this AI revolution.

The point is, the deployment of Google’s TPUs by a giant like Apple should buoy Google’s endeavor to build a software ecosystem around its hardware, and in turn encourage more and more of its cloud customers to opt for its in-house silicon over Nvidia’s expensive GPUs.

In turn, this could reduce the volume of semiconductors Google has to purchase from third parties like Nvidia and AMD going forward, easing future capital expenditures, to the delight of shareholders as more top-line revenue flows down to the bottom line.

Moreover, the increasing competitiveness of Google’s TPUs would also strengthen the company’s negotiating power with Nvidia. Weaker pricing power for the semiconductor giant would not only ease Google’s CapEx pressures going forward, but also improve Return On Investment [ROI] potential for shareholders as the cost side of the equation improves over time.

Google now has to prove to investors that it can keep the ball rolling from here, with more and more enterprises adopting its TPUs; it already counts enterprises like Salesforce and Lightricks among its customers.

Market participants are now waiting to see how successful ‘Apple Intelligence’ will be. Strong consumer engagement, experiences and feedback from Apple users would signal to other cloud customers that generative AI is possible without Nvidia’s expensive GPUs. However, Apple isn’t the only consumer electronics giant relying on Google Cloud technology.

Google Cloud increasingly serving the most important form factors in the AI era

In the previous article on Google, we discussed how the company is already deploying Gemini capabilities through the Android operating system:

At Samsung’s Galaxy Unpacked event, VP of Gemini User Experience, Jenny Blackburn, showcased how Gemini can be used with applications like YouTube to ask questions about videos, and even with Google Maps to plan trips. Gemini is well-integrated with Gmail and Google Docs to be able to use users’ personal information to complete tasks.

However, the partnership with Samsung runs deeper, with the consumer electronics giant revealing that it is also deploying Google’s Gemini Pro and Imagen 2 models for its own native applications that come pre-installed on its devices.

Samsung is the first Google Cloud partner to deploy Gemini Pro on Vertex AI to consumers…Starting with Samsung-native applications, users can take advantage of the summarization feature across Notes, Voice Recorder, and Keyboard.

…

Galaxy S24 series users can also immediately benefit from Imagen 2, Google’s most advanced text-to-image diffusion technology… With Imagen 2 on Vertex AI, Samsung can bring safe and intuitive photo-editing capabilities into users’ hands…in S24’s Gallery application.

Similar to how Google is striving to build an ecosystem of software developers around its TPUs, the tech giant also seeks to cultivate a network effect around the Google Cloud Platform [GCP] itself, whereby software developers continuously extend the utility and flexibility of Google Cloud solutions.

Samsung’s use of Google Cloud infrastructure for building and running generative AI applications on smartphones could once again serve as an affirmation that this platform is worth building upon, spurring third-party software developers to build more tools and services that help Google Cloud customers deploy AI models into production in smartphone applications.

With Gemini, Samsung’s developers can leverage Google Cloud’s world-class infrastructure, cutting-edge performance, and flexibility to deliver safe, reliable, and engaging generative AI powered applications on Samsung smartphone devices.

– Thomas Kurian, CEO of Google Cloud

More and more developers working on Google Cloud should enhance the network effect and value proposition of the platform, encouraging more enterprises to come to Google Cloud to build generative AI-powered applications, which in turn should attract even more developers to Google’s flourishing ecosystem.

With Apple building Apple Intelligence models on Google Cloud’s TPUs, and Samsung building native AI features using the Gemini/Imagen models through Vertex AI, Google Cloud is becoming increasingly well-positioned to serve the infrastructure/cloud computing needs of smartphone-based AI applications.

The market currently considers smartphones the most potent form factor for enabling end-users to experience generative AI. This is thanks to the greater portability and at-hand accessibility of these devices, compared to AI PCs, for instance, which rival Microsoft has been promoting heavily, or smart home devices, which have been Amazon’s main avenue for distributing generative AI capabilities.

This is exactly where Google Cloud’s key advantage comes to the forefront, which is that parent company Alphabet also houses its own line of smartphones (the Pixel series), and more importantly, the most popular mobile operating system in the world, Android. As a result, the tech behemoth naturally had to develop effective Google Cloud infrastructure and solutions to support its own first-party computing needs.

Moreover, Google’s engineers had already been designing custom silicon with generative AI-centric smartphone applications in mind, with its own Gemini Nano model (the smallest model that can be embedded into its Pixel smartphones) being trained on the company’s TPUs. As a result, the company was well-positioned to serve third parties like Apple, which ended up using the same TPUs to train their own AI model that can run on iPhones.

Furthermore, Google’s own endeavors to embed Gemini into its Android operating system, as well as its popular suite of smartphone apps, including YouTube and Gmail, meant it needed to build appropriate Google Cloud tools for itself to enable such deployments. Consequently, solutions for deploying generative AI applications on smartphones were readily available, which helped lead Samsung to opt for Google Cloud to create and run its own generative AI features.

According to Counterpoint Research, Samsung’s share of the smartphone market was 19% in Q2 2024, while Apple held 16%. The two largest smartphone-makers collectively hold 35% of the market, and the fact that each of them used Google Cloud technology in one form or another is a testament to the cloud provider’s strong positioning to facilitate generative AI computations for the most prominent form factor in this new era.

It is certainly a key advantage over Azure and AWS, with neither Microsoft nor Amazon having its own line of smartphones or mobile operating systems. This is not to say that the two rivals are not offering suitable cloud infrastructure and solutions for smartphone applications, of course, but Google benefits from first-party workloads that drive an internal impetus for innovating the most relevant cloud solutions for the ‘smartphone + AI’ era. And that is on top of the fact that Google is the only major cloud provider that boasts its own Large Language Models (LLMs), while Microsoft still remains heavily reliant on OpenAI’s GPT models, and it was recently reported by Reuters that Amazon’s Alexa voice-assistant will be powered by AI start-up Anthropic’s Claude model. The point is, Google Cloud was much better prepared for this generative AI revolution than its competitors, at several layers of the technology stack.

In fact, beyond being well-positioned to serve smartphone-centric generative AI computation needs, Google is also working with Qualcomm (QCOM) on smart glasses, as Qualcomm’s CEO recently revealed.

Qualcomm (NASDAQ:QCOM) is working with Samsung (OTCPK:SSNLF) and Google (NASDAQ:GOOG) (NASDAQ:GOOGL) to explore mixed reality smart glasses that can be linked to smartphones, the chipmaker’s CEO Cristiano Amon told CNBC.

It has been publicized for some time now that Google and Samsung will be jointly launching a new XR (extended reality) device, the rollout of which has reportedly been pushed back to Q1 2025. Additionally, Google has developed its own Android XR operating system, with the goal of encouraging headset-makers to embed it into their own hardware devices, similar to how most smartphone-makers today embed the Android operating system into their mobile devices.

The continuous development of the Android XR operating system over time, and now the three companies working together to enable cross-device experiences with smartphones, should drive the build-out of Google Cloud infrastructure and solutions that specifically serve generative AI workloads on AR/VR headsets. Moreover, it could spur third-party software developers to create tools and services around Google Cloud that help enterprises deploy AI models into production in applications for smart glasses, which are expected to be the next important form factor for consumers to experience generative AI.

The overarching point is, Google Cloud is becoming increasingly well-positioned to serve computing workloads for the most important form factors in the era of generative AI.

Risks and counteractive factors to the bull case

Google Cloud is certainly making strides in the right direction to win market share amid the AI revolution, and on the Q2 2024 Alphabet earnings call, CEO Sundar Pichai disclosed the size of its developer base:

Year-to-date, our AI infrastructure and generative AI solutions for Cloud customers have already generated billions in revenues and are being used by more than 2 million developers.

However, an ecosystem of 2 million developers, presumably spanning both the semiconductor layer (TPUs) and the Google Cloud Platform layer, still pales in comparison to Nvidia’s extensive ecosystem, which claims 5.1 million developers for CUDA alone.

Being candid, these specialty AI accelerators [Google’s custom TPUs] aren’t nearly as flexible or as powerful as Nvidia’s platform

– Daniel Newman, CEO of The Futurum Group in an interview with CNBC

This notion of Google’s software layer still notably lagging Nvidia’s CUDA has been further corroborated by reports of developers struggling to build software around the TPUs.

Google has had mixed success opening access to outside customers. While Google has signed up prominent startups including chatbot maker Character and image-generation business Midjourney, some developers have found it difficult to build software for the chips.

Such negative experiences of developers can act as a hindrance to other software developers and companies deciding to build applications around Google’s TPUs, throwing a spanner into Google’s ambitions to cultivate a flourishing developer ecosystem the way Nvidia has done with CUDA.

This is not to say that Google is not making progress on the software side for its TPUs, but it faces a steep uphill battle.

Additionally, the tech giant also faces intensifying competition on the AI models front, with open-source models gaining preference among enterprises given their more transparent and customizable nature, as discussed in a previous article on the big risk facing Microsoft’s OpenAI edge.

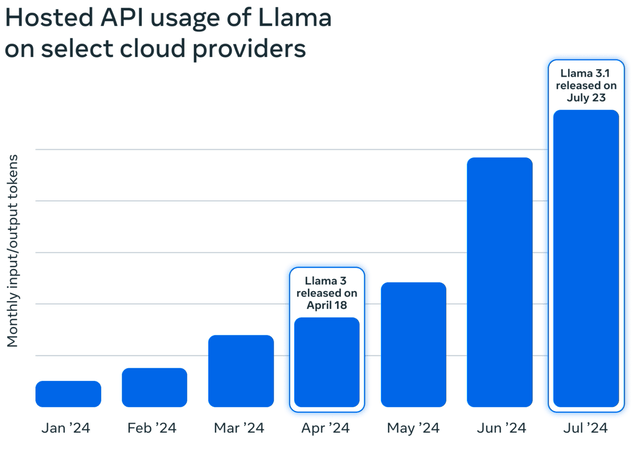

Meta Platforms (META) is perhaps the most notable threat here, having open-sourced its most powerful LLM to date, Llama 3.1, in late July. In fact, just one month after its release, Meta revealed in a blog post that:

Llama models are approaching 350 million downloads to date (more than 10x the downloads compared to this time last year), and they were downloaded more than 20 million times in the last month alone, making Llama the leading open source model family.

Llama usage by token volume across our major cloud service provider partners has more than doubled in just three months from May through July 2024 when we released Llama 3.1.

Monthly usage (token volume) of Llama grew 10x from January to July 2024 for some of our largest cloud service providers.

In fact, on the Q2 2024 Meta Platforms earnings call, CEO Mark Zuckerberg highlighted how AWS in particular has built noteworthy tools and services to help cloud customers deploy and customize the Llama models for their own use cases:

And part of what we are doing is working closely with AWS, I think, especially did great work for this release.

On top of this, Zuckerberg had also emphasized Amazon’s valuable work in the rollout of Llama in his open letter:

Amazon, Databricks, and NVIDIA are launching full suites of services to support developers fine-tuning and distilling their own models.

In the absence of Amazon having its own proprietary AI model, AWS is striving to offer the best services possible to enable cloud customers to optimally deploy open-source models, thereby protecting its dominant cloud market share from Google and Microsoft.

Note, however, that Llama 3.1 is not multimodal, which limits the extent to which it challenges Google’s powerful Gemini model at the moment. Nonetheless, Meta CEO Mark Zuckerberg has already disclosed that Meta is working on its own multimodal, agentic models, and if the social media giant decides to open-source such models as well, it would pose a threat to future demand for closed-source models like Gemini. This would be a significant blow to Google’s ROI potential for Gemini, particularly considering how capital-intensive building such models is.

It is worth noting, though, that Google released its own open-source Gemma series of models earlier this year, recognizing the growing preference for code transparency among enterprises. While the Gemma models are certainly not as powerful as Gemini, they give the company a foothold in the open-source space to sustain developer engagement on the Google Cloud Platform.

Google Financial Performance and Valuation

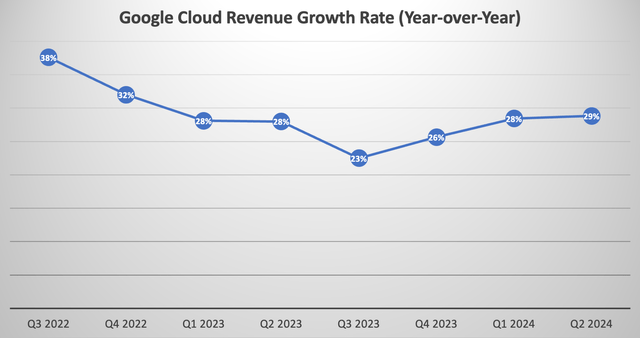

Google Cloud delivered revenue growth of around 29% in Q2 2024.

Nexus Research, data compiled from company filings

Keep in mind, though, that the Google Cloud segment consists of both the Google Cloud Platform [GCP] and the Google Workspace offerings, the suite of productivity apps competing against Microsoft’s Office 365. Moreover, on the last earnings call, CFO Ruth Porat disclosed that GCP actually grew faster than the 29% overall growth rate for Google Cloud (emphasis added):

Turning to the Google Cloud segment. Revenues were $10.3 billion for the quarter, up 29%, reflecting first significant growth in GCP, which was above growth for cloud overall and includes an increasing contribution from AI.

Nonetheless, revenue growth remains slower than the 30-40% rates the segment was delivering in 2022, just before the launch of ChatGPT triggered the AI revolution.

Furthermore, rivals AWS and Azure grew by 19% and 29%, respectively, last quarter. Consider that these competitors hold much larger market shares, 32% for AWS and 23% for Azure, relative to Google’s mere 12% share in Q2 2024, as per data from Synergy Research Group. From this perspective, the 29%+ growth rate of GCP is insufficient to impress investors, given that it is growing off a much smaller revenue base.
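To make the ‘smaller revenue base’ point concrete, here is a rough back-of-the-envelope sketch that uses only the market shares and growth rates quoted above. It assumes that Synergy’s market shares are a fair proxy for each provider’s relative revenue base, and it applies the 29% Google Cloud segment growth rate (which also includes Workspace) to Google’s infrastructure share, so the output is an approximation, not a reported figure. The helper name `incremental_share_points` is hypothetical.

```python
# Q2 2024 cloud infrastructure market shares (Synergy Research Group) and
# year-over-year growth rates, as quoted in the article.
# Assumption: market share is used as a proxy for relative revenue base.
share_pct = {"AWS": 32.0, "Azure": 23.0, "Google Cloud": 12.0}
growth = {"AWS": 0.19, "Azure": 0.29, "Google Cloud": 0.29}


def incremental_share_points(share: float, g: float) -> float:
    """Hypothetical helper: year-over-year increase in points of the current market.

    If current revenue is R and growth is g, prior-year revenue was R / (1 + g),
    so the absolute increase is R * g / (1 + g).
    """
    return share * g / (1.0 + g)


for name in share_pct:
    added = incremental_share_points(share_pct[name], growth[name])
    print(f"{name}: ~{added:.1f} points of current market added year over year")

# Relative scale of the market leader versus Google Cloud.
print(f"AWS / Google Cloud scale ratio ~ {share_pct['AWS'] / share_pct['Google Cloud']:.1f}x")
```

On these inputs the sketch prints roughly 5.1, 5.2 and 2.7 points for AWS, Azure and Google Cloud respectively, and a scale ratio of about 2.7x: despite its faster percentage growth, Google Cloud adds only about half as much new business in absolute terms as either larger rival.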

The fact that the largest competitor in the cloud market, AWS, delivered revenue growth of 19% despite being between 2-3 times larger than Google Cloud once again reflects Amazon’s ability to fight back and defend its moat in this new era. As discussed earlier, the market leader is particularly leveraging the growing prominence of open-source models over the proprietary models that gave Google and Microsoft-OpenAI their initial edge in the AI race, which could make it harder for GCP to continue taking market share from AWS going forward.

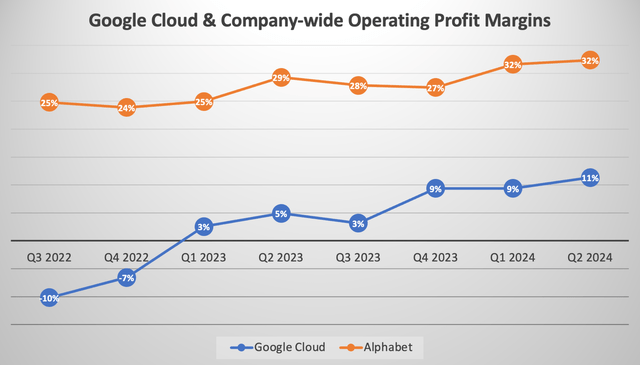

Simultaneously, Google Cloud also remains less profitable than its two key rivals, with an operating margin of 11% in Q2 2024. AWS saw an operating margin of 35.5% over the same period. Microsoft does not break out Azure’s profitability separately, though its broader Intelligent Cloud segment (which also encompasses server sales for on-premises computing) boasted an operating margin of 45% last quarter.

Nonetheless, Google Cloud has been making notable progress in expanding its profit margins since it stopped being a loss-maker in Q1 2023, contributing to the company’s overall profitability.

Nexus Research, data compiled from company filings

Just like all other major cloud providers, the tech giant has been spending heavily on CapEx, with guidance from CFO Ruth Porat on the last earnings call signaling no plans to slow down the pace of investments:

With respect to CapEx, our reported CapEx in the second quarter was $13 billion, once again driven overwhelmingly by investment in our technical infrastructure with the largest component for servers followed by data centers.

Looking ahead, we continue to expect quarterly CapEx throughout the year to be roughly at or above the Q1 CapEx of $12 billion
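As a rough illustration of what this guidance implies, the sketch below adds the quoted Q1 and Q2 figures to two more quarters at the Q1 level. It assumes that ‘roughly at or above’ can be treated as a floor of exactly $12 billion per quarter, so the result is an approximate lower bound derived from the quoted numbers, not company guidance for the full year.

```python
# Quarterly CapEx in billions of USD, as quoted from the Q2 2024 earnings call.
q1_capex = 12.0
q2_capex = 13.0

# Assumption: "roughly at or above" Q1 is treated as exactly Q1 for the
# remaining two quarters, giving an approximate floor, not a forecast.
remaining_quarters = 2
floor_2024 = q1_capex + q2_capex + remaining_quarters * q1_capex

print(f"Implied approximate 2024 CapEx floor: ${floor_2024:.0f} billion")
```

On these assumptions, the guidance points to full-year 2024 CapEx of roughly $49 billion or more.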

However, as discussed earlier in the article, Google is likely best positioned to reduce its reliance on Nvidia’s GPUs going forward, given how its in-house TPUs are increasingly becoming a viable alternative, buoyed by the fact that the largest consumer electronics company in the world, Apple, deployed these custom chips to train its own generative AI models.

As more and more cloud customers also opt for Google’s own silicon over Nvidia’s hardware, it should lower the company’s need to spend this heavily on CapEx going forward, allowing more dollars to flow to the bottom line.

Alphabet’s expected EPS FWD Long Term Growth (3-5Y CAGR) is 17.43%, which is higher than Microsoft’s 13.31%, but lower than Amazon’s 23.44%.

In terms of Google’s valuation, the stock currently trades at almost 20x forward earnings, considerably cheaper than both MSFT and AMZN, with Forward PE multiples of over 32x and 39x, respectively.

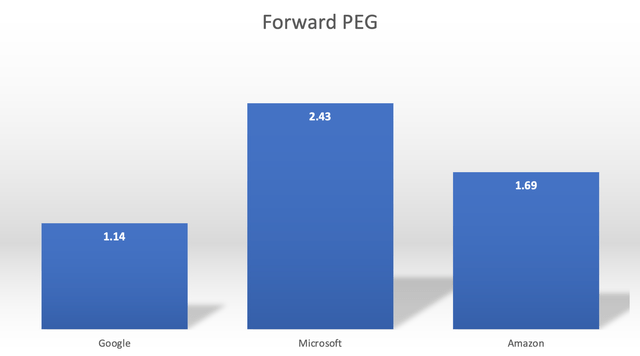

However, a more comprehensive valuation metric is the Forward Price-Earnings-Growth (PEG) ratio, which divides the Forward PE multiple by the anticipated EPS growth rate.

Nexus Research, data compiled from Seeking Alpha

For context, a Forward PEG multiple of 1x would imply that the stock is trading roughly at fair value. By this measure, Google is currently trading closest to fair value of the three major cloud providers.
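The PEG comparison can be approximated directly from the figures quoted in the text: Forward PE multiples of about 20x, 32x and 39x, and expected EPS growth of 17.43%, 13.31% and 23.44% for Alphabet, Microsoft and Amazon. This is a minimal sketch under the assumption that the rounded multiples (stated as ‘almost 20x’ and ‘over 32x and 39x’) are close enough for comparison; the exact PEG values in the chart may differ slightly.

```python
# Forward P/E multiples and expected EPS growth (3-5Y CAGR, in percent) as quoted
# in the article. The P/E values are rounded approximations ("almost 20x",
# "over 32x", "over 39x"), so the resulting PEGs are approximate too.
forward_pe = {"GOOG": 20.0, "MSFT": 32.0, "AMZN": 39.0}
eps_growth_pct = {"GOOG": 17.43, "MSFT": 13.31, "AMZN": 23.44}


def forward_peg(pe: float, growth_pct: float) -> float:
    """Forward PEG = forward P/E divided by expected EPS growth rate (in percent)."""
    if growth_pct <= 0:
        raise ValueError("PEG is undefined for non-positive growth")
    return pe / growth_pct


pegs = {t: forward_peg(forward_pe[t], eps_growth_pct[t]) for t in forward_pe}

# List the three stocks from closest to farthest from a PEG of 1x ("fair value").
for ticker, peg in sorted(pegs.items(), key=lambda kv: abs(kv[1] - 1.0)):
    print(f"{ticker}: forward PEG ~ {peg:.2f}")
```

With these inputs, the sketch prints approximately 1.15 for GOOG, 1.66 for AMZN and 2.40 for MSFT, consistent with Google trading closest to the 1x benchmark.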

That being said, GOOG is trading at a discounted valuation relative to its tech peers for a reason, reflecting the market’s growing concerns around its Search business amid the rise of new rivals and the unfavorable antitrust rulings against the company in the midst of the AI revolution. In the previous article, we discussed at length Google’s advantages for defending its moat in the AI era, and I will continue to assess the strength of its ads business as the dynamics of the industry evolve.

For now, I am maintaining a ‘buy’ rating on the stock, buoyed by Google Cloud’s strong positioning to capitalize on the generative AI era.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.