Summary:

- Advanced Micro Devices, Inc. stock has been trading in bear market territory for several months now, still down around 30% from its all-time high.

- While Nvidia Corporation is celebrated as the market leader in the AI era, mounting evidence calls for a reality check on chip demand trends, one that keeps AMD in the game.

- Even Nvidia CEO Jensen Huang has gradually begun admitting a truth about the dynamics of chip demand, a truth that falls in AMD’s favor.

- While AMD may seem expensive on a Forward P/E basis, it is actually very reasonably valued based on another, more comprehensive valuation metric.

- AMD stock is being upgraded to a “buy” rating.

David Becker

Advanced Micro Devices, Inc. (NASDAQ:AMD) stock has been trading in bear market territory for several months now, still down around 30% from its all-time high. Ever since the launch of ChatGPT triggered hype around the power of generative AI, data center customers have been spending heavily on the Graphics Processing Units (GPUs) that enable the accelerated computing needed for processing such complex workloads. As a result, rival NVIDIA Corporation (NVDA) has been the beneficiary of the lion’s share of expenditure by tech giants and enterprises striving to upgrade their infrastructure for this new era.

However, almost two years after the launch of ChatGPT, we are gaining a more realistic sense of the strengths and weaknesses of generative AI. Mounting evidence suggests that traditional Central Processing Units (CPUs) will still be needed for the foreseeable future, given the limitations of generative AI. And the data center CPU market is essentially Intel and AMD’s territory. AMD stock is being upgraded to a “buy” rating.

In the previous article, we discussed how AMD may be struggling to catch up to Nvidia in the AI race. In particular, we emphasized the uphill battle against Nvidia’s CUDA moat and the absence of rack-scale systems from AMD’s portfolio. Since then, though, AMD has made notable progress through the acquisitions of ZT Systems and Silo AI, which enable the build-out of more end-to-end solutions and more aggressive competition against Nvidia’s supercomputing clusters.

Simultaneously, despite the euphoria around GPUs, a growing amount of research is suggesting that traditional computing solutions may still have to stick around given the drawbacks of generative AI. Before the launch of ChatGPT in November 2022, the story around AMD was essentially centered around its impressive market share gains in the CPU server market from Intel.

In this article, we cover how CPUs may remain an essential technology for the foreseeable future. We also examine the value proposition of less complex, more cost-effective GPUs. Both trends play in AMD’s favor.

CPUs still in demand

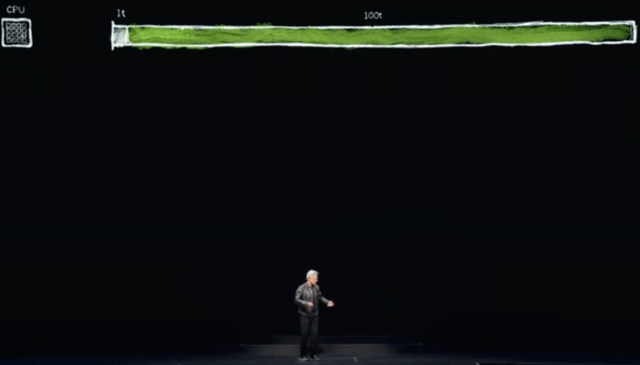

At Nvidia’s Computex event in June 2024, CEO Jensen Huang set out to highlight the projected ratio between GPU and CPU usage in this new era, with the “t” in the presentation diagram representing “units of time.” He implied that 100t will be spent on GPUs, while a mere 1t will be spent on CPUs.

Nvidia’s projected ratio between GPU and CPU usage (Nvidia Computex)

The 1t is code that requires sequential processing, where single-threaded CPUs are really quite essential. Operating systems. Control logic. Really essential to have one instruction executed after another instruction. However, there are many algorithms. Computer graphics is one that you can operate completely in parallel. Computer graphics. Image processing, physics simulations. Combinatorial optimizations, graph processing, database processing, and of course, the very famous linear algebra of deep learning. There are many types of algorithms that are very conducive to acceleration through parallel processing. So we invented an architecture to do that by adding the GPU to the CPU, the specialized processor can take something that takes a great deal of time and accelerate it down to something that is incredibly fast. And because the two processors can work side by side

– Nvidia CEO Jensen Huang, Computex 2024 (emphasis added).

Despite Huang’s affirmation that CPUs will still be needed in this new era, it’s not surprising that he projects the majority of computing workloads to run on GPUs, given that these “parallel processing” chips are the “bread and butter” of Nvidia.

Nonetheless, Huang’s affirming that CPUs will still be necessary for computing workloads like running “operating systems” and “control logic” systems is certainly welcomed by CPU vendors.

Moreover, in 2023, Nvidia even began shipping its Grace CPU, as well as the GH200 Grace Hopper Superchip, which combines the Hopper GPU with the Grace CPU. This allows Nvidia to also accommodate workloads where sequential processing is still required.

On the past several earnings calls, Huang has been touting the industry-leading energy efficiency of Nvidia’s CPU-GPU superchip, with healthy demand growth creating a new revenue source for the tech giant.

Grace Hopper Superchip is shipping in volume. Last week at the International Supercomputing Conference, we announced that nine new supercomputers worldwide are using Grace Hopper… We are also proud to see supercomputers powered with Grace Hopper take the number one, the number two, and the number three spots of the most energy-efficient supercomputers in the world.

– Nvidia CEO Jensen Huang, Q1 FY2025 Nvidia earnings call.

Nonetheless, with Nvidia charging premium prices for its semiconductors, customers will certainly want to diversify towards alternative sources. And this creates a great entry point for AMD, which possesses decades’ worth of experience in designing CPUs, and has been eating into Intel’s market share over the past decade thanks to continuous performance enhancements.

Similar to how Nvidia has cleverly combined its Grace CPU with its highly sought-after GPUs to form a superchip that can handle both sequential processing and parallel processing, AMD has also introduced its own superchip called “MI300A.” This combines AMD’s CPU and GPU architectures. AMD has highlighted the embedding of MI300A chips into the El Capitan, EVIDEN BullSequana XH3000, and HPE Cray EX supercomputers.

More notably, though, AMD has been seeing demand for its pure CPUs re-accelerate in the midst of the Gen AI revolution.

Cloud adoption remains strong as hyperscalers deploy fourth-gen EPYC CPUs to power more of their internal workloads and public instances. We are seeing hyperscalers select EPYC processors to power a larger portion of their applications and workloads, displacing incumbent offerings across their infrastructure with AMD solutions that offer clear performance and efficiency advantages. The number of AMD-powered cloud instances available from the largest providers has increased 34% from a year ago to more than 900.

– AMD CEO Lisa Su, Q2 2024 AMD earnings call.

While Jensen Huang has repeatedly asserted that the majority of all workloads in the AI era will need to be processed on GPUs, AMD CEO Lisa Su has been promoting the use of AMD’s CPUs for inferencing workloads. This includes Meta Platforms’ (META) smaller-scale Llama model:

A growing number of customers are adopting EPYC CPUs for inferencing workloads, where our leadership throughput performance delivers significant advantages on smaller models like Llama 7B

– AMD CEO Lisa Su, Q4 2023 AMD earnings call (emphasis added).

The point is that CPUs appear to be able to handle smaller AI workloads, reducing the need for expensive GPUs for simpler tasks.

Lisa Su cited a compelling list of EPYC CPU customers on the last earnings call:

We are seeing a strong pull for these instances with both enterprise and cloud-first businesses. As an example, Netflix and Uber both recently selected fourth-gen EPYC Public Cloud instances as one of the key solutions to power their mission-critical customer-facing workloads. In the enterprise, sales were increased by a strong double-digit percentage sequentially. We closed multiple large wins in the quarter with financial services, technology, health care, retail, manufacturing, and transportation customers, including Adobe, Boeing, Industrial Light & Magic, Optiver, and Siemens.

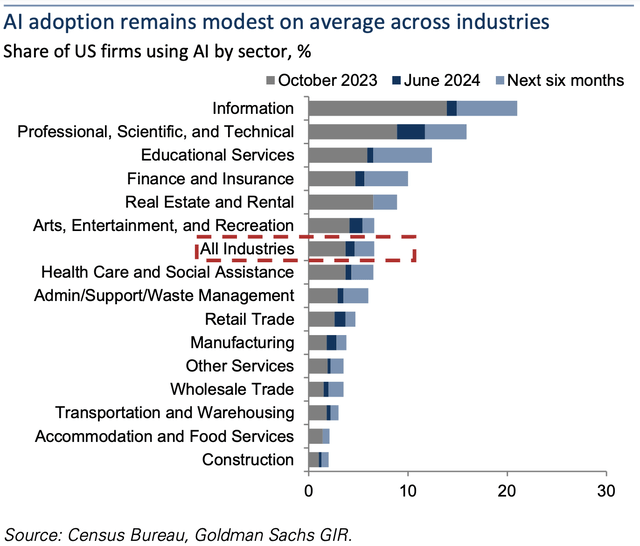

In fact, research from Goldman Sachs revealed how the average rate of AI adoption was merely in the mid-single digit percentage points across the majority of industries. Even over the next six months, less than one-tenth of companies in most industries intend to deploy AI, aside from a few early movers:

AI adoption remains very modest outside of the few industries-including computing and data infrastructure, information services, and motion picture and sound production

Despite the hype around the incredible possibilities with generative AI, the notion that it will transform or replace all traditional work tasks and processes could take longer to manifest than bulls are hoping. This is particularly true given the error-prone nature of current generative AI-powered applications:

people generally substantially overestimate what technology is capable of today. In our experience, even basic summarization tasks often yield illegible and nonsensical results. This is not a matter of just some tweaks being required here and there; despite its expensive price tag, the technology is nowhere near where it needs to be in order to be useful for even such basic tasks. And I struggle to believe that the technology will ever achieve the cognitive reasoning required to substantially augment or replace human interactions. Humans add the most value to complex tasks by identifying and understanding outliers and nuance in a way that it is difficult to imagine a model trained on historical data would ever be able to do.

– Jim Covello, Head of Global Equity Research at Goldman Sachs.

Sorting through generative AI’s current imperfections could further slow down the rate at which businesses and industries replace traditional technology with this nascent technology. As an example, Akamai Technologies, Inc. (AKAM) is reportedly sticking to conventional technologies for several of its computing tasks, with chief technology officer Robert Blumofe citing cost-performance dynamics:

Akamai, a cybersecurity, content delivery, and cloud computing company, is using more conventional forms of nongenerative AI to identify anomalies in network traffic, and flag cybersecurity incidents, and recommend products to customers

These nongenerative algorithms have the advantage of being less expensive, less energy-guzzling and more accurate than nascent generative AI models, he said. That is in part because of their smaller size, he said. “Using GenAI can mean expending megawatts to solve problems that can be solved with milliwatts,” he said.

The issue is that generative AI is being promoted as a problem-solver for problems that can still be solved by traditional, simpler and less expensive technology. The cost of running complex models on expensive GPUs raises the bar for generative AI to prove worthwhile for companies to allocate resources towards it and replace their current technology stacks. Even if the accuracy issues of generative AI-powered services are resolved, the technology could still face deployment hurdles if costs remain high, and the value addition of this new technology barely exceeds current systems. The point is, until generative AI becomes worthwhile to deploy at scale, businesses sticking to conventional systems should sustain demand for AMD’s CPUs.

In fact, there is even the possibility that generative AI may not be the right approach at all for certain computing tasks, given the reported limitations:

Generative AI is great for generating content, but of less compelling value for things like demand forecasting, anomaly detection, predictive maintenance and churn prediction, according to Sameer Maskey, founder and chief executive of enterprise AI company Fusemachines.

As a result, there is the possibility of businesses sticking to traditional AI (or non-generative AI) systems for various IT processes where generative AI simply makes little sense to incorporate. That being said, CPUs may not be the proper fit for all traditional AI workloads, as the parallel processing capabilities of GPUs could enable more efficient computing in various instances.

These conventional AI systems don’t necessarily require Nvidia’s ultra-powerful (and expensive) GPUs. Instead, AMD’s cheaper MI-series GPUs could make a better fit for these cases from a cost-performance standpoint, buoying AMD’s ability to carve out a slice of the GPU server market for itself.

Risks and contrarian arguments

Now, while AMD is well-positioned to capitalize on customers’ needs for more cost-effective computing solutions (relative to Nvidia’s hardware), through both its MI-series GPUs and EPYC CPUs, note that the company also faces competition on this front. Chief rival Intel already offers its Gaudi AI accelerators for AI workloads, and rising AI startups are also launching their chips, including Groq, which reportedly raised $640M recently to build differentiated forms of semiconductors:

Groq produces language processing units, or LPUs, which it says are faster and more cost-efficient than GPUs for handling AI training needs.

– Seeking Alpha News on the Groq funding round.

Additionally, Intel has also been proclaiming the efficacy of its CPU chips for various AI computation processes, playing down the need for expensive GPUs. For example, in partnership with Dell Technologies, Intel tested Xeon CPU-powered servers for AI inferencing workloads, proclaiming the potency of these chips for “AI computer vision workloads, such as the Scalers AI Traffic Safety Solution using object detection.”

For context, in Q2 2024, Intel held 70.8% of the server market. AMD, though, continues to gradually take share from the market leader, holding 22.5% of the server market last quarter.

The point is, while AMD’s cost-effective CPUs/GPUs are competitively positioned to run the smaller-scale generative AI workloads and traditional IT workloads, as discussed earlier, the rivals in this space should not be underestimated.

Furthermore, just because generative AI is not cost-effective today, and does not yet add enough value for businesses across industries to deploy it, does not mean it will never get to that stage.

AI technology is undoubtedly expensive today. And the human brain is 10,000x more effective per unit of power in performing cognitive tasks vs. generative AI. But the technology’s cost equation will change, just as it always has in the past…the scaling of x86 chips coupled with open-source Linux, databases, and development tools led to the mainstreaming of AWS infrastructure. This, in turn, made it possible and affordable to write thousands of software applications, such as Salesforce, ServiceNow, Intuit, Adobe, Workday, etc. These applications, initially somewhat limited in scale, ultimately evolved to support a few hundred million end-users… Nobody today can say what killer applications will emerge from AI technology. But we should be open to the very real possibility that AI’s cost equation will change, leading to the development of applications that we can’t yet imagine.

– Kash Rangan, Senior Equity Research Analyst at Goldman Sachs (emphasis added).

Indeed, the lack of generative AI-powered applications that completely transform the ways of working (and other activities) today does not mean such new-era applications will never be created.

Generative AI may not seem like a practical solution for computing processes like demand forecasting and predictive maintenance currently. However, the formation of new software applications, or even the eventual rise of AI agents that can be trained to complete entire end-to-end workflows (rather than instructing generative AI-powered chatbots to complete one task at a time), should shift an increasing number of workloads from CPUs to GPUs over time. This sustains the risk that CPUs lose importance in the future.

Now, such a major transformation could take time as companies gradually learn to trust AI agents to complete entire projects constituting multiple tasks and requiring reliable judgment for independent decision-making. So despite Nvidia CEO Jensen Huang’s assertions that the ratio of workloads shared between CPUs and GPUs will heavily lean towards GPUs, this could take longer to manifest than proclaimed.

Nonetheless, the longer-term trend remains in the direction of end-to-end automation, which will require ultra-powerful GPUs, an area where Nvidia continues to hold the lead. Currently, AMD’s key selling point over Nvidia remains the lower price point of its AI chips. However, Nvidia has reportedly been lowering the prices of its own GPUs amid intensifying competition, undermining AMD’s ability to gain market share.

Furthermore, in a recent article, we discussed Nvidia’s wide lead through the CUDA software platform, which significantly extends the capabilities of its AI chips and boasts 5.1 million developers and 3,700 GPU-accelerated applications.

Though AMD does seem to be making progress on both the hardware and software front, with CEO Lisa Su proclaiming on the last earnings call that:

On the AI software front, we made significant progress enhancing support and features across our software stack, making it easier to deploy high performance AI solutions on our platforms… customer response to our multi-year Instinct and ROCm roadmaps is overwhelmingly positive and we’re very pleased with the momentum we are building.

Nonetheless, for AMD to convince investors that it can seriously challenge Nvidia’s CUDA moat, the company will need to start offering key statistics relating to ROCm. These include the size of its developer base and the number of software applications that specifically extend the functionalities of its MI-series chips.

And that is just one aspect of the software race. In a previous article, we discussed in depth just how ready Nvidia was for the AI era with its “NVIDIA AI Enterprise” software service, which essentially serves as an operating system for running generative AI-powered applications.

The Kash Rangan quote cited earlier mentioned how the improving cost-effectiveness of AWS during the cloud computing revolution gave rise to various enterprise software applications. It is worth noting that software giants like ServiceNow have already adopted “NVIDIA AI Enterprise” as an operating system powering their new software applications, a testament to the wide lead Nvidia has over AMD.

AMD financial performance and valuation

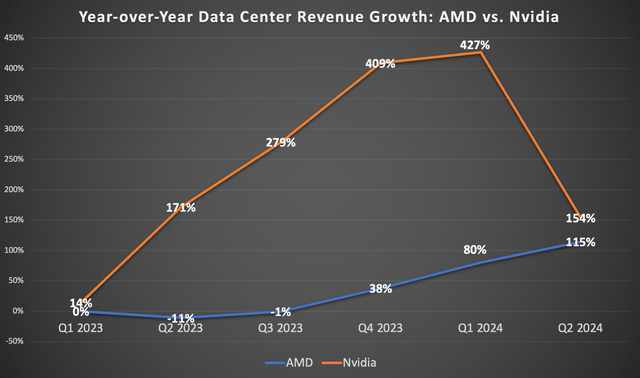

Despite Nvidia’s clear leadership over AMD in the AI race, the Lisa Su-led company delivered impressive data center revenue growth of 115% last quarter.

Nexus Research, data compiled from company filings

data center segment revenue increased 115% year-over-year to a record $2.8 billion, driven by the steep ramp of Instinct MI300 GPU shipments and a strong double-digit percentage increase in EPYC CPU sales.

– Lisa Su, Q2 2024 AMD earnings call.

While the focus has been on the ramping of the company’s AI chips, the robust growth of CPU sales in the midst of the AI revolution is a favorable development. It is, in fact, a continuation from the prior quarter when Lisa Su also highlighted a “double-digit percentage increase in server CPU sales.”

As discussed throughout the article, this is a testament to CPUs continuing to offer value as businesses stick to traditional technologies until generative AI proves worthwhile and cost-effective, which Lisa Su highlighted effectively during the April earnings call.

In the enterprise, we have seen signs of improving demand as CIOs need to add more general purpose and AI compute capacity while maintaining the physical footprint and power needs of their current infrastructure. This scenario aligns perfectly with the value proposition of our EPYC processors… As a result, enterprise adoption of EPYC CPUs is accelerating, highlighted by deployments with large enterprises

– CEO Lisa Su, Q1 2024 AMD earnings call.

This demand for EPYC processors should be sustained while generative AI proponents address its limitations and flaws. They will strive to convince corporate customers that this new technology is worth deploying across their organizations in place of cheaper, traditional technology stacks. As cited earlier, with less than 10% of businesses across most industries adopting or planning to adopt AI in 2024, this transformational shift could take longer than bulls are proclaiming, to the benefit of CPU vendors like AMD.

That being said, the longer-term trend still leans towards GPU-accelerated computing as the technology is perfected over time, and services like AI agents with end-to-end workflow automation become increasingly prevalent.

While AMD’s sales of GPUs and CPUs (as well as other networking products) added up to $2.8 billion in data center revenue last quarter, that figure still pales in comparison to Nvidia’s $26.3 billion in data center revenue in Q2 FY2025. The majority of that was sales of accelerated hardware devices. This is a testament to the belief among corporate tech leaders that accelerated computing, to run technologies like generative AI, is still the future.

AMD’s GPUs were initially considered by the market to be more suitable for smaller-scale AI deployments, with Nvidia’s GPUs the preferred choice for more complex, larger models. However, AMD is increasingly proving that its GPUs are also very effective for large-scale deployments.

multiple partners used ROCm and MI300X to announce support for the latest Llama 3.1 models, including their 405 billion parameter version that is the industry’s first frontier-level open-source AI model. Llama 3.1 runs seamlessly on MI300 accelerators, and because of our leadership memory capacity, we’re also able to run the FP16 version of the Llama 3.1 405B model in a single server, simplifying deployment and fine-tuning of the industry-leading model and providing significant TCO advantages.

– Lisa Su, Q2 2024 AMD earnings call.
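The single-server claim can be sanity-checked with back-of-the-envelope arithmetic. The sketch below is illustrative only and rests on figures that are not in the quote: it assumes 192 GB of HBM per MI300X accelerator and an eight-accelerator server, and it counts model weights only, ignoring the extra memory needed for activations and the KV cache.

```python
# Rough memory check for running Llama 3.1 405B in FP16 on one server.
# ASSUMPTIONS (not from the earnings call): 192 GB HBM per MI300X,
# 8 accelerators per server, weights only (no KV cache or activations).

PARAMS = 405_000_000_000      # 405 billion parameters
BYTES_PER_PARAM_FP16 = 2      # FP16 stores each parameter in 2 bytes
HBM_PER_GPU_GB = 192          # assumed MI300X memory capacity
GPUS_PER_SERVER = 8           # assumed server configuration

weights_gb = PARAMS * BYTES_PER_PARAM_FP16 / 1e9
server_hbm_gb = HBM_PER_GPU_GB * GPUS_PER_SERVER
headroom_gb = server_hbm_gb - weights_gb
fits_in_one_server = weights_gb <= server_hbm_gb

print(f"FP16 weights: {weights_gb:.0f} GB")
print(f"Server HBM:   {server_hbm_gb} GB")
print(f"Headroom:     {headroom_gb:.0f} GB, fits: {fits_in_one_server}")
```

Under these assumptions the weights alone take roughly 810 GB, which is why memory capacity per accelerator matters so much for how many servers a large model needs.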

So in essence, AMD is well-positioned to capitalize in two areas. It meets accelerated compute needs with its MI-series proving increasingly economical for running the industry’s largest, leading AI models. It also services the sustained need for general compute with its powerful EPYC processors, until businesses and industries can fully trust generative AI systems to replace traditional IT processes.

Additionally, even though AMD is spending heavily to catch up to Nvidia in the AI race, the company has still been able to deliver both gross and operating profit margin expansion for investors:

the team also started to implement operational optimization to continue to improve gross margin. So we continue to see the gross margin improvement. Over time, in the longer term, we do believe gross margin will be accretive to the corporate average

…

Data center segment operating income was $743 million or 26% of revenue compared to $147 million or 11% a year ago. Operating income was up more than 5 times from the prior year, driven by higher revenue and operating leverage, even as we significantly increase our investment in R&D.

– AMD CFO Jean Hu, Q2 2024 AMD earnings call.
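The margin and growth figures in that statement can be cross-checked against the revenue numbers quoted earlier. The following is a minimal sketch using only the rounded figures cited in this article ($2.8 billion revenue, 115% growth), so the results are approximate; the variable names are our own.

```python
# Cross-check of AMD's data center operating margin using the rounded
# figures quoted in the article (all amounts in millions of dollars).

revenue_now_m = 2800.0        # "record $2.8 billion" (rounded)
revenue_growth = 1.15         # "increased 115% year-over-year"
op_income_now_m = 743.0
op_income_prior_m = 147.0

# Current operating margin: operating income divided by revenue.
margin_now = op_income_now_m / revenue_now_m

# Implied prior-year revenue, backed out from the growth rate.
revenue_prior_m = revenue_now_m / (1 + revenue_growth)
margin_prior = op_income_prior_m / revenue_prior_m

# How many times larger operating income is versus a year ago.
income_multiple = op_income_now_m / op_income_prior_m

print(f"Operating margin now:   {margin_now:.1%}")
print(f"Operating margin prior: {margin_prior:.1%}")
print(f"Operating income grew:  {income_multiple:.2f}x")
```

Because the revenue input is rounded, the current margin comes out slightly above the 26% stated on the call, while the prior-year margin and the “more than 5 times” increase line up with the quote.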

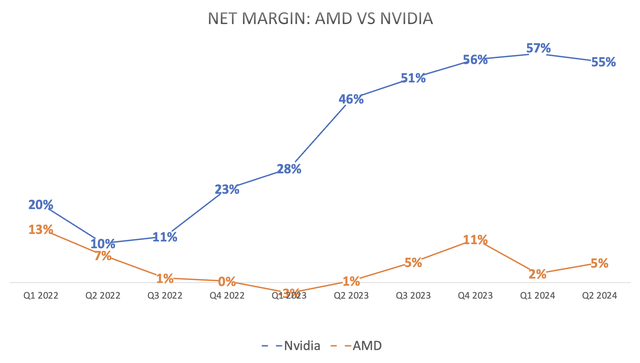

Despite AMD’s improving profitability, though, its current net margin of 5% is less than one-tenth of Nvidia’s lofty 55% net margin, with the market leader enjoying much stronger pricing power.

Nexus Research, data compiled from Morningstar

And as mentioned earlier in the risks section of the article, Nvidia has been cutting prices for its GPUs to fend off competitors trying to take market share. Intensifying price competition would compress Nvidia’s enviable profit margins, but would also undermine AMD’s ability to expand its own net margins.

AMD’s Earnings Per Share [EPS] is currently $0.16, a fraction of Nvidia’s EPS of $0.68.

Now, the market is expecting AMD’s EPS to grow at an attractive rate, with an EPS FWD Long Term Growth (3-5Y CAGR) of 43.03%. Yet despite Nvidia’s much larger EPS, its projected EPS growth rate of 37.22% is quite close to AMD’s. This is a testament to the market’s expectation that Nvidia will sustain its market leadership, and is well-positioned to generate recurring software revenue off the large installed base of its GPU customers.

In terms of AMD stock’s valuation, the shares currently trade at around 43x forward earnings, which is in line with the stock’s 5-year average valuation, and roughly where rival Nvidia trades, at less than 45x forward earnings.

However, adjusting the Forward P/E multiples by the anticipated EPS growth rates gives us Forward Price-Earnings-Growth [PEG] multiples of 1.01x and 1.20x for AMD and Nvidia, respectively. So from this perspective, AMD is notably cheaper than NVDA.

For context, a Forward PEG ratio of 1 would imply the stock is trading at fair value. So AMD is essentially trading at fair value. Buying a stock at a Forward PEG of 1 reduces the risk of overpaying in case the earnings growth rate turns out to be much slower than anticipated.
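For readers who want to check the arithmetic, the Forward PEG calculation can be sketched as below. This is a minimal illustration, not the exact method behind the figures quoted above: the function name `forward_peg` is our own, and the P/E inputs are the rounded multiples from the text (“around 43x” and “less than 45x”), so the results land close to, rather than exactly on, 1.01x and 1.20x.

```python
# Forward PEG = Forward P/E divided by the expected EPS growth rate (in %).
# `forward_peg` is a hypothetical helper; inputs are the article's figures,
# with the P/E multiples rounded as quoted in the text.

def forward_peg(forward_pe: float, growth_pct: float) -> float:
    """Return the forward PEG ratio for a given forward P/E and growth rate."""
    if growth_pct <= 0:
        raise ValueError("PEG is not meaningful for non-positive growth")
    return forward_pe / growth_pct

amd_peg = forward_peg(43.0, 43.03)    # ~43x forward P/E, 43.03% growth
nvda_peg = forward_peg(45.0, 37.22)   # just under 45x forward P/E, 37.22% growth

print(f"AMD forward PEG:  {amd_peg:.2f}x")
print(f"NVDA forward PEG: {nvda_peg:.2f}x")
print(f"AMD cheaper on PEG: {amd_peg < nvda_peg}")
```

The takeaway is the same as in the text: two stocks with similar Forward P/E multiples can look quite different once the multiple is divided by expected growth.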

At this attractive valuation, Advanced Micro Devices, Inc. stock is an appealing buy, particularly given the sustained demand for its CPU chips for longer-lasting traditional IT workloads, as well as the improving value proposition of its GPUs for generative AI computations.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.