Summary:

- New businesses running on Azure create new network effects, constantly growing the revenue potential from various industry verticals.

- Empowering cloud customers to build their own copilots is an incredible moat builder, strengthening Azure’s revenue growth potential.

- Buying Microsoft at a forward P/E of over 34x may indeed seem dangerous, but sitting on the sidelines waiting for a cheaper valuation could be more painful.

- If you buy Microsoft stock at the expensive valuation and the stock corrects, don’t sweat it; it’s an opportunity to buy more.


The 1990s and 2000s witnessed the rise of the internet, and the 2010s saw the rise of the smartphone economy through mobile apps. Likewise, the 2020s will be the age of AI, and Microsoft (NASDAQ:MSFT), through its Azure cloud platform, stands to be one of the greatest beneficiaries of this revolution. Through the OpenAI partnership, Azure is able to offer AI-optimized infrastructure and industry-leading foundation models to help accelerate customers’ AI endeavors, allowing it to serve new industry verticals and promising long-term revenue growth potential. Despite Microsoft’s lofty valuation, the stock is a buy.

The growth potential of Azure OpenAI

The Azure OpenAI Service, which became generally available in January 2023, offers cloud customers access to OpenAI’s industry-leading foundation models, including GPT-4, DALL-E 2, and Codex.

For context, foundation models form the basis for developing a wide range of AI applications and services in the cloud. These models can be customized by cloud customers’ developers to suit their specific business or industry requirements, providing a starting point for creating new AI-driven products and services. For instance, GPT-4 is the foundation model that serves as the base of Microsoft’s Bing Chat service.

Microsoft has evidently struck gold with exclusive access to OpenAI’s foundation models. ChatGPT has become one of the fastest-growing apps in the world, and businesses across industries want to replicate this success by integrating their own products and services with OpenAI’s models, including the advanced large language model GPT-4. Unsurprisingly, Microsoft is seeing stellar customer acquisition for its Azure OpenAI Service, as CEO Satya Nadella shared on the last earnings call:

From Coursera and Grammarly to Mercedes-Benz and Shell, we now have more than 2,500 Azure OpenAI Service customers, up 10x quarter-over-quarter…

Azure also powers OpenAI API and we are pleased to see brands like Shopify and Snap use the API to integrate OpenAI’s models. More broadly, we continue to see the world’s largest enterprises migrate key workloads to our cloud. Unilever, for example, went all in on Azure this quarter in one of the largest ever cloud migrations in the consumer goods industry.

Furthermore, new workloads are arriving on Azure not only from businesses that come to Azure directly, but also from businesses that use OpenAI’s APIs to integrate their services with ChatGPT, since these APIs run on Azure. Consequently, the OpenAI partnership is enabling Microsoft to attract customers from industries it had little experience serving before, as Satya Nadella explained:

Some of the work we’ve done in AI even in the last couple of quarters, we are now seeing conversations we never had, whether it’s coming through you and just OpenAI’s API, right? If you think about the consumer tech companies that are all spinning essentially Azure meters, because they have gone to OpenAI and are using their API. These were not customers of Azure at all.

Second, even Azure OpenAI API customers are all new, and the workload conversations, whether it’s B2C conversations in financial services or drug discovery on another side, these are all new workloads that we really were not in the game in the past, whereas we now are.

This is a testament to just how strongly positioned Azure has become to take market share in the cloud industry, thanks to its OpenAI partnership. For instance, Shopify was primarily a Google Cloud customer, but thanks to exclusive access to OpenAI’s versatile foundation models, Shopify is now also running workloads on Microsoft Azure.

Keep in mind that new types of workloads running on Azure also induce new network effects, constantly augmenting the revenue potential from various industry verticals. For instance, going back to the Shopify case, as the e-commerce giant deploys Azure OpenAI’s foundation models as the base of its new services, Shopify’s developers (as well as third-party developers specializing in e-commerce) will inevitably build applications and tools around Azure OpenAI that better serve the e-commerce industry’s needs. This in turn can attract even more e-commerce players to the Azure platform, and so the virtuous network cycle plays out.

OpenAI’s advanced large language model, GPT-4, is indeed proving to be a big game-changer, which will give birth to all sorts of new products and services across industries. This will result in the generation of new data sources, which can subsequently be used by enterprises to further update and improve their models. As companies consistently refine their AI-driven solutions, more and more data is generated, which leads to increased training workloads for enhancing these models. As a result, there will be a continuous need for computational power, as enterprises frequently retrain and optimize models using larger datasets. This persistent demand for training and inferencing translates to long-term revenue growth potential for Microsoft Azure.

For instance, the rise of ChatGPT has captivated online education platforms. Coursera became an Azure OpenAI Service customer last quarter, and Khan Academy uses OpenAI’s API, making it indirectly an Azure customer as well. For one, educational content naturally keeps growing over time, as new historical events occur and new scientific discoveries are made. But in the era of generative AI, online tutoring platforms will also constantly collect data on how students interact with the platform, such as the questions they ask and which teaching techniques and examples work better than others. This data will then feed into retraining and optimizing the underlying AI models. Therefore, regular retraining and optimization on ever-larger datasets compounds the computing power required from Azure, resulting in strong revenue growth over time.

Furthermore, ChatGPT’s popularity enables Microsoft to cross-sell other cloud solutions that OpenAI relies on to run its own successful services. One of these solutions is Cosmos DB, a “relational database for modern app development”. CEO Satya Nadella said on the call:

Cosmos DB is the go-to database, powering the world’s most demanding workloads at any scale. OpenAI relies on Cosmos DB to dynamically scale their ChatGPT service, one of the fastest-growing consumer apps ever, enabling high reliability and low maintenance… we are taking share with our analytics solutions. Companies like BP, Canadian Tire, Marks & Spencer and T-Mobile all rely on our end-to-end analytics to improve speed to insight.

Microsoft is indeed striving to deeply integrate Cosmos DB with its Azure AI and Azure OpenAI services, making it a crucial element of the AI development process, thereby maximizing the revenue it generates per customer and keeping customers increasingly locked into the Azure ecosystem.

GitHub Copilot, which “turns natural language prompts into coding suggestions across dozens of languages”, is another key AI-powered service to keep customers tied to the Microsoft Azure platform. GitHub Copilot is powered by OpenAI’s Codex foundation model.

While discussion of Copilot has intensified amid the generative AI hype, Microsoft actually introduced it in June 2021, made GitHub Copilot generally available in June 2022, and in February 2023 launched GitHub Copilot for Business, which adds features like organization-wide policy management and advanced privacy. On the last earnings call, the Microsoft CEO shared:

Today, 76% of the Fortune 500 use GitHub to build, ship and maintain software. And with GitHub Copilot, the first at-scale AI developer tool, we are fundamentally transforming the productivity of every developer from novices to experts. In three months since we made Copilot for Business broadly available, over 10,000 organizations have signed up, including the likes of Coca-Cola and GM as well as Duolingo and Mercado Libre, all of which credit Copilot with increasing the speed for their developers.

Developers’ enhanced ability to produce and iterate software more efficiently enables customers to roll out new AI-powered services and offer continuous advancements at an accelerated pace, which should enable these companies to produce revenue faster from new product introductions, and potentially even improve their pricing power, depending on how effectively developers can use Copilot to enhance the value proposition of their services.

As Microsoft Azure customers benefit from faster revenue growth brought about by advancements in generative AI, it strengthens Microsoft’s ability to set prices for these tools and facilitates faster revenue extraction from customers. As businesses strive to remain competitive by continually iterating their services, Microsoft can leverage this improved pricing power to capture revenue at an accelerated rate.

It is no surprise, then, that earlier this month at a Microsoft event discussing AI, EVP & CFO Amy Hood said she believed “the next generation AI business will be the fastest growing $10 billion business in our history. I think I have that confidence because of the energy we’re seeing.” EVP of AI & CTO Kevin Scott reaffirmed this optimism, saying “yeah, a hundred percent. And the other thing too, I will say, is that because it really is a very general platform, we have lots of different ways that that $10 billion of ARR [Annual Recurring Revenue] is going to first show up. So, there is all of the people who want to come use our infrastructure, whether they’re training their own models, whether they are running an open source model they’ve got, or whether they are making API calls into one of the big frontier models that we’ve built with OpenAI.”

Investors should recognize that Microsoft Azure’s revenue growth potential is not just driven by high demand for AI infrastructure from organizations across various industries seeking to transform their operations and product offerings. It is also magnified by Microsoft’s ability to meet that demand with powerful solutions, such as GitHub Copilot, that help cloud customers build and deploy AI-powered products faster, allowing Microsoft to better monetize customers and generate revenue more rapidly.

That being said, investors may not see immediate results. Accelerated revenue growth from innovations like GitHub Copilot should materialize more fully over time as the tools become more efficient. Currently, Copilot can generate “46% [of a developer’s code] across programming languages”, and GitHub CEO Thomas Dohmke expects this to reach 80% soon. Additionally, “Dohmke noted that the team is constantly working to improve latency. GitHub’s data shows that developers quickly get restless when it takes too long for Copilot to generate its code”.

Furthermore, Google has introduced its own Codey model, “which accelerates software development with real-time code completion and generation, customizable to a customer’s own codebase”, rivaling the OpenAI Codex model that powers Copilot. Such competition could undermine Microsoft’s revenue growth potential through Copilot.

Nevertheless, Microsoft is doubling down on the promise of Copilot technology with the launch of Azure AI Studio in May. Through it, “developers can now ground powerful conversational AI models, such as OpenAI’s ChatGPT and GPT-4, on their own data”, and by combining this with Azure Cognitive Search, developers can “create richer experiences and help users find organization-specific insights, such as inventory levels or healthcare benefits, and more”.

It essentially enables customers to build their own copilots, while giving them better control over data privacy. Furthermore, these in-house, customized AI copilots can be enhanced with cloud-based storage to effectively track conversations with users and respond contextually based on previous conversations. The copilots can also be extended by integrating them with third-party plug-ins, granting them access to external data sources as well as additional third-party business services.

The overarching point is this. Once businesses build their own copilots using Azure OpenAI’s foundation models, they are unlikely to build an additional, similar chatbot with another cloud provider’s AI models. Ideally, they will manage only one such application that serves the entire organization. Moreover, their customized copilots will only get better over time as more employees use them more regularly. As mentioned earlier, the cloud-based storage functionality will store previous conversations, which not only allows the chatbot to offer more contextually aware responses, but also provides data that can feed into retraining and optimizing the customized AI models. This significantly enhances Microsoft’s ability to keep customers locked into the Azure platform, reducing the likelihood of customers migrating away from Azure and bolstering the software giant’s ability to generate recurring revenue. Furthermore, as the customized copilots become increasingly embedded in businesses’ core daily operations, they also create great opportunities for cross-selling more Azure solutions, encouraging customers to move more of their workloads to Azure and enhancing Microsoft’s revenue growth prospects.

Cloud industry rivals will indeed offer similar capabilities to stay competitive. Even so, customized copilots could discourage cloud customers from adopting multi-cloud strategies (whereby customers use more than one cloud computing service from different providers to meet their organization’s needs), as it becomes increasingly convenient to have all data stored and managed with one provider. Whether or not customers forsake their multi-cloud strategies, offering them the ability to create their own customized chatbots will become an increasingly strong moat factor, crucial to retaining customers and market share. With the introduction of Azure AI Studio, Microsoft has made a powerful competitive move, better positioning it for both market share retention and growth, conducive to long-term revenue growth.

Is Microsoft’s P/E too high?

The biggest risk to the bull case for Microsoft is its valuation. With the generative AI hype pushing Microsoft’s stock price nearly 40% higher this year, investors are starting to question whether the stock is becoming too expensive. Microsoft’s forward P/E is currently more than 34x, a roughly 17% premium to its 5-year average forward P/E of just over 29x.
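To make the valuation gap concrete, the short Python sketch below computes the premium of the current multiple over the 5-year average, along with the price decline that would close that gap if forward earnings estimates stayed flat. The inputs of 34.0 and 29.0 are rounded assumptions (the article only gives “more than 34x” and “over 29x”), and the function names are illustrative, not from any source.

```python
def multiple_premium(current: float, baseline: float) -> float:
    """Fractional premium of the current valuation multiple over a baseline."""
    return current / baseline - 1.0


def decline_to_baseline(current: float, baseline: float) -> float:
    """Price decline needed to return to the baseline multiple.

    Simplifying assumption: forward earnings estimates stay unchanged,
    so the multiple moves one-for-one with the share price.
    """
    return 1.0 - baseline / current


# Rounded assumptions: the article only says "more than 34x" and "over 29x".
current_forward_pe = 34.0
five_year_avg_forward_pe = 29.0

premium = multiple_premium(current_forward_pe, five_year_avg_forward_pe)
decline = decline_to_baseline(current_forward_pe, five_year_avg_forward_pe)
print(f"Premium to 5-year average: {premium:.1%}")  # 17.2%
print(f"Decline to 5-year average: {decline:.1%}")  # 14.7%
```

The two figures differ because the premium is measured against the lower average multiple while the decline is measured against the higher current price, so a roughly 17% premium would be erased by a price drop of under 15%, all else equal.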

Microsoft’s largest segment, and consequently the main driver of its financial performance, is the Intelligent Cloud unit, which consists of both Azure Cloud Services and Server Products and contributed 42% of total revenue in fiscal Q3 2023. It is also the fastest-growing segment, with a 3-year CAGR of almost 25%, primarily driven by Azure.
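For a sense of scale, a compound annual growth rate (CAGR) near 25% sustained over three years implies that segment revenue nearly doubled. The sketch below assumes an even 25% rate (the article says “almost 25%”) and shows the compounding in both directions; the helper names are hypothetical.

```python
def growth_factor(cagr: float, years: int) -> float:
    """Total growth multiple implied by compounding `cagr` for `years` years."""
    return (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """CAGR that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# Assumption: an even 25% CAGR over 3 years (the article says "almost 25%").
factor = growth_factor(0.25, 3)
print(f"3-year growth factor: {factor:.3f}x")                 # 1.953x
print(f"Recovered CAGR: {implied_cagr(1.0, factor, 3):.1%}")  # 25.0%
```

Compounding is why three years at 25% adds up to roughly 95% total growth, rather than the 75% a simple sum of the yearly rates would suggest.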

Azure’s generative AI potential is undoubtedly enormous. Through the Azure OpenAI Service, and the OpenAI partnership more broadly, Microsoft is strongly positioned to attract businesses from diverse industries, creating new network effects that augment revenue growth potential. Furthermore, new AI-powered innovations like GitHub Copilot should accelerate revenue generation, and offering the AI infrastructure that lets customers build their own copilots enables Microsoft to both sustain and grow recurring revenue through cross-selling, market share retention, and market share gains.

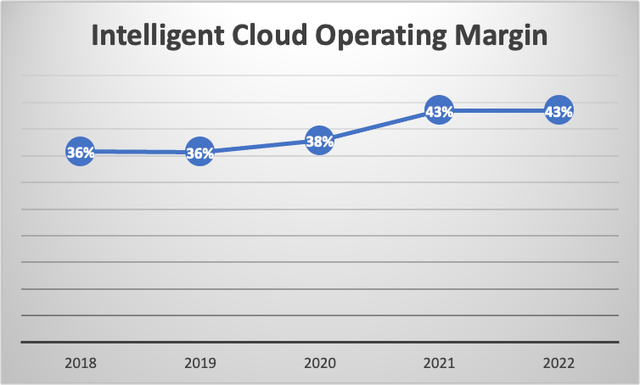

Nonetheless, facilitating the training, inferencing, and deployment of customers’ growing AI models and applications will indeed be capital intensive, and revenue growth will need to outpace expense growth to sustain profitability levels. The Intelligent Cloud segment has delivered profit margin expansion over the last several years, from 36% in 2018 to 43% in 2022, according to company filings.

However, on the last earnings call, CEO Satya Nadella and CFO Amy Hood emphasized market share gains over near-term profitability:

Amy Hood

…It’s probably a good opportunity to explain a bit about how I think about where we are, which is — if you look at all of the businesses we’re in and we look about our competitiveness in those businesses. And this is before Satya started to comment a bit about our relative performance versus absolute. And I’ll tell you that the energy and focus we put right now is on relative performance and share gains.

… it is, in fact, how we think about long-term success, in being well positioned in big markets, taking share in those markets, committing to make sure we’re going to lead this wave, staying focused on gross margin improvements where we can.

Satya Nadella

Yeah. I mean, just to add to it, during these periods of transition, the way I think as shareholders, you may want to look at is what’s the opportunity set ahead. We have a differentiated play to go after that opportunity set, which we believe we have. Both the opportunity set in terms of TAM is bigger, and our differentiation at the very start of a cycle, we feel we have a good lead and we have differentiated offerings up and down the stack.

And so therefore, that’s the sort of approach we’re going to take, which is how do we maximize the return of that starting position for you all as shareholders long term. That’s sort of where we look at it. And we’ll manage the P&L carefully driving operating leverage in a disciplined way but not being shy of investing where we need to invest in order to grab the long-term opportunity. And so obviously, we will see share gains first, usage first, then GM, then OPInc, right, like a classic P&L flow. But we feel good about our position.

Given the opportunity ahead, Microsoft’s executives are right to prioritize gaining market share from competitors over profitability. Consequently, Microsoft is unlikely to shy away from the large-scale capital investments needed to capture the lucrative opportunities in AI. Over the near term, though, this could compress profit margins, which may make investors more wary of Microsoft’s lofty valuation multiple.

Nonetheless, even in such a scenario, the market could continue to award Microsoft a high forward P/E, as top-line growth and market share gains take the spotlight. Investors should not focus too much on near-term profitability, or they may end up missing a sustained rally driven by promising revenue growth prospects.

Now, regarding Microsoft’s notably higher forward P/E relative to its 5-year average of just over 29x, consider that this average reflects Microsoft’s growth prospects before ChatGPT. With the potential of generative AI becoming more and more visible, the market is right to re-rate the stock higher, commensurate with the AI opportunities ahead. Hence, the higher valuation multiple is indeed here to stay.

Buying Microsoft at a forward P/E of over 34x may indeed seem dangerous, but sitting on the sidelines waiting for a cheaper valuation could be more painful. Even if the stock sees a correction, drawdowns are likely to be limited and short-lived, as investors quickly snap up the stock on any dips. If you buy Microsoft at the expensive valuation and the stock corrects, don’t sweat it; it’s an opportunity to buy more.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of MSFT either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.