Summary:

- Meta’s Llama AI advancements emphasize an open-source mindset, which CEO Zuckerberg believes will drive innovation and industry standards, similar to Linux’s impact on computing.

- Open-source AI models like Llama 3.1 and Llama 3.2 offer developers flexibility and control, avoiding lock-in with closed vendors, and fostering a broader ecosystem of compatible tools.

- Meta’s collaboration with Nvidia enhances Llama’s performance, making it a cornerstone for enterprise AI applications, with significant cost savings and efficiency improvements.

Derick Hudson

Introduction

Per my April article, Meta’s (NASDAQ:META) AI developments have huge implications. Since that time, we have new information such as a July 23rd Llama 3 research paper, the July 23rd Llama 3.1 letter from CEO Mark Zuckerberg, Nvidia (NVDA) AI Foundry updates from July 23rd, a July 29th SIGGRAPH discussion with CEO Zuckerberg and Nvidia CEO Jensen Huang, Nvidia’s 2Q25 call and Meta’s 3Q24 numbers.

Apple (AAPL) taxes developers and puts restrictions on companies like Meta in terms of what they can build and how they can track advertising. Meta CEO Zuckerberg makes good arguments as to why things can and will shift to a more open ecosystem in the future as AI becomes a bigger part of our lives. My thesis is that people should not underestimate CEO Zuckerberg.

Open Philosophy – Open Ecosystems

I’m not able to buy Kindle books on my iPhone, but I can buy them on the internet from my desktop computer. This is basically because Apple has a closed system for mobile, but the internet when accessed from desktop computers is an open system. Amazon would have to pay a tax to Apple on the closed system, but they don’t have to do this on open systems. There are many ways in which open systems are better for everyone, and I believe CEO Zuckerberg and Meta are on the right track with Llama. A November 5 article from The Verge says the EU has recognized some of the harm from Apple’s closed system, and a penalty is being levied.

Foundation models such as Llama 3.1 form the basis of AI systems. A July 23rd Llama research paper says Meta hopes the open release of a flagship model, Llama 3.1, will accelerate a responsible path towards the development of artificial general intelligence (“AGI”).

CEO Zuckerberg’s July 23rd Llama 3.1 letter explains the way the world is changing with AI, and he believes open-source AI will win out over closed models the way Linux won out over closed-source Unix. One reason is that open-source AI has benefits for everyone. One factor behind AWS (AMZN), rather than Oracle (ORCL), being the biggest cloud company in the world is that AWS didn’t force developers to be locked in with respect to databases. There is a similar lock-in concern with AI models, as developers tell CEO Zuckerberg they need flexibility. He addresses the subject in his letter (emphasis added):

Many organizations don’t want to depend on models they cannot run and control themselves. They don’t want closed model providers to be able to change their model, alter their terms of use, or even stop serving them entirely. They also don’t want to get locked into a single cloud that has exclusive rights to a model. Open source enables a broad ecosystem of companies with compatible toolchains that you can move between easily.

A November 14 Reuters article talks about this, saying the FTC is investigating claims stating Azure (MSFT) has been imposing punitive licensing terms to discourage customers from moving their data to other cloud providers.

CEO Zuckerberg explains in his letter how open-source AI is good for Meta by starting with the obvious: they’re not locked into the closed ecosystem of a competitor with build restrictions. Apple is specifically called out for taxing developers and applying arbitrary rules. He says Llama wouldn’t develop into a full ecosystem of tools, efficiency improvements and silicon optimizations if Meta was the only company using it. Competitors with closed AI models generate revenue by selling access, but Meta has a history of finding other ways to monetize offerings. Additionally, Meta has saved billions in the past with their open-source approach for the Open Compute Project, PyTorch and React. CEO Zuckerberg says AI has more potential than any other tech with respect to human productivity, creativity, quality of life, economic growth and medical/scientific research. He says open-source AI is good for the world relative to closed systems with respect to economic opportunities and security:

It seems most likely that a world of only closed models results in a small number of big companies plus our geopolitical adversaries having access to leading models, while startups, universities, and small businesses miss out on opportunities. Plus, constraining American innovation to closed development increases the chance that we don’t lead at all. Instead, I think our best strategy is to build a robust open ecosystem and have our leading companies work closely with our government and allies to ensure they can best take advantage of the latest advances and achieve a sustainable first-mover advantage over the long term. When you consider the opportunities ahead, remember that most of today’s leading tech companies and scientific research are built on open source software.

Meta isn’t alone in terms of helping developers have success with Llama 3.1. Nvidia is working closely with Meta and taking big steps to build a flourishing ecosystem. Nvidia AI Foundry is an end-to-end platform service for building custom models, and it uses Meta’s Llama 3.1. The bottom row of their diagram shows all 3 hyperscale cloud options: AWS, Google Cloud and Microsoft Azure. Per a July 23rd Nvidia blog post, their Nvidia Inference Microservices (“NIMs”) minimize inference latency and maximize throughput for Llama 3.1 models to generate tokens faster:

The above graph is explained in a July 23rd Forbes article:

Nvidia shows that it can increase performance of models like Llama 3.1 with optimized NIMs. Inferencing solutions like NVIDIA TensorRT-LLM improve efficiency for Llama 3.1 models to minimize latency and maximize throughput, enabling enterprises to generate tokens faster while reducing total cost of running the models in production.

A July 29th SIGGRAPH conversation between CEO Zuckerberg and Nvidia CEO Huang sheds more light on Meta’s open philosophy in general and Llama 3.1 in particular. Nvidia CEO Huang says the excitement for Llama 3.1 is off the charts, as it’s going to enable all kinds of applications. CEO Zuckerberg says Facebook was late relative to other tech companies in terms of building out distributed computing infrastructure. As such, they didn’t have an early mover competitive advantage, and they decided they might as well make it open. They took their server designs, the network designs and over time, the data center designs and published these components on Open Compute. This became an industry standard and the supply chains got organized around it, which saved money for everyone – billions of dollars were saved by making this public and open. CEO Zuckerberg says they’ve also saved money with things like React and PyTorch such that they were leaning towards a similar approach at the time Llama came around.

CEO Zuckerberg explained problems we’ve seen over the years with closed platforms like Apple. Meta apps are shipped through competitors’ mobile platforms. The first version of Facebook was on the web, where the ecosystem was open. As things shifted to mobile, Apple won the smartphone market with respect to profits and set the terms of their closed ecosystem. Before that, Windows was a much more open system – the open market won in the PC generation. He hopes we return to an open ecosystem winner in the next generation of computing. Here are CEO Zuckerberg’s words on the subject at SIGGRAPH (emphasis added):

We basically just want to return the ecosystem to that level where that’s going to be the open one and I’m pretty optimistic that the next generation, the open ones, are going to win. For us specifically, you know I just want to make sure that we have access to, I mean this is sort of selfish but I mean it’s – you know after building this company for a while, one of my things for the next 10 or 15 years is like, I just want to make sure that we can build the fundamental technology that we’re going to be building social experiences on. Because there have just been too many things that I’ve tried to build and then have just been told, nah you can’t really build that, by the platform provider, that at some level I’m just like nah [f-bomb] that for the next generation.

CEO Zuckerberg continues the SIGGRAPH talk by saying Llama 3.1 needs an ecosystem around it, so it wouldn’t work well if it wasn’t open sourced. Additionally, he says silicon will be optimized to run well on it. The SIGGRAPH discussion continues, with CEO Huang saying Nvidia loves Llama 3.1 so much that the current AI Foundry is built on it. The foundry output for the customer is a NIM which the customer can download and then run anywhere, including on premises (emphasis added):

I recognize an important thing and I think that Llama is genuinely important. We built this concept to call an AI Foundry around it so that we can help everybody build. Take, you know a lot of people, they have a desire to build AI and it’s very important for them to own the AI because once they put that into their flywheel, their data flywheel, that’s how their company’s institutional knowledge is encoded and embedded into an AI. So they can’t afford to have the AI flywheel, the data flywheel that experienced flywheel somewhere else. And so open source allows them to do that. But they don’t really know how to turn this whole thing into an AI and so we created this thing called an AI foundry. We provide the tooling. We provide the expertise – Llama technology. We have the ability to help them turn this whole thing into an AI service and then when we’re done with that they take it. They own it.

Again, AWS became the biggest cloud company in the world as they gave customers an ecosystem where being locked in wasn’t a concern. I believe this is one of the reasons why they’re embracing Meta’s Llama open-source foundation model (“FM”). During Meta’s 2Q24 call, CEO Zuckerberg said AWS especially did great work for this Llama release. CEO Zuckerberg went on in the 2Q24 call to point out how Llama doesn’t lock customers in (emphasis added):

I think that that’s one of the advantages of an open source model like Llama is – it’s not like you’re locked into one cloud that offers that model, whether it’s Microsoft with OpenAI or Google with Gemini or whatever it is, you can take this and use it everywhere and we want to encourage that.

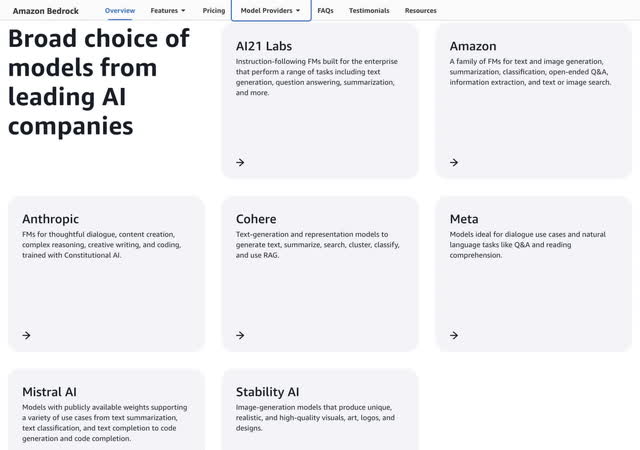

Amazon has repeatedly explained that there are 3 layers of AI, despite the fact that most headlines focus on the top layer when having discussions about breakthroughs like ChatGPT. Amazon Bedrock is a key part of the middle layer, as it allows customers to leverage an existing FM and customize it with their own data. The Bedrock section of Amazon’s website talks about Meta Llama 3.1, saying the models are ideal for dialogue use cases and natural language tasks:

Nvidia CEO Huang talked about working with Meta in the 2Q25 call on August 28 (emphasis added):

During the quarter, we announced a new NVIDIA AI foundry service to supercharge generative AI for the world’s enterprises with Meta’s Llama 3.1 collection of models. This marks a watershed moment for enterprise AI. Companies for the first time can leverage the capabilities of an open source frontier-level model to develop customized AI applications to encode their institutional knowledge into an AI flywheel to automate and accelerate their business. Accenture is the first to adopt the new service to build custom Llama 3.1 models for both its own use and to assist clients seeking to deploy generative AI applications. NVIDIA NIMs accelerate and simplify model deployment. Companies across health care, energy, financial services, retail, transportation, and telecommunications are adopting NIMs, including Aramco, Lowes, and Uber. AT&T realized 70% cost savings and 8x latency reduction after moving into NIMs for generative AI, call transcription and classification.

A VentureBeat article from October 16 says Nvidia dropped the Llama-3.1-Nemotron-70B-Instruct model on Hugging Face, drawing attention for its exceptional performance.

An October 24 post from Qualcomm (QCOM) talks about the way Llama 3.2 is catching on (emphasis added):

Meta’s recent Llama 3.2 1B/3B has been a game-changer and they’re already getting new upgrades. Qualcomm Technologies has teamed up with Meta and Ollama once again to support the new Llama 3.2 quantized models. This collaboration underscores our commitment to pushing the boundaries of what’s possible with on-device AI and increasing the accessibility and effectiveness of the cutting-edge Llama models for a wide range of AI applications.

During the 3Q24 call, CEO Zuckerberg said we’re seeing great momentum with Llama and exponential growth with token usage. As Llama becomes the industry standard, he expects improvements to flow back to all of Meta’s products. He talked about the release of Llama 3.2 during the quarter and his excitement about Llama 4:

This quarter we released Llama 3.2 including the leading small models that run on device and open source multimodal models. We are working with enterprises to make it easier to use and now we’re also working with the public sector to adopt Llama across the US government. The Llama 3 models have been something of an inflection point in the industry but I’m even more excited about Llama 4 which is now well into its development. We’re training the Llama 4 models on a cluster that is bigger than 100,000 H100s or bigger than anything that I’ve seen reported for what others are doing.

CEO Zuckerberg also mentioned in the 3Q24 call that numerous researchers and independent developers do work on Llama because it is open and widely available. Making improvements and publishing their findings, these researchers make it easy for Meta to incorporate findings in their products. Cost savings may be the most important benefit:

I mean, this stuff is obviously very expensive. When someone figures out a way to run this better, if they can run it 20% more effectively, then that will save us a huge amount of money. And that was sort of the experience that we had with Open Compute and part of why we are leaning so much into open source here in the first place is that we found counterintuitively with Open Compute that by publishing and sharing the architectures and designs that we had for our compute, the industry standardized around it a bit more.

Later in the 3Q24 call, CEO Zuckerberg said as Llama gets adopted more, they are seeing folks like Nvidia and AMD optimize their chips more to run Llama specifically well.

A November 4 New York Times article said Meta is already working with companies like Palantir (PLTR) who have defense ties, and they have made Llama available to federal agencies.

A November 7 article from The Economist makes the case for open software and Llama, saying the foundations of the web and Spotify are from open tech:

The benefits of open software are plain to see. It underpins the technology sector as a whole, and powers the devices billions of people use every day. The software foundation of the web, the standards of which were released into the public domain by Tim Berners-Lee from CERN, is open-source; so, too, is the Ogg Vorbis compression algorithm used by Spotify to stream music to millions.

One obvious benefit of an open approach stands out to me, and this is with respect to cost savings. It may be developers outside of Meta who determine how to run Llama more efficiently, and this will save everyone money, including Meta. Another cost-saving consideration with widely used Llama is custom silicon. These cost savings allow Meta to make investments in their products, which will eventually lead to more revenue.

Valuation

AI and Reality Labs pose some risk to Meta’s valuation. I believe there is a good chance some of the investments in the metaverse are still too early and too ambitious. I believe there is a smaller chance the bulk of the AI investments won’t work out, but it is something we should consider. It’s possible AI will end up looking more like the mobile smartphone and less like the open internet, in which case Meta would be losing a substantial amount of capital. Even if AI ends up being somewhat open and Llama does well, it doesn’t mean everything will happen in a straight line, and investments may get ahead of themselves. I saw this in early 2000 when internet companies across the board crashed with respect to valuation. Many like Amazon came back, but others like Cisco (CSCO) languished for decades.

Meta’s valuation has improved as they have gotten much more efficient over the last 2 years. They’re bringing in record amounts of revenue, and they’re doing it with fewer employees. The quarterly revenue per employee for 3Q24 was nearly $561 thousand and 2 years ago it was significantly less, coming in between $317 and $318 thousand:

| Quarter | Headcount | Quarterly revenue per employee | Quarterly revenue in millions |
|---|---|---|---|
| 1Q21 | 60,654 | $431,480 | $26,171 |
| 2Q21 | 63,404 | $458,599 | $29,077 |
| 3Q21 | 68,177 | $425,510 | $29,010 |
| 4Q21 | 71,970 | $467,848 | $33,671 |
| 1Q22 | 77,805 | $358,692 | $27,908 |
| 2Q22 | 83,553 | $344,955 | $28,822 |
| 3Q22 | 87,314 | $317,406 | $27,714 |
| 4Q22 | 86,482 | $371,927 | $32,165 |
| 1Q23 | 77,114 | $371,463 | $28,645 |
| 2Q23 | 71,469 | $447,733 | $31,999 |
| 3Q23 | 66,185 | $515,918 | $34,146 |
| 4Q23 | 67,317 | $595,852 | $40,111 |
| 1Q24 | 69,329 | $525,826 | $36,455 |
| 2Q24 | 70,799 | $551,858 | $39,071 |
| 3Q24 | 72,404 | $560,591 | $40,589 |
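
For readers who want to check the table, the revenue-per-employee column is simply quarterly revenue divided by headcount. Here is a minimal Python sketch using only the 3Q22 and 3Q24 rows; the function name is my own and is only for illustration:

```python
def revenue_per_employee(revenue_millions: int, headcount: int) -> int:
    """Quarterly revenue per employee in dollars, rounded to the nearest dollar."""
    return round(revenue_millions * 1_000_000 / headcount)


# Inputs are the revenue (in millions) and headcount figures from the table.
q3_2022 = revenue_per_employee(27_714, 87_314)
q3_2024 = revenue_per_employee(40_589, 72_404)

print(f"3Q22: ${q3_2022:,}")
print(f"3Q24: ${q3_2024:,}")
print(f"Two-year change: {q3_2024 / q3_2022 - 1:.0%}")
```

The two results match the $317,406 and $560,591 figures in the table, which works out to an increase of roughly 77% over two years.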

Meta is worth the amount of cash which can be pulled out from now until judgment day. One of the reasons I’m optimistic about their valuation is because CEO Zuckerberg is good at shifting focus and figuring out how to make money in new ways. At the July 29th SIGGRAPH event, CEO Zuckerberg remarked to Nvidia CEO Huang that the two of them are grizzled at this point seeing as they are the two longest standing founders in the industry. Nvidia CEO Huang noted how CEO Zuckerberg always seems to know when it is time for his company to pivot the focus, going from desktop Feed to mobile Feed to mobile Reels to VR to AI.

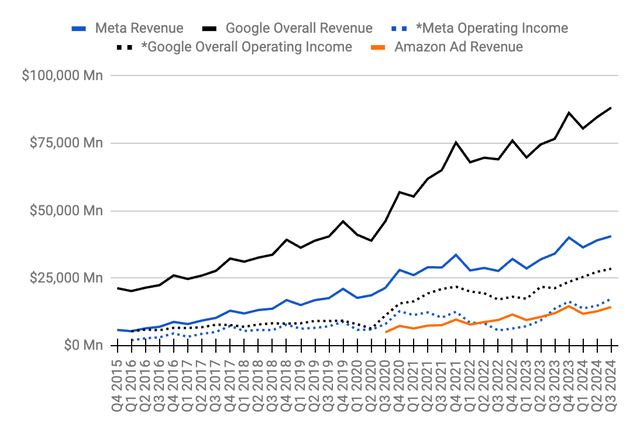

Google (GOOG) (GOOGL), Meta and Amazon (AMZN) are digital ad leaders, and they continue to increase ad operating income and revenue. Note that some of Google’s revenue below comes from sources other than advertising, whereas we only show the ad segment for Amazon. For example, nearly 13% of Google’s overall 3Q24 revenue was from Google Cloud below:

Digital ad revenue (Author’s spreadsheet)

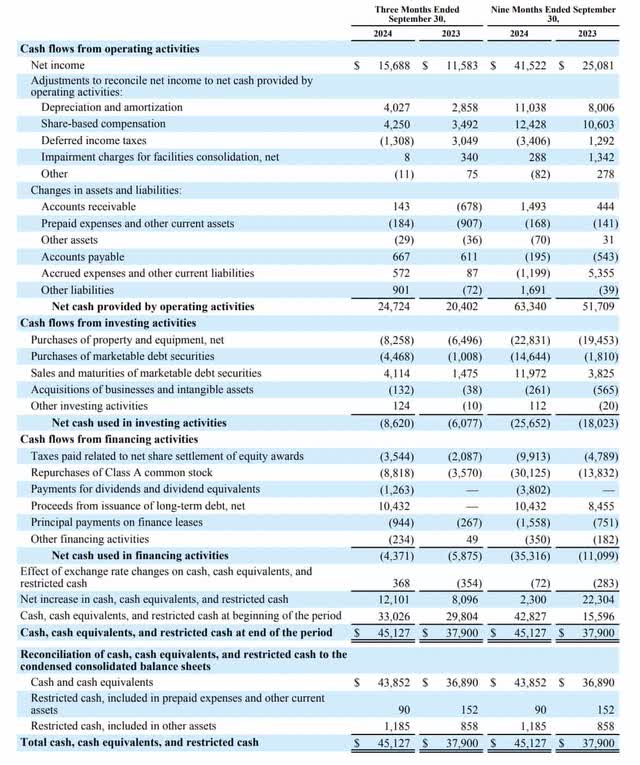

At the time of my April article, I thought Meta was worth about 24 to 26 times trailing twelve months (“TTM”) free cash flow (“FCF”). I’m a bit more optimistic now because the pathway for growth is clearer. 9M24 net income of $41,522 million was nearly 66% higher than 9M23 net income of $25,081 million which was 35% higher than 9M22 net income of $18,547 million:

Looking at the 3Q24 slides, overall TTM FCF is $50.5 billion or $11,505 million + $12,531 million + $10,898 million + $15,522 million. Much of the capex is growth capex but some of it is offset by share-based compensation. The TTM operating income from the Family of Apps is $79.8 billion or $21,030 million + $17,664 million + $19,335 million + $21,778 million. Overall TTM operating income is $62.4 billion or $16,384 million + $13,818 million + $14,847 million + $17,350 million. The operating income difference is because of $17.4 billion in TTM operating losses from Reality Labs.
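
The trailing-twelve-month totals above are four-quarter sums, and the Reality Labs figure is the gap between the two operating income totals. A short Python sketch of that arithmetic, with variable names that are my own:

```python
# Quarterly figures in millions of dollars, as listed in the paragraph above.
fcf = [11_505, 12_531, 10_898, 15_522]
family_of_apps_operating_income = [21_030, 17_664, 19_335, 21_778]
overall_operating_income = [16_384, 13_818, 14_847, 17_350]

ttm_fcf = sum(fcf)
ttm_family_of_apps = sum(family_of_apps_operating_income)
ttm_overall = sum(overall_operating_income)

# Reality Labs operating loss implied by the difference between the two totals.
implied_reality_labs_loss = ttm_family_of_apps - ttm_overall

for label, value in [
    ("TTM FCF", ttm_fcf),
    ("TTM Family of Apps operating income", ttm_family_of_apps),
    ("TTM overall operating income", ttm_overall),
    ("Implied TTM Reality Labs operating loss", implied_reality_labs_loss),
]:
    print(f"{label}: ${value / 1000:.1f} billion")
```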

I now believe Meta could be worth up to 23 to 25x TTM operating income implying a range of $1,435 to $1,560 billion when rounded to the nearest $5 billion.
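
The range above can be reproduced by applying the two multiples to overall TTM operating income and rounding to the nearest $5 billion. A minimal sketch, assuming the $62,399 million four-quarter sum from the prior paragraph as the input; the helper name is hypothetical:

```python
def round_to_nearest(value: float, step: int) -> int:
    """Round value to the nearest multiple of step."""
    return round(value / step) * step


# Overall TTM operating income in billions (sum of the four quarters cited earlier).
ttm_operating_income_billions = 62_399 / 1000

low = round_to_nearest(23 * ttm_operating_income_billions, 5)
high = round_to_nearest(25 * ttm_operating_income_billions, 5)

print(f"Valuation range: ${low:,} to ${high:,} billion")
```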

Per the 3Q24 10-Q, 2,180,000,871 A shares + 344,487,662 B shares gives us a total of 2,524,488,533 as of October 25, 2024. Multiplying by the November 12 share price of $584.82 gives us a market cap of $1,476 billion. The market cap is inside my valuation range so I mainly think the stock is a hold, but I’ve been doing some trimming.
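
The market cap math above is share count times price, which can then be compared with the valuation range. A quick Python sketch using only the figures in this section; the variable names are mine:

```python
# Share counts from the 3Q24 10-Q as cited above (as of October 25, 2024).
class_a_shares = 2_180_000_871
class_b_shares = 344_487_662
total_shares = class_a_shares + class_b_shares

share_price = 584.82  # November 12 share price cited above
market_cap_billions = total_shares * share_price / 1e9

# Valuation range in billions, taken from the article's 23x to 25x estimate.
range_low, range_high = 1_435, 1_560
inside_range = range_low <= market_cap_billions <= range_high

print(f"Total shares: {total_shares:,}")
print(f"Market cap: ${market_cap_billions:,.0f} billion")
print(f"Inside valuation range: {inside_range}")
```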

Forward-looking investors should look for Llama updates when Nvidia comes out with their 3Q25 numbers on November 20.

Disclaimer: Any material in this article should not be relied on as a formal investment recommendation. Never buy a stock without doing your own thorough research.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of META, AAPL, AMZN, GOOG, GOOGL, PDD, VOO either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
