Summary:

- Microsoft’s advancements in error correction for quantum computing position it ahead of the competition.

- The new Qubit-Virtualization code significantly improves qubit fidelity, creating the most reliable logical qubits ever, far surpassing current industry standards.

- Azure Quantum’s integration of these advancements could establish Microsoft as the dominant quantum computing platform, similar to its historical success with MS-DOS.

- Despite the progress, scaling and further improvements in qubit fidelity are necessary to fully realize the potential of quantum computing.

In this article, I will consider recently published research suggesting that Microsoft Corporation (NASDAQ:MSFT) has made a leap forward in error correction. That leap puts clear blue water between Microsoft and IBM, Google, and the rest of the quantum computing field, and suggests we may be approaching Microsoft’s most profitable years to date.

As the quantum industry progresses, themes are beginning to emerge: companies and governments are investing in the sector, and astonishing advances are being made.

D-Wave (QBTS) has achieved quantum advantage with its annealing machine for optimization problems. Quantinuum managed to teleport a qubit (in real life, not on Star Trek), and Colorado has invested $40 million in a Quantum Park. Google has begun building out its quantum offering with a new simulation kit from Keysight. Keysight and Google have been working together since 2021. IQM, the European quantum company, has manufactured 30 quantum computers as it moves towards scale manufacturing.

A Quantum Universal Gate computer, a quantum computer that can solve all types of problems, remains elusive and constrained by scale and error correction issues. Microsoft’s new research takes us one step closer to this goal.

This is my second article on Microsoft Corporation’s (MSFT) quantum computing efforts and my eighth on quantum computing companies. My first Microsoft article looked at Microsoft’s research into topological qubits using Majorana Zero Modes. This ethereal particle might exist and might be the next generation of qubits, perhaps hundreds of times more resilient than those developed today.

The Quantum Possibility

Many quantum algorithms have been written to do everything from designing new batteries to discovering new medicines. When writing about D-Wave (QBTS), I interviewed the CEO of Zapata (ZPTA); he discussed solutions the company had, from solving network problems for telecom companies to designing new drugs. He said that, many times, the holdup in running his solutions was the hardware; computers don’t yet exist that can run the algorithms.

An algorithm is like a flow chart of operations that must be performed to get from a starting point to an endpoint. In quantum programming, which rests on the linear algebra you might have studied at college, each operation amounts to multiplying matrices together (a matrix is an array of numbers). The more complicated the problem, the bigger the matrices, and today’s computers cannot multiply very large matrices in any acceptable amount of time.
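To make the matrix idea concrete, here is a minimal sketch in plain Python. It is an illustration only, not how any vendor's toolkit works: the helper names (`matmul`, `apply`, `matrix_dimension`) are my own, and the only quantum facts assumed are that the NOT gate on one qubit is the 2 x 2 matrix shown and that an operation on n qubits is described by a matrix with 2^n rows.

```python
# Illustrative only: quantum operations ("gates") written as small matrices.

def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def apply(gate, state):
    """Apply a gate (matrix) to a state (vector)."""
    return [sum(gate[i][k] * state[k] for k in range(len(state)))
            for i in range(len(gate))]

def matrix_dimension(num_qubits):
    """Number of rows in the matrix describing an operation on num_qubits qubits."""
    return 2 ** num_qubits

X = [[0, 1], [1, 0]]   # the quantum NOT gate on one qubit
ZERO = [1, 0]          # a qubit in the state "0"

print(apply(X, ZERO))         # the qubit flipped to the state "1"
print(matmul(X, X))           # two NOT gates in a row cancel each other out
print(matrix_dimension(50))   # rows needed for an operation on 50 qubits
```

The last line is the point: the matrix doubles in size with every extra qubit, which is why classical machines run out of road so quickly.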

One of the first, and probably the most famous, algorithms that would require a quantum computer to run is Shor’s algorithm. I will use Shor’s algorithm as an example because the problem is easy to understand and it shows the difference between what can be achieved today using classical computers and what could be achieved tomorrow with quantum computers.

Shor’s algorithm is about prime factorization. It takes a number and writes it as a product of primes, a multiplication sum of prime numbers. For small numbers this is easy: 15 = 5 x 3, 132 = 2 x 2 x 3 x 11. For very large numbers, it is extremely difficult. It is so difficult that RSA Security LLC offered a $200,000 reward to anyone who could work out the prime factorization of a number known as RSA-2048. The name refers to its length of 2,048 binary digits; written in decimal, it has 617 digits.

If you are interested, here is the number:

25195908475657893494027183240048398571429282126204032027777137836043662020707595556264018525880784406918290641249515082189298559149176184502808489120072844992687392807287776735971418347270261896375014971824691165077613379859095700097330459748808428401797429100642458691817195118746121515172654632282216869987549182422433637259085141865462043576798423387184774447920739934236584823824281198163815010674810451660377306056201619676256133844143603833904414952634432190114657544454178424020924616515723350778707749817125772467962926386356373289912154831438167899885040445364023527381951378636564391212010397122822120720357.

If we were to use the most powerful supercomputer available today and the best factoring algorithms we have, it would take more electrical power than the Earth has ever produced and take an amount of time greater than the universe has been in existence to find the answer.
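The small examples given earlier (15 and 132) can be reproduced with the simplest classical method, trial division. This is a sketch for illustration only: it is not one of the advanced factoring algorithms a supercomputer would use, and the function name `prime_factors` is my own. It works instantly on small numbers and is hopeless on a 617-digit one, which is the whole problem.

```python
def prime_factors(n):
    """Return the prime factors of n (with repeats) using trial division."""
    factors = []
    divisor = 2
    while divisor * divisor <= n:
        # Divide out this divisor as many times as it goes in.
        while n % divisor == 0:
            factors.append(divisor)
            n //= divisor
        divisor += 1
    if n > 1:
        # Whatever is left over is itself prime.
        factors.append(n)
    return factors

print(prime_factors(15))
print(prime_factors(132))
```

The loop may need to try divisors up to the square root of the number, so every extra pair of digits multiplies the work by roughly ten.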

The time a quantum computer would take to solve this problem depends on the number of reliable qubits it has available. Still, with 4,000 error-corrected qubits, it would take around 23 hours and use an amount of energy similar to a regular laptop.

The difference is staggering and explains the worldwide rush to develop quantum computers.

I covered the mathematics behind quantum in my previous article on Microsoft’s quantum research and here I am going to focus on the progress they have made and explain the gap developing between Microsoft and the rest on the road to true quantum computing.

The Quantum Problem

The quantum industry faces two challenges: error correction and scaling. Manufacturers have to make computers with lots of qubits, and those qubits have to give the right answer. This is proving far more difficult than expected, but Microsoft has recently announced some groundbreaking results suggesting it is getting very close to the holy grail of a large computer good enough to run Shor’s algorithm.

Scaling is a function of the type of qubit each manufacturer chooses to work with. International Business Machines (IBM), Alphabet (GOOG), Rigetti (RGTI) and many others are working with superconducting qubits. The scaling issue here is an engineering one: they need to link the small loops of superconducting wire that constitute a superconducting qubit with other loops using more superconducting wire.

IONQ (IONQ) and Quantinuum use trapped ions; here, atoms have an electron removed, which turns them into ions. Each ion is held in a small well. Unfortunately, all the ions have the same charge and repel each other, leading to a scaling problem that is very difficult to solve. IONQ is trying to solve it by entangling its qubits with photons and using the photons to link one string of ions with another. Quantinuum developed a racetrack architecture where it moves qubits in and out of a holding area. I covered these scaling issues in my article on IONQ (Don’t buy the wrong Qubit Technology), suggesting that neutral atom qubits, those without an electron removed, might be the easiest to scale.

Atom Computing, QuEra and Pasqal are leading the research into neutral atom qubits; these seem to scale easily but have more error problems.

Microsoft is pursuing its own path, concentrating on topological qubits, which have many potential advantages (please see my earlier article for a complete discussion).

Error Correction

All qubits, regardless of their type, are inherently unreliable things. They interact with each other and with everything else. Every time they interact, they change their value, which causes errors in calculations; they even change their value when you measure them and, often, for no reason we understand.

Quantum manufacturers use the term fidelity to describe how resilient their qubits are to error, and they quote seemingly impressive figures for it. MIT quoted 99.9% fidelity in 2023, and only last week IONQ (IONQ) announced it had reached the same milestone. It sounds impressive, and it does represent real engineering and scientific progress, as problems many thought intractable are being solved. Still, it is one of those areas where our natural understanding of large numbers can let us down and lead us to assume things are better than they are.

If the fidelity of a qubit is 99.9%, then the chance of an error on any one operation is 0.1%. If we ran 1,000 operations, representing a small algorithm, the probability of getting the correct answer would be 0.999^1000, or about 37%. So even with 99.9% fidelity, the computer delivers the wrong answer 63% of the time due to qubit errors. Shor’s algorithm requires millions of operations per second; over a million operations, the chance of getting the right answer with 99.9% fidelity is as close to zero as makes no difference (about 3 x 10^-435, a decimal point followed by more than four hundred zeros before the first non-zero digit).
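The arithmetic above is easy to check. The sketch below assumes, as the paragraph does, that every operation succeeds independently with the same probability; real devices are messier, and the function names are my own. For a million operations the result is too small for ordinary floating-point numbers, so the second function works with the base-10 logarithm instead.

```python
import math

def success_probability(fidelity, operations):
    """Chance that every one of the operations succeeds (independent errors assumed)."""
    return fidelity ** operations

def log10_success_probability(fidelity, operations):
    """Base-10 logarithm of the same chance, usable when the value itself underflows."""
    return operations * math.log10(fidelity)

# A small algorithm of 1,000 operations at 99.9% fidelity.
print(success_probability(0.999, 1_000))

# A million operations: print the power of ten rather than the value itself.
print(log10_success_probability(0.999, 1_000_000))
```

The second figure is the exponent, so a result near -434.5 means a probability of roughly 3 x 10^-435.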

The search for a solution to this error problem began with Shor, as most things quantum do, and his Shor Code. It was the first development beyond just doing the calculation multiple times and checking the answer. Shor’s 9-Qubit code was one of his most significant discoveries and the basis for all error correction work that has followed.

Shor’s Code links together nine qubits and makes them act together as if they were one qubit; most of the nine qubits are working on error detection and correction and as a unit they deliver the right answer. The nine qubits are known as one logical qubit. The original breakthrough was improved by others to make a 7-qubit code and then a 5-qubit code.
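The idea of several unreliable units acting as one reliable unit can be illustrated with a classical analogy. Shor's nine-qubit code is built from three-qubit repetition blocks, and the sketch below shows only the classical cousin of one such block: three copies of a bit and a majority vote. It is a deliberate simplification under stated assumptions (real qubits cannot be copied or read directly, errors are treated as independent, and the function names are mine), not an implementation of Shor's code.

```python
def encode(bit):
    """Store one logical bit as three physical copies."""
    return [bit, bit, bit]

def decode(bits):
    """Recover the logical bit by majority vote over the three copies."""
    return 1 if sum(bits) >= 2 else 0

def logical_error_rate(p):
    """Chance the majority vote is wrong when each copy flips independently with probability p."""
    # The vote fails if exactly two copies flip, or if all three flip.
    return 3 * p**2 * (1 - p) + p**3

print(decode([1, 0, 1]))            # one flipped copy is outvoted
print(logical_error_rate(0.001))    # far smaller than the 0.001 physical error rate
```

The trade is the one described above: three times the hardware in exchange for an error rate that falls from p to roughly 3p^2.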

The problem is Shor’s method does not scale beyond a small number of logical qubits, but it led directly to Surface Code which has been the dominant error-correcting code for many years.

Surface Code

There are two parts to surface code: a geometric design for positioning physical qubits to make logical qubits and a set of operations that are continually carried out on the qubits to detect and correct errors.

As long as the qubits have a fidelity over 99.9%, surface code forms an error-protecting coating over the qubits. There are many surface codes, and they belong to the family generally referred to as LDPC codes; they have been well studied and seem robust. The problem is scale: as the number of logical qubits goes up, the number of physical qubits increases almost exponentially. A working quantum computer would likely need on the order of 20 million qubits to run using surface code, and as the leading hardware manufacturers have not yet managed to get very far past a thousand, it is unlikely to be a final solution.

International Business Machines (IBM) published a paper describing a much-improved version of surface code. Their new code delivered a tenfold reduction in the number of qubits needed, down from 20 million to 2 million, but it requires qubit fidelity of 99.999% to work effectively. Commonly known as five nines, this will require a jump in qubit manufacturing, as we have only just got to three nines.

Microsoft and Software error correction

Recently published work from Microsoft points to a significant leap forward, suggesting that Microsoft is accelerating away from the rest of the quantum industry and its big competitors, such as IBM and Google.

Microsoft has the Most Reliable Logical Qubits ever produced.

In April 2024, MSFT released an update on its ongoing partnership with Quantinuum, a private quantum hardware manufacturer, showing that they had produced the most reliable logical qubit the world has seen.

The code, dubbed Qubit-Virtualization (QV), used 30 Quantinuum trapped-ion qubits with a fidelity of 99.8% and ran 14,000 operations without a single error. The QV code created 4 logical qubits from 30 physical qubits (the quantum machine in question has 32 qubits available). The entangled logical qubits had a fidelity of 99.99999%, or seven nines, a huge jump that puts them far ahead of the rest of the field. That is an improvement of x800.

Earlier this month, MSFT released the latest results from this collaboration with Quantinuum. The QV code had been improved and Quantinuum made more qubits available. In this new piece of research, 12 logical qubits were created, and the fidelity dropped from seven to six nines. The qubits still had a base fidelity of 99.8%. Importantly, the number of physical qubits grew in line with the number of logical qubits; there was no exponential growth of the kind required by the original surface code or, to a lesser extent, IBM’s latest improvement.

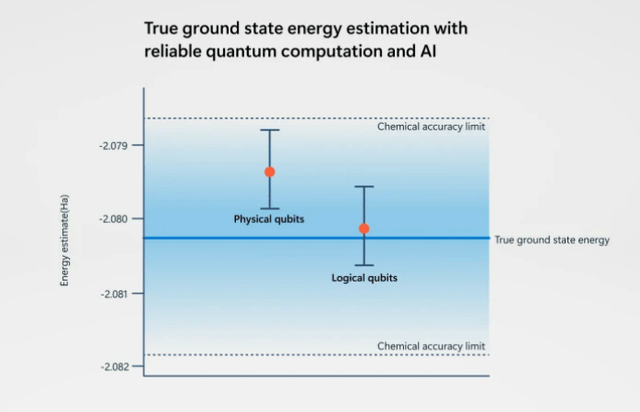

The second result has greater significance because 12 logical qubits with the stated fidelity are good enough to try solving some actual problems. MSFT used its end-to-end hybrid system, combining AI, high-performance computing, Quantinuum’s computer and the new QV code, to estimate the ground state of an active catalyst. The results show the best achieved by physical qubits, the best that can be achieved by chemical work, the results from the new logical qubits and the actual true state.

Quantinuum/Microsoft Results (Microsoft)

Neutral Atom Qubits

In my recent article on IONQ, I discussed the various types of qubits currently being researched and expressed my belief that neutral atom qubits are likely the best bet we have because of the ease with which they can be scaled with all-to-all connectivity.

IONQ contacted me about several points they wished to raise following my article (I covered them in a pinned comment to that article). One of the issues they raised is that they believe neutral atoms will be noisy and unable to meet the fidelity of trapped ion qubits. There is some truth in this; currently, the fidelity of neutral atom qubits is struggling to get above 99.5%, well below what is needed. Scaling is the other side of the problem: IONQ hopes for 64 qubits in 2025, whereas Atom is already over 1,000 and is aiming for 10,000 next year.

Atom Computing is a leader in neutral atom qubits. Their H2 machine has 1,200 qubits and they increase the number of qubits by a factor of 10 with each upgrade. Atom has plans for 10K qubits using the current architecture and 100K qubits with the H3 architecture.

Atom uses lasers to move neutral atoms around its system. To make the qubits entangle (link with each other), it excites them into a Rydberg state, where the electrons orbit the nucleus at greater distances, enabling them to connect with their nearest neighbors. IONQ believes the Rydberg state is where errors will occur.

The latest paper from Atom shows they are improving fidelity; they have moved to 99.7% on CZ gates and 99.9% on RZ gates (these are the two types of operations that need to be performed). This is still quite a bit behind IONQ but is a significant improvement from the previously published results below 99.5%.

Atom Computing and Microsoft

Atom and Microsoft have shown that the MSFT QV error correction code works on Atom’s H2 quantum device. From the linked press release:

Together, Microsoft and Atom Computing have created logical qubits and are optimizing their systems to enable reliable quantum computation.

Using the information from the MSFT/Quantinuum work, it appears that the MSFT code needs around 7 or 8 qubits for each logical qubit. Atom currently has 1,200 qubits implying around 150 logical qubits. The next generation of Atom machines with 100,000 qubits could produce 12,500 logical qubits.
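The estimate above is simple division, sketched below. The inputs are the figures quoted in this article (30 physical qubits for 4 logical qubits, 1,200 and 100,000 physical qubits); the big assumption, flagged in the code, is that the overhead per logical qubit stays the same on different hardware and at much larger scale, which has not been demonstrated. The function names are my own.

```python
def physical_per_logical(physical_qubits, logical_qubits):
    """Physical qubits consumed per logical qubit in a given experiment."""
    return physical_qubits / logical_qubits

def logical_capacity(total_physical_qubits, overhead):
    """Whole logical qubits a machine could host, ASSUMING constant overhead."""
    return int(total_physical_qubits // overhead)

# Overhead implied by the Quantinuum experiment: 30 physical for 4 logical.
print(physical_per_logical(30, 4))

# Rounding the overhead up to 8, as the estimate in the text does.
print(logical_capacity(1_200, 8))      # current Atom machine
print(logical_capacity(100_000, 8))    # planned next-generation machine
```

Using the unrounded overhead of 7.5 instead of 8 nudges the current-machine figure up slightly, so treat these as ballpark numbers.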

At the beginning of this article, I discussed Shor’s algorithm for the prime factorization of large numbers. Factorizing a 600-digit number, a problem that would take all of the energy the world has ever produced and as much time as the universe has been in existence, would take 4 hours on a 12,500 logical qubit machine.

Beware: our minds’ understanding of numbers lets us down when we get to large numbers. The Microsoft error correction code improves the fidelity of the physical qubit by x800. Atom will have to significantly improve the fidelity of its qubits, by orders of magnitude, perhaps x1000, for the combination of Atom and Microsoft to deliver a reliable quantum computer. So, we are still talking about a potential future some way down the road.

Microsoft Quantum Azure

In Azure, MSFT describes three levels of quantum computing: Foundational, Resilient and Scale. Foundational is where we are now, with noisy physical qubits. MSFT classes all current quantum computers as foundational but has brought many of these machines to its Azure platform for experimentation, allowing customers to prepare for the next level. It has machines from IONQ, Pasqal, Quantinuum, QCI and Rigetti available.

The second stage will be the arrival of stable logical qubits that can remain error-free for the duration of the calculation. The longer a logical qubit is stable the bigger and more complex the problems it can solve. The new error-correcting code from Microsoft moves us towards this second stage and I look forward to more information from Microsoft which may suggest stage two has been reached.

The final stage would be scale: when the computer has a large number of reliable logical qubits, it can begin to solve some of the most intractable problems available.

As Microsoft makes developments, it adds them to Quantum Azure for customers to explore, which will constantly attract new customers to the Quantum Azure ecosystem. At the moment, Azure is the most advanced quantum computing platform available. Its end-to-end hybrid of supercomputing, AI and quantum is, in my view, the best available by a considerable margin; it is probably the only one available with a wide scope and definitely the only one on general release.

The logical qubits developed on Quantinuum’s machine and used to solve the ground state energy problem, plus the computational chemistry package that utilized the end-to-end platform, have been added to the quantum toolkit and are available via Microsoft’s private preview Quantum Elements program.

The private preview of Quantum Elements is designed to advance the take-up of Azure Quantum. It uses Copilot AI to ease the learning curve and develop quantum algorithms for a customer’s needs. It boasts a reduction in the time taken to get projects started and the opportunity to use tens of millions of materials in AI-based computations, speeding up chemistry simulations by as much as 500,000 times.

Microsoft Azure and Share Price

The thesis of this article is that Microsoft is building a competitive advantage in quantum computing that will deliver technological superiority to its Azure cloud division, helping Azure to outpace the competition and capture market share in a growing market.

The market may see Azure as a proxy measurement, reflecting how Microsoft is faring in its AI battle with the other large scale tech companies.

The performance of Azure has become critical to the performance of Microsoft shares. In the last earnings report, Microsoft delivered a beat on both top and bottom lines, yet the share price fell by an immediate 3% when Azure reported disappointing growth rates. It led to a sell-off, bringing shares down from the all-time high of $469 to below $390 a month later.

Microsoft is led by Satya Nadella, who became CEO in 2014; before that he was in the Azure division. Since 2014 the stock is up 1,000%. I consider the largest competitor to Azure to be Amazon’s (AMZN) AWS offering. It was first to arrive in the space, and there are immense costs in moving a large business from one web stack to another. Taking market share from Amazon seemed to be an impossible task, but Microsoft does seem to be doing just that.

Wells Fargo believes that the market underestimated Azure’s growth because Microsoft does not provide apples-to-apples figures for comparison to AWS. Wells Fargo suggests Azure has revenue of $62 billion against the $105 billion of AWS; Azure now has 85% of the Fortune 500 companies using it and is estimated to have grown its market share from 26% to 31%.

Wells Fargo forecasts revenue of $200 billion for Azure by 2030, a growth of over 450%. Quantum Azure is developing into a significant competitive advantage for Microsoft and offers the possibility of taking an increasing market share from AWS and other large tech companies. If Microsoft can maintain its lead in quantum computing, delivering the world’s first error-corrected scaled machine, then it will likely attract a growing share of the market to its Azure Quantum and consequently to Azure as a whole.

The quantum industry is forecast to grow quickly over the coming years. However, the industry is still in its infancy and has yet to prove it can deliver the error-corrected machines needed to make useful devices, so we must tread a little carefully. Fortune forecasts a CAGR of 34% and the market reaching $12 billion by 2032. Markets and Markets forecasts a CAGR of 32.7%. There are many others; taking 30% CAGR as a rough average and a 2024 industry size of $1.4 billion (from Markets and Markets) would imply a market size of about $19.3 billion in 10 years and about $266 billion in 20 years.
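The compounding behind a projection like this can be checked in a few lines. The sketch below uses the rough inputs from the paragraph above ($1.4 billion starting size, 30% CAGR); the function name is my own and the inputs are estimates, not data. Note that 30% a year multiplies the starting figure by about 13.8 over 10 years and by about 190 over 20 years; the projected market size is that multiplier times the starting size.

```python
def project_market_size(start_billion, cagr, years):
    """Compound a starting market size at a constant annual growth rate."""
    return start_billion * (1 + cagr) ** years

START = 1.4   # 2024 industry size in $ billions, as quoted in the text
CAGR = 0.30   # rough average of the published forecasts

for years in (10, 20):
    multiplier = (1 + CAGR) ** years
    size = project_market_size(START, CAGR, years)
    print(f"{years} years: x{multiplier:.1f} growth, about ${size:.1f} billion")
```

A constant growth rate over 20 years is a strong assumption, so the longer-dated figure in particular should be read as an order-of-magnitude guide.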

The addition of Quantum to Azure might offer Microsoft a sustainable advantage, the like of which we have seen before. In 1981, Microsoft bought QDOS (Quick and Dirty Operating System) for $50,000, adapted it and renamed it MS-DOS. For almost 30 years MS-DOS was the basis for almost all operating systems, delivering Microsoft a near monopoly and making Microsoft into the company it is today.

There are parallels with quantum computing today. Microsoft is building software for Noisy (not Dirty) quantum computers that makes them useful today, and it is putting that software into the Azure cloud in the same way it put MS-DOS into IBM machines, perhaps developing the dominant quantum computing platform for the next 30 years.

At the moment, Microsoft seems to be ahead of the competition in hardware research, software integration and customer acquisition.

Risks

Perhaps the biggest risk is what we don’t know. We have published research from IBM to compare with but little from META, Amazon or Oracle and nothing from the Chinese tech companies. We know Microsoft is ahead on what has been published but we have no idea of the gains that may have been achieved but kept private.

Another issue is that all of this remains theoretical. Microsoft has made a big step forward, but we will need many more steps before the success of quantum can be assured. We will need to see improvements in qubit fidelity to allow error correction to work over millions of operations per second, and then it all needs to be scaled.

Microsoft has proven it has the software to create the most accurate logical qubits the world has ever seen but has not yet proven it can scale quantum computers large enough to make a meaningful difference or develop qubits with sufficient fidelity to make the most of its logical qubit software.

Microsoft’s work on topological qubits is elegant and offers the promise of the highest fidelity physical qubits we have ever seen, but we cannot be absolutely certain they even exist.

Conclusion

Mathematical algorithms have already been written that could solve some of the world’s most intractable problems if only they had a reliable quantum computer to run on. Based on the research that has been published, Microsoft is currently ahead in the race to develop these machines.

Microsoft’s work on topological qubits still seems to have the potential to develop the highest fidelity qubits possible, and if and when they arrive, they will be added to quantum Azure, cementing Microsoft as the world leader for perhaps another 30 years.

The newest research and the basis for this article is the step forward Microsoft has made with error-correcting code that links physical qubits together to produce the most accurate logical qubits we have seen.

This is an exciting time in computing, and Microsoft may be developing a quantum advantage as great as the digital advantage it had when it released MS-DOS.

I will continue to update as time goes on and hold onto my Microsoft position. I am not adding at this point, as the holding is already over the maximum my rules allow. However, if I had room I would buy, and I reiterate my Strong Buy recommendation.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of MSFT either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
