Summary:

- Nvidia Corporation’s revenue and net income skyrocketed in fiscal year 2024, driven by their transition from a video game company to an AI company.

- CUDA, Nvidia’s programming interface, gives them a significant advantage in accelerated computing and has created a large community of AI programmers.

- Nvidia’s focus on being a platform company, rather than just a chip company, sets them apart from competitors and positions them for continued growth in the AI industry.


Introduction

A December 2023 article in The New Yorker does a thorough job introducing Nvidia Corporation (NASDAQ:NVDA) to readers. It explains how Ian Buck, CUDA’s inventor and VP of Nvidia’s Accelerated Computing business, chained 32 GeForce cards together back in 2000 to play Quake across 8 projectors. CEO Jensen Huang gave a useful overview of Nvidia in a March 2023 CNBC interview. Nvidia pioneered accelerated computing about three decades ago, and they define it as the use of specialized hardware such as GPUs to speed up work through parallel processing. Until Nvidia’s contributions, it was widely believed that software running on general-purpose central processing units (“CPUs”) was best for almost everything.

For many years Nvidia was known mainly for computer graphics in video games, but the ambition was to be a computing platform company. They always believed accelerated computing would impact many industries. Expansion from video games into design preceded the extension into scientific computing and physical simulation. Eventually, AI found Nvidia because of the enormous benefits of accelerated computing. Now Nvidia is the world’s engine for AI. CEO Jensen Huang says the AI industry is at a watershed event: its iPhone moment.

Nvidia’s fiscal year 2024 ended on January 28, 2024. Fiscal 2024 was an inflection point, as their reinvention from a video game company to an AI company came to fruition. Net income skyrocketed over 750%, from $1.4 billion on revenue of $6.1 billion in fiscal 4Q23 to $12.3 billion on revenue of $22.1 billion in fiscal 4Q24.
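The growth figure can be checked with simple arithmetic. This is a minimal sketch that uses only the rounded figures quoted above (in billions of dollars), so its results will differ slightly from calculations based on the unrounded reported numbers.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100

# Rounded figures from the paragraph above, in billions of US dollars.
net_income_q4_fy23, revenue_q4_fy23 = 1.4, 6.1
net_income_q4_fy24, revenue_q4_fy24 = 12.3, 22.1

ni_growth = pct_change(net_income_q4_fy23, net_income_q4_fy24)
rev_growth = pct_change(revenue_q4_fy23, revenue_q4_fy24)

print(f"Net income growth: {ni_growth:.0f}%")  # Net income growth: 779%
print(f"Revenue growth: {rev_growth:.0f}%")    # Revenue growth: 262%
```

Net income grew roughly three times faster than revenue in percentage terms, which is why the margin expansion matters as much as the top line.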

My thesis is that Nvidia has a bright future with accelerated computing. This thesis is about Nvidia the business, which isn’t necessarily the same as Nvidia the stock; the stock might be ahead of itself, with optimistic expectations priced in. Forward-looking investors should consider getting familiar with the business even if they think the stock is overpriced right now. The stock is volatile, and buying opportunities may arise in the quarters ahead for those who understand the company and have a price in mind for what it is worth.

CUDA And Platform Dominance

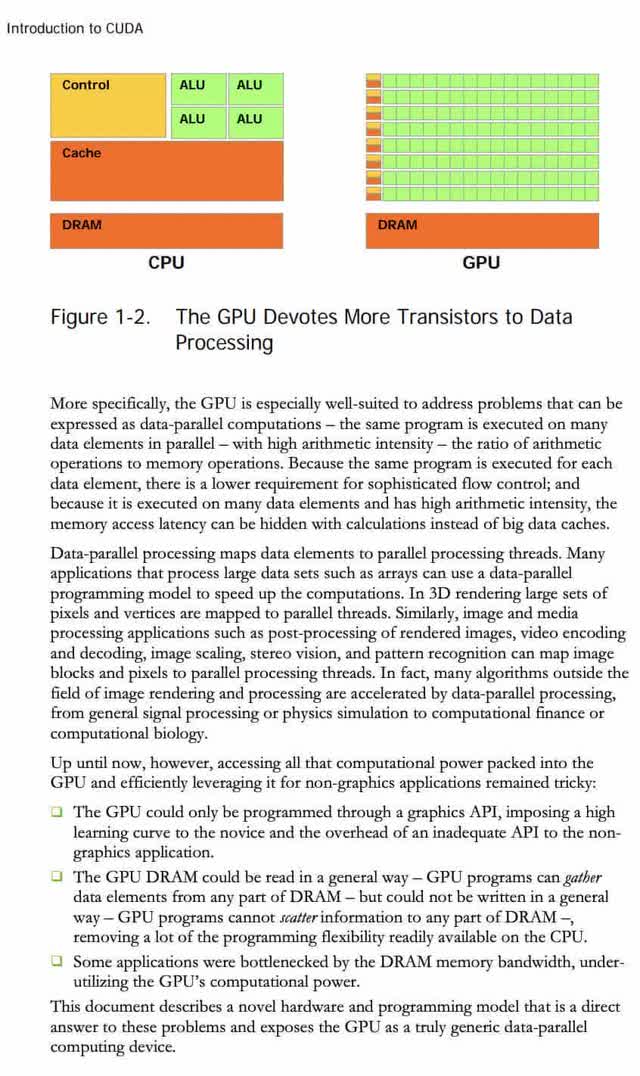

Compute Unified Device Architecture (“CUDA”) is the most salient reason Nvidia should keep a leg up on competitors in accelerated computing for years to come. CUDA is the ubiquitous gateway for accessing the full computational power of the GPU and leveraging it beyond graphics applications. Per a programming guide from way back in 2007, the goal of the CUDA programming interface is to give users familiar with the C programming language an easy path to writing programs that execute on the graphics processing unit (“GPU”). Engineers take their C programs, identify the data-parallel parts, and compile those parts to run on the GPU. Again, CPUs have powered computing for decades, but GPUs, which are specialized for compute-intensive, highly parallel computation, gained popularity for graphics and video games years ago. Nvidia’s 2007 CUDA programming guide shows how GPUs devote more transistors to data processing than CPUs:

CUDA (Nvidia’s 2007 CUDA programming guide)
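To make the “identify the data-parallel parts” idea concrete, here is a rough Python emulation of the CUDA execution model; it is not real CUDA code. In CUDA C, a kernel is a function marked `__global__` that runs once per thread, and each thread typically computes its global index as `blockIdx.x * blockDim.x + threadIdx.x`. The `launch` helper below is hypothetical: it stands in for CUDA’s `kernel<<<grid, block>>>(...)` launch syntax by looping over every block and thread one at a time, whereas a GPU would run those threads in parallel.

```python
from typing import Callable, List

def vector_add_kernel(block_idx: int, block_dim: int, thread_idx: int,
                      a: List[float], b: List[float], out: List[float]) -> None:
    # Each "thread" handles exactly one element, mirroring the CUDA idiom
    # i = blockIdx.x * blockDim.x + threadIdx.x.
    i = block_idx * block_dim + thread_idx
    if i < len(out):  # guard: the last block may contain spare threads
        out[i] = a[i] + b[i]

def launch(kernel: Callable[..., None], grid_dim: int, block_dim: int, *args) -> None:
    # Hypothetical stand-in for kernel<<<grid_dim, block_dim>>>(...).
    # A GPU runs these threads concurrently; here they run sequentially.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

n = 10
a = [float(x) for x in range(n)]
b = [2.0 * x for x in range(n)]
out = [0.0] * n

block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # round up so every element gets a thread
launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(out)
```

The design point is that the kernel body is written from the perspective of a single element; the hardware, not the programmer, supplies the parallelism. That is what lets a C programmer port a loop to the GPU without restructuring the whole program.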

An August 2023 NY Times article talks about Nvidia’s advantages with their CUDA platform. Neuroscientist Naveen Rao explains how Nvidia’s ecosystem with millions of CUDA developers differentiates them from others trying to sell AI hardware:

He found that Nvidia had differentiated itself beyond the chips by creating a large community of A.I. programmers who consistently invent using the company’s technology.

“Everybody builds on Nvidia first,” Mr. Rao said. “If you come out with a new piece of hardware, you’re racing to catch up.”

At the November 2023 Wells Fargo conference, technology analyst Aaron Rakers noted there are 4.8 million CUDA GPU developers and asked Nvidia CFO Colette Kress about the platform’s stickiness. CFO Kress responded that their CUDA development platform has been around for close to 15 years and noted how new developers want to join the same community as existing developers (emphasis added):

Everything that we do on our GPUs today is both backward-compatible and forward-compatible. Every customer knows that. They change [the] generation of architecture to our new generation. Everything is still working. We also have to think through, where would that development community like to be. They like to be where all the other developers are because so much work has been built over time. Somebody would have to rebuild that. And so, our position there has just been a very full end-to-end solution that no one can overall argue with and they understand that we are here to continue to innovate going forward.

An August 2023 Medium article says CUDA is embedded into all aspects of the AI ecosystem (emphasis added):

All the major deep learning frameworks like TensorFlow, PyTorch, Caffe, Theano, and MXNet added native support for CUDA GPU acceleration early on. CUDA provided the most robust path to unlock the computational horsepower of GPUs. This created a self-reinforcing virtuous cycle – CUDA became the standard way of accessing GPU acceleration due to its popularity and support across frameworks, and frameworks aligned with it due to strong demand from users. Over time, the CUDA programming paradigm and stack became deeply embedded into all aspects of the AI ecosystem. Most academic papers explaining new neural network innovations used CUDA acceleration by default when demonstrating experiments on GPUs.

Asked by analyst Joseph Moore about competition at the March 2024 Morgan Stanley conference, CFO Kress talked about Nvidia’s platform focus. The question covered the hyperscale cloud companies along with Advanced Micro Devices, Inc. (AMD) and Intel Corporation (INTC) (emphasis added):

We are focused very differently than many of those companies, as they focus on silicon or a specific chip for a certain workload. Stepping back and understanding our vision is a platform company, a platform company that is able to deliver any form of data center computing that you may have in terms of the future. Creating a platform is a different process than creating a chip and our focus to make sure at every data center level, all of the different components we may be able to provide them, whether it be the computing infrastructure, the networking infrastructure, the overall memory part of it.

CFO Kress went on at the March 2024 Morgan Stanley conference to stress the importance of CUDA and Nvidia’s full end-to-end stack (emphasis added):

So, that’s our business and that comes with an end-to-end stack of software. Software that says, at any point in time, as AI continues to ramp, we’re going to see new things. People are going to need to go into that development phase of something like CUDA to make sure that they keep up to date with the latest and greatest road of AI.

Understanding CUDA helps us see that Nvidia is not merely a chip business but a platform company delivering solutions end to end. Competitors may make cheaper GPUs, but CUDA and Nvidia’s overall ecosystem help keep the total cost of ownership (“TCO”) low relative to competitors’ offerings.
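The TCO argument can be framed as a simple sum: hardware cost plus the cost of the developer time needed to move existing software onto a new platform. The sketch below uses purely hypothetical numbers (not from Nvidia, its competitors, or any customer) and a deliberately simplified cost model to show how a cheaper chip can still lose on TCO once porting effort is counted.

```python
from dataclasses import dataclass

@dataclass
class Platform:
    name: str
    chip_cost: float       # hardware spend, in millions of dollars (hypothetical)
    porting_months: float  # engineer-months to port existing code (hypothetical)

def total_cost_of_ownership(p: Platform, cost_per_engineer_month: float) -> float:
    # Simplified TCO: hardware plus the labor to port and revalidate software.
    # A real TCO model would also include power, networking, and downtime.
    return p.chip_cost + p.porting_months * cost_per_engineer_month

incumbent = Platform("incumbent, code already written", chip_cost=100.0, porting_months=0.0)
challenger = Platform("cheaper challenger chip", chip_cost=80.0, porting_months=300.0)

rate = 0.1  # $0.1 million per engineer-month (hypothetical)
for p in (incumbent, challenger):
    print(f"{p.name}: ${total_cost_of_ownership(p, rate):.0f}M")
```

Under these made-up assumptions, the chip that is 20% cheaper ends up about 10% more expensive overall. With a small enough porting effort the result flips, which is why the argument depends on how much existing work is built on CUDA.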

Summary Of Accelerated Computing And AI Transitions

Per CEO Huang’s comments in the 4Q24 call, conditions are excellent for data center growth in calendar 2024, calendar 2025, and beyond because of two industry-wide transitions. The first is the move from general-purpose CPU computing to accelerated computing. CPU advances have been slowing, so cloud service providers (“CSPs”) have been able to use existing equipment longer and extend its depreciation schedule. The first transition, to accelerated computing, has in turn enabled the second: generative AI (emphasis added):

The first one is a transition from general to accelerated computing. General-purpose computing, as you know, is starting to run out of steam. And you can tell by the CSPs extending and many data centers, including our own for general-purpose computing, extending the [CPU] depreciation from four to six years. There’s just no reason to update with more CPUs when you can’t fundamentally and dramatically enhance its throughput like you used to. And so you have to accelerate everything. This is what NVIDIA has been pioneering for some time. And with accelerated computing, you can dramatically improve your energy efficiency. You can dramatically improve your cost in data processing by 20 to 1. Huge numbers. And of course, the speed. That speed is so incredible that we enabled a second industry-wide transition called generative AI.
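The depreciation point in the quote is straight-line arithmetic: spreading the same purchase over more years lowers the annual expense. A minimal sketch, assuming a hypothetical $1,000 server with no salvage value:

```python
def straight_line_depreciation(cost: float, useful_life_years: int, salvage: float = 0.0) -> float:
    """Annual depreciation expense under the straight-line method."""
    return (cost - salvage) / useful_life_years

server_cost = 1_000.0  # hypothetical purchase price, in dollars
four_year = straight_line_depreciation(server_cost, 4)
six_year = straight_line_depreciation(server_cost, 6)

print(f"4-year schedule: ${four_year:.2f} per year")  # 4-year schedule: $250.00 per year
print(f"6-year schedule: ${six_year:.2f} per year")   # 6-year schedule: $166.67 per year
print(f"Annual expense drops by {1 - six_year / four_year:.0%}")  # Annual expense drops by 33%
```

A longer schedule only makes sense if the older hardware stays useful longer, which is Huang’s point: when new CPUs no longer dramatically improve throughput, there is less reason to replace the old ones.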

CEO Huang closed the 4Q24 call with more comments on the transitions, saying the trillion-dollar installed base of data centers is converting. He says Nvidia provides AI generation factories so that software can learn, understand, and generate any information (emphasis added):

We are now at the beginning of a new industry where AI-dedicated data centers process massive raw data to refine it into digital intelligence. Like AC power generation plants of the last industrial revolution, NVIDIA AI supercomputers are essentially AI generation factories of this Industrial Revolution. Every company in every industry is fundamentally built on their proprietary business intelligence, and in the future, their proprietary generative AI.

AI Details

Nvidia’s revenue should keep increasing as AI factories spread around the world. Of course, everyone has heard of ChatGPT, and countless articles describe how Nvidia and Microsoft Corporation (MSFT) worked together to help bring it to fruition. ChatGPT is huge, but many other developments are under way. In an October 2023 interview at Columbia Business School, Nvidia CEO Huang said we’ve taught computers to represent information numerically, so AI can take one kind of information and generate another kind from it. Explaining Word2Vec, he described how English can be represented with numbers. He also mentioned Stable Diffusion, a generative AI model that makes images from words. Here is an example I made at stablediffusionweb.com by typing “panda drinking coffee” and then pressing the “draw” button:

Generative AI image (Generated from author’s “panda drinking coffee” prompt at stablediffusionweb.com)

CEO Huang says generative AI that goes the other way, from images to words, is called captioning.
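The Word2Vec idea Huang described, representing words as lists of numbers so that related words end up close together, can be illustrated with cosine similarity, a standard way to compare such vectors. The three-dimensional vectors below are made up for illustration; real Word2Vec embeddings are learned from large text corpora and typically have hundreds of dimensions.

```python
import math
from typing import Dict, List

# Toy, hand-picked vectors (hypothetical); real embeddings are learned from text.
embeddings: Dict[str, List[float]] = {
    "coffee": [0.9, 0.1, 0.0],
    "tea":    [0.8, 0.2, 0.1],
    "panda":  [0.0, 0.9, 0.4],
}

def cosine_similarity(u: List[float], v: List[float]) -> float:
    """Cosine of the angle between u and v: near 1 means similar direction."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)

print(f"coffee vs tea:   {cosine_similarity(embeddings['coffee'], embeddings['tea']):.2f}")
print(f"coffee vs panda: {cosine_similarity(embeddings['coffee'], embeddings['panda']):.2f}")
```

“Coffee” scores much closer to “tea” than to “panda.” Once meaning is encoded as numbers like this, comparing and generating information becomes linear algebra, which is exactly the kind of work GPUs accelerate.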

In the 4Q24 call, CEO Huang spoke about how AI recommender systems are moving from a CPU approach to a GPU approach. Recommender systems are enormous worldwide, so this shift should benefit Nvidia substantially (emphasis added):

These recommender systems used to be all based on CPU approaches. But the recent migration to deep learning and now generative AI has really put these recommender systems now directly into the path of GPU acceleration. It needs GPU acceleration for the embeddings. It needs GPU acceleration for the nearest neighbor search. It needs GPU acceleration for the re-ranking and it needs GPU acceleration to generate the augmented information for you. So GPUs are in every single step of a recommender system now. And as you know, [the] recommender system is the single largest software engine on the planet. Almost every major company in the world has to run these large recommender systems. Whenever you use ChatGPT, it’s being inferenced.
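The stages Huang lists can be sketched in miniature. This toy Python version covers the first three (embeddings, nearest neighbor search, re-ranking) with hypothetical item vectors, a brute-force search, and a made-up freshness signal; it omits the generation step. Production systems instead use learned embeddings, approximate nearest neighbor indexes, and learned re-ranking models over millions of items, which is the workload being moved onto GPUs.

```python
from typing import Dict, List, Tuple

# Stage 1 (embeddings): hypothetical precomputed item vectors.
item_embeddings: Dict[str, List[float]] = {
    "news_article": [0.9, 0.1],
    "sneakers":     [0.1, 0.9],
    "running_app":  [0.3, 0.8],
    "restaurant":   [0.5, 0.5],
}
# Hypothetical second signal used only during re-ranking (e.g., item freshness).
freshness: Dict[str, float] = {"news_article": 1.0, "sneakers": 0.2,
                               "running_app": 0.9, "restaurant": 0.5}

def dot(u: List[float], v: List[float]) -> float:
    return sum(x * y for x, y in zip(u, v))

def nearest_neighbors(user: List[float], k: int) -> List[Tuple[str, float]]:
    # Stage 2: brute-force search, scoring every item against the user vector.
    scored = [(item, dot(user, vec)) for item, vec in item_embeddings.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

def rerank(candidates: List[Tuple[str, float]], weight: float = 0.5) -> List[str]:
    # Stage 3: blend relevance with the second signal and re-sort the shortlist.
    rescored = [(item, score + weight * freshness[item]) for item, score in candidates]
    return [item for item, _ in sorted(rescored, key=lambda pair: pair[1], reverse=True)]

user_vector = [0.2, 0.9]  # hypothetical embedding of one user's interests
shortlist = nearest_neighbors(user_vector, k=3)
print([item for item, _ in shortlist])  # ['sneakers', 'running_app', 'restaurant']
print(rerank(shortlist))                # ['running_app', 'sneakers', 'restaurant']
```

Every stage is a batch of dot products and sorts, so at real-world scale each one benefits from GPU acceleration, as the quote describes.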

CFO Colette Kress repeated the importance of recommender engines at the March 2024 Morgan Stanley Technology, Media & Telecom Conference (emphasis added):

Recommender engines fuel every single person’s cell phone in this room and the work that they have put in both whether it’s news, whether it’s something that you’re purchasing, whether it’s something in the future of restaurants or otherwise.

Valuation Risks

I like to start the valuation framework by looking at some risks to the business. Of course, there is also a risk that the business does fine but the stock is too expensive. We’ll talk about this consideration in the next section.

Hyperscale cloud providers are not a huge concern for me as competitors at this time, in part because of CUDA. Amazon.com, Inc. (AMZN) continues to build their own training and inference chips, but Nvidia should stay ahead by executing well with their general-purpose accelerated computing platform. Amazon hardware will likely remain concentrated in the AWS ecosystem, whereas Nvidia hardware is in every cloud.

There is an excellent book called The Innovator’s Dilemma, which explains how incumbents can struggle against newcomers that bring new ways of doing things. I believe this may apply to AMD and Intel, which have traditionally focused on CPUs, as they try to compete with Nvidia, which has always had a GPU focus. This doesn’t mean AMD and Intel can’t take some GPU market share. I believe they can, but I also believe the entire GPU market will grow enormously, such that Nvidia will continue to increase GPU revenue over the years.

Per comments from CFO Kress in the 4Q24 call, US government export restrictions are hurting data center sales in China. A March 2024 WSJ article talks about China’s attempts to “delete America” from its technology, so there’s a chance Nvidia’s data center revenue from China could eventually fall to zero. China was a mid-single-digit percentage of data center revenue in 4Q24. The end location sometimes differs from the billing location, but by revenue billing location for fiscal 2024 as a whole, China accounted for $10.3 billion of the $60.9 billion total.
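Using the billing-location figures above, China’s share of fiscal 2024 revenue works out to roughly 17%:

```python
china_revenue_fy24 = 10.3  # billions of dollars, by billing location
total_revenue_fy24 = 60.9  # billions of dollars, all segments
china_share = china_revenue_fy24 / total_revenue_fy24
print(f"China share of fiscal 2024 revenue: {china_share:.1%}")  # China share of fiscal 2024 revenue: 16.9%
```

This is a share of total revenue by billing location, not of data center revenue, so it is not directly comparable to the mid-single-digit data center percentage for 4Q24.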

AI training is more resource-intensive than AI inference. If most AI work ends up being inference, some people feel Nvidia’s advantage will be lessened because CPUs can compete in that area. One reason I’m not worried is that Nvidia’s fiscal 2024 10-K said about 40% of their fast-growing data center revenue was for AI inference.

Valuation

There is a considerable chance expected growth will not materialize such that the current stock price is too high. However, I think it’s a reasonable bet the growth will meet or exceed some estimates being discussed today.

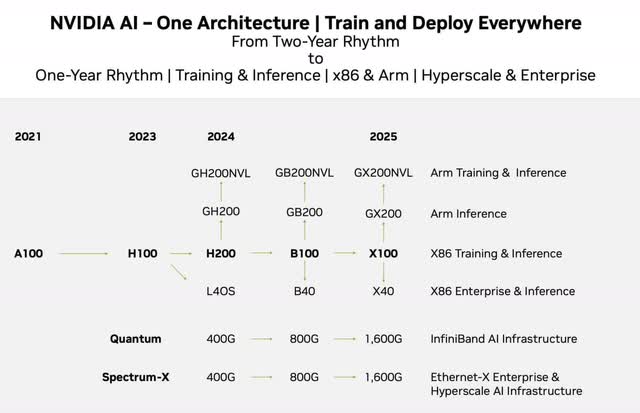

Per an October 2023 presentation, the architecture is always evolving. Soon we’ll see the Blackwell Architecture and the B100 GPU:

Nvidia architecture (Nvidia October 2023 presentation)

Per comments from CFO Colette Kress in the 4Q24 call, the H200 nearly doubles the inference performance of the H100, so demand is strong.

Given Nvidia’s dominance with CUDA and their platform mindset, I think it will be difficult for competitors to cause significant pain over the next few years. I’ve owned shares in Amazon.com, Inc. (AMZN) since June 2018, and I think Nvidia’s outlook is similar to what we’ve seen with AWS since Alphabet Inc. (GOOG, GOOGL) started disclosing their cloud financials in 4Q19. AWS has lost some market share over time, as many predicted, but its revenue has still grown substantially because the cloud market pie has grown faster than AWS’s slice has shrunk. I’m optimistic the same will happen to Nvidia in the GPU market in the years ahead. CFO Kress talked about Nvidia’s total cost of ownership advantage versus competitors at the March 2024 Morgan Stanley conference. AWS and others will almost certainly design cheaper chips and take a bit of GPU share, but we have to look at the entire equation, not just the chip (emphasis added):

We’re going to see simple chips from time to time come to market. No problem. But always remember, a customer has to balance that at the overall TCO of using those things. Right now, you’ve got a developer group that is focused on the NVIDIA platform, a very important large size of developers. Developers want to spend time, where there’s other developers. So when you think about some of the other types of silicon, you have to convince a developer to say, that’s a good use of time. That is a resource dedication and a total change in TCO in order for them to think about it. It’s not the cost of the chip. It’s the cost of that full TCO.
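The pie-versus-slice argument from the AWS comparison can be made concrete: revenue equals market share times market size, so revenue rises whenever the market grows by a larger factor than share shrinks. The numbers below are hypothetical and are not forecasts for Nvidia or AWS.

```python
def revenue(market_size: float, share: float) -> float:
    """Revenue as market share times total market size."""
    return market_size * share

# Hypothetical scenario: share falls from 80% to 60% while the market triples.
today = revenue(market_size=100.0, share=0.80)
later = revenue(market_size=300.0, share=0.60)

print(f"Revenue multiple: {later / today:.2f}x")  # Revenue multiple: 2.25x
# Revenue grows as long as (market growth factor) * (new share / old share) > 1.
print(3.0 * (0.60 / 0.80) > 1)  # True
```

Losing a quarter of the share while the market triples still more than doubles revenue in this example.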

When looking at the numbers, I keep in mind that founder-led companies like Nvidia often have special qualitative factors that increase their valuation. The 4Q24 release shows net income of $12.3 billion and operating income of $13.6 billion on revenue of $22.1 billion, a net income margin of nearly 56%. All these figures are up sharply from 3Q24, when the respective values were $9.2 billion, $10.4 billion, and $18.1 billion. The 4Q24 revenue was more than 10% above the $20 billion guidance. The annualized net income run rate is over $49 billion.
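The margin, guidance beat, and run rate above can be reproduced from the quoted figures. A minimal sketch using the rounded numbers from the paragraph (billions of dollars); the run rate here is simply four times the quarter, the same annualization the text uses.

```python
# 4Q24 and 3Q24 figures from the paragraph above, in billions of dollars.
q4 = {"revenue": 22.1, "operating_income": 13.6, "net_income": 12.3}
q3 = {"revenue": 18.1, "operating_income": 10.4, "net_income": 9.2}
guidance_q4_revenue = 20.0

net_margin = q4["net_income"] / q4["revenue"]
operating_margin = q4["operating_income"] / q4["revenue"]
beat_vs_guidance = q4["revenue"] / guidance_q4_revenue - 1
annual_run_rate = q4["net_income"] * 4  # simple annualization of one quarter
qoq_growth = {k: q4[k] / q3[k] - 1 for k in q4}  # quarter-over-quarter change

print(f"Net margin: {net_margin:.1%}")                   # Net margin: 55.7%
print(f"Operating margin: {operating_margin:.1%}")       # Operating margin: 61.5%
print(f"Revenue vs. guidance: {beat_vs_guidance:+.1%}")  # Revenue vs. guidance: +10.5%
print(f"Net income run rate: ${annual_run_rate:.1f}B")   # Net income run rate: $49.2B
for metric, growth in qoq_growth.items():
    print(f"{metric} growth vs. 3Q24: {growth:.1%}")
```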

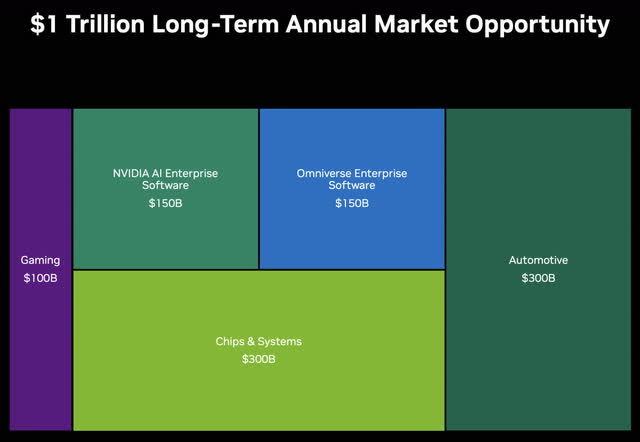

Per the September 2023 Citi conference presentation, Nvidia is the biggest and fastest-growing company in a market which should eventually have an annual opportunity of $1 trillion:

Long-term market (September 2023 Citi conference presentation)

The revenue guidance for 1Q25 is $24 billion. I am optimistic this guidance will be met or exceeded and, hopefully, that the 2Q25 guidance will be even higher.

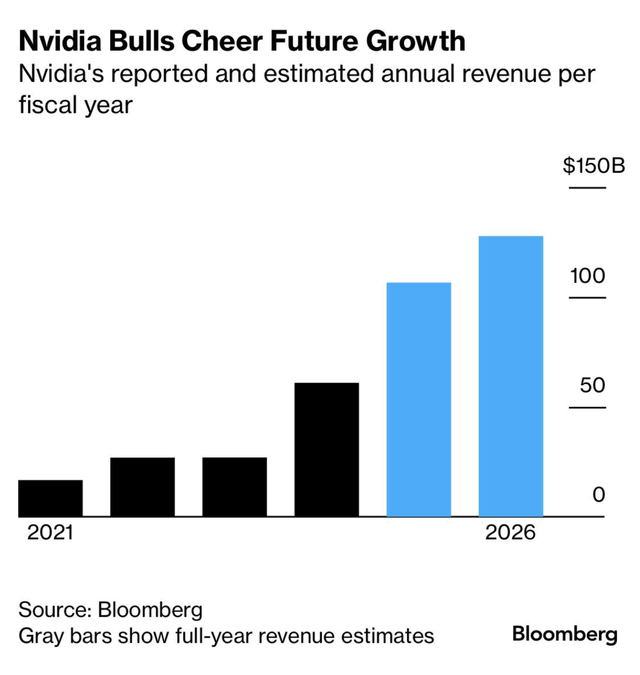

Nvidia had revenue of $60.9 billion in fiscal 2024, which ended in January of this year. Citing Bloomberg, Wccftech says Nvidia should have revenue of more than $100 billion in fiscal 2025, which ends in January 2025, and $130 billion in fiscal 2026, which ends in January 2026:

Future revenue (Wccftech citing Bloomberg)

The estimate of a little more than $100 billion in revenue for fiscal 2025 seems reasonable. The revenue estimate of $130 billion for fiscal 2026 might be low! Either way, I won’t be surprised if net income exceeds $50 billion in fiscal 2025 and $75 billion in fiscal 2026.
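Putting the revenue estimates next to the net income figures shows what they jointly assume. This sketch computes year-over-year revenue growth and the implied net margin for each year, using only numbers from the text (billions of dollars); because the net income figures are “more than” floors, the implied margins are minimums.

```python
from typing import Dict, List, Tuple

def implied_metrics(prior_revenue: float,
                    revenue_estimates: Dict[str, float],
                    net_income_floors: Dict[str, float]) -> List[Tuple[str, float, float]]:
    """Year-over-year revenue growth and implied net margin for each estimate year."""
    rows = []
    for year, revenue in revenue_estimates.items():  # dicts keep insertion order
        growth = revenue / prior_revenue - 1
        margin = net_income_floors[year] / revenue
        rows.append((year, growth, margin))
        prior_revenue = revenue  # next year's growth is measured from this estimate
    return rows

# Fiscal 2024 actual, Bloomberg estimates via Wccftech, and the author's net income floors.
rows = implied_metrics(
    60.9,
    {"fiscal 2025": 100.0, "fiscal 2026": 130.0},
    {"fiscal 2025": 50.0, "fiscal 2026": 75.0},
)
for year, growth, margin in rows:
    print(f"{year}: revenue growth {growth:.0%}, implied net margin {margin:.0%}")
```

The implied margins of roughly 50% and 58% sit on either side of the nearly 56% net margin from 4Q24, so these figures assume profitability stays near current levels rather than expanding much further.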

A tremendous amount of optimism is already priced into the stock. Per the fiscal 2024 10-K, there were about 2.5 billion shares outstanding as of February 16th. At the March 12th share price of $919.13, the market cap is about $2.3 trillion.

Based on the 4Q24 net income run rate of more than $49 billion, the P/E ratio is about 47. Normally, a P/E at this level is considered very high, but one year earlier the net income run rate based on 4Q23 was just $5.7 billion! In one year, the net income run rate increased almost 9 times! Based on 1Q25 guidance, we won’t see anything close to another 9x increase in the next fiscal year, but net income could still rise substantially, such that people may look back at today’s stock price and say it was not expensive. Given the expected growth ahead, I view Nvidia Corporation stock as a hold despite the frightening backward-looking P/E ratio.
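The valuation arithmetic can be laid out in a few lines. This sketch uses the rounded share count, the March 12th price, and the run rates quoted above, then applies the same market cap to the author’s fiscal 2025 and 2026 net income floors. Because those floors are “more than” figures, the resulting forward P/E ratios are upper bounds under these assumptions, not forecasts.

```python
# Figures from the text above; the share count is rounded, so results are approximate.
shares_outstanding = 2.5e9     # as of February 16, 2024
share_price = 919.13           # March 12, 2024
market_cap = shares_outstanding * share_price

run_rate_q4_fy24 = 12.3e9 * 4  # annualized 4Q24 net income
run_rate_q4_fy23 = 5.7e9       # annualized 4Q23 net income, as stated above

# The author's "more than" net income estimates, so these P/Es are upper bounds.
net_income_floors = {"fiscal 2025": 50e9, "fiscal 2026": 75e9}

print(f"Market cap: ${market_cap / 1e12:.1f} trillion")              # Market cap: $2.3 trillion
print(f"P/E on 4Q24 run rate: {market_cap / run_rate_q4_fy24:.0f}")  # P/E on 4Q24 run rate: 47
print(f"Run-rate increase: {run_rate_q4_fy24 / run_rate_q4_fy23:.1f}x")  # Run-rate increase: 8.6x
for year, floor in net_income_floors.items():
    print(f"P/E if {year} net income is at least ${floor / 1e9:.0f}B: at most {market_cap / floor:.0f}")
```

At today’s market cap, the multiple would fall to about 46 on the fiscal 2025 floor and about 31 on the fiscal 2026 floor, which is the arithmetic behind the view that today’s price may not look expensive in hindsight if those estimates hold.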

Disclaimer: Any material in this article should not be relied on as a formal investment recommendation. Never buy a stock without doing your own thorough research.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA, AMZN, GOOG, GOOGL, META, MSFT, VOO either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.