Summary:

- The delay in Blackwell GPU shipments, along with top customers already seeking alternatives to Nvidia’s chips, has emboldened the bears to call the top for Nvidia Corporation.

- The bulls argue that this delay will simply be filled with demand for Nvidia’s previous generation chips, namely the H200 GPUs.

- However, the real battleground is on the software side, with challengers seeking to use any setback to catch up to Nvidia’s CUDA moat.

- While this will be a steep uphill battle for challengers, it does plant the seeds of weakening pricing power for Nvidia going forward, undermining future revenue growth and profitability.

- We discuss in a simplified manner how the tech industry is progressing in challenging Nvidia’s moat, and the stock price potential ahead.

NVIDIA Corporation (NASDAQ:NVDA) stock saw a steep correction of around 27% recently, though it has now recovered most of those losses. The reversal of the yen carry trade and the delay in Blackwell GPU shipments, in addition to top customers already seeking alternatives to Nvidia’s chips, have certainly emboldened the bears to call the top for NVDA. With the Q2 earnings release a few days away, investors will be watching keenly for any impact on guidance for the rest of 2024 and for 2025. For investors who are concerned about the Blackwell delay hurting sales growth projections for the quarters ahead, however, it is important to understand the CUDA software moat that should sustain demand for Nvidia’s GPUs regardless of minor setbacks.

The real battleground is on the software side, with Nvidia leveraging the value proposition and stickiness of the CUDA platform to keep customers entangled in its ecosystem. While catching up to CUDA will be a steep uphill battle for challengers, it does potentially plant the seeds of weakening pricing power for Nvidia going forward. Nonetheless, Nvidia’s moat is still safe for a few more years.

Nvidia bull case still intact

In the previous article, we extensively discussed in a simplified manner how Nvidia is beautifully positioned to earn great streams of recurring revenue through its “NVIDIA AI Enterprise” software service. The company is striving to make it the core operating system for generative AI-powered software applications in this new era. Moreover, we also covered how the risk of cloud service providers designing their own chips is subdued by Nvidia increasingly building AI factories (private data centers) for enterprises directly, locking companies into its ecosystem and reducing enterprises’ need to migrate to the cloud. So with enterprises increasingly making up a larger proportion of Nvidia’s data center sales mix, and the tech giant set to generate large sums of recurring software revenue going forward, the bull case remains intact.

As a matter of fact, on the earnings call in a few days, I expect CEO Jensen Huang to particularly emphasize the growing demand from enterprise customers. On the Q1 FY2025 Nvidia earnings call a few months ago, Huang proclaimed, “In Q1, we worked with over 100 customers building AI factories.” This has become an increasingly important source of data center revenue for Nvidia, sustaining demand for its GPUs beyond just the Cloud Service Providers (CSPs). I am maintaining a ‘buy’ rating on NVDA.

That being said, it is still important to cover the broader tech sector’s efforts to build alternatives to Nvidia’s platform, and how this tests the tech giant’s moat. Markets tend to price in future events before they materialize, so understanding these efforts should enable investors to make more informed investment decisions for the long term.

Nvidia Blackwell delay

The delay in the shipping of Blackwell GPUs due to certain design flaws intensified the recent correction in the stock price. The fear is that such delays could create windows for rivals like AMD and Intel to capture some market share away from Nvidia.

Nonetheless, these fears have subsided somewhat as demand for Nvidia’s previous generation chips, the Hopper series, remains strong, with a note from UBS analyst Timothy Arcuri pointing out that:

There is sufficient headroom and short enough lead time in H100/H200 supply for Nvidia to largely backfill lower initial Blackwell volumes with H200 units and the supply chain is seeing upward revisions consistent with some backfill, as per the analysts.

So on the earnings call next week, expect CEO Jensen Huang and CFO Colette Kress to repeatedly emphasize the continuous strong demand for H100/H200s until Blackwell starts shipping. The revenue bump from the higher-priced Blackwell GPUs will simply be pushed out to the first quarter of 2025. The guidance for the rest of 2024 should continue to reflect strong sales growth, as tech companies continue to build out their AI infrastructure regardless of the Blackwell delay.

That being said, the high price tag of Nvidia’s GPUs has induced customers and challengers to seek, or even build, their own alternatives, posing a potential risk to future demand for Nvidia’s chips.

The risk of rising alternatives to Nvidia’s platform

It’s no secret that Nvidia’s top cloud customers, Microsoft Corporation’s (MSFT) Azure, Amazon.com, Inc.’s (AMZN) AWS, and Alphabet Inc.’s (GOOG) (GOOGL) Google Cloud, are designing their own chips, and have been encouraging their customers to opt for their in-house hardware over Nvidia’s GPUs.

A prime reason why Nvidia’s chips remain in high demand among cloud customers is the CUDA platform built around its GPUs. It boasts an extensive base of developers and thousands of applications that significantly extend the capabilities of the GPUs.

Software developers from organizations, as well as independent developers, continue to build around the CUDA platform to optimally program the GPUs for various kinds of computing workloads. The expanding versatility and stickiness of this software layer is what enables Nvidia to keep customers entangled in the ecosystem and sustain demand for its expensive GPUs.

Moreover, Nvidia boasts a broad range of domain-specific CUDA libraries that are oriented towards serving each particular economic sector. In fact, at Nvidia’s COMPUTEX event in June 2024, CEO Jensen Huang proclaimed that:

These domain-specific libraries are really the treasure of our company. We have 350 of them. These libraries are what it takes, and what has made it possible for us to have opened so many markets… We have a library for AI physics that you could use for fluid dynamics and many other applications where the neural network has to obey the laws of physics. We have a great new library called Aerial that is a CUDA accelerated 5G radio, so that we can software define and accelerate the telecommunications networks.

The point is, CUDA’s functionalities are broad and extensive, used across numerous sectors and industries, making it difficult for challengers to penetrate its moat in a short space of time. But that certainly has not stopped the company’s largest customers from trying.

Google Cloud was the first cloud provider to offer its own TPUs to enterprises in 2018 and boasts AI startups like Midjourney and character.ai as users of these chips.

Most notably, it was reported last month that Apple used Google’s TPUs to train its AI models as part of Apple Intelligence.

This is a massive win for Google. Not just because it positions its chips as a viable alternative to Nvidia’s GPUs, but also because it could accelerate the development of a software ecosystem around its chips. In this process, third-party developers build more and more tools and applications around the TPUs that extend their capabilities, with Apple’s utilization serving as an affirmation that this hardware is worth building upon.

Such grand-scale use by one of the largest tech companies in the world should indeed help Google convince more enterprises to use its TPUs over Nvidia’s GPUs. This could reduce how much Google Cloud continues to buy from Jensen Huang’s company in the future, or the volume and frequency at which it upgrades to newer generation chips from Nvidia.

And then there is AWS, which has also been offering its semiconductors for training and inferencing AI models, offering the Trainium chips since 2021, and the Inferentia chips since 2019. Moreover, on the Amazon Q2 2024 earnings call, CEO Andy Jassy emphasized the value proposition of its in-house chips over Nvidia’s GPUs:

We have a deep partnership with NVIDIA and the broadest selection of NVIDIA instances available, but we’ve heard loud and clear from customers that they relish better price performance. It’s why we’ve invested in our own custom silicon in Trainium for training and Inferentia for inference.

He certainly makes a compelling argument here on the price-performance benefits, given that the company’s in-house chips can be more deeply integrated with other AWS hardware and infrastructure to drive more performance efficiencies. In fact, using an example of AI startup NinjaTech (an AWS customer), the computing cost difference between using Amazon’s in-house chips and Nvidia’s GPUs could reportedly be huge:

To serve more than a million users a month, NinjaTech’s cloud-services bill at Amazon is about $250,000 a month, … running the same AI on Nvidia chips, it would be between $750,000 and $1.2 million.

Nvidia is keenly aware of all this competitive pressure, and that its chips are costly to buy and operate. Huang, its CEO, has pledged that the company’s next generation of AI-focused chips will bring down the costs of training AI on the company’s hardware.

Nvidia’s aggressive pricing strategy is under pressure. Top cloud customers are luring enterprises into using their custom chips over Nvidia’s hardware by highlighting cost advantages. They are thereby simultaneously inducing companies’ developers to build software tools and applications around these in-house chips in a bid to catch up to CUDA.
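To put the reported NinjaTech figures in perspective, the short sketch below works out the cost multiple and the implied savings. It uses only the monthly dollar amounts quoted above; the function names are illustrative, and the figures themselves are reported estimates for one customer rather than a general benchmark.

```python
def cost_multiple(alternative_cost: float, baseline_cost: float) -> float:
    """How many times more expensive the alternative is than the baseline."""
    return alternative_cost / baseline_cost


def savings_fraction(baseline_cost: float, alternative_cost: float) -> float:
    """Fraction saved by paying baseline_cost instead of alternative_cost."""
    return 1.0 - baseline_cost / alternative_cost


# Monthly figures quoted above, in US dollars.
amazon_chips = 250_000
nvidia_low, nvidia_high = 750_000, 1_200_000

for nvidia_cost in (nvidia_low, nvidia_high):
    print(
        f"Nvidia estimate ${nvidia_cost:,}: "
        f"{cost_multiple(nvidia_cost, amazon_chips):.1f}x the Amazon bill, "
        f"{savings_fraction(amazon_chips, nvidia_cost):.0%} saved"
    )
```

On these numbers, the Nvidia-based bill would be roughly 3x to 4.8x the Amazon bill, which corresponds to savings of about 67% to 79%.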

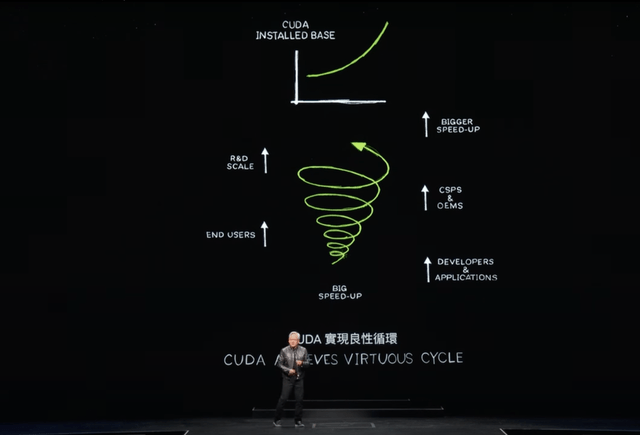

At Nvidia’s COMPUTEX event a few months ago, Jensen Huang emphasized that while Nvidia’s GPUs carry high-price tags, the company’s ecosystem benefits from a powerful network effect that continuously drives down the cost of computing on its chips:

Because there are so many customers for our architecture, OEMs and cloud service providers are interested in building our systems… which of course creates greater opportunity for us, which allows us to increase our scale, R&D scale which speeds up the application even more. Well, every single time we speed up the application, the cost of computing goes down. … 100X speed up translates to 96%, 97%, 98% savings. And so when we go from 100X speed up to 200X speed up to 1000X speed up, the savings, the marginal cost of computing continues to fall.

Nvidia’s CUDA network effect (Nvidia COMPUTEX)

Incredibly, Nvidia’s virtuous network effect allows it to drive down the cost of computing on its GPUs. As a result, more workloads can be run more cost-efficiently on its chips, allowing customers to save on training and inferencing costs over the long term. Until now, Nvidia has capitalized on this network effect by charging higher prices for its hardware, citing the longer-term cost benefits.
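The link between speed-up and savings in Huang’s remarks can be illustrated with a simple model. This is a sketch under a stated assumption, not Nvidia’s own calculation: it assumes the cost of a job is the system’s hourly cost times its run time, so the accelerated cost relative to the baseline is `system_cost_multiple / speedup`. The 3x system cost used in the second loop is a hypothetical input chosen for illustration.

```python
def savings_from_speedup(speedup: float, system_cost_multiple: float = 1.0) -> float:
    """Fractional cost saving per workload.

    Assumption (simplification): cost per job = hourly system cost x run time,
    so accelerated cost relative to baseline is system_cost_multiple / speedup.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 - system_cost_multiple / speedup


def implied_cost_multiple(speedup: float, savings: float) -> float:
    """Invert the model: how much pricier the accelerated system is per hour."""
    return speedup * (1.0 - savings)


# What system cost multiple would reconcile a 100x speed-up with the quoted savings?
for savings in (0.96, 0.97, 0.98):
    print(
        f"100x speed-up with {savings:.0%} savings implies a system costing about "
        f"{implied_cost_multiple(100, savings):.0f}x the baseline"
    )

# Holding a hypothetical 3x system cost fixed, larger speed-ups save more.
for speedup in (100, 200, 1000):
    print(f"{speedup}x speed-up -> {savings_from_speedup(speedup, 3.0):.1%} saved")
```

Under this model, savings of 96% to 98% at a 100x speed-up are consistent with an accelerated system that costs a few times more than the baseline, and the savings keep rising as the speed-up grows, which is the point of the quote.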

With competition and alternatives now ramping up, however, Nvidia’s pricing power could diminish, limiting the extent to which it can charge premium prices for its GPUs, despite its CUDA moat. In fact, Nvidia has reportedly already lowered the price of its GPUs in response to increased competition from AMD. Moreover, in a previous article, we had extensively covered AMD’s strategy (and benefits) of catching up to Nvidia’s closed-source CUDA platform through open-sourcing its own ROCm software platform. This was a bid to attract more developers to build tools and applications around its own GPUs.

And it’s not just AMD that is leveraging the power of open-source to challenge Nvidia’s moat, as reportedly:

The enormous size of the market for AI computing has encouraged an array of companies to unite to take on Nvidia.

…

Much of this collaboration is focused on developing open-source alternatives to CUDA, says Bill Pearson, an Intel vice president focused on AI for cloud-computing customers. Intel engineers are contributing to two such projects, one of which includes Arm, Google, Samsung, and Qualcomm. OpenAI, the company behind ChatGPT, is working on its own open-source effort.

…Investors are piling into startups working to develop alternatives to CUDA. Those investments are driven in part by the possibility that engineers at many of the world’s tech behemoths could collectively make it possible for companies to use whatever chips they like and stop paying what some in the industry call the “CUDA tax.”

So the efforts to dethrone Nvidia’s CUDA are certainly intensifying. While building a software platform and ecosystem commensurate with CUDA will take time, it could certainly undermine Nvidia’s pricing power going forward. In fact, we even have a historical analogy portraying how the rise of open-source technology subdued pricing power during the computing revolution, as Goldman Sachs research argued:

AI technology is undoubtedly expensive today. And the human brain is 10,000x more effective per unit of power in performing cognitive tasks vs. generative AI. But the technology’s cost equation will change, just as it always has in the past. In 1997, a Sun Microsystems server cost $64,000. Within three years, the server’s capabilities could be replicated with a combination of x86 chips, Linux technology, and MySQL scalable databases for 1/50th of the cost. And the scaling of x86 chips coupled with open-source Linux, databases, and development tools led to the mainstreaming of AWS infrastructure.

Similarly, this time round, the tech sector is eager to invest in and develop alternatives to Nvidia’s platform, with a particular emphasis on open-source software. One shouldn’t underestimate the power of open-source technology, given how it gave rise to new computing giants like AWS. Challengers are seeking to repeat this history now in the generative AI era, refusing to continue paying premium prices to access Nvidia’s ecosystem of AI solutions.

Challenging Nvidia’s software moat could be easier said than done

Now, while the endeavors to challenge Nvidia’s CUDA moat should certainly not be taken too lightly, it is undoubtedly a steep uphill battle. This is simply by virtue of the fact that it has taken the market leader almost two decades to build this moat, and reportedly (emphasis added):

Year after year, Nvidia responded to the needs of software developers by pumping out specialized libraries of code, allowing a huge array of tasks to be performed on its GPUs…The importance of Nvidia’s software platforms explains why for years Nvidia has had more software engineers than hardware engineers on its staff.

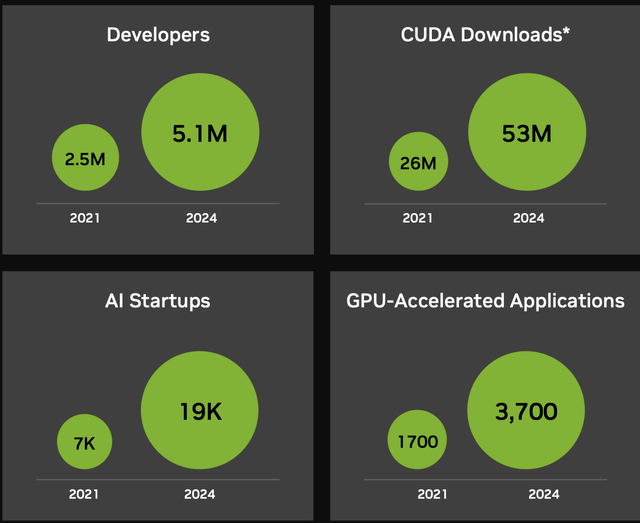

Moreover, the size of Nvidia’s CUDA ecosystem has continued to grow quarter after quarter, boasting 5.1 million developers and 3,700 GPU-accelerated applications in Q1 FY2025, up from 4 million developers and 3,200 applications in the prior quarter.

CUDA ecosystem statistics (Nvidia)

And it is safe to assume that this ecosystem will have grown even larger over Q2 FY2025, with new statistics expected to be released alongside their earnings report next week.

Furthermore, CEO Jensen Huang took a swipe at challengers at the COMPUTEX event in June, explaining the difficulty of cracking Nvidia’s moat:

Creating a new platform is extremely hard because it’s a chicken and egg problem. If there are no developers that use your platform, then of course there will be no users. But if there are no users, there are no installed base. If there are no installed base, developers aren’t interested in it. Developers want to write software for a large installed base, but a large install base requires a lot of applications so that users would create an installed base. This chicken or the egg problem has rarely been broken, and it’s taken us 20 years. One domain library after another, one acceleration library after another. And now we have 5 million developers around the world. We serve every single industry from health care, financial services, of course, the computer industry, automotive industry. Just about every major industry in the world.

Indeed, developers are attracted by high earnings potential. And right now, Nvidia is the only tech giant that has a large enough installed base for independent software developers to be able to make a living from selling tools and services through the CUDA platform. Consequently, the CUDA developer base continues to rapidly grow, adding over a million developers in just one quarter, continuously steepening the uphill slope for challengers to catch up.

It is also worth noting that rivals striving to build a software platform similar to CUDA are barely a threat to Nvidia until they get their execution right. Take Google Cloud as an example, for whom building a software ecosystem around its TPUs has reportedly not been smooth sailing:

Google has had mixed success opening access to outside customers. While Google has signed up prominent startups including chatbot maker Character and image-generation business Midjourney, some developers have found it difficult to build software for the chips.

Execution matters. It’s one thing to embark on building a software platform, it’s another thing to get it right. This is not to imply that these CSPs and other challengers are not making any progress in building out their software ecosystems, but their struggles are a testament to Nvidia’s decades’ worth of work to build a proficient platform.

Furthermore, even as rivals progress in building out the software ecosystems around their in-house chips, convincing enterprises to migrate away from Nvidia GPUs is not that easy, as Amazon Web Services executive Gadi Hutt reportedly admitted (emphasis added):

Customers that use Amazon’s custom AI chips include Anthropic, Airbnb, Pinterest, and Snap. Amazon offers its cloud-computing customers access to Nvidia chips, but they cost more to use than Amazon’s own AI chips. Even so, it would take time for customers to make the switch, says Hutt.

This is a testament to just how sticky Nvidia’s CUDA platform is. Once a company’s developers are accustomed to powering their workloads using CUDA-based tools and applications, it can be time-consuming, energy-consuming, and simply inconvenient to migrate to a different software platform. It is also reflective of the immensely broad range of capabilities available through CUDA, requiring developers to conduct comprehensive due diligence to ensure adequately similar capabilities are available through rival platforms.

Now, challengers will certainly strive to build more and more tools and services that ease the migration from Nvidia’s GPUs and CUDA to their own AI solutions, but it will take time for these competitors to catch up.

Nvidia financial performance and valuation

Now for Q2 FY2025, Nvidia has guided $28 billion in total revenue, which would imply a year-over-year growth rate of 107%. While most market participants believe Nvidia should be able to handily beat this number, the real focus will be on the guidance for the rest of 2024 and commentary around the Blackwell delay. This is what will drive post-earnings stock price action.
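The growth arithmetic behind the guidance can be checked in a couple of lines. The sketch below uses only the two figures stated above ($28 billion and 107%) and backs out the prior-year base they imply; that base is derived here rather than quoted from Nvidia’s filings, and the function names are illustrative.

```python
def yoy_growth(current: float, prior: float) -> float:
    """Year-over-year growth as a fraction (1.07 means 107%)."""
    return current / prior - 1.0


def implied_prior(current: float, growth: float) -> float:
    """Prior-year figure implied by a current figure and a growth rate."""
    return current / (1.0 + growth)


guided_revenue_bn = 28.0  # Q2 FY2025 guidance, in billions of US dollars
stated_growth = 1.07      # 107% year over year

prior_bn = implied_prior(guided_revenue_bn, stated_growth)
print(f"Implied year-ago quarterly revenue: ${prior_bn:.2f} billion")
print(f"Round-trip growth check: {yoy_growth(guided_revenue_bn, prior_bn):.0%}")
```

A growth rate above 100% means revenue has more than doubled, so the guidance implies a year-ago quarter of roughly $13.5 billion.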

As long as Nvidia’s executives can tell analysts and investors on the earnings call that the Blackwell issue has been resolved, or is very close to being resolved, and can offer a visible timeline for starting to ship the GPUs at the end of this year or the beginning of next year, then the stock price should be able to continue rallying following the recent steep correction.

Moreover, despite the delay, executives should be able to satisfy investors by offering strong sales guidance for the rest of 2024, given the bullish commentary offered by CEOs of Nvidia’s top customers. For instance, Google CEO Sundar Pichai shared on the Alphabet Q2 2024 earnings call that:

I think the one way I think about it is when we go through a curve like this, the risk of under-investing is dramatically greater than the risk of over-investing for us here, even in scenarios where if it turns out that we are over-investing. We clearly — these are infrastructure, which are widely useful for us. They have long useful lives and we can apply it across, and we can work through that.

And we saw the same mindset from CEO Mark Zuckerberg on the Meta Q2 2024 earnings call:

at this point, I’d rather risk building capacity before it is needed rather than too late, given the long lead times for spinning up new inference projects.

So, if you are hesitant about buying NVDA ahead of the earnings report amid the Blackwell delay and the potential impact on guidance, you can be confident that demand for the H100/H200 GPUs should remain high, because top customers are still building out their AI infrastructure. And as discussed throughout the article, the powerfully versatile CUDA platform that accompanies Nvidia’s GPUs should keep customers entangled in the ecosystem, while it could still take years for challengers to catch up to this software moat.

In fact, former Google CEO Eric Schmidt recently gave a talk at Stanford University and emphasized just how much the leading AI companies will need to spend on AI hardware in the coming years. This signals more growth ahead for Nvidia, with Eric Jackson (founder and portfolio manager of EMJ Capital), saying that:

Talking to Sam Altman, they [Schmidt and Altman] both believed that each of the hyper scalers and the OpenAI’s of the world would probably have to spend $300 billion each over the next few years, so Nvidia could basically only supply those hyper scalers, and their order book will be full for the next four years.

Now various experts could project differing CapEx numbers, but the key point is, demand for Nvidia’s GPUs will remain elevated over the next couple of years during this AI infrastructure build-out phase.

You have to ask, despite these hyper-scalers each boasting about the price-performance capabilities of their custom in-house chips, and AI start-ups also creating alternatives to Nvidia, why are top customers still purchasing so much AI hardware from Nvidia? It is simply a testament to just how far ahead Nvidia’s GPUs are in performance and the value proposition of the sticky CUDA software platform that deeply extends the capabilities of these chips. This is one of the key reasons NVDA stock will continue performing strongly from here.

That being said, as competition inevitably ramps up, Nvidia’s pricing power could indeed diminish over time, as discussed earlier. So while the tech giant currently boasts gross profit margins of 70%+ and net margins of 50%+, expect margins to somewhat compress going forward as Nvidia lowers prices of its GPUs to discourage migrations to rivals’ AI solutions.

Now, any hint of softer pricing for next-generation GPUs in the future could induce “weak hands” to sell out of the stock, leading to a steep correction. For long-term investors, though, this would present a beautiful buying opportunity.

At present, Nvidia is right to charge premium prices for its GPUs, given its wide market leadership and the absence of meaningful competition. Premium pricing gives the company the opportunity to invest more in R&D to sustain its leadership, as well as to build up a massive cash reserve.

But even when Nvidia is compelled to lower prices in the future as competition catches up, this would be the right move. The tech giant’s main goal should be to sustain a large installed base of its hardware, upon which it can earn rent in the form of recurring software revenue. We discussed extensively the growth opportunities through the “NVIDIA AI Enterprise” software operating system in the previous article, whereby Nvidia stands to generate $4,500 per GPU annually from enterprises as they run their generative AI-powered software applications in this new era.

So any potential margin compression from weakening pricing power for GPUs should be recouped through higher-margin software revenue as it makes up a larger portion of the tech behemoth’s revenue base. As discussed in a prior article, Nvidia’s recurring software revenue is expected to make up 30% of total revenue going forward, which should support profit margins as well as stock price performance.
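The margin offset described above can be sketched as a revenue-weighted average. This is an illustration only: the 70% target gross margin and the 30% software share come from figures mentioned in this article, while the 85% software gross margin is a hypothetical input, not a number Nvidia has disclosed.

```python
def blended_margin(hw_margin: float, sw_margin: float, sw_share: float) -> float:
    """Revenue-weighted gross margin for a hardware/software mix."""
    return (1.0 - sw_share) * hw_margin + sw_share * sw_margin


def breakeven_hw_margin(target: float, sw_margin: float, sw_share: float) -> float:
    """Hardware margin at which the blended margin still equals the target."""
    return (target - sw_share * sw_margin) / (1.0 - sw_share)


target_margin = 0.70       # current gross margin level cited in the article (70%+)
software_share = 0.30      # expected software share of total revenue
software_margin = 0.85     # HYPOTHETICAL software gross margin, for illustration

hw_floor = breakeven_hw_margin(target_margin, software_margin, software_share)
print(f"Hardware margin could fall to about {hw_floor:.1%} "
      f"with the blended margin holding at {target_margin:.0%}")
```

The higher the software margin and share, the more room hardware margins have to compress before the blended figure moves; with a different assumed software margin, the break-even point shifts accordingly.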

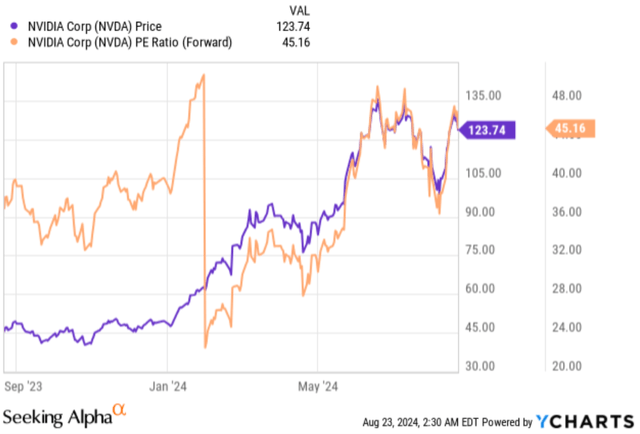

Now in terms of Nvidia stock’s valuation, it is currently trading at around 47x forward earnings, in line with its 5-year average multiple. Though amid the recent 27% correction in the stock price, NVDA was trading at around 36x earlier this month, creating a beautiful opportunity for dip buyers.

Moreover, when we adjust the current Forward P/E multiple by the expected EPS FWD Long-Term Growth (3-5Y CAGR) of 36.60%, we obtain a Forward Price-Earnings-Growth [PEG] multiple of 1.28x. This is significantly below its 5-year average of 2.10x.
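For readers who want to reproduce the PEG figure, the calculation is simply the forward P/E divided by the expected growth rate expressed in percentage points. The sketch below uses the multiples and growth rate quoted in this section; the function name is illustrative.

```python
def forward_peg(forward_pe: float, growth_pct: float) -> float:
    """Forward PEG = forward P/E divided by expected EPS growth (in percent)."""
    if growth_pct <= 0:
        raise ValueError("PEG is only meaningful for positive expected growth")
    return forward_pe / growth_pct


growth_pct = 36.60  # expected long-term EPS growth (3-5Y CAGR), in percent

current_peg = forward_peg(47, growth_pct)  # at today's ~47x forward earnings
dip_peg = forward_peg(36, growth_pct)      # at the ~36x seen during the correction

print(f"Forward PEG at 47x: {current_peg:.2f}x")  # about 1.28x
print(f"Forward PEG at 36x: {dip_peg:.2f}x")      # about 0.98x
```

At the roughly 36x multiple seen during the correction, the same growth estimate would have put the Forward PEG just under 1x.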

For context, a Forward PEG multiple of 1x would reflect a stock trading at fair value. Though, it is common for popular tech stocks to command multiples of above 2x, with the market assigning a premium valuation based on factors such as “broad competitive moats” and “strength of balance sheet.” For example, Microsoft stock currently trades at a Forward PEG ratio of 2.39x.

Coming back to NVDA, even after the stock recovered from its recent selloff, a Forward PEG of 1.28x is still a very attractive multiple to pay for a company with such a powerful moat around its leadership in the AI era.

That being said, even in the run-up to the earnings report in a few days, the stock could continue to face volatility. “Weak hands” could continue to sell out of the stock on short-term developments like the Blackwell delay. In fact, on August 22nd, the stock declined by almost 4% again. However, long-term investors who understand the wide lead of its CUDA platform, and the software growth opportunities ahead, should not miss any dip buying opportunities in the stock as we approach the earnings release. I am maintaining my “buy” rating on NVDA.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.