Summary:

- The market is fixated on Nvidia’s superior AI chip, but one must consider the broader data center opportunity to truly appreciate the bull case for Nvidia stock.

- Investors are missing Nvidia’s product-bundling sales strategy, which is enabling the tech giant to optimally capitalize on the market opportunity ahead and impeding competitors’ ability to take market share.

- Just as Apple iPhone users upgrade to more advanced iPhone models thanks to the stickiness of Apple’s services, Nvidia is locking enterprises into its software ecosystem for the long term.


Nvidia’s stock has surged by around 240% this year thanks to its leadership position in the generative AI revolution. The AI market opportunity is undoubtedly massive, and the AI-related growth story has only begun. Many investors seem to be fixated on Nvidia’s superior AI chip, but it is also important to consider the broader data center opportunity to truly appreciate the bull case for Nvidia stock. The tech giant has been deploying a shrewd sales strategy, namely selling HGX systems, which enables Nvidia to optimally capitalize on the market opportunity ahead and lock customers into the Nvidia ecosystem for the long term. Nexus maintains a ‘buy’ rating on Nvidia stock.

In our previous article on Nvidia, Nexus explained in simplified terms the relationship between Nvidia’s hardware solutions and software services, and how Nvidia has designed its chips to pave the way for massive software revenue growth. Nvidia’s share of the AI chip market is estimated at around 80%, giving it a large installed base upon which to build a flourishing software business. In this article, we will delve into another important driver of Nvidia’s financial performance, the sale of HGX systems, and how it strengthens Nvidia’s ability to capitalize on the market opportunity ahead.

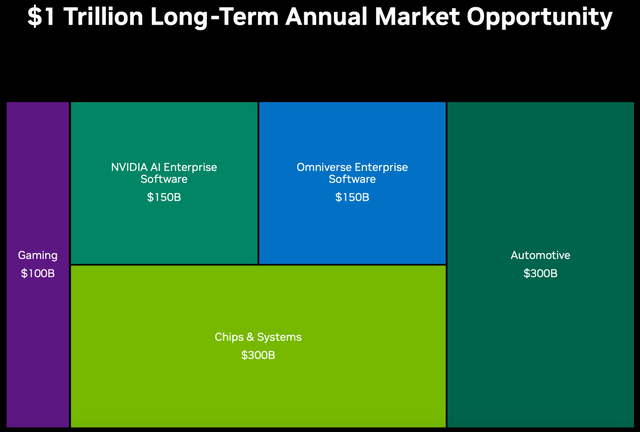

In September 2023, Nvidia projected its total long-term annual market opportunity at $1 trillion but did not specify a target year for this estimate. The company did, however, offer a breakdown of the expected market size for specific segments.

For context, the company is expected to generate over $58 billion in revenue this year, so the $1 trillion figure implies that Nvidia’s top line could grow many times over in the years ahead. How much of this $1 trillion market opportunity Nvidia will actually be able to capture is up for debate.
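As a rough sense of scale, the sketch below divides the stated $1 trillion opportunity by this year’s expected revenue. It treats both figures as annual US dollar amounts and uses $58 billion as the revenue input (the company is expected to generate "over" that, so the true multiple is slightly lower). It says nothing about how much of that market Nvidia will actually capture.

```python
# Back-of-the-envelope: the stated market opportunity as a multiple of this year's revenue.
# Inputs are the approximate figures quoted in the article, in USD billions.
TAM_USD_BN = 1_000.0     # Nvidia's stated long-term annual market opportunity
REVENUE_USD_BN = 58.0    # expected revenue this fiscal year (article says "over $58 billion")


def tam_multiple(tam: float, revenue: float) -> float:
    """Return the market opportunity expressed as a multiple of current revenue."""
    return tam / revenue


multiple = tam_multiple(TAM_USD_BN, REVENUE_USD_BN)
print(f"Stated opportunity is ~{multiple:.1f}x this year's revenue")
```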

Turning specifically to the data center opportunity (Nvidia’s largest segment, which made up 80% of total revenue last quarter), the company’s executives have emphasized that high demand for HGX systems has been a key driver of revenue growth. On the Q3 FY2024 Nvidia earnings call, CFO Colette Kress said:

“The continued ramp of the NVIDIA HGX platform based on our Hopper Tensor Core GPU architecture, along with InfiniBand end-to-end networking drove record revenue of $14.5 billion, up 41% sequentially and up 279% year-on-year.”
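Working backwards from the growth rates in that quote gives the approximate size of the comparison quarters. This is a minimal sketch that treats the $14.5 billion data center figure and both percentages as exact; since they are rounded in the quote, the results are approximate.

```python
def implied_base(current: float, growth_pct: float) -> float:
    """Revenue in the comparison period implied by current revenue and % growth.

    If revenue grew by g%, then current = base * (1 + g/100), so base = current / (1 + g/100).
    """
    return current / (1 + growth_pct / 100)


DATA_CENTER_Q3_FY24_BN = 14.5  # record data center revenue quoted by the CFO, USD billions

prior_quarter = implied_base(DATA_CENTER_Q3_FY24_BN, 41)      # "up 41% sequentially"
year_ago_quarter = implied_base(DATA_CENTER_Q3_FY24_BN, 279)  # "up 279% year-on-year"

print(f"Implied prior quarter: ~${prior_quarter:.1f}B")
print(f"Implied year-ago quarter: ~${year_ago_quarter:.1f}B")
```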

So what is the HGX platform?

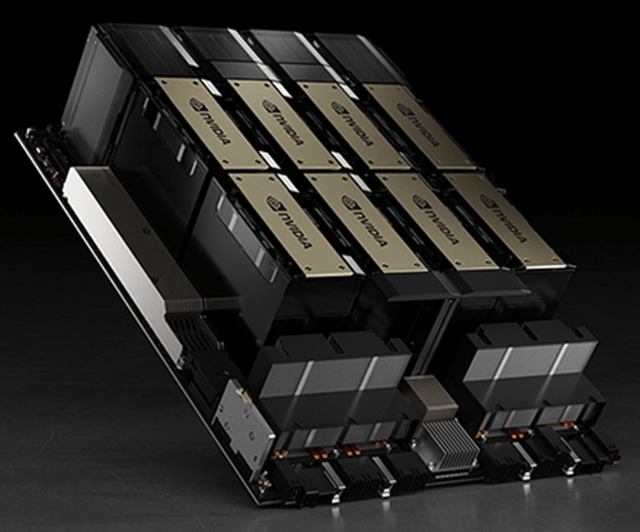

The HGX platform, also known as Nvidia’s AI supercomputer, is built for developing, training, and inferencing generative AI models. It combines four or eight AI GPUs (such as A100s or H100s) using Nvidia’s networking solutions (InfiniBand) and NVLink technology, and also includes the NVIDIA AI Enterprise software platform.

Nvidia HGX supercomputer (Nvidia)

So essentially, instead of data center customers having to purchase various hardware components separately, Nvidia combines all the components needed for AI workloads into a single system. This is a smart sales strategy: Nvidia can cross-sell its other data center products, such as networking equipment, alongside its highly sought-after chips as one big bundled solution.

In fact, on the Q2 FY2024 Nvidia earnings call, Kress noted:

“Strong networking growth was driven primarily by InfiniBand infrastructure to connect HGX GPU systems.”

On the last earnings call, the CFO revealed that “Networking now exceeds a $10 billion annualized revenue run rate”, which means Nvidia generated over $2.5 billion in networking revenue last quarter.
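The run-rate arithmetic is simple but worth making explicit. A minimal sketch, assuming the run rate was calculated as the latest quarter’s revenue multiplied by four (a common convention; the CFO did not spell out the method):

```python
QUARTERS_PER_YEAR = 4


def quarterly_from_run_rate(annualized: float) -> float:
    """Invert an annualized run rate, assuming it equals the latest quarter times four."""
    return annualized / QUARTERS_PER_YEAR


# "Networking now exceeds a $10 billion annualized revenue run rate" (USD billions)
networking_quarter_bn = quarterly_from_run_rate(10.0)
print(f"Implied networking revenue last quarter: over ${networking_quarter_bn:.1f}B")
```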

So, when you hear about Nvidia’s jaw-dropping data center revenue growth rates, remember that these sales growth numbers don’t just reflect the high demand for its GPUs, but also other data center solutions that Nvidia is savvily cross-selling with its AI chips through HGX systems. Nvidia estimates the long-term market opportunity for ‘Chips & Systems’ (which includes HGX systems) to be $300 billion, and this bundled sales strategy enables Nvidia to optimally capitalize on this opportunity.

It is worth noting that data center customers often use a diversified set of suppliers for chips and other hardware, rather than buying all components from a single supplier, in order to avoid vendor lock-in. For instance, a data center customer might purchase both Nvidia and AMD GPUs and then connect them using networking solutions from Broadcom. This prevents any single supplier from gaining too much pricing power and makes it easier to switch suppliers if a better deal emerges elsewhere.

Keeping this in mind, the fact that Nvidia has still been able to persuade data center customers to purchase HGX systems rather than individual chips and networking solutions is a testament to the superiority of Nvidia’s AI solutions. This is what has enabled Nvidia to deliver incredible year-over-year data center revenue growth of 279%, as the HGX system binds the tech giant’s various data center solutions together into one big bundled product.

It essentially allows Nvidia to lock end-customers into its ecosystem, paving the way for the chip giant to cross-sell and upsell more AI solutions going forward, particularly its AI software stacks. As discussed in the previous article, Nvidia’s software services are higher-margin solutions, allowing for profit margin expansion. Nvidia already enjoys strong pricing power for its AI chips, with the H100 selling for $25,000 to $40,000, which is beefing up its bottom line. As software-related sales make up a growing portion of Nvidia’s total revenue, they should widen the tech giant’s profit margins even further. For context, Nvidia’s non-GAAP gross margin expanded from 71.2% to 75% last quarter.

Moreover, as enterprises increasingly use the HGX platform for their AI workloads, more software developers are encouraged to build applications specifically optimized for Nvidia’s AI hardware. This continuously enhances the functionality of Nvidia’s HGX platform and chips, which in turn attracts even more customers to Nvidia’s AI solutions, creating a virtuous network effect. The tech giant projects the annual market opportunity for its NVIDIA AI Enterprise software to reach $150 billion.

Furthermore, as data center customers increasingly buy Nvidia’s complete HGX systems instead of individual chips, it limits how much these customers buy other data center solutions (e.g., networking equipment) from competitors, making it harder for rivals to gain share in the data center market. For context, around 30% of traditional data center customers’ capital expenditure is estimated to go towards Nvidia’s products next year.

Nvidia would want to avoid its GPUs being connected to competitors’ hardware when customers build their own computing systems (as described earlier), as this could compromise or obscure the performance of Nvidia’s chips.

Therefore, increased sales of HGX systems give Nvidia more control over the performance of its chips, since they are integrated with Nvidia’s own adjacent hardware like NVLink and InfiniBand, allowing for optimized performance. This enables Nvidia to better showcase the superiority of its data center solutions working together, as opposed to when its chips are combined with competitors’ hardware such as AMD’s chips and Broadcom’s networking solutions.

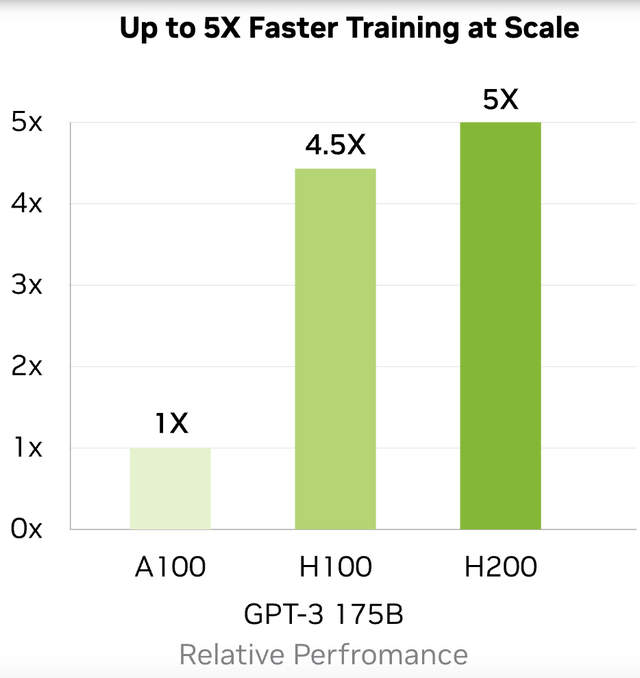

In fact, earlier this week, Nvidia introduced a more advanced AI supercomputing platform, powered by its latest H200 chips, which enables even faster training and inferencing of AI models.

Note: Performance comparisons are relative to Nvidia’s A100 (Nvidia)

The ongoing AI revolution has companies racing to develop, train, and inference their own customized AI models through their cloud providers, and to deploy them across their own products and services as quickly as possible. It therefore comes as no surprise that the leading cloud providers, “Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure will be among the first cloud service providers to deploy H200-based instances starting next year”, in order to meet customers’ needs for faster and more efficient training and inferencing.

Access to Nvidia’s latest AI solutions has become a key competitive factor for data center operators, which need it to avoid losing customers to rivals. This should not only drive sales of the new HGX H200 next year but also enable Nvidia to keep expanding its software ecosystem, supporting further profit margin expansion.

Risks to consider

Data center customers are still diversifying: It is worth noting that despite the high demand for Nvidia’s HGX systems, other competitors selling data center solutions are also witnessing demand growth.

On AMD’s Q3 2023 earnings call, CEO Lisa Su stated:

“Based on the rapid progress we are making with our AI road map execution and purchase commitments from cloud customers, we now expect Data Center GPU revenue to be approximately $400 million in the fourth quarter and exceed $2 billion in 2024 as revenue ramps throughout the year.”

Furthermore, Broadcom’s sales of networking solutions (e.g., switches and routers) grew by 20% year-over-year last quarter, indicating that data centers are also looking to connect hardware from different suppliers in order to diversify away from Nvidia. In a previous article on Broadcom stock, we noted:

“Broadcom designs its networking solutions in a way that work seamlessly with chips from a variety of vendors, to allow for improved performance and scalability, while assisting in data center customers’ diversification strategy.”

So, Broadcom will strive to help customers build their own computing systems that offer performance equivalent to, if not better than, Nvidia’s HGX systems.

Therefore, Nvidia will need to continuously prove that its HGX systems sold as a complete, aggregated solution offer meaningfully superior performance over systems built with a diversified set of components, in order to maintain its market share dominance.

Data center customers are producing their own chips: All three major cloud providers (Amazon’s AWS, Microsoft Azure, and Google Cloud) now have their own AI chips, as they try to reduce their reliance on Nvidia. In fact, in a previous article, we discussed how Amazon’s AWS has already been able to encourage AI start-ups to adopt cloud services powered by its own chips rather than Nvidia GPUs.

Furthermore, while Nvidia is striving to encourage more and more enterprises to deploy their AI applications through the NVIDIA AI Enterprise software platform, cloud providers will be trying to induce greater utilization of their own AI-centric software platforms for application development and deployment, challenging Nvidia’s ability to optimally capitalize on the $150 billion market opportunity it foresees for its AI software solutions.

Now it will be difficult for these cloud providers to completely eliminate their reliance on Nvidia, given the popularity of its CUDA software platform as well as Nvidia’s own DGX Cloud initiatives to keep end-customers tied to the Nvidia ecosystem. Nonetheless, cloud providers’ efforts to migrate more of their customers’ workloads onto their in-house hardware and software solutions do limit Nvidia’s ability to optimally capitalize on the market opportunity ahead.

China chip curbs: The intensifying rivalry between the US and China has resulted in US export restrictions that bar Nvidia from selling its most advanced AI chips to China. While Nvidia has come out with new chips to work around these bans, this is unlikely to stop Chinese customers from exploring domestic alternatives, undermining Nvidia’s long-term sales growth potential in China. Last quarter, sales to China made up 22% of total revenue, so worsening relations between the two nations would have a meaningful impact on Nvidia’s revenue growth prospects going forward.

Nonetheless, the massive AI market opportunity ahead for Nvidia, including the upgrade cycle for data center infrastructure worldwide as well as the software services opportunity, should help offset the revenue lost in China.

Is Nvidia stock a buy?

Despite these risks, rising sales of HGX systems enable Nvidia to aggressively capture and sustain market share by selling its range of data center solutions as a single, aggregated product, optimally capturing the $300 billion market opportunity in ‘Chips & Systems’.

Furthermore, the software layer of the HGX platform, NVIDIA AI Enterprise, encourages customers to stick with the Nvidia ecosystem over the long term. This strongly positions Nvidia to capitalize on the estimated $150 billion AI software opportunity and also increases the likelihood of data centers continuing to upgrade to the latest AI hardware from Nvidia, much as an Apple iPhone user is very likely to upgrade to a more advanced iPhone model thanks to the stickiness of Apple’s services. However, investors should still beware of a slowing pace of GPU and HGX system sales going forward, as various data center customers build their own competing AI solutions in-house.

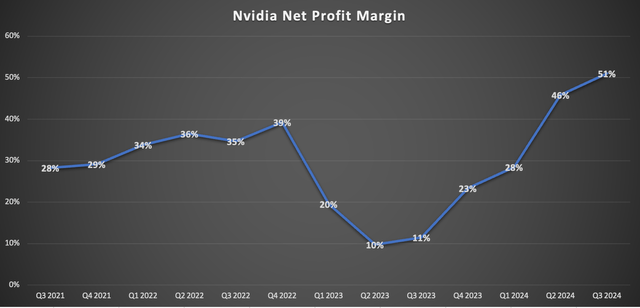

Now based on Nvidia’s long-term market opportunity projections, at least 30% of Nvidia’s long-term revenue is expected to derive from software services, which are higher-margin solutions that help drive profit margin expansion. Last quarter, Nvidia’s GAAP net margin expanded to an astounding 51%.

Nexus, data compiled from company filings

During the Evercore ISI 2023 Semiconductor Conference, CFO Colette Kress shared that:

“Now our overall software that we are selling separately will likely reach near $1 billion this year. So it is scaling. It is scaling quickly. This is key for NVIDIA AI Enterprise. That’s a key part of it. We also have our Omniverse platform. And then long term, you’re also going to see autonomous driving be a key factor of our software revenue as well.”

Nvidia is estimated to generate around $58 billion in total revenue this fiscal year, so $1 billion in software revenue amounts to only a low-single-digit percentage of company-wide revenue. But as Nvidia’s revenue mix shifts towards a 70/30 split between hardware and software, it should bolster Nvidia’s ability to sustain a net profit margin above 50%.
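The revenue-mix arithmetic can be sketched as follows. The inputs are the approximate figures quoted above; the 30% scenario applies the long-term software share to this year’s revenue purely as an illustration of scale, not as a forecast.

```python
TOTAL_REVENUE_BN = 58.0        # approximate total revenue this fiscal year (USD billions)
SOFTWARE_REVENUE_BN = 1.0      # "near $1 billion" of separately sold software (CFO remark)
TARGET_SOFTWARE_SHARE = 0.30   # software share in the long-term 70/30 hardware/software split

# Software as a fraction of total revenue today
share_now = SOFTWARE_REVENUE_BN / TOTAL_REVENUE_BN
print(f"Software share of revenue this year: ~{share_now:.1%}")

# Illustration only: software revenue a 30% share would represent at this year's total revenue
implied_software_bn = TARGET_SOFTWARE_SHARE * TOTAL_REVENUE_BN
print(f"30% of ${TOTAL_REVENUE_BN:.0f}B would be ~${implied_software_bn:.1f}B")
```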

Now in terms of valuation, Nvidia’s forward PE (non-GAAP) currently stands at over 40x fiscal 2024 earnings, and almost 25x fiscal 2025 earnings. For context, Nvidia’s 5-year average forward PE is 44.94x. Hence, the stock is cheap relative to its historical valuation trends.
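To quantify how far the current multiples sit below the 5-year average, a small sketch is below. It uses 40x and 25x as round inputs for "over 40x" and "almost 25x", so the fiscal 2024 discount is slightly overstated and the fiscal 2025 discount slightly understated.

```python
FIVE_YEAR_AVG_FWD_PE = 44.94  # Nvidia's 5-year average forward PE, as cited above


def discount_to_average(current_pe: float, average_pe: float) -> float:
    """Fractional discount of a multiple vs. its average (negative means a premium)."""
    return 1 - current_pe / average_pe


# Round inputs for "over 40x" (FY2024) and "almost 25x" (FY2025)
for label, pe in [("FY2024", 40.0), ("FY2025", 25.0)]:
    discount = discount_to_average(pe, FIVE_YEAR_AVG_FWD_PE)
    print(f"{label}: {pe:.0f}x forward earnings, ~{discount:.0%} below the 5-year average")
```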

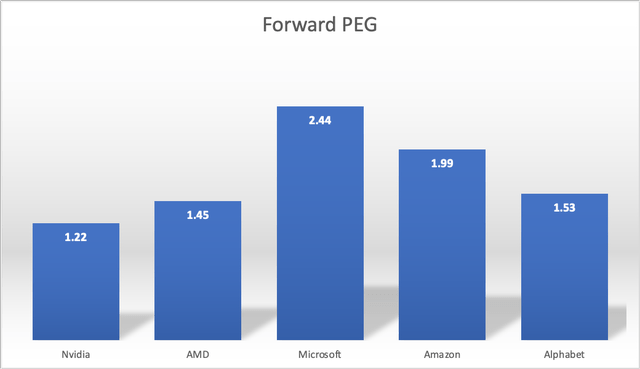

Keep in mind that the forward PE ratio does not take into consideration the rate at which earnings are growing. Hence, Nexus prefers the forward Price-Earnings-Growth (PEG) metric, whereby a company’s forward PE multiple is divided by the projected earnings growth rate. The forward PEG ratio helps investors assess how attractively the stock is valued relative to its projected growth rates, the idea being that companies with faster earnings growth rates deserve to trade at higher forward earnings multiples.

A forward PEG ratio of around 1 is reflective of a fairly valued stock. Unsurprisingly, though, high-quality stocks with promising growth prospects rarely trade at fair value. Instead, the market assigns a premium valuation to such stocks, based on factors such as market share dominance, the competitiveness of their products and services, and balance sheet strength.

Nvidia currently trades at a forward PEG ratio of 1.22, which by this measure means it trades at a 22% premium to fair value. This is cheaper than arch-rival AMD and the major cloud providers, which also stand to win big from the AI revolution.
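The PEG arithmetic from the last few paragraphs can be sketched as below. The forward PE and growth inputs in the first calculation are hypothetical and only illustrate the formula (growth is entered in percent, e.g. 25 for 25%); the 1.22 figure is the one cited above, and "fair value" here simply means the PEG-of-1 convention described earlier, not an intrinsic valuation.

```python
def forward_peg(forward_pe: float, growth_rate_pct: float) -> float:
    """Forward PE divided by projected annual EPS growth, expressed in percent."""
    if growth_rate_pct <= 0:
        raise ValueError("PEG is not meaningful for zero or negative projected growth")
    return forward_pe / growth_rate_pct


def premium_to_fair_peg(peg: float, fair_peg: float = 1.0) -> float:
    """Premium (+) or discount (-) relative to the PEG treated as 'fair' (1.0 by default)."""
    return peg / fair_peg - 1


# Hypothetical stock (not Nvidia): 30x forward earnings, 25% projected EPS growth
example_peg = forward_peg(30.0, 25.0)
print(f"Example forward PEG: {example_peg:.2f}")

# Nvidia's forward PEG as cited in the article
print(f"Premium to a PEG of 1: {premium_to_fair_peg(1.22):.0%}")
```

Rejecting non-positive growth is a design choice: a PEG computed from shrinking earnings flips sign and no longer reads as "cheap" or "expensive".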

Data compiled from Seeking Alpha

Now keep in mind that it is not unusual for high-quality stocks to trade at forward PEG ratios above 2, as they are often perceived as safe-haven investment securities.

The 5-year average forward PEG ratio for Nvidia stock is 2.29, and the market has historically been right to have assigned such a premium valuation to the stock given the proven competitive strength of Nvidia’s products and promising growth prospects.

Now despite the monstrous rally in the stock this year, Nvidia only trades at a forward PEG ratio of 1.22, which is not only cheaper than other AI stocks but considerably below its historical average, leaving ample room for multiple expansion. Over the long term, Nexus believes the stock should trade at a forward PEG ratio that is above 2 (closer to its 5-year average), given Nvidia’s ability to solidify its AI moat through software services.
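To put "ample room for multiple expansion" in numbers, the sketch below assumes projected earnings growth (the PEG denominator) stays unchanged, in which case the forward PE must scale in proportion to the PEG ratio. It is an illustration of the arithmetic only, not a price target; the share price would move by the same factor only if forward EPS estimates also held steady.

```python
def implied_pe_multiplier(current_peg: float, target_peg: float) -> float:
    """Factor by which the forward PE must change to move from current_peg to target_peg,
    assuming the projected growth rate used in the PEG is unchanged."""
    return target_peg / current_peg


CURRENT_PEG = 1.22  # Nvidia's forward PEG as cited above

# Targets: the "above 2" level and the 5-year average of 2.29 mentioned in the article
for label, target in [("PEG of 2", 2.0), ("5-year average PEG", 2.29)]:
    factor = implied_pe_multiplier(CURRENT_PEG, target)
    print(f"{label}: forward PE x{factor:.2f} (~{factor - 1:.0%} higher)")
```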

Nexus maintains a ‘buy’ rating on Nvidia stock.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.