Summary:

- NVIDIA Corporation’s bull case lies in the compelling growth story and strong competitive advantage.

- The bear case lies in the uncertainty of supply and demand, and I detail the factors underlying that uncertainty.

- The path forward is uncertain, but one fact remains: Nvidia is an incredible business and a triumph of American capitalism.


Prelude

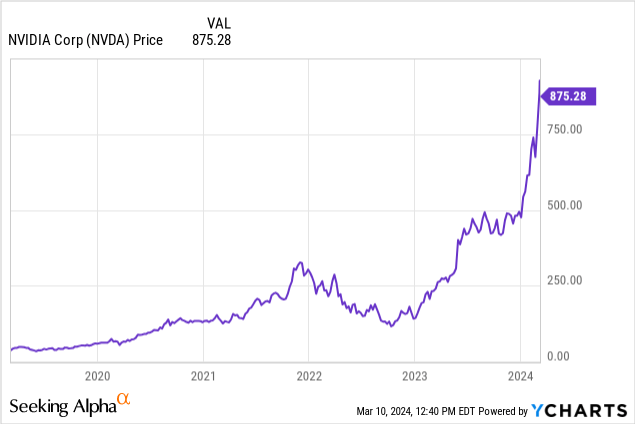

When a stock chart looks like that of NVIDIA Corporation (NASDAQ:NVDA), investors rightfully become wary of purchasing additional shares. In hindsight, the best recent time to buy was mid-2022, but that was before the ChatGPT launch and during a time of intense fear of recession. It was anything but clear at the time.

Many analysts, myself included, didn’t even believe in the first leg of the rally. In one of my worst recommendations since joining Seeking Alpha, I told readers to sell the irrational exuberance in May 2023. Nvidia stock is up 130% since that article.

I made the classic mistake of using backward-looking valuation metrics to analyze a company with mouth-watering growth prospects. By November 2023, I flipped my opinion. I covered NVIDIA Corporation (NVDA) again, this time with a Buy recommendation, and the stock has been up 80% since then.

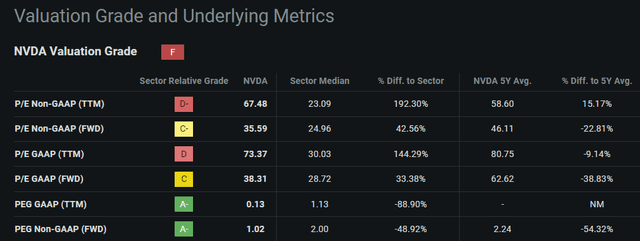

Throughout all of 2023, indeed even now, Nvidia’s growth estimates yield reasonable forward-looking valuation metrics:

Seeking Alpha NVDA Quant Valuation

Paying 35x earnings for a company that grew revenue 126% YoY in its most recent report is reasonable. Nvidia’s Q1 FY 2025 guide of $24b suggests 8.5% QoQ growth, and analysts expect full-year revenue to reach $110.2b in the January 2025 report, suggesting 81% growth from the $60.9b recent mark.
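The growth figures above can be checked with simple arithmetic. A minimal Python sketch follows. Note that the prior-quarter base of $22.1b (Nvidia's reported Q4 FY 2024 revenue) is not stated in this article and is supplied here as an assumption, and `pct_growth` is a hypothetical helper name.

```python
def pct_growth(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new / old - 1.0) * 100.0

# Q1 FY 2025 guidance of $24b vs. an assumed Q4 FY 2024 base of $22.1b
qoq = pct_growth(24.0, 22.1)
# Consensus FY 2025 revenue of $110.2b vs. FY 2024 revenue of $60.9b
yoy = pct_growth(110.2, 60.9)

print(f"QoQ growth: {qoq:.1f}%")
print(f"Full-year growth: {yoy:.1f}%")
```

The quarterly result lands close to the roughly 8.5% cited above, and the full-year result rounds to the 81% figure.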

By 2027, 20 analysts expect Nvidia’s revenues to reach $150b, which would represent a 35% CAGR from the recent report.

Seeking Alpha NVDA Earnings Tab
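The 35% CAGR cited above follows from the standard compound annual growth rate formula. The sketch below assumes the $150b estimate refers to fiscal 2027, three fiscal years after the $60.9b fiscal 2024 figure; `cagr` is an illustrative helper, not a Seeking Alpha tool.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate as a fraction: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1.0 / years) - 1.0

# FY 2024 revenue of $60.9b growing to an estimated $150b, assumed over 3 fiscal years
rate = cagr(60.9, 150.0, 3)
print(f"Implied CAGR: {rate:.1%}")

# Rolling the base forward at that rate recovers the $150b estimate
print(f"Check: {60.9 * (1 + rate) ** 3:.1f}b")
```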

Nvidia’s growth story is compelling, but the investment case remains difficult. Parabolic price action is frightening to buy into, but forward-looking valuations make the current price look entirely reasonable.

In this article, I’d like to weigh the factors underlying Nvidia’s growth outlook and present bear and bull cases for investing in Nvidia at current prices.

Since I’ll be taking both sides of the argument here, I’m rating Nvidia a Hold. For full transparency, I have been cost-averaging into Nvidia since mid-2023 and neither plan to sell nor stop accumulating shares. I believe Nvidia is one of the world’s best businesses, and the market is largely pricing that sentiment in.

With that, let’s dive into the bull and bear cases for Nvidia stock.

The Bull Case

The bull case is quite simple: growth. Using the information I detailed above, some would even classify Nvidia as a growth at a reasonable price (GARP) play.

Nvidia offers industry-leading AI solutions. Artificial intelligence has taken the world by storm since the late 2022 release of ChatGPT, and major cloud service providers and enterprises alike are scrambling to procure enough GPUs or GPU capacity to commercialize AI at scale. This scrambling has benefitted no one more than Nvidia, which has a deep competitive advantage in AI solutions.

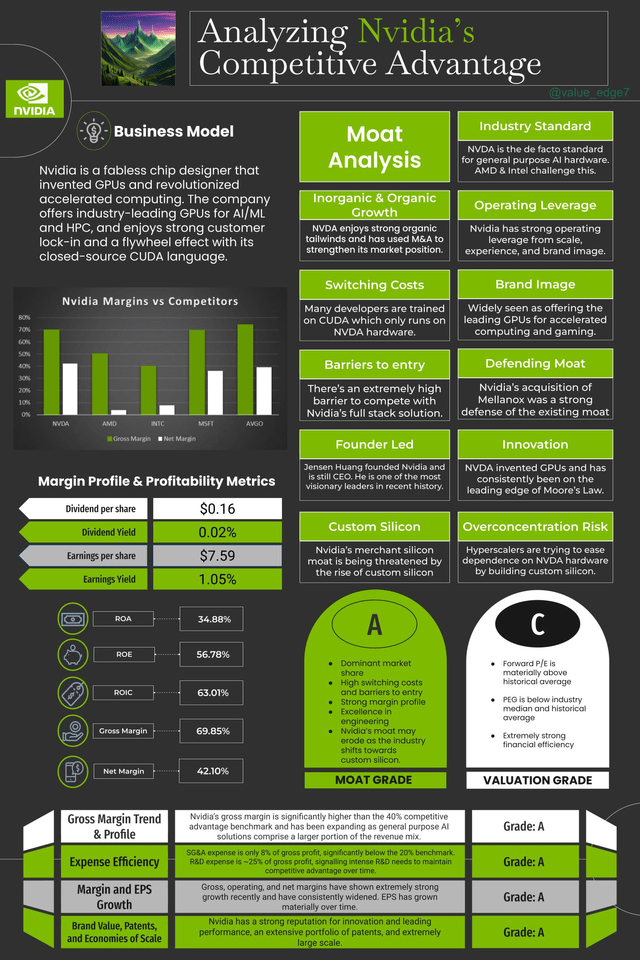

Bull Case #1: Nvidia’s Full Stack Moat

Nvidia’s deep competitive advantage in AI applications has made its full-stack solution the default option. Financial results show this: there isn’t a single company benefitting from AI spend as much as Nvidia. Nvidia’s GPU programming software, CUDA, which can only run on Nvidia hardware, has the most robust developer ecosystem and touts the most extensive set of AI libraries in the industry. The only serious competitor to this is Advanced Micro Devices, Inc. (AMD), with its MI300X accelerator and open-source ROCm programming software.

GPU programming allows engineers to optimize chip performance for their specific use cases. The workhorse of Nvidia GPUs is the Streaming Multiprocessor (SM). CUDA does a few important things: 1) it gives programmers control over the memory hierarchy, such as fast on-chip shared memory, which cuts down on slow reads from main GPU memory and decreases memory latency; 2) it oversubscribes SMs, assigning more threads than can run at once so that while some threads wait on memory, others keep computing, which hides memory latency; and 3) it divides work into parallel thread blocks and assigns these blocks to SMs, so the GPU can perform tasks in parallel and keep its memory bandwidth fully used. I discuss this in more detail in a recent post on X.
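To make the block-and-SM idea concrete, here is a toy Python model (not real CUDA code) of how a CUDA-style launch splits a vector addition into thread blocks. The global index formula mirrors CUDA's `blockIdx.x * blockDim.x + threadIdx.x`; the round-robin block-to-SM scheduler, the SM count, and the function names are simplifying assumptions, since real hardware assigns blocks to SMs dynamically and runs threads concurrently rather than in a loop.

```python
import math

def launch_config(n_elements, threads_per_block=256):
    """Number of blocks needed to cover n_elements, as in a CUDA grid launch."""
    blocks = math.ceil(n_elements / threads_per_block)
    return blocks, threads_per_block

def vector_add(a, b, threads_per_block=256, num_sms=4):
    """Sequentially simulate a CUDA-style vector add; returns result and block-to-SM map."""
    n = len(a)
    out = [0.0] * n
    blocks, tpb = launch_config(n, threads_per_block)

    # Toy scheduler: deal blocks out to SMs round-robin (hardware does this dynamically).
    sm_blocks = {sm: [] for sm in range(num_sms)}
    for block_idx in range(blocks):
        sm_blocks[block_idx % num_sms].append(block_idx)

    # Each SM runs its blocks; on a real GPU, threads within a block run in parallel.
    for block_list in sm_blocks.values():
        for block_idx in block_list:
            for thread_idx in range(tpb):
                i = block_idx * tpb + thread_idx  # CUDA: blockIdx.x * blockDim.x + threadIdx.x
                if i < n:  # bounds guard: the last block may be only partially used
                    out[i] = a[i] + b[i]
    return out, sm_blocks

result, mapping = vector_add([1.0] * 3000, [2.0] * 3000)
print(len(result), mapping)
```

With 3,000 elements and 256 threads per block, the launch needs 12 blocks; the final 72 threads of the last block fall past the end of the array, which is why the bounds check is there.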

Nvidia released CUDA in 2007, nearly a decade before AMD launched ROCm, and as a result has by far the best ecosystem. The Mellanox acquisition gave Nvidia industry-leading InfiniBand and Ethernet networking capabilities, rounding out the full-stack hardware-software-networking AI accelerator platform. Nvidia’s stack is unmatched and is the reason why the H100 has become the industry-standard chip for AI applications.

I put together this graphic as a resource:

This isn’t the entire bull case, though. Nvidia’s true bull case is one of supply-demand rebalancing.

Bull Case #2: Supply-Demand Equilibrium

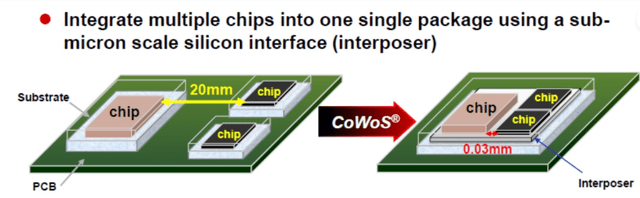

Throughout all of 2023, Nvidia’s growth was hamstrung by a supply crunch. The source of the supply crunch was the advanced packaging method used for H100s.

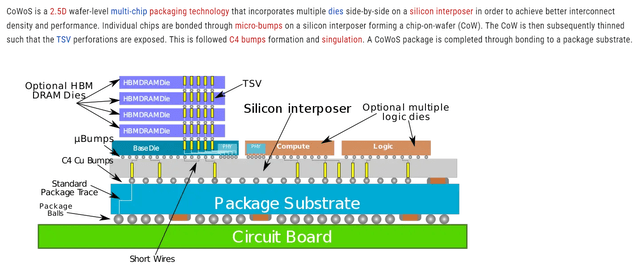

H100s use the “CoWoS” packaging design from Taiwan Semiconductor Manufacturing Company Limited (TSM), aka TSMC. This is “chip-on-wafer-on-substrate.” It’s a packaging method designed to enhance chip performance without necessarily increasing transistor density.

As the name suggests, this involves placing multiple silicon chips side by side atop a silicon wafer that serves as an interposer. This first layer allows for easier interconnection of individual chips through the silicon wafer, enhancing performance on the same die size.

Then, the chip-on-wafer assembly is placed atop the substrate material.

High bandwidth memory (HBM) was another reason that CoWoS packaging was necessary. Building HBM involves stacking multiple DRAM (dynamic random access memory) chips atop each other, drilling holes in them called TSVs (through-silicon vias), and connecting the chips together by running interconnects through the TSVs.

So, logic and memory chips are placed together on a wafer. Numerous interconnects may be built into this wafer to allow for chip-to-chip communication. Then, this CoW structure is placed atop a packaging substrate, which is connected through solder balls to the printed circuit board (PCB).

CoWoS packaging allows Nvidia to pack way more performance into the same die size without shrinking transistor size or increasing transistor density.

The tradeoff here is process complexity, and therefore production capacity. TSMC was terribly underprepared for the demand shock caused by ChatGPT, and Nvidia simply couldn’t buy enough chips to meet demand. This supply imbalance is still felt – CFO Colette Kress said the company expects B100, the next-generation AI accelerator, to be supply constrained as well. There is simply too much demand.

The supply crunch did two things: 1) gave Nvidia extremely strong pricing power with H100s; and 2) hamstrung Nvidia’s growth in 2023. Yes, without supply constraints Nvidia would have sold way more GPUs in 2023.

TSMC has ramped its CoWoS production capacity up significantly since early 2023, and Nvidia will enjoy more balanced supply/demand moving forward. This could lead to prices coming down, but given the intense demand, increased unit sales will more than make up for this. Indeed, supply meeting demand may lead to Nvidia beating the already ludicrous growth expectations.
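The tradeoff between falling prices and rising unit sales comes down to revenue = price × units. A minimal sketch, using a hypothetical 20% price cut rather than any Nvidia figure, computes how much unit growth is needed just to keep revenue flat:

```python
def breakeven_unit_growth(price_cut: float) -> float:
    """Fractional unit growth needed to keep revenue flat after a fractional price cut."""
    if not 0.0 <= price_cut < 1.0:
        raise ValueError("price_cut must be in [0, 1)")
    # revenue = price * units, so (1 - cut) * (1 + growth) = 1
    return 1.0 / (1.0 - price_cut) - 1.0

def revenue_change(price_cut: float, unit_growth: float) -> float:
    """Fractional revenue change for a given price cut and unit growth."""
    return (1.0 - price_cut) * (1.0 + unit_growth) - 1.0

# Hypothetical scenario: average selling price falls 20%
needed = breakeven_unit_growth(0.20)
print(f"Units must grow {needed:.0%} to offset a 20% price cut")
print(f"Revenue change if units grow 50%: {revenue_change(0.20, 0.50):+.0%}")
```

Any unit growth above the break-even level adds to revenue, which is the mechanism behind the argument that higher volumes can outweigh lower prices.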

The next question, then, is how sustainable is this demand? The semiconductor ecosystem is very cyclical, and AI demand will likely follow the same pattern. ASML Holding N.V. (ASML) CEO Peter Wennink stated in the recent earnings call that the industry is emerging from a long trough that began during the COVID-19 supply shock. He believes 2025 will be a year of strong growth for ASML, and that growth would ripple throughout the entire ecosystem. More ASML machines mean more production capacity for Nvidia chips, which means more unit sales. Thus, Nvidia’s growth estimates do look reasonable for fiscal years 2025 and 2026.

Finally, the sustainability of AI demand may be shielded from some of the most severe cyclical troughs because of the scaling law. Scaling laws state that AI model performance improves predictably as compute capacity increases, without any change to the underlying engineering.

In other words, even without improvements in model engineering, if companies just supply their models with more compute then performance will improve.
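Empirical scaling laws are usually written as power laws, in which a model's loss falls as a fixed power of compute. The sketch below uses made-up constants (`A` and `ALPHA` are hypothetical, not fitted to any real model) purely to show the shape of the relationship: each doubling of compute cuts loss by the same factor, with no change to the model design.

```python
# Hypothetical power-law constants for illustration only; not fitted to any real model.
A = 10.0
ALPHA = 0.05

def loss(compute: float) -> float:
    """Toy scaling law: loss = A * compute ** (-ALPHA); lower loss means better performance."""
    return A * compute ** (-ALPHA)

for c in [1e3, 2e3, 4e3, 8e3]:
    print(f"compute={c:>8.0f}  loss={loss(c):.4f}")

# Every doubling of compute multiplies loss by the same factor, 2 ** -ALPHA
print(f"loss ratio per doubling: {2 ** -ALPHA:.4f}")
```

In this toy form, each extra doubling buys a smaller absolute improvement, but the improvement never stops, which is the intuition behind the incentive to keep adding compute.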

This does two things to demand: 1) it gives all hyperscalers the incentive to buy as many GPUs as possible or else face the risk of being left behind, and 2) as more compute-intensive models get released (GPT-5, Sora), even more compute capacity will be required to reap further scaling benefits.

Indeed, scaling laws present a very compelling virtuous cycle for AI hardware demand.

As businesses try to distribute AI at scale, like Adobe Inc. (ADBE) with Firefly and Microsoft Corporation (MSFT) with Copilot, inference demand will continue increasing.

Looking out into the uncertain future, demand for AI hardware truly seems limitless right now. As long as this demand remains robust, Nvidia will be a good investment. Growth and innovation will continue, vendor lock-in will become more severe, and Nvidia’s flywheel effect will become stronger.

Robust demand underpins the bullish assumption that growth estimates are achievable, and therefore the current valuation is reasonable.

What if demand falls off a cliff, though? Worse yet, what if we have another supply shock?

Herein lies the bear case for Nvidia: supply and demand uncertainty.

The Bear Case

After the initial release of ChatGPT in late 2022, a few important things happened. First, almost everyone who used it, myself included, was awe-struck. You input a prompt in human language, and a computer talks back to you with detailed, mostly accurate responses. It seemed like magic at first. Usage exploded, and the compute required to run it skyrocketed.

Bear Case #1: Inverse Scaling Law Eroding Consumer Demand

Over time, many frequent users became disgruntled. ChatGPT got lazy: you would feed it a long document, and it would only scan the first few pages. Ask it to check a code block, and it would correct a few lines and then tell you to do the rest. If it had trouble analyzing data, it would just return an error.

Many people began speculating that OpenAI was intentionally throttling model performance, and eventually, evidence emerged suggesting this may have been the case. Why? They didn’t have enough compute to run inference at scale. They were suffering from the flip side of the scaling law: not enough compute decreased model performance.

They limited usage and made GPT-4 less effective. This illustrates quite nicely the truth of the scaling law, but also highlights the key risk in commercializing AI at scale. Can our compute capacity keep up with inference demand?

On the surface, this is a demand tailwind for Nvidia. Cloud service providers (CSPs) and AI companies will seemingly always need more compute. Over time, though, this could shift consumer sentiment toward broadly viewing AI as not useful enough. If Microsoft can’t sell enough Copilot subscriptions, the economics of stockpiling GPUs won’t make as much sense. They could scale back hardware purchases, and Nvidia could face demand headwinds or inventory write-downs.

While I do believe this is a possible scenario, I don’t think it is likely to be a major headwind. Even if consumer demand falters, enterprise demand will be robust. Enterprises have a strong incentive to use AI to decrease costs; Klarna, for example, recently announced that its AI assistant handled two-thirds of customer service chats in its first month. Therefore, I do not believe the bear case of weak consumer demand will hold Nvidia back in the long run.

Bear Case #2: Wariness of Vendor Lock-In

I recently wrote an earnings review on Broadcom. In this report, I touched on Broadcom’s custom silicon business and why it’s such a tailwind. In this context, Nvidia is a merchant silicon provider. Merchant silicon is general-purpose hardware. Sometimes, Nvidia GPUs are referred to as “GPGPUs,” or “General Purpose GPUs.” They are built to be the do-everything AI accelerator. H100s are one size fits all. This is why CUDA is so important: it allows for optimization of merchant silicon.

Over time, though, big tech companies needed still more compute power. This led to a shift away from merchant silicon toward custom silicon. Since 2009, Broadcom’s custom silicon division has grown operating profits by more than 177x. Broadcom’s major customers are Alphabet Inc. (GOOG), (GOOGL) with its TPUs, and Meta Platforms, Inc. (META) with its MTIA chip. Increasingly complex software applications require an offload of tasks from merchant silicon to custom silicon. Custom silicon is purpose-built and application-optimized: it’s built to do one thing really well and much more efficiently than a GPGPU could.

This threat is real, and Nvidia recognizes it: the company is itself venturing into the custom silicon business. The key selling point of a custom silicon provider is its IP portfolio, design expertise, and relationships with foundries. Nvidia fits the bill exceedingly well and is likely to become a major player in custom silicon.

However, there’s a growing narrative that CSPs and other data center operators are becoming wary of vendor lock-in with Nvidia. If Nvidia controls the full stack of AI solutions, big CSPs will lose negotiating power and could become price takers over time.

This has led many CSPs to opt for the custom silicon route: Google and Meta work with Broadcom; Microsoft is building its custom Cobalt and Maia chips; Apple Inc.’s (AAPL) “M” and “A” series chips have been custom for years; and Amazon.com, Inc. (AMZN) released custom chips dubbed Trainium and Inferentia.

It’s clear that demand for custom silicon solutions is increasing, partly because of inherent GPGPU limitations but also from fear of Nvidia gaining too much negotiation power. Nvidia’s custom silicon business could end up being a bust for this reason.

Regardless, the data center of the future will have a combination of merchant and custom silicon. Nvidia’s demand will remain strong because H100s are basically indispensable for commercializing AI at scale.

Nvidia’s long-run demand roadmap is safe. It has a strong competitive advantage, and custom silicon won’t outright replace merchant silicon. Therefore, I don’t believe these bear cases are very compelling yet, but they are worth monitoring. The final bear case, a supply shock, is the most compelling.

Bear Case #3: Supply Shock

We’ve talked in detail about Nvidia’s robust demand, why it exists, and why it’s sustainable. We’ve also talked about Nvidia’s competitive advantage and how a supply/demand imbalance kept Nvidia’s sales contained throughout 2023.

The black swan that isn’t priced in is a conflict between China and Taiwan. While all analysts recognize the threat, it’s impossible to predict what will happen.

One reason China is so intent on taking Taiwan is its chip manufacturing capability. China has decent domestic chip manufacturing capabilities but has been cut out of the EUV supply chain by the U.S. government. ASML received pressure from both the Trump and Biden administrations to restrict China from the EUV lithography supply chain. ASML hasn’t shipped any EUV machines to China, cutting China off from the single supplier of the only machine capable of producing leading-edge chips. EUV lithography is indispensable for leading-edge production with economical yield.

For a brief period, China still seemed to be advancing well. The Mate 60 Pro, Huawei’s competitor to the iPhone 14, has a 7 nm chip. I discuss this in detail in an article on Apple. While the processor in the Mate 60 Pro was still not equivalent to the A16 Bionic chip in the iPhone 14 Pro, built on TSMC’s N4 process (part of its 5 nm family), it surprised many pundits. It exposed a failure of U.S. sanctions meant to prevent China from producing at such an advanced process node.

On March 8th, 2024, Fortune reported that SMIC, the Chinese foundry that produced the chip, used American wafer fab equipment (WFE) from Applied Materials, Inc. (AMAT) and KLA Corporation (KLAC), technology that has likely since been sanctioned. It’s unlikely that China will be able to procure future generations of WFE machines from AMAT and KLAC. What was once a triumph of Chinese manufacturing independence has now become an embarrassing example of dependence on sanctioned American technology.

I doubt Beijing is happy about this Fortune article. In fact, I believe this increases the likelihood of geopolitical conflict. If Taiwan is placed into the worst-case scenario, a Chinese invasion, chip exports will almost certainly decrease precipitously.

Nvidia is entirely dependent on TSMC for its leading-edge GPUs and thus, this is the key risk Nvidia faces. Any impact on TSMC’s production will impact Nvidia in a major way.

This risk is mostly short-term. Although the world is dependent on Taiwan today, many governments are subsidizing domestic industry in pursuit of on-shored supply chains. For example, Intel Corporation (INTC) has announced several manufacturing expansions and was the first to order ASML’s next-generation lithography machine, dubbed High-NA EUV. Long-term industry trends favor Nvidia’s continued growth despite significant short-term risks with Taiwan.

Make no mistake, though: this risk should be taken seriously. Even increasingly combative rhetoric between the U.S. and China could flip sentiment on Nvidia. If sentiment wavers, the stock could experience a severe multiple contraction.

This multiple contraction would present a very compelling buying opportunity, though. Nvidia’s dominance is dependent on Taiwan today, but this dependence is easing. All fabless chip designers are acutely aware of this risk and will continue to work on diversifying their supply chains. Intel could be the largest beneficiary of this and is undergoing a sweeping transformation in its own right.

Conclusion

Nvidia’s story is a triumph of American capitalism. The company embarked on a risky and uncertain journey to build the best accelerated computing platform, long before there was any meaningful demand for accelerated computing. Clearly, it has been abundantly rewarded for this risk, and the technology it has built is becoming the most critical component of the AI revolution sweeping the globe. NVIDIA Corporation is a wonderful business that I intend to keep owning until the valuation gets far more overstretched or the growth thesis falls apart.

This was my longest article to date on Seeking Alpha. If you made it this far, I sincerely appreciate your attention. If you agree or disagree with anything, or feel that I’ve missed some critical considerations, let’s discuss it in the comments. If I earned a follow, I really appreciate the support. Thanks for reading!

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.