Summary:

- Nvidia’s Q2 2025 earnings beat estimates, but the stock dipped due to perceived slower growth; however, absolute revenue growth remains robust and impressive.

- AI is a generational opportunity, and Nvidia’s dominance in data center GPUs positions it well for long-term growth despite increased competition.

- The shift to liquid cooling in data centers will drive further demand for Nvidia’s Blackwell products, supporting sustained revenue growth.

- Nvidia’s valuation is attractive for long-term investors, with strong financials, ongoing AI advancements, and significant future growth potential.


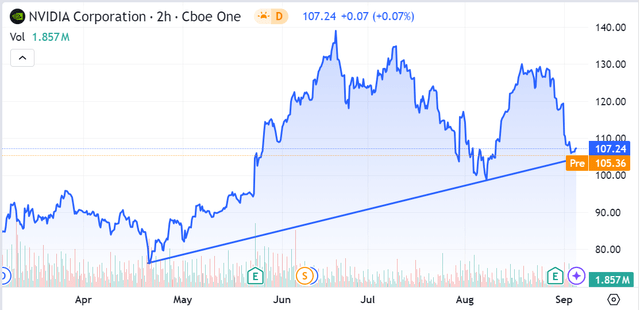

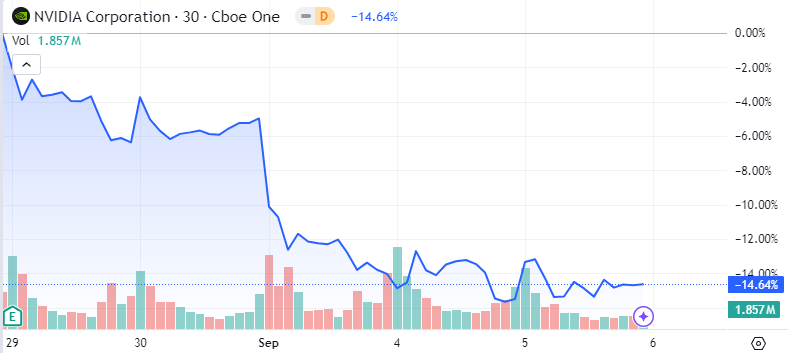

Nvidia Corp’s (NASDAQ:NVDA) double-digit percentage dip after Q2 2025 earnings were announced is an understandable one. Despite beating on both lines and more than doubling its quarterly revenues, the company’s topline performance – and, in particular, forward guidance – clearly failed to meet investor expectations.


The problem, as I see it, is not that Nvidia didn’t put up solid numbers for the quarter. On the contrary, the company posted a near-102% topline growth rate between Q2 2023 and Q2 2024, and then reported an even higher rate of 122% between Q2 2024 and Q2 2025, beating analyst estimates by nearly $1.3 billion. At the bottom line, too, the company reported adjusted earnings per share of 68 cents and beat estimates by 4 cents.

Those aren’t really numbers that would deserve a beat-down of the stock, so what’s going on? Some analysts are saying that “The size of the beat this time was much smaller than we’ve been seeing.” The chief argument seems to be that the company posted growth rates in the range of +200% over the past three quarters (on a YoY basis) but only showed a 122% increase in Q2.

I find that argument to be faulty on many levels. Q2 2024 revenues grew by 102%, coming in at $13.5 billion and up from $6.7 billion in the year-ago quarter, so Nvidia is effectively showing much stronger growth this year with that 122% topline growth rate. We need to keep in mind that FY 2023 wasn’t a great year for Nvidia. Revenue growth was flat from the year-ago period, barely $60 million higher. Indeed, Q4 2023 revenues of $6.05 billion were 21% down from Q4 2022. Go back one more quarter, and we see Q3 2023 revenues of $5.93 billion were down 17% from Q3 2022.

So, we had a year of flat growth in FY 2023, and the revenue surge hadn’t yet begun. In Q1 2024, Nvidia posted a -13% growth rate at the top at $7.19 billion, and it was not until Q2 2024 that the company started hitting positive growth rates – and in triple digits, no less. That’s what Q2 2025 is comping against, and I don’t see that as a growth rate decline; rather, I see it as the company having added nearly $7 billion to the top line between Q2 2023 and Q2 2024 for a 102% growth rate, but then adding $16.5 billion on top of that between Q2 2024 and Q2 2025. To me, it seems like the market (as well as analysts) is focusing too much on the percentage increase rather than the sheer dollar increase, which remains nothing less than superb. Adding $16.5 billion is definitely much harder than adding $7 billion, and many investors who have sold off since the latest earnings report seem to have missed that nuance.
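The percentage-versus-dollar point can be checked with a quick calculation. This is a minimal sketch using the rounded revenue figures quoted in the text; the Q2 2025 value of $30.0 billion is my own derivation ($13.5 billion plus the $16.5 billion increase), and the helper name `yoy_change` is purely illustrative.

```python
# Quarterly revenue in $ billions, rounded as quoted in the article.
# Q2 2025 is derived here as 13.5 + 16.5 (an assumption, not a reported figure).
revenue = {"Q2 2023": 6.7, "Q2 2024": 13.5, "Q2 2025": 30.0}


def yoy_change(prior: float, current: float) -> tuple[float, float]:
    """Return (absolute change in $B, percentage change) between two periods."""
    delta = current - prior
    return delta, delta / prior * 100


quarters = list(revenue)
for prev, curr in zip(quarters, quarters[1:]):
    delta, pct = yoy_change(revenue[prev], revenue[curr])
    print(f"{prev} -> {curr}: +${delta:.1f}B ({pct:.1f}%)")
```

With these rounded inputs, the second step shows both a larger dollar increase and a larger percentage increase than the first, which is the comparison the argument rests on. Exact percentages will differ slightly from the reported ones because the inputs are rounded.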

Moving to the Q3 guidance figure of $32.5 billion at the top, that equates to adding about $14.5 billion compared to the year-ago quarter. Again, the market seems to obsess about the figure ‘only’ representing an 80% growth rate, while the dollar value isn’t being considered. Sure, you could look at that as a drop in growth rate, but we also need to remember that Nvidia’s annualized revenue run-rate is now at $120 billion. It’s not easy to keep adding the same percentage increases at that scale, but that doesn’t stop Nvidia from adding real dollar volumes in a stable and sustainable manner.
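For readers who want to see how the guidance figures hang together, here is a rough sketch. It assumes the run-rate is simply the latest quarter multiplied by four, and it takes the latest quarter as $30.0 billion (derived from the $13.5 billion and $16.5 billion figures quoted earlier in the article). All variable names are illustrative.

```python
# All values in $ billions.
GUIDANCE_Q3 = 32.5     # Q3 revenue guidance quoted in the article
DOLLAR_ADD = 14.5      # approximate YoY dollar increase quoted in the article
LATEST_QUARTER = 30.0  # assumed Q2 2025 revenue (13.5 + 16.5)

# Year-ago quarter implied by the guidance and the quoted dollar increase.
implied_year_ago = GUIDANCE_Q3 - DOLLAR_ADD

# Growth rate implied by those two numbers.
implied_growth_pct = DOLLAR_ADD / implied_year_ago * 100

# Simple annualization: latest quarter times four (an assumption).
annualized_run_rate = LATEST_QUARTER * 4

print(f"Implied year-ago revenue: ${implied_year_ago:.1f}B")
print(f"Implied YoY growth: {implied_growth_pct:.1f}%")
print(f"Annualized run-rate: ${annualized_run_rate:.0f}B")
```

The implied growth rate lands close to the 80% figure the market is focused on, while the annualized figure matches the $120 billion run-rate cited above.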

In short, while I’ll concede that the apparently slower growth ahead is a disappointment to investors who were expecting the +200% cadence to continue, I don’t believe it calls for a sell-off of this magnitude.

As such, I think NVDA represents a compelling investment case even now. The correction is simply an opportunity for long-term investors to lower their cost basis. Growth is far from over, and that’s what I plan to dive into with the rest of this article. My rating remains a Strong Buy after Q2 2025 earnings were released a little over a week ago.

And this is why…

No, AI is Not Dead, Not a Fad, and Not a Bubble

One of the key concepts that investors need to understand is that Artificial Intelligence, or AI, is not a flash-in-the-pan technological development. It is a generational opportunity that has gained momentum over the past two years, but behind it are decades of experimentation, research, development, testing, and learning.

One of the enablers of AI proliferation in recent years has been the ability to run these workloads on the cloud and leverage the power of scalability. More specific to my NVDA thesis, it is the growth in data center compute power that has altered the AI landscape and made it fertile enough for various industries to benefit from significantly.

This is exactly where NVDA has a near-insurmountable lead over the competition – whether that’s coming from Advanced Micro Devices (AMD) or any other chipmaker competing in the data center GPU space. In 2023, NVDA shipped 3.76 million units for a mind-numbing 98% total shipment market share against a combined 90,000 units for AMD and Intel (INTC). Of course, that’s when the data center GPU game was starving for competition, so with AMD aggressively pushing its MI300 agenda these past few quarters and looking at a Q4 release for the MI325 accelerator platform, that market share is likely to see some erosion as we navigate the final leg of H2 2024.
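The share figure follows directly from the unit counts. A minimal sketch, assuming the total market consists only of the three vendors named above (the variable names are illustrative):

```python
# 2023 data center GPU unit shipments, as quoted in the article.
nvidia_units = 3_760_000
amd_intel_units = 90_000  # combined AMD and Intel

# Assumption: no other vendors are counted in the total.
total_units = nvidia_units + amd_intel_units
share_pct = nvidia_units / total_units * 100

print(f"Nvidia shipment share: {share_pct:.1f}%")
```

The result rounds to the 98% share cited in the text.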

That should actually be excellent news for Nvidia because it will keep management on its toes. I don’t see it as a threat as much as an expansion of offerings in the space that addresses a range of price-point and performance requirements.

My point, though, is that GPU shipments are likely to keep accelerating, and there are several indicators that point to this conclusion.

Strong Demand Continues for Current-Gen Hopper Products

As of Q2 2025, data center revenues topped $26.3 billion for a +150% increase YoY, and what I liked even more was the fact that customer concentration is spread out almost evenly between the hyperscale clients and other major customer cohorts – 45% and 50%, respectively. That’s a nugget many investors might be missing because it implies that demand is spreading beyond cloud service providers and into on-prem and hybrid deployments. I expect to see that spread widening in favor of non-CSPs as we progress through FY 2025. This shift from a primarily CSP-heavy revenue base to a more expanded customer cohort mix isn’t sudden. Indeed, management clued us in on this in the Q1 2025 press release from May 22 this year:

Beyond cloud service providers, generative AI has expanded to consumer internet companies, and enterprise, sovereign AI, automotive and healthcare customers, creating multiple multibillion-dollar vertical markets.

It also follows, logically, that since Blackwell products weren’t widely available as of Q2 and likely won’t be in Q3, the bulk of revenue growth is still coming from the Hopper series of products, particularly the H200 platform that stepped up shipments in Q2. On that front, management had this to say:

NVIDIA H200 platform began ramping in Q2, shipping to large CSPs, consumer Internet, and enterprise company. The NVIDIA H200 builds upon the strength of our Hopper architecture and offering over 40% more memory bandwidth compared to the H100.

So, we should be seeing an increase in H200 shipments in Q3, which should tide the top line over nicely until Blackwell starts to pick up on shipments later this year. The company still expects a strong Q4 rollout for Blackwell, and the rumored delay is very likely linked to the improvements that were implemented to enhance Blackwell’s production yields, in my opinion. These points were all validated (to a degree) by management:

We executed a change to the Blackwell GPU mask to improve production yields. Blackwell production ramp is scheduled to begin in the fourth quarter and continue into fiscal year ’26. In Q4, we expect to get several billion dollars in Blackwell revenue. Hopper shipments are expected to increase in the second half of fiscal 2025.

So, what we’re looking at for the two quarters ahead is a sharp increase in Hopper shipments and revenues for both Q3 and Q4, with a strong expectation of Q4 revenues getting a further boost from Blackwell sales.

As such, I’m not really convinced by arguments that point to a gradual revenue decline for Nvidia. Yes, at this scale, growth on a percentage basis will naturally slow down, but from an absolute dollar value perspective, adding $14.5 billion in quarterly revenues on a YoY basis doesn’t reflect a company that’s seeing any kind of material decline in its largest segment.

That’s the first point of validation for my ‘AI is not dead’ claim. The second one, which I discuss in the next section, is even more interesting.

The Shift to Liquid Cooling

Although Nvidia does offer air-cooled Blackwell compute platforms – B100 and B200 – with the HGX form factor, the Grace Blackwell accelerator needs liquid cooling, and data centers are already ramping up their infrastructure spending to include liquid cooling systems. There’s clear evidence of that from multiple quarters.

Vertiv Holdings (VRT), for example, is collaborating with Nvidia “to build state-of-the-art liquid cooling solutions for next-gen NVIDIA accelerated data centres powered by GB200 NVL72 systems”. In its Q2 earnings presentation, Vertiv reported a $7 billion backlog that’s up nearly 50% on a YoY basis and 11% on a sequential basis (from the $6.3 billion backlog reported at Q1 end).
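The backlog growth rates can be sanity-checked from the two backlog figures given. In this sketch, the year-ago backlog is not a reported number; it is backed out from the "nearly 50%" figure and should be read as a rough approximation only.

```python
# Vertiv backlog in $ billions, as quoted in the article.
backlog_q2 = 7.0
backlog_q1 = 6.3

# Sequential growth computed from the two quoted figures.
sequential_growth_pct = (backlog_q2 - backlog_q1) / backlog_q1 * 100

# Assumption: treat "nearly 50%" YoY as exactly 50% to approximate
# the year-ago backlog. The true figure would be somewhat higher.
implied_year_ago_backlog = backlog_q2 / 1.5

print(f"Sequential growth: {sequential_growth_pct:.1f}%")
print(f"Implied year-ago backlog: about ${implied_year_ago_backlog:.1f}B")
```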

Of course, not all of that backlog is related to liquid cooling, but a strong supporting argument for growth in this industry niche comes from the fact that H2 2024 is expected to be the inflection point for the DCLC or data center liquid cooling market (emphasis mine):

According to a recently published report from Dell’Oro Group, the trusted source for market information about the telecommunications, security, networks, and data center industries, the Data Center Liquid Cooling market has hit an inflection point, with mainstream adoption of liquid cooling starting in the second half of 2024. We forecast this to materialize over the next five years (2024-2028) in a market opportunity totaling more than $15 B.

Beyond that, projections out to 2031 show a doubling of the DCLC market to $30 billion, which is a clear sign that DLC or direct-to-chip liquid cooling is here to stay. Of course, Nvidia does recognize that not all data centers are going to completely transition to liquid cooling, but one thing that CEO Huang said on the Q2 call really stuck in my mind:

The way to think about that is the next $1 trillion of the world’s infrastructure will clearly be different than the last $1 trillion, and it will be vastly accelerated. With respect to the shape of our ramp, we offer multiple configurations of Blackwell. Blackwell comes in either a Blackwell classic, if you will, that uses the HGX form factor that we pioneered with Volta.

And I think it was Volta. And so, we’ve been shipping the HGX form factor for some time. It is air-cooled. The Grace Blackwell is liquid-cooled.

However, the number of data centers that want to go to liquid-cooled is quite significant. And the reason for that is because we can, in a liquid-cooled data center, in any data center — power-limited data center, whatever size data center you choose, you could install and deploy anywhere from three to five times the AI throughput compared to the past. And so, liquid cooling is cheaper. Liquid cooling, our TCO is better, and liquid cooling allows you to have the benefit of this capability we call NVLink, which allows us to expand it to 72 Grace Blackwell packages, which has essentially 144 GPUs.

The way I see it, the shift to liquid cooling is a tectonic one that will see TCO or total cost of ownership drop further as these technologies are increasingly adopted by data center operators. Right now, there’s a capex hurdle to DCLC adoption, but the benefits of lower opex over extended periods of time greatly reduce the TCO for these systems. Over time, I believe we’ll see much more momentum in this shift from air cooling to liquid cooling, and in turn, that supports a stronger case for Nvidia’s liquid-cooled Blackwell products and the roadmap beyond.
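The capex-versus-opex trade-off can be framed as a simple break-even calculation. The sketch below is purely illustrative: every number in it is a hypothetical placeholder in arbitrary cost units, not data from Nvidia, Vertiv, or any data center operator, and the function names are my own.

```python
def tco(capex: float, annual_opex: float, years: float) -> float:
    """Total cost of ownership: upfront capex plus cumulative opex."""
    return capex + annual_opex * years


def breakeven_years(capex_premium: float, annual_opex_savings: float) -> float:
    """Years needed for opex savings to repay the extra upfront capex."""
    if annual_opex_savings <= 0:
        raise ValueError("annual_opex_savings must be positive")
    return capex_premium / annual_opex_savings


# HYPOTHETICAL inputs in arbitrary units, for illustration only.
air = {"capex": 100.0, "annual_opex": 30.0}
liquid = {"capex": 130.0, "annual_opex": 22.0}

years_to_breakeven = breakeven_years(
    liquid["capex"] - air["capex"],
    air["annual_opex"] - liquid["annual_opex"],
)
print(f"Break-even after {years_to_breakeven:.2f} years")

for horizon in (3, 5, 10):
    air_tco = tco(air["capex"], air["annual_opex"], horizon)
    liquid_tco = tco(liquid["capex"], liquid["annual_opex"], horizon)
    print(f"{horizon}y TCO: air={air_tco:.0f}, liquid={liquid_tco:.0f}")
```

Under these made-up inputs, the liquid-cooled option costs more over short horizons and less over longer ones, which is the shape of the argument: a higher upfront hurdle that pays back through lower running costs.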

That brings me to my third point to support the ‘AI is not dead’ case.

Tangible AI Gains are on the Rise

One of the biggest challenges to AI deployment is the inability to accurately assess the value it can bring to an organization. In a recent Gartner survey (results published in May 2024), nearly half of all respondents considered this to be their biggest hurdle.


That’s not very encouraging for AI as a whole, but the other aspect of this is that Gen AI or generative AI deployments are currently the main drivers of AI growth, and many companies are seeing tangible results from their Gen AI deployments. From an overview perspective, this is what was reported in early August:

Early adopters report significant advantages, including improved consumer service (69%), streamlined workflows (54%), increased client satisfaction (48%), and better use of analytics (41%). Other tangible results reported are revenue increases (34%), improvements in ratings (40%), and boosts in team productivity (32%).

Another report specific to payroll processing states that:

integrating AI into the payroll system yielded a remarkable 60% increase in process efficiency—a testament to tangible benefits: efficiency gains, cost savings, and error reduction.

Yet another domain where AI excels and is on the verge of showing tangible benefits is preventive healthcare, where algorithms are already doing the following:

- AI may help identify which currently healthy people are likely to develop breast cancer within five years, based on information hidden in mammograms that today’s clinicians can’t yet interpret

- AI can predict which patients in memory care clinics are likely to develop dementia within two years

- AI can aid early detection of diabetes by identifying hidden patterns of correlation in large patient data sets

- AI may assist in predicting acute kidney injury up to 48 hours before it occurs

Closing Thoughts and The Valuation Question

Diverse industries are benefitting or likely to soon benefit from AI deployments, not just Gen AI. In the face of this deluge of reports from every quarter, how can we keep claiming that AI is a bubble?

The truth of the matter is, we’ve not even scratched the surface when it comes to AI capabilities, and I believe investors who sold off Nvidia on the basis of claims by some analysts about revenue declines, margin contraction, and other factors aren’t taking the time to understand Nvidia’s business and how it fits into the larger scheme of the AI revolution.

As for my Strong Buy thesis, which I’ve held on to vehemently since I upgraded from Hold, it is based on some very simple ideas:

- Nvidia still enjoys a very long runway for revenue growth over the next decade or more, as evidenced by the three key points raised in this article

- As long as no competitor comes close to Nvidia’s flagship products in terms of performance, the company has a strong moat in place – mind you, the competition is already here and will heat up over the next several years, but Nvidia’s position right now is a relatively stable one at the top of the data center GPU industry

- The shift to liquid cooling will create more opportunities for Nvidia’s Blackwell and future products from its roadmap; for now, it offers a clear pathway for rapid Blackwell adoption

- Tangible gains are being seen in a myriad of industries, which validates further spending on AI infrastructure, platform, and application layers despite the temporary lack of clarity around how to monetize AI

We’re still in the very early stages of AI development, and Nvidia is at the cutting edge of this generational transformation. However, what about investment-specific risks? With valuation levels still so high, can the stock move up further, or does it first need to grow into its current valuation before it can surge ahead?

I believe that investors who couldn’t get those questions answered to their satisfaction decided to take their money elsewhere. In a way, that’s great for long-term investors. If you’re still in the game, I’d urge you to consider the following:

- At a price to adjusted forward earnings multiple of 37x, NVDA is currently trading at a 20% discount to its 5Y average multiple of 47x

- On a price to forward revenue basis, NVDA is trading (21x) nearly in line with its 5Y average of about 19x

- In terms of price to forward cash flows, the current multiple of 39x is still at a 20% discount to its 5Y average of 48x

- Long-term EPS growth (3-5Y) is projected at 36% annually, which is 46% higher than its 5Y historical average of 25%

- Operating cash flows are still growing in triple-digit percentages (128% YoY for Q2 2025 over Q2 2024)

- The balance sheet is stronger than ever, with nearly $60 billion in total current assets against $14 billion in current liabilities, and a book value (shareholder equity) of $58 billion as at the end of Q2 2025
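The valuation comparisons in the list above reduce to a few ratios. This is a minimal sketch using the rounded multiples quoted in the bullets; because the inputs are rounded, the computed percentages land near, but not exactly on, the figures stated above. The helper name `pct_vs_average` is illustrative.

```python
def pct_vs_average(current: float, average: float) -> float:
    """Percentage premium (+) or discount (-) of current versus average."""
    return (current - average) / average * 100


# (current multiple, 5Y average multiple), rounded as quoted in the article.
multiples = {
    "Forward P/E": (37, 47),
    "Forward P/S": (21, 19),
    "Forward P/CF": (39, 48),
}

for name, (current, average) in multiples.items():
    print(f"{name}: {pct_vs_average(current, average):+.1f}% vs 5Y average")

# Projected long-term EPS growth (36%) versus the 5Y historical average (25%).
eps_growth_gap_pct = pct_vs_average(36, 25)
print(f"EPS growth vs history: {eps_growth_gap_pct:+.1f}%")

# Current ratio from the balance sheet figures ($B, approximate).
current_ratio = 60 / 14
print(f"Current ratio: {current_ratio:.1f}x")
```

A negative result indicates a discount to the five-year average and a positive result a premium, so the earnings and cash flow multiples show discounts while the revenue multiple shows a modest premium.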

We’re only at the beginning of the AI revolution, and the compute and networking needs of tomorrow’s data centers need to be prepared for today, which is what Nvidia has been doing over the past several decades, ever since it invented the GPU in the late 1990s. My firm opinion is that there’s still tremendous value in this company from a long-term capital appreciation perspective, and its meteoric rise over the past few years is not a flash-in-the-pan event that’s one and done. By no means is this growth story over, and the sooner investors realize this, the better for their portfolios.

Are there still material threats to the business? Absolutely! AMD is closing in on the GPU front, albeit very gradually, and in spurts. Although it may be years before it reaches the level of Nvidia, that gap is definitely closing. At the macro level, the current state of the economy leaves a lot to be desired. The high cost of debt could be hampering capex capabilities for thousands of potential customers of NVDA, so once the Fed starts its rate cut schedule, my assumption is that we should see material increases in capex allocations across multiple industries and segments. The only other major threat is a failure to execute on its plans for Blackwell. If those rumors I addressed in my last article were true and Blackwell doesn’t start shipping in Q4, revenue growth may be impacted; worse, investors could start losing faith in management’s ability to execute.

On balance, I would say that this is still a very attractive investment opportunity, particularly after the macro, market, and results-related stock price corrections of the past two months. There seems to be some technical support at the current (pre-market) level of $105, but I’m not really concerned when I look at the long-term trajectory for the stock.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.