Summary:

- Nvidia Corporation’s AI hardware demand surged post-ChatGPT, driving record revenues and operating income, showcasing their strength in modernizing computing and building AI factories despite architectural transitions.

- Nvidia’s dominance is bolstered by the widespread adoption of their CUDA software, making competitors’ hardware less appealing despite potential price advantages.

- CEO Huang emphasizes Nvidia’s role in the AI revolution, modernizing data centers and creating AI factories, predicting tremendous growth in AI-driven industries.

- Despite risks of overvaluation akin to the dot-com era, Nvidia’s conservative guidance, robust financials, and founder-led management make it a compelling long-term investment.


Introduction

Per my August article, Nvidia Corporation (NASDAQ:NVDA) keeps breaking sales records while waiting for its Blackwell architecture. Since then, we’ve had the September 11 Goldman Sachs Communacopia Tech conference and Nvidia’s 3Q25 filings. My thesis is that Nvidia is showing strength by modernizing computing and creating AI factories at a furious pace despite being in the middle of a change in architecture.

The fiscal quarters for Nvidia differ from the calendar quarters. The way I think about it, each fiscal quarter ends about a month after the matching calendar quarter and carries a fiscal year label that is one year ahead. For example, Nvidia’s fiscal Q3 2025 period just ended on October 27, 2024.
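The mapping from a fiscal period to its nearest calendar quarter can be sketched in a few lines of Python. This is only an illustration: the function name `nearest_calendar_quarter` and the closest-quarter-end rule are assumptions made here, not anything Nvidia publishes.

```python
from datetime import date

# Calendar quarter-end (month, day) pairs for Q1 through Q4.
QUARTER_ENDS = [(3, 31), (6, 30), (9, 30), (12, 31)]


def nearest_calendar_quarter(fiscal_period_end: date) -> str:
    """Return a label such as '3Q24' for the calendar quarter whose
    end date is closest to the given fiscal period end date."""
    candidates = []
    # Look at quarter ends in the prior year and the same year.
    for year in (fiscal_period_end.year - 1, fiscal_period_end.year):
        for quarter, (month, day) in enumerate(QUARTER_ENDS, start=1):
            gap_days = abs((fiscal_period_end - date(year, month, day)).days)
            candidates.append((gap_days, quarter, year))
    _, quarter, year = min(candidates)
    return f"{quarter}Q{year % 100:02d}"


# Fiscal Q3 2025 ended October 27, 2024.
print(nearest_calendar_quarter(date(2024, 10, 27)))  # 3Q24
# Fiscal Q1 2024 ended April 30, 2023.
print(nearest_calendar_quarter(date(2023, 4, 30)))   # 1Q23
```

Under this rule, the fiscal Q3 2025 period sits closest to the calendar quarter that ended September 30, 2024.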

The Numbers

The world hit an inflection point when ChatGPT reached 100 million users in January 2023. Demand for AI hardware started to skyrocket for several reasons. One reason is that the old way of doing things stopped being optimal. Instead of programmers writing code by hand and then running it on CPUs, we now opt to modernize data centers with accelerated computing. Another reason is that AI factories are needed for new AI companies trying to build new tools. ChatGPT didn’t replace anything; it came from a new type of company. Many other new AI companies are forming, and the demand for AI hardware is prodigious as a result. Nvidia is building the AI factories these new companies require.

We’re still in the early days of AI, and I expect Nvidia to show strength for years to come. It wasn’t long ago, on a March 2024 Lex Fridman podcast, that Meta Platforms, Inc. (META) Chief AI Scientist Yann LeCun said large language models, or LLMs, lack four key intelligence characteristics. He named these as understanding the physical world, having persistent memory, reasoning, and planning. Nvidia CEO Huang discussed improvements in these areas in the 3Q25 call when answering a question from The Goldman Sachs Group, Inc. (GS) Analyst Toshiya Hari. The question was about a recent article from The Information saying Blackwell chips have been overheating. Citing numerous engineering steps, CEO Huang did not seem worried about overheating. What matters is what he and CFO Kress said about delivering more Blackwells in the quarter ending in January than they had previously estimated. CEO Huang also specifically mentioned intelligence characteristics, saying we’re now going to the next level with foundation models (emphasis added):

And we’re at the beginning of a new generation of foundation models that are able to do reasoning and able to do long thinking and of course, one of the really exciting areas is physical AI, AI that now understands the structure of the physical world.

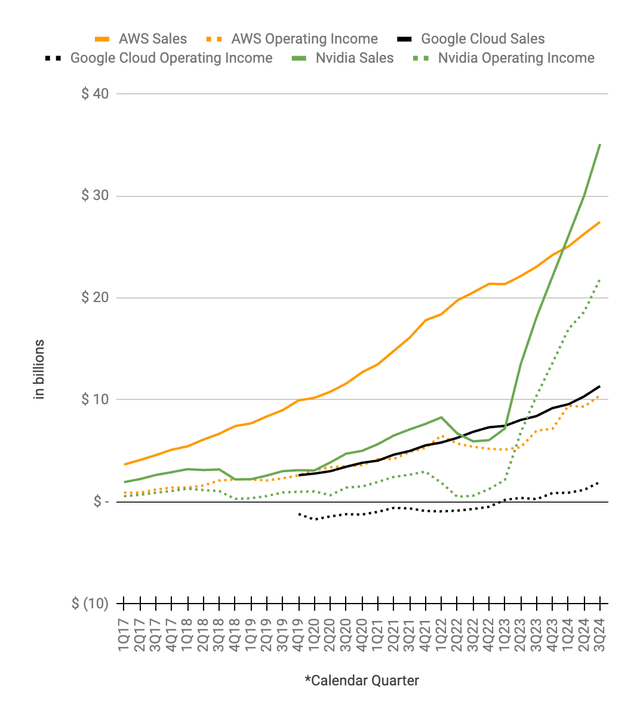

Among large S&P 500 (SP500) companies, the ChatGPT inflection point benefited Nvidia more than anyone, but many other large companies using Nvidia as a supplier started flourishing as well. I like to look at Nvidia’s operating income and revenue along with the same numbers for some large companies relying on them, such as hyperscale cloud providers. Per various reports, Nvidia sells more to Microsoft Corporation’s (MSFT) Azure than to any other customer, but Microsoft blends Azure’s cloud figures with numbers from other businesses, so they are not shown below. We do have numbers from Alphabet Inc.’s (GOOG, GOOGL) Google Cloud and Amazon.com, Inc.’s (AMZN) AWS. Nvidia’s operating income climbed from just $2.14 billion in the quarter ending April 30, 2023, all the way up to $21.87 billion in the quarter ending October 27, 2024. Over the same time, Nvidia’s revenue soared from $7.19 billion to $35.08 billion. Said another way, Nvidia has shown unbelievable strength in the last six quarters:

Nvidia operating income and revenue (Author’s spreadsheet)

*Again, Nvidia quarters are off from calendar quarters by about a month and the year is different as well. I put their fiscal numbers into the closest calendar quarter above so their latest fiscal 3Q25 period ending October 27th goes under the 3Q24 calendar quarter ending September 30th.

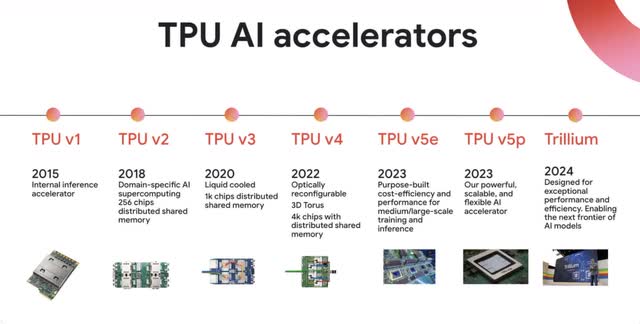

A July 2024 Google Cloud update shows the progress they have made with their TPU AI hardware. Seeing as Google’s TPUs have been around since 2015, which was before the ChatGPT inflection point, I think of them as growing alongside Nvidia hardware. I consider TPUs to be on a slightly different path on a similar journey as Nvidia’s GPUs:

TPU history (July 2024 Google Cloud update)

Up through the end of 2022, Google Cloud had operating losses every single quarter. Coinciding with the initial popularity of ChatGPT, Google Cloud had their first operating profit of $191 million on revenue of $7,454 million in their 1Q23 calendar period. These figures climbed to $1,947 million and $11,353 million, respectively, for Google Cloud’s 3Q24 calendar period. One reason Google Cloud’s operating income increased is that they make a nice profit offering Nvidia’s hardware to clients. Another reason is that Google Cloud also makes a solid profit on their self-designed TPUs. The point is, Google’s TPUs have flourished and will continue to flourish, but they are not a significant threat to Nvidia.

A November 13 FT article talks about chips from Annapurna Labs of AWS which can be used as a substitute for Nvidia’s hardware. Ostensibly this is true, but hardware designed by Nvidia and hardware designed by AWS have both been growing. Amazon.com, Inc. (AMZN) discussed their AWS custom-designed Inferentia AI chip as far back as their 4Q18 earnings release, saying it was made to “help customers improve performance and lower the cost of running their inference workloads.” I began seeing discussions of their Trainium chip in their 4Q20 earnings release, where they said it was “coming to Amazon EC2 and Amazon SageMaker in the second half of 2021.” Like Google’s TPUs, Amazon’s custom silicon came before the ChatGPT inflection point, and I think of it as growing alongside Nvidia’s hardware.

An April 2023 AWS blog post explained the ChatGPT inflection point. We saw an operating income jump for AWS after ChatGPT gained popularity. AWS had an operating income of $5.1 billion on revenue of $21.4 billion in their 1Q23 calendar period. These figures climbed to $10.5 billion and $27.5 billion, respectively, by their 3Q24 calendar period. The reasons for AWS’s silicon growth are similar to what we saw above with Google Cloud. Like Google’s self-designed TPUs, AWS’s self-designed silicon will continue to do well, but it is not a substantial threat to Nvidia.

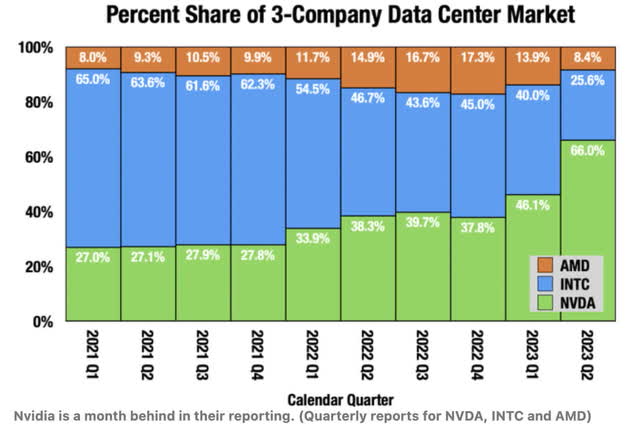

One of the reasons Nvidia is so strong is that they can’t be easily replaced. Even when others like Amazon, Alphabet Inc. (GOOG, GOOGL), and Advanced Micro Devices, Inc. (AMD) can make hardware that may perform better for certain tasks, engineers are hesitant to switch because they are used to Nvidia’s CUDA software. This wasn’t the case when AMD started disrupting Intel’s data center CPU business with Azure in late 2017 and AWS in 2018. Back then, silicon changes could be made without wide-scale compatibility issues. Another way to think about Nvidia’s strength is by looking at their data center sales relative to Intel Corporation (INTC) and AMD. A September 2023 Seeking Alpha article shows the share each of these three companies held relative to the others in their data center triopoly:

Data center market (September 2023 Seeking Alpha article)

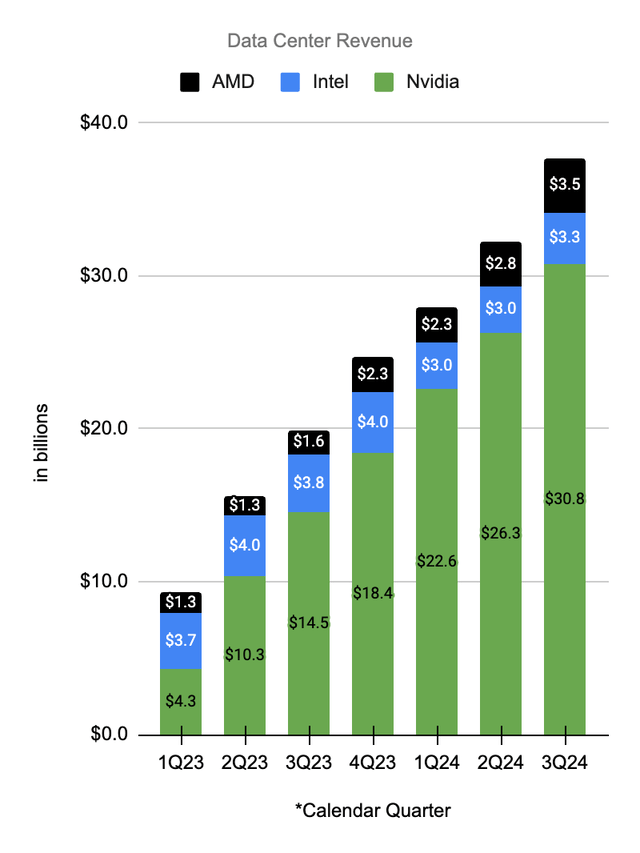

The above type of graphic was useful when the data center triopoly was growing at a slower rate. However, now I prefer to look at dollars instead of percentages because the market has been expanding rapidly since the January 2023 ChatGPT inflection point. In the case of AMD, it’s better to have a thin slice of a huge pizza than a wide slice of a small pizza. In other words, revenue dollars matter now more than triopoly revenue share. AMD has done well with revenue dollars, going from $1.3 billion in 1Q23 to $3.5 billion in 3Q24. This happened despite their triopoly revenue share dropping from roughly 14% to roughly 9%. They got more pizza even as their slice shrank.
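The pizza analogy can be made concrete by backing out the implied size of the triopoly market from AMD’s revenue and share. This is a rough sketch using the rounded figures quoted above; the function name `implied_market_size` is hypothetical, and because the shares are only approximate, the results are ballpark numbers rather than reported totals.

```python
def implied_market_size(revenue_bn: float, share: float) -> float:
    """Back out total market revenue ($ billions) from one company's
    revenue ($ billions) and its fractional share of that market."""
    return revenue_bn / share


# AMD data center revenue and approximate triopoly share from the text.
pie_1q23 = implied_market_size(1.3, 0.14)
pie_3q24 = implied_market_size(3.5, 0.09)

print(f"Implied triopoly revenue, 1Q23: ${pie_1q23:.1f}B")
print(f"Implied triopoly revenue, 3Q24: ${pie_3q24:.1f}B")
print(f"Pie growth: {pie_3q24 / pie_1q23:.1f}x")
print(f"AMD revenue growth: {3.5 / 1.3:.1f}x")
```

On these rounded inputs, the whole pie grows faster than AMD’s slice, which is how AMD’s dollars can rise while its share falls.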

Impressive as AMD has been, Nvidia has been phenomenal, going from data center revenue of $4.3 billion in 1Q23 to $30.8 billion in 3Q24 (as a reminder, we put Nvidia’s fiscal quarters into the closest matching calendar quarters below). Nvidia kept taking share until the 1Q24 calendar quarter and their triopoly share has been within about 100 basis points of the 1Q24 calendar level ever since. Because the data center triopoly market has been growing so fast, Nvidia’s revenue dollars have kept going up since the calendar 1Q24 period, despite their triopoly share being relatively flat at a level between 81% and 82%. AMD looks solid, but Nvidia is showing enormous strength:

Data center revenue (Author’s spreadsheet)

Nvidia CEO Jensen Huang keeps explaining why we continue seeing these strong numbers every quarter. It is because we are modernizing computing for existing tasks and building AI factories for brand-new companies who are trying to become the next ChatGPT. He detailed the modernization concept at the September 11 Goldman Sachs Communacopia Tech conference (emphasis added):

If you’re doing SQL processing, accelerate that. If you’re doing any kind of data processing at all, accelerate that. If you’re doing – if you’re creating an Internet company and you have a recommender system, absolutely accelerate it and they’re now fully accelerated. This, a few years ago was all running on CPUs, but now the world’s largest data processing engine, which is a recommender system, is all accelerated now. And so if you have recommender systems, if you have search systems, any large-scale processing of any large amounts of data, you have to just accelerate that. And so the first thing that’s going to happen is the world’s trillion dollars of general purpose data centers are going to get modernized into accelerated computing.

It isn’t just Nvidia saying we are in the early days of an AI revolution involving the modernization of data centers and the build-out of AI factories. Following the September 11 Goldman Sachs Communacopia Tech conference, Forbes cited numerous sources in a September article saying we have a long runway of AI investments yet to come. AWS CEO Matt Garman said current AI use cases are just scratching the surface. ServiceNow, Inc. (NOW) CEO Bill McDermott said AI is the wellspring of opportunity for the economy. The article also quotes Snowflake Inc. (SNOW) CFO Mike Scarpelli and Microsoft CTO Kevin Scott regarding upcoming AI investments:

Snowflake CFO Mike Scarpelli explained that he thinks “it’s still in the very early innings,” but “the reality is that very few are using it en masse today.” Bringing AI to the masses, when adoption of AI is commonplace, is when the industry will unlock things previously seen as impossible or extremely costly, according to Microsoft CTO Kevin Scott. Scott believes we could be 5 to 10 years out from seeing what developers are capable of and what applications can be created.

CEO Huang answered a question from UBS Group AG (UBS) Analyst Timothy Arcuri in the 3Q25 call in broad strokes by repeating his message about the world being at the beginning of two fundamental shifts in computing (emphasis added):

The first is moving from coding that runs on CPUs to machine learning that creates neural networks that runs on GPUs. And that fundamental shift from coding to machine learning is widespread at this point. There are no companies who are not going to do machine learning. And so machine learning is also what enables generative AI. And so on the one hand, the first thing that’s happening is a trillion dollars’ worth of computing systems and data centers around the world [are] now being modernized for machine learning. On the other hand, secondarily, I guess, is that, that on top of these systems are going to be – we’re going to be creating a new type of capability called AI. And when we say generative AI, we’re essentially saying that these data centers are really AI factories. They’re generating something. Just like we generate electricity, we’re now going to be generating AI.

CEO Huang makes powerful points about Nvidia’s strengths towards the end of the 3Q25 call, saying we are seeing exponential growth for pre-training and post-training (emphasis added):

The tremendous growth in our business is being fueled by two fundamental trends that are driving global adoption of NVIDIA computing. First, the computing stack is undergoing a reinvention, a platform shift from coding to machine learning. From executing code on CPUs to processing neural networks on GPUs. The trillion-dollar installed base of traditional data center infrastructure is being rebuilt for software 2.0, which applies machine learning to produce AI. Second, the age of AI is in full steam. Generative AI is not just a new software capability, but a new industry with AI factories manufacturing digital intelligence, a new industrial revolution that can create a multi-trillion dollar AI industry.

We are seeing all this power in the midst of a hardware shift. Companies of medium strength tend to be less specific about what they broadcast regarding their latest and greatest offerings during a hardware shift. Tesla, Inc. (TSLA) is an example, as they are rumored to be working on a new Model Y, but they haven’t officially announced it because they would rather not harm sales of existing Model Ys. In a tremendous display of power, Nvidia can announce new offerings well in advance without significantly hurting existing sales. In May, it was revealed that Nvidia’s new Blackwell architecture will do generative AI on LLMs at up to 25 times lower cost and energy consumption than Nvidia’s current Hopper architecture. Customers have not been able to buy Blackwell as of the latest 3Q25 fiscal period, yet Nvidia’s revenue continues growing. Oftentimes when a company announces a new product, the sales of the old product go down, yet this hasn’t happened with Nvidia’s Hopper architecture. This is a tremendous show of strength.

In Nvidia’s 3Q25 call, CFO Kress said demand for the new Blackwell architecture is staggering! She also said Microsoft will be the first provider to offer Blackwell-based cloud instances. Additionally, she said 64 Blackwell GPUs can do the same work as 256 H100 Hoppers (emphasis added):

Just 64 Blackwell GPUs are required to run the GPT-3 benchmark compared to 256 H100s or a 4 times reduction in cost. NVIDIA Blackwell architecture with NVLINK Switch enables up to 30 times faster inference performance and a new level of inference scaling throughput and response time that is excellent for running new reasoning inference applications like OpenAI’s o1 model. With every new platform shift, a wave of start-ups is created. Hundreds of AI native companies are already delivering AI services with great success. Though Google, Meta, Microsoft, and OpenAI are the headliners and Anthropic, Perplexity, Mistral, Adobe Firefly, Runway, Midjourney, Lightricks, Harvey, Codeium, Cursor, and Bridge are seeing great success, while thousands of AI-native startups are building new services.

Valuation

There are many risks to Nvidia’s stock valuation. A November 21 FT article says Cisco Systems, Inc. (CSCO) stock got as high as 130 times earnings before the dot-com crash. Investors who bought Cisco leading up to the dot-com crash were right about the internet being important in the decades ahead, but they were mistaken on valuation. Many agree AI will change the world drastically. However, it is also likely we’ll occasionally see valuations for AI stocks get ahead of themselves, in a manner rhyming with the way valuations were unrealistic with internet stocks before the dot-com crash. We know Nvidia’s overall gross margin is expected to decline in the upcoming quarters during the early ramp of Blackwell. Hardware from Annapurna Labs of AWS, Google Cloud, AMD, Intel, and others can take sales away from Nvidia. I’m still very optimistic about Nvidia’s business and Nvidia’s stock despite these risks.

Nvidia is worth the amount of cash we can pull out of the company from now until judgment day. These are some of the numbers I think about when valuing Nvidia ($ in billions):

| Fiscal Quarter | FCF | FCF Margin | SBC | Op. Inc. | Op. Inc. Margin | Gross Profit | Gross Margin | Revenue | QoQ Rev. Growth | YoY Rev. Growth |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1Q24 | $2.6 | 37% | $0.7 | $2.1 | 30% | $4.6 | 65% | $7.2 | 19% | -13% |
| 2Q24 | $6.0 | 45% | $0.8 | $6.8 | 50% | $9.5 | 70% | $13.5 | 88% | 101% |
| 3Q24 | $7.0 | 39% | $1.0 | $10.4 | 57% | $13.4 | 74% | $18.1 | 34% | 206% |
| 4Q24 | $11.2 | 51% | $1.0 | $13.6 | 62% | $16.8 | 76% | $22.1 | 22% | 265% |
| 1Q25 | $14.9 | 57% | $1.0 | $16.9 | 65% | $20.4 | 78% | $26.0 | 18% | 262% |
| 2Q25 | $13.5 | 45% | $1.2 | $18.6 | 62% | $22.6 | 75% | $30.0 | 15% | 122% |
| 3Q25 | $16.8 | 48% | $1.3 | $21.9 | 62% | $26.2 | 75% | $35.1 | 17% | 94% |
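The growth columns in the table follow directly from the revenue column. The sketch below recomputes them as a sanity check, treating the table’s dollar figures as billions (which matches the $35.08 billion of 3Q25 revenue quoted earlier). The helper name `pct_growth` is an assumption for illustration, and because the inputs are rounded to one decimal, the results can differ from the table by about a percentage point.

```python
# Revenue in $ billions, copied from the table (rounded to one decimal).
revenue = {
    "1Q24": 7.2, "2Q24": 13.5, "3Q24": 18.1, "4Q24": 22.1,
    "1Q25": 26.0, "2Q25": 30.0, "3Q25": 35.1,
}


def pct_growth(current: float, prior: float) -> float:
    """Percentage growth from prior to current."""
    return (current / prior - 1.0) * 100.0


quarters = list(revenue)

# Quarter-over-quarter growth needs one prior quarter.
qoq = {
    q: pct_growth(revenue[q], revenue[quarters[i - 1]])
    for i, q in enumerate(quarters) if i >= 1
}
# Year-over-year growth needs the quarter four periods earlier.
yoy = {
    q: pct_growth(revenue[q], revenue[quarters[i - 4]])
    for i, q in enumerate(quarters) if i >= 4
}

for q in quarters[1:]:
    yoy_text = f"{yoy[q]:.0f}%" if q in yoy else "n/a (prior year not in table)"
    print(f"{q}: QoQ {qoq[q]:.0f}%, YoY {yoy_text}")
```

Only the last three quarters have a year-ago period inside the table, so the earlier YoY figures cannot be recomputed from it.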

These past numbers came at a time when the world’s installed base of data centers was worth about $1 trillion. CEO Huang gave more details about modernizing computing and building AI factories in the 3Q25 call when BofA Analyst Vivek Arya asked about Blackwell. CEO Huang said ChatGPT didn’t replace anything, and he thinks of it as being similar to the time when the world first received the iPhone. In the same way we saw mobile-first companies when the iPhone came out, we are now seeing AI natives. AI factories are needed for this new industry, and CEO Huang gave us an idea about what this market should look like (emphasis added):

Let’s assume that over the course of four years, the world’s data centers could be modernized as we grow into IT. As you know, IT continues to grow about 20%, 30% a year, let’s say. And let’s say by 2030, the world’s data centers for computing is, call it a couple of trillion dollars. And we have to grow into that. We have to modernize the data center from coding to machine learning. That’s number one. The second part of it is generative AI, and we’re now producing a new type of capability that the world has never known, a new market segment that the world has never had. If you look at OpenAI, it didn’t replace anything. It’s something that’s completely brand new. It’s in a lot of ways as when the iPhone came.

In addition to quantitative considerations for valuation from the table above, there are qualitative factors as well. I believe founder-led companies like Nvidia are special. Obviously, Nvidia is not a software as a service (“SaaS”) company, but they’re not as much of a hardware company as one might think either, seeing as they use software to design hardware. Fabricators like Taiwan Semiconductor Manufacturing Company Limited (TSM) are the companies that actually make most of the hardware.

The rule of 40 for SaaS companies says YoY revenue growth plus the earnings margin should add up to 40% or more. On the earnings margin side of the rule, I’ve seen FCF margin, operating margin and EBITDA margin all discussed. In the last period, Nvidia had YoY revenue growth of 94% with an FCF margin of 48% and an operating income margin of 62%.
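The rule of 40 check is simple addition, sketched here with the 3Q25 figures quoted above. The function name `rule_of_40_score` and the dictionary layout are illustrative assumptions; the rule itself is a rough screen, not a formal standard.

```python
def rule_of_40_score(yoy_growth_pct: float, margin_pct: float) -> float:
    """Sum of YoY revenue growth and a profitability margin, both in percent."""
    return yoy_growth_pct + margin_pct


THRESHOLD = 40  # percent

yoy_growth = 94  # 3Q25 YoY revenue growth, percent
margins = {"FCF margin": 48, "Operating income margin": 62}

for name, margin in margins.items():
    score = rule_of_40_score(yoy_growth, margin)
    verdict = "passes" if score >= THRESHOLD else "fails"
    print(f"{name}: {yoy_growth}% + {margin}% = {score}% ({verdict})")
```

Either margin choice puts Nvidia far above the 40% bar for the period.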

Management has a history of being conservative with guidance. Per the 2Q25 release, 3Q25 revenue was expected to be $32.5 billion +/- 2%. The 3Q25 release shows it actually came in at $35.1 billion, which was $2.6 billion higher or 8% above the estimate. The 3Q25 release says 4Q25 revenue is expected to be $37.5 billion +/- 2%. I won’t be surprised if the actual revenue for 4Q25 ends up being closer to $40 billion.
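The guidance arithmetic can be laid out as follows. This is a sketch using the figures above; the last step, which applies the 3Q25 beat percentage to the 4Q25 midpoint, is a hypothetical extrapolation for illustration and not a forecast from the company.

```python
guided_3q25 = 32.5  # $ billions, midpoint from the 2Q25 release
actual_3q25 = 35.1  # $ billions, from the 3Q25 release
guided_4q25 = 37.5  # $ billions, midpoint from the 3Q25 release
band = 0.02         # the +/- 2% range around each midpoint

beat_bn = actual_3q25 - guided_3q25
beat_pct = beat_bn / guided_3q25 * 100
top_of_range_3q25 = guided_3q25 * (1 + band)

# Hypothetical: assume 4Q25 beats its midpoint by the same percentage.
implied_4q25 = guided_4q25 * (1 + beat_pct / 100)

print(f"3Q25 beat: ${beat_bn:.1f}B ({beat_pct:.0f}% above midpoint)")
print(f"Top of the 3Q25 guided range: ${top_of_range_3q25:.2f}B")
print(f"4Q25 if the same beat repeats: ${implied_4q25:.1f}B")
```

The 3Q25 result landed well above even the top of the guided range, which is the pattern behind an expectation near $40 billion for 4Q25.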

Nvidia had a 3Q25 operating income of $21,869 million on revenue of $35,082 million. The operating income run rate is $87.5 billion, and I don’t think a valuation range of 40 to 50x this amount is unreasonable, implying an optimistic range of $3,500 to $4,375 billion.

We see 24.49 billion shares as of November 15 on the 3Q25 10-Q, giving us a market cap of $3,314 billion based on the November 27 share price of $135.34. The market cap is below the optimistic valuation range above, and I think the stock is a buy for long-term investors.
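The valuation arithmetic above can be reproduced in a short sketch. The variable names are assumptions for illustration. Note that the text rounds the run rate to $87.5 billion before applying the multiples, so the unrounded range printed here comes out slightly below $3,500 to $4,375 billion.

```python
op_income_3q25_bn = 21.869  # $ billions, 3Q25 operating income
shares_bn = 24.49           # billions of shares, per the 3Q25 10-Q
share_price = 135.34        # $ per share on November 27
multiples = (40, 50)        # the valuation multiples used in the text

# Annualize the latest quarter's operating income.
run_rate_bn = op_income_3q25_bn * 4
low_bn, high_bn = (m * run_rate_bn for m in multiples)
market_cap_bn = shares_bn * share_price

print(f"Operating income run rate: ${run_rate_bn:.1f}B")
print(f"Valuation range: ${low_bn:,.0f}B to ${high_bn:,.0f}B")
print(f"Market cap: ${market_cap_bn:,.0f}B")
print(f"Market cap below range: {market_cap_bn < low_bn}")
```

A simple annualized run rate ignores further growth or decline, so the range is only as good as the assumption that 3Q25 is representative.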

Forward-looking investors should keep an eye out for updates on Blackwell and other developments. The events mentioned by CFO Kress during the 3Q25 call shouldn’t be missed. These include the December 3rd UBS Global Tech and AI Conference, a CES keynote on January 6th, and a CES Q&A on January 7th.

Disclaimer: Any material in this article should not be relied on as a formal investment recommendation. Never buy a stock without doing your own thorough research.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA, AMZN, CRM, GOOG, GOOGL, META, MSFT, TSLA, TSM, VOO either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.