Summary:

- Stan Druckenmiller has significantly reduced his Nvidia stake, reflecting broader concerns about the AI infrastructure boom.

- Nvidia has surged 706.65% in two years since our “Buy” rating, prompting our first downgrade to “Sell” as the rally may be overextended.

- The potential for an “AI overbuild” could lead to a short-term downturn as capital spending on AI datacenters outpaces revenue generation.

- Despite our long-term optimism about AI, short-term uncertainties around Nvidia’s revenue growth and margin stability warrant a more cautious investment approach.

J Studios

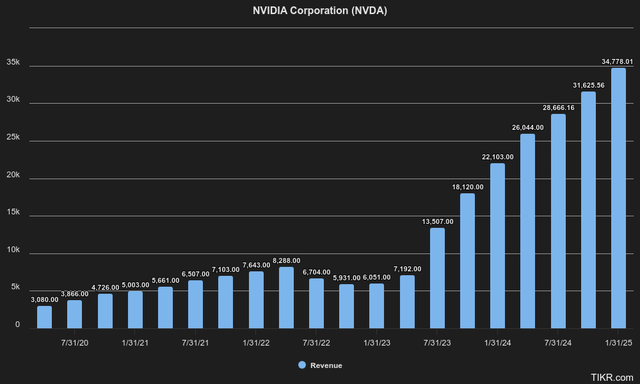

Nvidia Corporation (NASDAQ:NVDA), previously known for its role in graphics, gaming and crypto, recently became the leading provider of AI Compute and AI software solutions, after the company experienced a boom in Datacenter revenue. We have to admit that while we did anticipate back in 2022, before ChatGPT, that there would be a big switch to AI and Datacenter revenue, we certainly did not expect it to hit the scale it is at today.

For reference, Nvidia is up 706.65% in merely two years since we assigned it a “Buy” rating. Since then, we have continuously updated our thesis and maintained either “Strong Buy” or “Hold” ratings, despite the generally bearish sentiment surrounding the stock, with many initially calling it a bubble. This is the first time, however, that we are changing our rating to “Sell”, as we believe it’s finally time to trim after this ferocious rally.

One other notable strategist, Stan Druckenmiller, has also signaled in a recent 13F filing that he’s mostly sold out of the position as of Q1, selling 1,545,370 shares amounting to $192.52M. He currently owns 214,060 shares, down from the 9,500,750 he once owned in Q2 2023. Besides steadily trimming his positions in Nvidia, he has also been trimming positions in other AI plays like Microsoft (MSFT) and adding to other positions which may benefit from AI in an indirect way, as we discuss further.

We remain bullish on AI in the long term, but present our thesis on why we see a likely overspend cycle happening in the short to medium term. We also see Nvidia being fundamentally overpriced for the first time, with the valuation being too far ahead in our view, even based on future earnings expectations.

The Upcoming AI Infrastructure Cycle

We anticipate challenging times ahead for Nvidia after its spectacular rally, which we believe will primarily be attributable to an “AI overbuild”, or an overly optimistic infrastructure buildout in the short term, as has usually been the case when investors were overly optimistic about the future potential of their investments. Perhaps this is not exactly like the dot-com bubble, but people do still tend to get caught up in massive capital spending cycles, which have the potential to impact margins and profitability drastically. Stan Druckenmiller has raised questions about this as well.

Venture capital firm Sequoia calls this “the $600BN question,” referring to the amount of revenue that end-user companies would need to generate to justify the current capital spending on GPUs. They derived this number by taking Nvidia’s data center revenue, estimated at $150BN already this year, and adding another $150BN needed to run these GPUs in terms of AI data center infrastructure and utilities, as estimated by Nvidia. To justify this $300BN in capital expenditure, end-user companies, which earn a margin of about 50% in the technology and software industry, would need to generate $600BN in revenue.
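The arithmetic behind the $600BN figure can be reproduced in a few lines. This is only a minimal sketch of the calculation as described above, not Sequoia's own model; the function name and the extra margin scenarios in the loop are our own illustrative assumptions.

```python
def required_end_user_revenue(gpu_spend_bn: float,
                              infra_spend_bn: float,
                              end_user_margin: float) -> float:
    """Revenue (in $BN) end users must earn to cover total AI capex at a given margin."""
    if not 0 < end_user_margin <= 1:
        raise ValueError("end_user_margin must be in (0, 1]")
    total_capex_bn = gpu_spend_bn + infra_spend_bn
    return total_capex_bn / end_user_margin


# Figures quoted in the paragraph above, all in $BN:
# $150BN of GPUs, $150BN of infrastructure and utilities, 50% end-user margin.
baseline_bn = required_end_user_revenue(150.0, 150.0, 0.50)
print(f"Required end-user revenue: ${baseline_bn:.0f}BN")

# Hypothetical sensitivity check: the 40% and 60% margins are illustrative
# assumptions, not figures from Sequoia or Nvidia.
for margin in (0.40, 0.50, 0.60):
    needed_bn = required_end_user_revenue(150.0, 150.0, margin)
    print(f"margin {margin:.0%}: ${needed_bn:.0f}BN")
```

The result is highly sensitive to the margin assumption: the lower the end-user margin, the more revenue is needed to justify the same capital spending.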

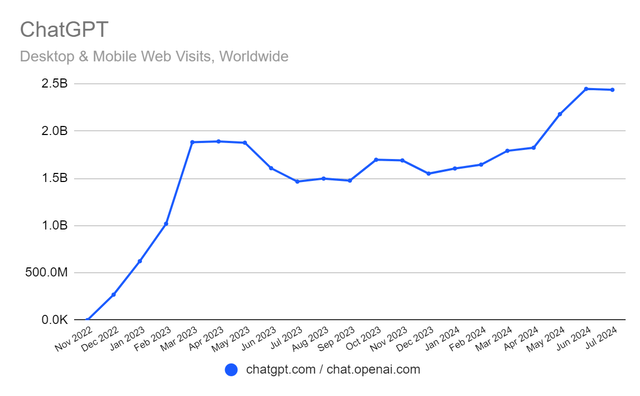

While these may be quite rough calculations, they do show just how much end-user cash generation will be needed to sustain the ongoing infrastructure build-out. And from our perspective, we’re not seeing this level of revenue generation or value creation just yet. Take the poster-child company of the AI world, OpenAI, for example, which is only expected to bring in $3.4BN in annual revenue. What’s perhaps not often mentioned, and even worse, is the fact that OpenAI is currently quite the money void, as they’ve burned through $8.5BN and are estimated to lose up to $5BN this year. This came after Sam Altman said in an interview at Stanford earlier this year:

Whether we burn $500 million, $5 billion, or $50 billion a year, I don’t care. I genuinely don’t as long as we can stay on a trajectory where eventually we create way more value for society than that and as long as we can figure out a way to pay. (Sam Altman, Stanford)

Since the spectacular launch and breakneck user growth ChatGPT went through in late 2022 to early 2023, website traffic does seem to have tapered off considerably, perhaps raising questions about the payoff in terms of improvements in newer models compared to the capital spending required to create these models.

One other prime example of a possible “AI overbuild”, we believe, is Andreessen Horowitz, a venture capital firm that is reportedly stockpiling approximately 20,000 Nvidia GPUs under its “Oxygen” project, just to give its own portfolio companies access to these GPUs. Given the pricing of H100 GPUs, we could be talking about $500M to $800M worth of GPUs alone, not even taking into account the operational costs. The fact that they’re renting out these GPUs at “below-market rates” also shows us the significant trust investors at the VC level are placing in the future ability of current start-ups to generate massive amounts of Free Cash Flow.
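As a quick sanity check, the $500M to $800M range can be turned back into the per-GPU price it implies. The sketch below only divides the figures quoted above; the per-unit prices it prints are derived values, not quoted H100 list prices, and the function name is hypothetical.

```python
def implied_unit_price(total_value_usd: float, gpu_count: int) -> float:
    """Average price per GPU implied by a total stockpile value."""
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return total_value_usd / gpu_count


GPU_COUNT = 20_000        # reported size of the stockpile
LOW_TOTAL_USD = 500e6     # low end of the estimated value
HIGH_TOTAL_USD = 800e6    # high end of the estimated value

low_price = implied_unit_price(LOW_TOTAL_USD, GPU_COUNT)
high_price = implied_unit_price(HIGH_TOTAL_USD, GPU_COUNT)
print(f"Implied price per GPU: ${low_price:,.0f} to ${high_price:,.0f}")
```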

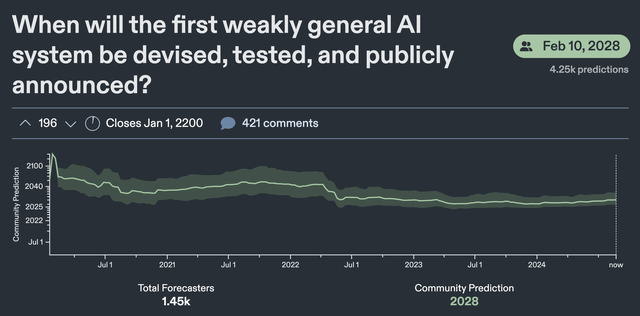

Another indicator that we usually follow closely to track the development of Artificial General Intelligence is the prediction aggregator Metaculus. While the predicted timeline for the development of weak Artificial General Intelligence shortened drastically in 2022 and continued to shorten through 2023, it is now reversing: the forecast is that the first weak General AI system will be announced in February 2028, against a prediction of 2026 at the end of last year.

Shifting Attention

Despite the headwinds that we anticipate for hardware vendors such as Nvidia and for Big Tech companies spending huge amounts of CapEx on AI compute, we are by no means bearish on the entire AI space. We also certainly do not believe that this AI story is over; on the contrary, we are bullish about the long-term prospects for AI and believe there is likely a shift happening within the AI space itself from hardware and computers to end-user applications.

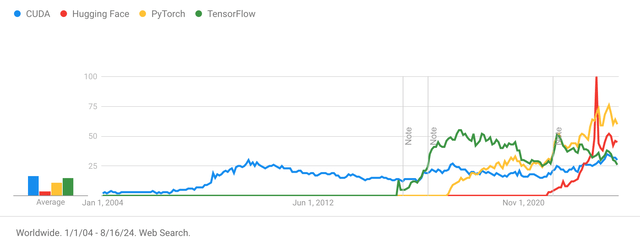

We can see this shift in web search data, for example. Search interest in Nvidia’s “CUDA” software has increased, but has mostly leveled off in the last few months. PyTorch and TensorFlow, which are key in building AI models, also show a flat to upward trend. Search interest in Hugging Face, on the other hand, which is aimed at end users, is rising. Thus, we see the current period as a broader industry shift in focus from the hardware level and model building to end-user applications and integration.

As another example, AI data centers consume a lot of electricity, as mentioned earlier, and Stan Druckenmiller seems to be leaning in that direction, as he entered new positions in several energy companies last quarter. It could also be a rather defensive play in utilities in anticipation of a future recession. He also took a new position in Adobe (ADBE), currently one of the most prominent players in the AI space, which is integrating AI into its software solutions focused on end-user value creation.

In the days of the dot-com bubble, a similar overbuild of infrastructure happened as well with fiber, as everyone over-estimated the demand in the short term. A lot of these infrastructure providers saw massive margin compression along with financial difficulty all the way to 2009. On the other hand, this overcapacity did drive down prices and made way for new innovations on a long-term timeframe like Amazon (AMZN), and other big tech companies. AI could be similar to this situation, as Stan Druckenmiller describes it:

AI could rhyme with the internet, as we go through all this Capital spending we need to do. The payoff, while it’s incrementally coming in by the day, the big payoff might be 4 to 5 years from now. So AI might be a little overhyped now, but underhyped long term. (Squawk Podcast)

The $15.5 Trillion Question

In similar fashion to the $600BN question, we also ask ourselves a much broader question about the Magnificent 7, which currently have a valuation of $15.57T, or $12.43T excluding Nvidia. The reason for the focus on these 7 companies is that they make up a big chunk of Nvidia’s revenue. The 4 AI Hyperscalers, which include Microsoft, Meta (META), Amazon (AMZN) and Google (GOOG), reportedly account for 40% of Nvidia’s revenue alone. Some analysts even estimate that Nvidia’s top 10 customers account for 60% to 70% of all revenue.

Nvidia is the first company that has been able to tap into the largest pool of Free Cash Flow, the Magnificent 7, at a time when they’re hungry for growth. As a result, the CapEx of the Magnificent 7 saw a drastic increase, with a large amount of it being allocated to AI datacenters. In a sense, with some analysts calling the Magnificent 7 a bubble, you could call Nvidia a “bubble inside another bubble.”

As shown in the graph above, the CapEx expansion of the 4 AI Hyperscalers closely matches with the huge increase in Free Cash Flow growth Nvidia has seen. The question now remains whether the customers of these AI Hyperscalers, which we believe are largely startups backed by the VC community and the Magnificent 7 itself, will be able to sustain the soaring costs of compute imposed on them by Nvidia, which sells GPUs at a sky-high markup. In theory, this CapEx spend should only survive if AI generates enough money/value or if VCs and big tech companies are willing to continue funding losses.

A Fundamental Reality Check

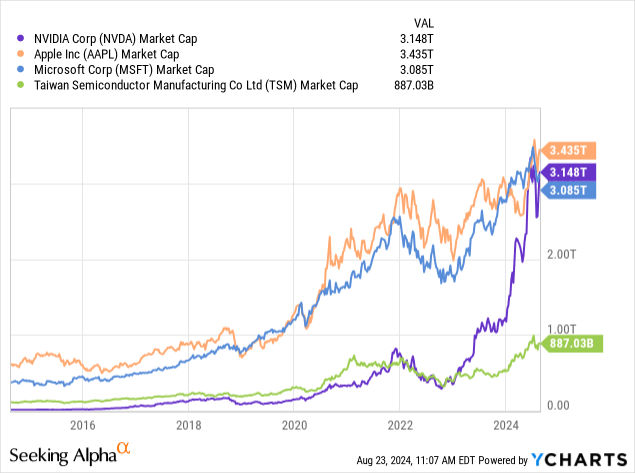

The last reason why we’ve changed our stance on Nvidia is, quite clearly, valuation. On a fundamental level, when Nvidia recently and briefly became worth more than Apple (AAPL) and Microsoft in terms of valuation, we ran a thought experiment and assessed how vital Microsoft, Apple and Nvidia are to the global economy.

This was recently showcased by an outage in Microsoft Windows caused by an error made by CrowdStrike (CRWD): millions of computers were disrupted, leading to flights being grounded, supply chains being disrupted, massive disruptions at businesses and government agencies, and even critical infrastructure like basic healthcare systems malfunctioning. Similarly, we view Apple products and their ecosystem as currently indispensable, since their absence would cause utter chaos and disruption on a global scale.

While Nvidia on the other hand is important in areas like AI Compute, graphics, the scientific community and breakthroughs in Autonomous Driving, we just don’t see it underpinning the economy as much as Apple and Microsoft do. Similarly, we also don’t see why Nvidia should be trading at over 3x Taiwan Semiconductor’s (TSM) valuation, given Nvidia’s massive reliance on them to fabricate chips.

From our view, Taiwan Semiconductor has a lot more leverage over Nvidia than some may think, and is already hinting at passing costs through to Nvidia in the form of higher prices. Nvidia, for example, recently left the idea of a future Samsung fab behind, which we believe is likely due to the far superior wafer yield obtained with TSM. Not only does Nvidia rely solely on TSM’s production, but so does virtually the whole world, which leaves us puzzled about the recent divergence in TSM’s valuation compared to Nvidia. And even with TSM building a fab in the United States, we expect it to be years behind when it comes online. TSM’s founder Morris Chang himself has even stated that the fab would be 2-2.5x more expensive, and labor costs 50% higher.

In addition, we see Nvidia playing out quite similarly to how Tesla (TSLA) played out in 2023, in the sense that we see downside risks to margins once supply finally catches up with demand and additional competition enters the space. Similar to Nvidia, Tesla also had the privilege of being years ahead of competitors in terms of EV production and expertise, which allowed them to expand gross margins to a record of 29.11% in Q1 2022. Today, however, gross margins are about the lowest they’ve been in 5 years at 17.95% as of last quarter. While Nvidia was able to expand margins in a tight market, as we expected, we expect the opposite could happen as well when demand eases.

Even if competition in Nvidia’s case isn’t able to catch up or challenge Nvidia’s “CUDA” software stack, Nvidia will very likely still face massive scrutiny from the DOJ and FTC, as it already does, along with a ton of antitrust litigation, as have OpenAI, Microsoft and Google.

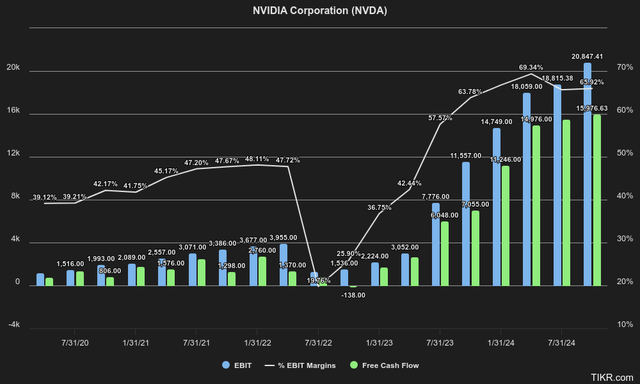

Taking into account the aforementioned risks, we find the current valuation Nvidia is trading at to be rather irrational. At an expected Q2 EBITDA of $18.9BN, or $75.6BN at an annualized rate, even at the generous 30x multiple we usually give Nvidia, it should be worth an Enterprise Value of $2.27T. Adding $20.20BN worth of net cash would bring us to a market cap of $2.29T, or $93.09 per share, at a very generous valuation of 30x Forward EV/EBITDA. From a Price to Free Cash Flow (P/FCF) perspective, Nvidia looks even more expensive, generating $14.98BN in FCF or $59.90BN annualized. Taking into account an additional $4.04BN in Share Based Compensation, Nvidia would be trading at an adjusted P/FCF multiple of 56.93x.
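The valuation arithmetic above can be retraced step by step. The sketch below is a rough reconstruction under stated assumptions rather than a full model: the $20.20BN balance is added to Enterprise Value (so it is treated as net cash), the $4.04BN of Share Based Compensation is treated as an annual figure, and the share count and current market cap are backed out from the quoted per-share value and P/FCF multiple rather than taken from a filing.

```python
QUARTERS_PER_YEAR = 4

# Inputs quoted in the paragraph above, in $BN unless noted otherwise.
Q2_EBITDA_BN = 18.9
EV_EBITDA_MULTIPLE = 30.0
NET_CASH_BN = 20.20             # assumption: added to EV, so treated as net cash
ANNUALIZED_FCF_BN = 59.90       # annualized figure as quoted in the text
ANNUAL_SBC_BN = 4.04            # assumption: treated as an annual figure
STATED_ADJ_P_FCF = 56.93        # adjusted P/FCF multiple as quoted
STATED_PRICE_PER_SHARE = 93.09  # USD, as quoted

# EV/EBITDA leg: annualize the quarter, apply the multiple, add net cash.
annualized_ebitda_bn = Q2_EBITDA_BN * QUARTERS_PER_YEAR
enterprise_value_bn = annualized_ebitda_bn * EV_EBITDA_MULTIPLE
equity_value_bn = enterprise_value_bn + NET_CASH_BN

# Derived, not stated in the text: share count implied by the per-share value.
implied_shares_bn = equity_value_bn / STATED_PRICE_PER_SHARE

# P/FCF leg: subtract SBC from FCF, then back out the market cap
# that the quoted multiple implies.
adjusted_fcf_bn = ANNUALIZED_FCF_BN - ANNUAL_SBC_BN
implied_market_cap_bn = STATED_ADJ_P_FCF * adjusted_fcf_bn

print(f"Annualized EBITDA:     ${annualized_ebitda_bn:,.1f}BN")
print(f"Enterprise Value:      ${enterprise_value_bn / 1000:,.2f}T")
print(f"Equity value:          ${equity_value_bn / 1000:,.2f}T")
print(f"Implied share count:   {implied_shares_bn:,.2f}BN")
print(f"Adjusted FCF:          ${adjusted_fcf_bn:,.2f}BN")
print(f"Implied market cap:    ${implied_market_cap_bn / 1000:,.2f}T")
print(f"Fair value vs. market: {equity_value_bn / implied_market_cap_bn:.0%}")
```

Under these assumptions, the equity value at 30x Forward EV/EBITDA comes out well below the market cap implied by the adjusted P/FCF multiple, which is the gap the valuation argument rests on.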

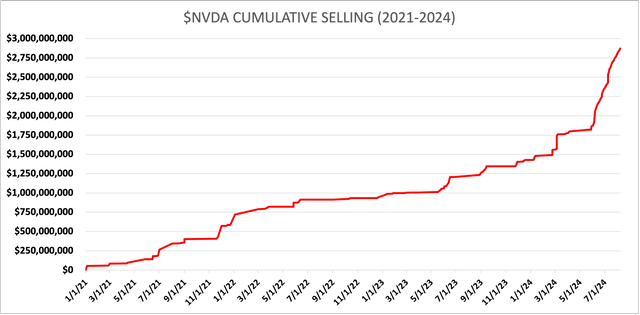

As things stand right now, with year-over-year revenue growth likely to peak now in Q2 according to estimates, and operating margins standing at an eye-watering 64.9% compared to the usual 30-35% in recent history, we see most of the risks, like disappointing growth or demand, pointing to the downside. As Stan Druckenmiller would say: it’s not a fat pitch. Recent insider selling has also been ramping up, as can be seen in the graph below, with Jensen Huang being the most prominent seller. Since 2021, according to our data, there has only been insider selling, with the cumulative volume totaling approx. $2.87BN.

The Bottom Line

Although we are changing our rating for Nvidia from “Strong Buy” to “Sell,” we are generally not overly bearish on the stock, but we do believe that it is finally time to seriously wind down or even exit the position completely. To be clear, there is no universe in which we would consider shorting the stock, as we are bullish on AI over the long term. We could also be proven wrong on some level, for example by underestimating the potential of Nvidia’s Blackwell architecture.

But as things stand, the risks for Nvidia appear to be skewed to the downside, and we think the valuation is ahead of the curve, even on a forward basis. Still, we think there are likely other strategies to gain exposure to AI that could see some serious tailwinds should an “AI Overbuild” occur, such as energy and utility stocks and companies that benefit from implementing AI at the end-user level.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.
