Summary:

- Nvidia’s Blackwell processors, initially delayed, are now in full production.

- Despite those concerns, Nvidia’s Q3 Data Center revenue hit a record high, driven by insatiable demand for AI accelerators that still outpaces supply.

- Blackwell outperforms AMD’s MI325X, and Nvidia’s AI accelerators lead in both performance and efficiency, an advantage that becomes crucial as data centers run up against power limitations.

- Nvidia’s disruption of the data center, shifting the computational burden from x86 CPUs to GPU accelerators, is expected to drive revenue growth of over 100% in fiscal 2025 and about 50% in fiscal 2026, sustaining growth for several more years.


Back when Nvidia (NASDAQ:NVDA) reported its fiscal 2025 Q2 results, it announced that production of its forthcoming Blackwell processors for data center AI acceleration would be delayed to fiscal Q4. At the time, there was concern about the impact of the delay on fiscal Q3 results, and whether the delay represented more serious design problems with Blackwell. Neither concern has turned out to be well-founded.

Q3 financial impacts of the Blackwell delay were hardly noticeable

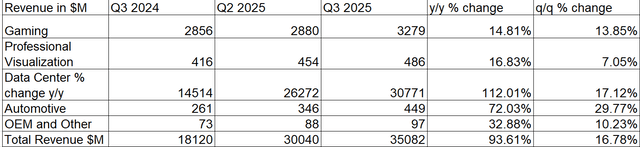

The guidance offered at the time of the fiscal Q2 earnings report suggested that the missing revenue from Blackwell sales would be noticed. It wasn’t. Nvidia set a new revenue record for fiscal Q3 with over 100% y/y growth in the Data Center segment:

For those concerned about or anticipating “cannibalization” of Hopper sales by Blackwell, there’s an important lesson here. According to Nvidia, Blackwell offers as much as four times the performance of the Hopper H100 for about the same price, which arguably makes it a much better value.

Why then didn’t Data Center revenue drop in Q3 as customers waited to buy Blackwell? The simple answer is that demand for AI accelerators is so large that it offset any sales lost to customers waiting for Blackwell.

Sure, Blackwell will take sales away from the H100, but the H100 will be discontinued anyway. The overall market for data center accelerators is still growing, which means the market for Blackwell is still growing.
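Nvidia’s “four times the performance for about the same price” claim translates directly into cost per unit of performance. The minimal sketch below simply divides the price multiple by the performance multiple. The function name is hypothetical, and the inputs are Nvidia’s claimed figures, not independent measurements.

```python
def relative_cost_per_performance(perf_multiple, price_multiple=1.0):
    """Cost per unit of performance of a new part, relative to the part it replaces.

    Hypothetical helper for illustration: a price_multiple of 1.0 means
    "about the same price", and perf_multiple is the claimed speedup.
    """
    return price_multiple / perf_multiple


# Nvidia's claim: up to 4x the H100's performance at about the same price.
print(relative_cost_per_performance(4.0))  # 0.25
```

At the claimed 4x, a unit of Blackwell performance would cost roughly a quarter of what it does on an H100.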

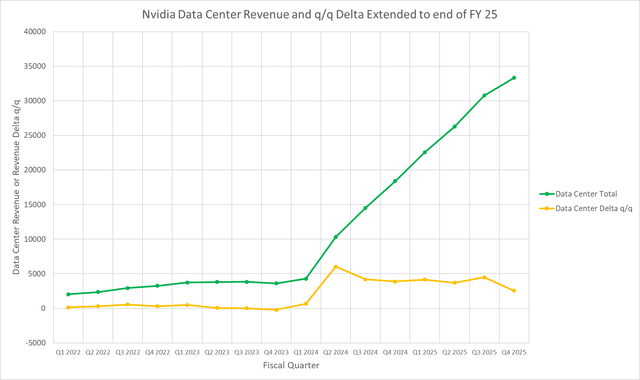

Nvidia’s Data Center revenue trajectory has been an almost perfect straight line since early 2023:

The q/q revenue difference represents the slope of the line. Why has the slope remained constant? I believe that the slope is limited by Taiwan Semiconductor Manufacturing Company’s (TSM) willingness to expand production of Nvidia’s data center chips.
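The straight-line argument can be checked with simple arithmetic. If revenue rises by a roughly constant amount each quarter, the q/q differences are roughly equal, and a least-squares line through the quarterly figures has a slope close to that common difference. The sketch below assumes you supply your own quarterly Data Center revenue figures; the helper names are hypothetical, and the series in the usage example is synthetic and purely illustrative, not Nvidia’s reported numbers.

```python
from statistics import mean


def qoq_differences(revenue):
    """Quarter-over-quarter changes; roughly equal values indicate a straight-line trend."""
    return [later - earlier for earlier, later in zip(revenue, revenue[1:])]


def linear_fit(revenue):
    """Ordinary least-squares (slope, intercept) of revenue against quarter index 0, 1, 2, ..."""
    n = len(revenue)
    xs = range(n)
    x_bar = (n - 1) / 2
    y_bar = mean(revenue)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, revenue))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


# Synthetic series (hypothetical, in $ billions) that grows by exactly 4.0 per quarter.
example = [4.0, 8.0, 12.0, 16.0, 20.0]
print(qoq_differences(example))  # [4.0, 4.0, 4.0, 4.0]
print(linear_fit(example))  # (4.0, 4.0)
```

With real data the differences will scatter, so the fitted slope is the more robust summary of the average quarterly increase.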

TSMC is being cautious and limiting production expansion so that it doesn’t wind up with overcapacity. But so far, Nvidia is still in a supply-limited situation. That will certainly continue through the transition to Blackwell.

Blackwell entered full production as promised in fiscal 2025 Q4 following the mask change

The slight downturn in projected data center revenue for Q4 reflects the midpoint of Nvidia’s guidance, but once again, I believe that the guidance is overly conservative, as it was for fiscal Q3. Blackwell is now in production, as CFO Colette Kress announced at the fiscal Q3 conference call:

Blackwell is in full production after a successfully executed mask change. We shipped 13,000 GPU samples to customers in the third quarter, including one of the first Blackwell DGX engineering samples to OpenAI.

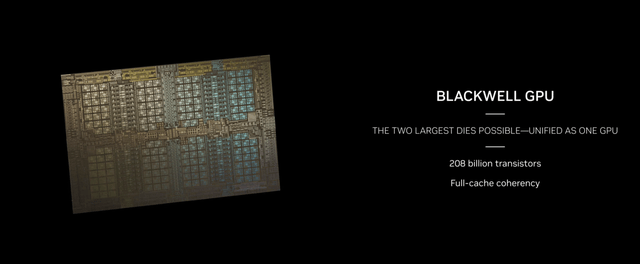

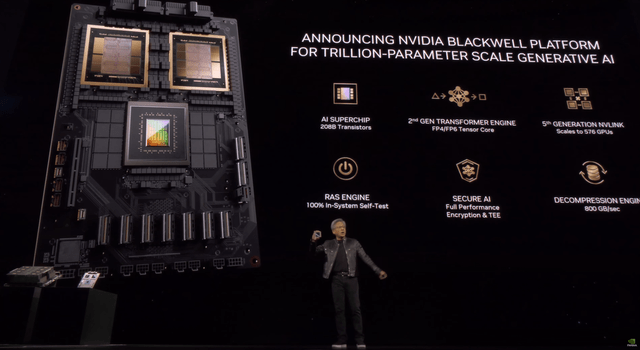

With the benefit of hindsight, it appears that reports that the Blackwell delay was the result of serious design flaws were at best speculative. The reports were mostly traceable to an August 4 article in Semi Analysis. Among other things, the article claimed that there was a fundamental problem of thermal warpage in the Blackwell package. Blackwell represents Nvidia’s first multichip GPU, combining two silicon dies on an interposer:

The article claimed that the packaging difficulties were such that Nvidia would only be able to produce “small volumes” of Blackwell. Yet here is Kress’s guidance for Q4:

Total revenue is expected to be $37.5 billion, plus or minus 2%, which incorporates continued demand for Hopper architecture and the initial ramp of our Blackwell products. While demand greatly exceeds supply, we are on track to exceed our previous Blackwell revenue estimate of several billion dollars as our visibility into supply continues to increase.

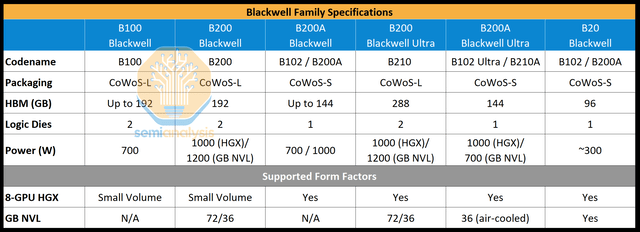

More than several billion dollars of Blackwell revenue in Q4 doesn’t sound like a small volume. Furthermore, the Semi Analysis article claimed that Nvidia would be forced to fall back on a single-die version of Blackwell:

Yet, I can find no evidence that such a version even exists. Certainly, there’s no mention of it on Nvidia’s site. Nvidia only lists two versions, B100 and B200, both of which contain two silicon dies.

To return to the revenue guidance, I do think it’s a little light, but Kress is typically very conservative, and usually Nvidia’s results exceed guidance. In this case, I think the conservatism is due to uncertainty about how much Blackwell production TSMC will be able to deliver in the quarter.
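For reference, the guidance band in Kress’s quote works out as below. This is plain arithmetic on the $37.5 billion midpoint and the ±2% tolerance she gave, not an additional forecast; the helper name is hypothetical.

```python
def guidance_range(midpoint, tolerance):
    """Return (low, high) for a midpoint plus or minus a fractional tolerance."""
    return midpoint * (1 - tolerance), midpoint * (1 + tolerance)


# Q4 guidance from the fiscal Q3 call: $37.5 billion, plus or minus 2%.
low, high = guidance_range(37.5, 0.02)
print(f"${low:.2f}B to ${high:.2f}B")  # $36.75B to $38.25B
```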

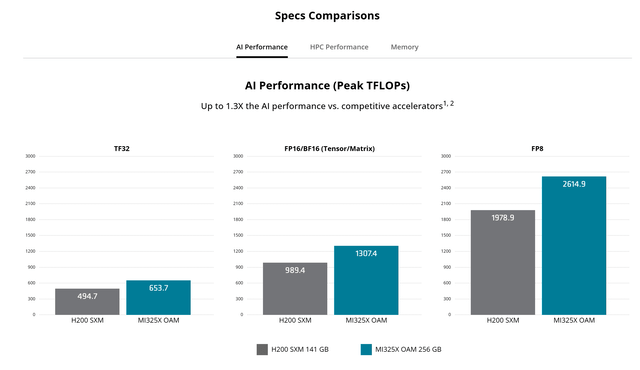

How Blackwell compares to AMD’s MI325X

In the interim, Advanced Micro Devices (AMD) has released the MI325X GPU accelerator. As I expected, the MI325X is essentially an MI300X with more memory and higher memory bandwidth; its performance specs are identical to those of the MI300X.

AMD claims that the MI325X is faster than the Hopper H200 in some processing tasks:

But this probably doesn’t get AMD anywhere close to Blackwell. For purposes of comparison, I prefer to rely on MLCommons. For the very difficult AI training benchmarks, there are no listings for AMD’s Instinct accelerators.

There are some preliminary listings for Blackwell. On the BERT training benchmark, the Blackwell B200 is about 36% faster than the H200. On the Llama 2 inference benchmark, the B200 is about 2.5 times faster than the H200 and about 3.7 times faster than the MI300X.
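The two Llama 2 inference ratios also imply how the H200 and MI300X compare with each other on that benchmark: if the B200 is 2.5 times the H200 and 3.7 times the MI300X, then the H200 is 3.7 / 2.5 times the MI300X. The sketch below is simple arithmetic on the rounded figures above, so the result is approximate; the function name is hypothetical.

```python
def implied_ratio(b200_vs_h200, b200_vs_mi300x):
    """Implied H200-over-MI300X speedup, given B200's speedup over each.

    B200 = a * H200 and B200 = b * MI300X imply H200 / MI300X = b / a.
    """
    return b200_vs_mi300x / b200_vs_h200


# Rounded Llama 2 inference ratios quoted above.
print(round(implied_ratio(2.5, 3.7), 2))  # 1.48
```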

As I’ve discussed previously, the MI300X series is derived from SoCs intended for use in supercomputers rather than as dedicated AI accelerators. However, by all appearances, they’re selling well, and AMD’s Q3 Data Center revenue grew 122% y/y to $3.549 billion.

This suggests that the combined accelerator output of Nvidia and AMD is still not able to meet the demand from data centers.
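As a sanity check on the scale of AMD’s growth, the year-ago figure can be backed out from the current revenue and the growth rate. The sketch below divides $3.549 billion by 2.22; since the 122% rate is rounded, the result (about $1.6 billion) is approximate. The helper name is hypothetical.

```python
def prior_period_value(current, growth_pct):
    """Back out the year-ago figure from a current figure and its y/y growth in percent."""
    return current / (1 + growth_pct / 100)


# AMD Q3 Data Center revenue: $3.549 billion, up 122% y/y.
print(f"${prior_period_value(3.549, 122):.3f}B")  # $1.599B
```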

Investor takeaways: “staggering” Blackwell demand

During the Q3 conference call, CFO Kress described the customer adoption of Blackwell:

Blackwell demand is staggering and we are racing to scale supply to meet the incredible demand customers are placing on us. Customers are gearing up to deploy Blackwell at scale.

Oracle announced the world’s first ZettaScale AI Cloud computing clusters that can scale to over 131,000 Blackwell GPUs to help enterprises train and deploy some of the most demanding next-generation AI models. Yesterday, Microsoft announced they will be the first CSP to offer in private preview Blackwell-based cloud instances powered by NVIDIA GB200, and Quantum InfiniBand.

This gives some sense of the scale of data center AI growth. AI models continue to be developed, they keep getting larger, and their compute requirements grow accordingly. The real limitation is electrical power, and here Blackwell and its successors will be favored for their improved efficiency.

The need to keep driving efficiency is probably what sustains AI accelerator spending beyond the immediate needs of current AI models. As data centers bump up against power supply limitations, the only way to grow the AI models will be to run them on more efficient hardware.
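The power argument reduces to one relation: under a fixed power budget, deliverable compute equals the budget times the hardware’s efficiency (throughput per watt). A facility that cannot add power can therefore add compute only by improving efficiency. The sketch below uses hypothetical round numbers, not specifications of any real accelerator, and ignores cooling and other facility overhead.

```python
def compute_capacity(power_budget_watts, throughput_per_watt):
    """Deliverable throughput for a facility capped at a fixed power budget.

    Simplifying assumption: all power goes to accelerators (no cooling or other overhead).
    """
    return power_budget_watts * throughput_per_watt


# Hypothetical: a 10 MW cap and two accelerator generations, the newer one 2x as efficient.
budget = 10_000_000
old_gen = compute_capacity(budget, 1.0)
new_gen = compute_capacity(budget, 2.0)
print(new_gen / old_gen)  # 2.0
```

Because the budget cancels out, the capacity gain under a fixed power cap is simply the ratio of efficiencies.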

So the AI accelerator race is really becoming an efficiency race, and here Nvidia has staked out the high ground with its Grace Blackwell superchip, which combines an Arm-based Grace CPU with two B200 GPUs:

Nvidia’s disruption of the data center has occurred at a fundamental architectural level, shifting the computational burden from the traditional x86 CPU to the GPU accelerator. GPU acceleration is versatile and energy efficient, able to handle a broad range of workloads besides AI.

This disruption is still in its early stages, and I believe that it will drive revenue growth of over 100% in fiscal 2025 and about 50% in fiscal 2026. Eventually, AI spending will slow, and Nvidia’s data center revenue will level out, but I don’t expect that for several more years. I remain long Nvidia and rate it a Buy.
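Taken together, the two growth estimates compound. Growth of 100% followed by about 50% implies revenue of roughly three times the fiscal 2024 level by the end of fiscal 2026, and more if fiscal 2025 growth exceeds 100%. The sketch below just multiplies the annual growth factors; the function name is hypothetical.

```python
def compound(growth_rates_pct):
    """Cumulative revenue multiple from a sequence of annual growth rates in percent."""
    multiple = 1.0
    for g in growth_rates_pct:
        multiple *= 1 + g / 100
    return multiple


# Fiscal 2025 (about 100%) followed by fiscal 2026 (about 50%).
print(compound([100, 50]))  # 3.0
```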

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA, TSM either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.

Consider joining Rethink Technology for in-depth coverage of technology companies such as Apple.