Summary:

- Nvidia’s FY4Q24 results again exceeded expectations, led by another beat in its data center business.

- Data center revenues grew 27% sequentially, while gaming revenues were flat and the automotive segment grew 8%.

- Nvidia continues to extend its lead as it ramps the H200, which it bills as the world’s most powerful GPU, with enhanced performance and memory capacity.

- In the fourth quarter, more than half of Nvidia’s data center revenues came from the large cloud providers like AWS, Azure and Google Cloud.

- Nvidia estimates that 40% of its data center revenue over the past year came from AI inference, a sign that end customers are using AI in real-world use cases.


Nvidia (NASDAQ:NVDA) reported its FY4Q24 results, which continued to show strength in its business fundamentals as the company extended its lead over competitors.

2024 looks like a strong year for Nvidia, given that demand is expected to exceed supply throughout the year.

Nvidia is also not slowing down: it continues to widen its moat and grow its ecosystem by launching new and innovative products, establishing itself as the leader in the space by a wide margin.

After this report, I remain a buyer of Nvidia given the very strong tailwinds the company is likely to continue to experience in 2024.

I have written about Nvidia on Seeking Alpha before. In my last article, I discussed how the company’s data center business was posting stellar growth, with tremendous demand for AI and strong demand visibility for 2023 and 2024, while the company continued to ramp up supply in the near term.

FY4Q24 results

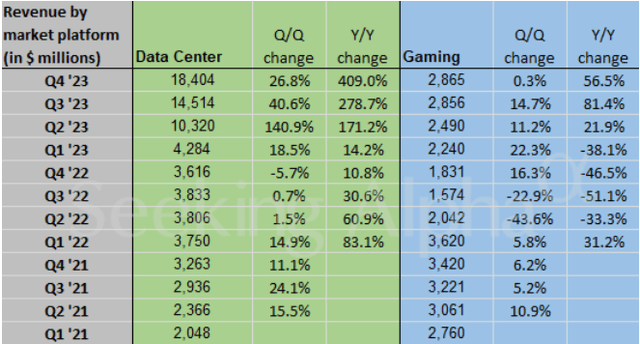

Nvidia’s revenue for FY4Q24 came in at $22.1 billion, up 22% sequentially and 8% above consensus expectations. The beat was driven by continued strength in its data center business.
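As a quick sanity check on these percentages, the sketch below back-solves the prior-quarter revenue and the consensus estimate implied by the reported $22.1 billion. It treats the 22% and 8% figures as exact even though reported percentages are rounded, so the outputs are approximations, not reported numbers; the helper name `implied_base` is hypothetical.

```python
def implied_base(current: float, growth_pct: float) -> float:
    """Back out the starting value that, grown by growth_pct percent, equals current."""
    return current / (1 + growth_pct / 100)


revenue_q4 = 22.1  # FY4Q24 revenue in $ billions (from the article)

prior_quarter = implied_base(revenue_q4, 22)      # revenue was up 22% sequentially
implied_consensus = implied_base(revenue_q4, 8)   # revenue beat consensus by 8%

print(f"Implied FY3Q24 revenue: ${prior_quarter:.1f}B")
print(f"Implied consensus estimate: ${implied_consensus:.1f}B")
```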

Data center revenues grew 27% sequentially on strong demand for Hopper-based compute products such as the H100, which grew 27% sequentially, while high-performance networking products grew 28% sequentially. Consensus had expected 15% sequential growth. Networking revenues also surpassed a $13 billion annualized revenue run rate. On a year-on-year basis, data center revenues were up 409%.

Revenue mix for Nvidia (Seeking Alpha)

Gaming revenues were flat sequentially, supported by seasonal holiday demand and strong sales of its RTX 40 series, against consensus expectations of a 3% sequential decline. Pro Visualization revenues grew 11% sequentially as the segment continued to recover from channel inventory normalization, compared with consensus expectations of 4% sequential growth.

The automotive segment was up 8% sequentially, compared with consensus expectations of 6% sequential growth.

Gross margins came in at 76.7%, 130 basis points above consensus expectations of 75.4%.

EPS came in at $5.16, well above consensus.

Nvidia guided to $24 billion in revenues at the midpoint for FY1Q25, 12% ahead of consensus expectations.

Gross margins were guided to 77%, 130 basis points ahead of market consensus.
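A basis point is one hundredth of a percentage point, so a margin beat in basis points is the gap between two percentages multiplied by 100. The sketch below, using hypothetical helper names, checks the FY4Q24 beat and backs out the consensus margin implied by the 77% guide; that implied 75.7% is a derived figure, not one reported in the article.

```python
def spread_bps(actual_pct: float, reference_pct: float) -> float:
    """Gap between two percentages in basis points (1 bp = 0.01 percentage point)."""
    return round((actual_pct - reference_pct) * 100, 1)


def implied_reference(actual_pct: float, beat_bps: float) -> float:
    """Reference percentage that sits beat_bps basis points below actual_pct."""
    return round(actual_pct - beat_bps / 100, 2)


q4_beat = spread_bps(76.7, 75.4)                 # FY4Q24 gross margin vs. consensus
guide_consensus = implied_reference(77.0, 130)   # consensus implied by the FY1Q25 guide

print(f"FY4Q24 margin beat: {q4_beat:.0f} bps")
print(f"Implied FY1Q25 consensus margin: {guide_consensus:.1f}%")
```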

Beyond reiterating that demand continued to far outstrip supply, the company said this dynamic is likely to persist through calendar 2024 and that its next-generation products will remain “supply constrained as demand far exceeds supply”.

The narrative supporting Nvidia’s growth rests on growing AI data center demand. But how fast will this demand grow, and how large will it get?

First, Nvidia CEO Jensen Huang has predicted that $1 trillion will be spent on upgrading data centers for AI over the next four years.

Second, according to TrendForce, AI server shipments grew 38% in 2023 compared to the prior year, and shipments are expected to grow at a 22% CAGR from 2022 to 2026.
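To put the TrendForce forecast in concrete terms, the sketch below compounds the 22% CAGR over the four years from 2022 to 2026. It then assumes, as a labeled simplification, that the 38% growth in 2023 belongs to the same shipment series, and solves for the annual growth the remaining three years would need; that second figure is a derivation, not a TrendForce number. The helper names are hypothetical.

```python
def growth_multiple(cagr_pct: float, years: int) -> float:
    """Cumulative growth multiple from compounding cagr_pct for `years` years."""
    return (1 + cagr_pct / 100) ** years


def required_cagr(total_multiple: float, years: int) -> float:
    """Constant annual growth rate (in %) needed to reach total_multiple in `years` years."""
    return (total_multiple ** (1 / years) - 1) * 100


# A 22% CAGR from 2022 to 2026 means four years of compounding.
multiple_2022_2026 = growth_multiple(22, 2026 - 2022)

# Assumption: the 38% growth in 2023 is part of the same series, so the
# 2023 -> 2026 period has to supply the rest of the four-year multiple.
remaining_cagr = required_cagr(multiple_2022_2026 / 1.38, 2026 - 2023)

print(f"2026 shipments vs. 2022: {multiple_2022_2026:.2f}x")
print(f"Implied CAGR for 2023-2026: {remaining_cagr:.1f}%")
```

Under these assumptions, the forecast roughly doubles shipments over four years while implying slower annual growth after 2023 than in 2023 itself.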

Lastly, JLL reports in a study that it is seeing huge demand for data centers as AI demand grows, with global colocation megawatts expected to grow 15% over the next five years.

In addition, the company is sampling China-compliant chips, which could provide upside for the fiscal year.

All in all, the quarter suggests another strong year for Nvidia in 2024, with a demand-supply dynamic that continues to support growth.

Extending its lead

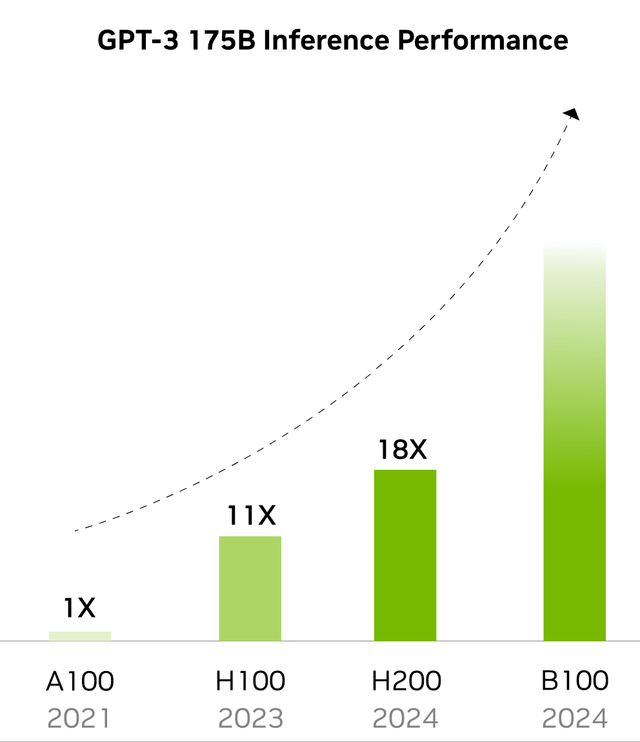

Just when you thought Nvidia’s H100 was the best chip and the standard for accelerated computing and AI infrastructure today, the company is on track to ramp the H200, with initial shipments expected in the second quarter.

The H200 is based on Nvidia’s Hopper architecture, and Nvidia bills it as the world’s most powerful GPU.

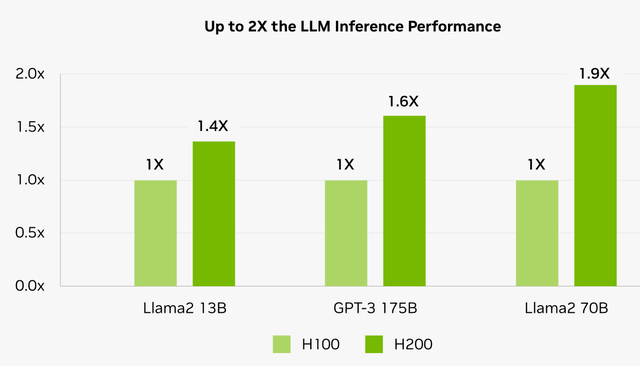

One reason is that the H200 is the world’s first GPU with HBM3e, offering 141 gigabytes of memory at 4.8 terabytes per second. To put that into perspective, that is nearly twice the memory capacity of Nvidia’s own H100, with 1.4 times the memory bandwidth.
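The “nearly twice” and “1.4 times” comparisons can be reproduced directly. The sketch below assumes the H100 baseline is the 80 GB SXM variant with 3.35 TB/s of memory bandwidth; those H100 figures do not appear in the article, and other H100 variants (such as the PCIe card) have different specs that would change the ratios.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuMemory:
    name: str
    capacity_gb: float
    bandwidth_tb_s: float


# H200 figures are from the article; H100 figures assume the 80 GB SXM variant.
h200 = GpuMemory("H200", capacity_gb=141, bandwidth_tb_s=4.8)
h100 = GpuMemory("H100 SXM", capacity_gb=80, bandwidth_tb_s=3.35)

capacity_ratio = h200.capacity_gb / h100.capacity_gb          # ~1.76x
bandwidth_ratio = h200.bandwidth_tb_s / h100.bandwidth_tb_s   # ~1.43x

print(f"Capacity: {capacity_ratio:.2f}x, bandwidth: {bandwidth_ratio:.2f}x")
```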

The larger and faster memory is expected to further accelerate high-performance computing workloads.

In fact, the H200 is expected to almost double the inference performance of the H100.

2x the inference performance (Nvidia)

Management confirmed this timeline on the fourth-quarter earnings call.

Given the H200’s advantages, demand for the new product is expected to be strong.

As a reminder, management said on the previous earnings call that cloud service providers such as Amazon, Google, Microsoft and Oracle will be among the first to receive Nvidia’s H200 chips.

To me, the chart below shows why I think Nvidia will remain ahead of the competition for a long time. While it already commands a huge share of the AI chip market, the company continues to invest and innovate, releasing new generations of GPUs that deliver improved performance and cost savings.

I think Nvidia is in a very strong position: its H100 already enjoys strong competitive positioning, and it keeps launching innovative products that should help it maintain its leadership in the space. This makes it very difficult for competitors to catch up.

Networking and software built for AI

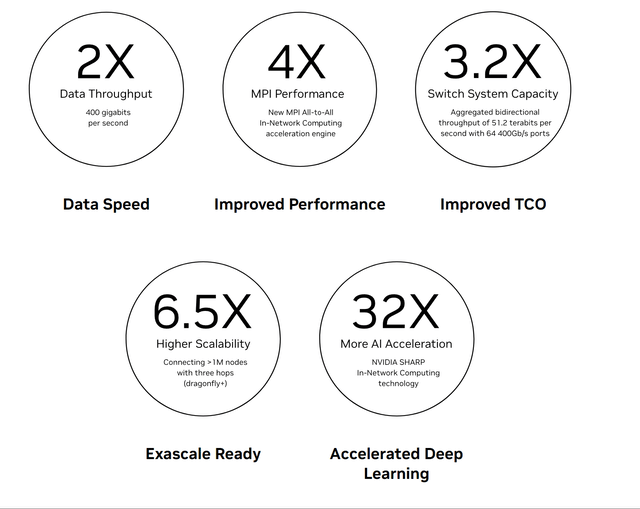

Nvidia’s networking revenue is growing quickly, with the segment exceeding a $13 billion annualized revenue run rate.

The reason for that, once again, is AI data centers.

As AI data centers demand the highest-performing end-to-end networking solutions, Nvidia’s Quantum InfiniBand solutions grew more than fivefold year-on-year.

As a result, Nvidia’s Quantum InfiniBand is becoming the standard for the highest performance AI infrastructures.

Nvidia also announced Spectrum-X, a new end-to-end offering designed for AI-optimized networking in the data center. When used together with Nvidia’s BlueField DPU and software stack, Spectrum-X achieves 1.6x higher networking performance for AI processing compared to conventional Ethernet solutions on the market.

With Spectrum-X, Nvidia is also entering the Ethernet networking space, and the company expects to ship Spectrum-X this quarter.

Lastly, Nvidia’s software business reached a $1 billion annualized revenue run rate in the fourth quarter.
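An annualized revenue run rate is commonly computed by multiplying the latest quarter’s revenue by four. The article does not say which method Nvidia uses, so treat that convention as an assumption. Under it, the software and networking milestones translate into the quarterly figures in the sketch below (helper names are hypothetical).

```python
QUARTERS_PER_YEAR = 4


def annualized_run_rate(quarterly_revenue_bn: float) -> float:
    """Annualize one quarter's revenue (assumes the quarter-times-four convention)."""
    return quarterly_revenue_bn * QUARTERS_PER_YEAR


def quarterly_from_run_rate(run_rate_bn: float) -> float:
    """Invert the convention: quarterly revenue implied by an annualized run rate."""
    return run_rate_bn / QUARTERS_PER_YEAR


software_q = quarterly_from_run_rate(1.0)     # $1B software run rate
networking_q = quarterly_from_run_rate(13.0)  # "exceeding $13B", so this is a floor

print(f"Software: about ${software_q * 1000:.0f}M per quarter")
print(f"Networking: more than ${networking_q:.2f}B per quarter")
```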

Nvidia also announced that AWS has joined Microsoft Azure, Google Cloud and Oracle Cloud as a DGX Cloud partner. DGX Cloud is Nvidia’s AI-training-as-a-service platform, offering enterprise developers a serverless experience optimized for generative AI. It is also used for Nvidia’s own AI R&D and custom model development, as well as by Nvidia developers. DGX Cloud will bring the CUDA ecosystem to Nvidia’s cloud service provider partners.

CUDA, short for Compute Unified Device Architecture, was launched in 2007. It lets developers use the parallel processing capabilities of Nvidia GPUs for non-graphics workloads.
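To make “parallel processing for non-graphics workloads” concrete, here is a pure-Python emulation of the CUDA programming model applied to SAXPY (computing a*x + y element by element). This is not CUDA code and it runs sequentially; the function names are hypothetical. It only mimics how a CUDA kernel derives each thread’s global index from `blockIdx.x * blockDim.x + threadIdx.x` and skips out-of-range threads, work that a GPU would spread across many threads running at once.

```python
def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y, out):
    # Global index, as a CUDA kernel computes blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < len(x):  # guard: the last block may contain spare threads
        out[i] = a * x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    # Sequential stand-in for a kernel launch; on a GPU these calls run in parallel.
    for b in range(grid_dim):
        for t in range(block_dim):
            kernel(b, block_dim, t, *args)


n = 10
x = [float(i) for i in range(n)]
y = [1.0] * n
out = [0.0] * n

block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # ceiling division: 3 blocks cover 10 elements
launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y, out)
print(out)
```

Because each element is computed independently, the work maps naturally onto thousands of GPU threads, which is the property CUDA exposes to developers.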

CUDA currently has 4 million developers and is likely one of Nvidia’s key competitive advantages over the long term.

Nvidia’s CUDA head start since 2007 has made it very difficult for competitors to displace. Rivals have built alternative GPU programming platforms, such as AMD’s (AMD) ROCm software stack (which includes its MIOpen library) and Intel’s (INTC) oneAPI, but neither has gained much traction compared to CUDA. This is because of their limited user bases and gaps in tooling and support relative to CUDA.

As such, CUDA will likely support Nvidia’s continued dominance in the near to medium-term given its strong foothold and ecosystem.

Growing AI use cases across verticals

In the fourth quarter, more than half of Nvidia’s data center revenues came from the large cloud providers, supporting both their internal workloads and their external public cloud customers.

The largest and earliest adopters of AI are undoubtedly big tech companies like Microsoft (MSFT) and Meta Platforms (META).

Microsoft currently has more than 50,000 organizations using GitHub Copilot to improve their developers’ productivity. In addition, adoption of Copilot for Microsoft 365 grew faster in its first two months after release than either of Microsoft’s two previous major Microsoft 365 enterprise suites did.

Social media companies like Meta Platforms are using AI to power their recommendation engines and continue to invest in AI to improve customer engagement, click-through rates and ad conversion.

Meta is also investing in generative AI to give stakeholders such as content creators, advertisers and customers more automation tools for content and ad creation, among other uses.

Google (GOOGL) is also working with Nvidia to optimize its Gemma language models and accelerate their inference on Nvidia GPUs.

Enterprise software companies are another big group of customers applying generative AI. Nvidia highlighted that the early customers it has partnered with for generative AI training and inference have already seen considerable commercial success.

One example highlighted was ServiceNow (NOW), whose generative AI products delivered its largest net new annual contract value contribution of any new product family release.

Of course, ServiceNow is not the only enterprise software company working with Nvidia to bring generative AI applications to customers. Nvidia is also working with Databricks, Snowflake (SNOW), Adobe (ADBE), Getty Images and SAP (SAP), among others.

In addition, many companies are building foundation large language models, including Anthropic, Google, Inflection AI, Microsoft and OpenAI, among others. Private companies and new startups such as Adept AI, AI21 Labs, Character.ai, Cohere, Mistral and Perplexity are also emerging quickly to meet the strong demand for generative AI.

It is worth noting that Nvidia is seeing significant adoption of AI across verticals, including automotive, healthcare and financial services. As management put it:

“One of the most notable trends over the past year is the significant adoption of AI by enterprises across the industry verticals such as automotive, healthcare and financial services. NVIDIA offers multiple application frameworks to help companies adopt AI in vertical domains such as autonomous driving, drug discovery, low latency machine learning for fraud detection or robotics, leveraging our full stack accelerated computing platform.”

The automotive sector is one of the largest users of AI today, applying it to develop automated driving and AI cockpit applications. According to Nvidia, almost every automotive company working on AI is working with Nvidia, and the automotive vertical contributed more than $1 billion in data center revenues last year. Nvidia Drive is the company’s platform for developing autonomous driving, including the required systems and software.

Generative AI is helping to improve and reinvent drug discovery, surgery and medical imaging, among other areas. Nvidia has been investing here with its Nvidia Clara platform and Nvidia BioNeMo, a generative AI service that can be used for computer-aided drug discovery.

It was also interesting that Nvidia estimated 40% of its data center revenue over the past year came from AI inference. This again suggests that customers are deploying AI in real-world use cases that end users have started adopting over the last year.

Valuation

Context around Nvidia

Nvidia is in a rare position for any stock, and I would argue that the valuation it trades at today is justified by the following four factors:

- Dominant market position with H100 chip as accelerated computing demand grows.

- Its lead over competitors grows with the H200 and beyond as the company continues to invest and innovate.

- Very strong demand situation where supply is unable to catch up.

- Continued strong demand through 2024.

As such, this sets the context for where I think Nvidia could trade and why I am buying the stock today.

I think 2024 is somewhat de-risked from a financial forecasting standpoint given management’s commentary, but anything beyond that is difficult to forecast; even Nvidia guides only one quarter at a time.

The consensus expects some form of inventory digestion in 2025, but demand could keep rising in 2025 if AI adoption across different use cases continues to grow.

Relative valuation

When thinking about the valuation of Nvidia, I think it is also important to look at relative valuation and compare it to competitors like AMD.

AMD currently trades at 55x 2024 P/E versus just 35x for Nvidia, even though Nvidia’s EPS is expected to grow 89% next year compared with 37% for AMD.

That said, while AMD is listed as a competitor here, I do not necessarily see it as a big threat to Nvidia, given that it is a few steps behind: AMD only started shipping its first Instinct MI300X AI GPUs in late January 2024.

AMD has demonstrated its Instinct MI300X outperforming Nvidia’s H100, so it could become a formidable competitor. Even so, Nvidia remains a step ahead, having announced that it will ship the H200 in the second quarter of 2024.

Simply put, when we divide each company’s P/E multiple by its expected EPS growth, Nvidia trades at a PEG ratio of about 0.40x while AMD trades at about 1.5x.
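The PEG ratio divides a forward P/E multiple by expected EPS growth expressed in percent. Using the multiples and growth rates above, the minimal sketch below reproduces the comparison: 35/89 is about 0.39x (roughly the 0.40x cited) and 55/37 is about 1.49x (roughly 1.5x). The helper name is hypothetical.

```python
def peg_ratio(forward_pe: float, eps_growth_pct: float) -> float:
    """P/E-to-growth ratio: forward P/E divided by expected EPS growth in percent."""
    if eps_growth_pct <= 0:
        raise ValueError("PEG is not meaningful for zero or negative growth")
    return forward_pe / eps_growth_pct


nvda_peg = peg_ratio(35, 89)  # 2024 P/E of 35x, 89% expected EPS growth
amd_peg = peg_ratio(55, 37)   # 2024 P/E of 55x, 37% expected EPS growth

print(f"NVDA PEG: {nvda_peg:.2f}x, AMD PEG: {amd_peg:.2f}x")
```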

Given that competitors like AMD trade at 1.5x PEG, Nvidia could reach $1,390 by the end of the year if it trades at 0.6x PEG, and its share price could even reach $2,300 at 1.0x PEG, though I would not count on the latter.
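The article does not state the EPS estimate behind these targets. One plausible derivation: a target PEG times expected growth gives a target P/E, and that P/E times forward EPS gives a price. Back-solving from the $1,390 and $2,300 figures implies forward EPS of roughly $26, so the sketch below uses that as a clearly hypothetical input. It is a reconstruction under that assumption, not the author’s model, and the helper names are hypothetical.

```python
def target_price(target_peg: float, eps_growth_pct: float, forward_eps: float) -> float:
    """Price implied by a target PEG: PEG x growth gives the P/E, P/E x EPS gives price."""
    target_pe = target_peg * eps_growth_pct
    return target_pe * forward_eps


def implied_eps(price: float, target_peg: float, eps_growth_pct: float) -> float:
    """Back-solve the forward EPS that a price target implies at a given PEG."""
    return price / (target_peg * eps_growth_pct)


NVDA_GROWTH_PCT = 89     # expected EPS growth from the article
HYPOTHETICAL_EPS = 26.0  # assumption: back-solved from the targets, not a reported estimate

for peg in (0.6, 1.0):
    price = target_price(peg, NVDA_GROWTH_PCT, HYPOTHETICAL_EPS)
    print(f"PEG {peg:.1f}x -> about ${price:,.0f}")

print(f"EPS implied by $1,390 at 0.6x PEG: ${implied_eps(1390, 0.6, NVDA_GROWTH_PCT):.2f}")
```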

Conclusion

I would continue to be a buyer of Nvidia stock.

Despite fears that something might derail it, Nvidia beat expectations and showed that demand for its AI chips remains very strong and will continue to outstrip supply through calendar 2024, easing fears of an inventory correction in the second half of the calendar year.

In addition, the company showed that generative AI applications are growing and provided strong examples of AI monetization by its customers.

Nvidia is launching multiple new products across its data center portfolio, ramping the H200 and Spectrum-X in the next fiscal year to drive continued growth momentum.

Looking at the competitive landscape, it is increasingly difficult for competitors to compete meaningfully with Nvidia, given that it maintains a one-to-two-step lead over them in silicon, hardware and software platforms. In addition, Nvidia has a very strong ecosystem, with 4 million CUDA software developers, an aggressive cadence of new product launches and growing product segmentation over time.

Editor’s Note: This article discusses one or more securities that do not trade on a major U.S. exchange. Please be aware of the risks associated with these stocks.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.

Outperforming the Market

Outperforming the Market is focused on helping you outperform the market while having downside protection during volatile markets by providing you with comprehensive deep dive analysis articles, as well as access to The Barbell Portfolio.

The Barbell Portfolio has outperformed the S&P 500 by 50% in the past year through owning high conviction growth, value and contrarian stocks.

Apart from focusing on bottom-up fundamental research, we also provide you with intrinsic value, 1-year and 3-year price targets in The Price Target report.
