Summary:

- We identified Nvidia as the company at the epicenter of one of the biggest technological paradigm shifts of the last 50 years.

- It is not lost on us that semiconductors is a notoriously cyclical industry.

- Many investors have missed the boat thinking that Apple is just a smartphone company, Amazon is just a retailer, and Tesla is just a car company.

- We believe we are in the early stages of a multi-decade disruption.

BING-JHEN HONG

The following segment was excerpted from this fund letter.

Nvidia (NASDAQ:NVDA)

At its core, our investment philosophy is centered on identifying and investing in Big Ideas, which we define as businesses that enable or benefit from disruptive change, have many characteristics of being or becoming platforms with network effects and attractive unit economics, and are likely to become materially larger in the future than they are today.

We identified Nvidia as the company at the epicenter of one of the biggest technological paradigm shifts of the last 50 years as computing is shifting from sequential to accelerated and as we begin to see the early stages of the use cases of generative AI (GenAI) enter the mainstream.

Is GenAI real? Is it going to be material, sustainable, and disruptive? Will Nvidia (and other GenAI leaders and disruptors) benefit from this disruptive change? Our research suggests that the answer to all of these questions is an unequivocal yes.

Is there hype around GenAI? Sure. There is always a hype cycle around major new technologies.

Is GenAI a bubble similar to what we saw during the Internet bubble of the late 1990s/early 2000s? We don’t think so.

First of all, it is important to recognize that while there were many stocks trading at silly valuations on newly invented metrics (peak multiples on peak eyeballs), the internet itself proved to be a paradigm-changing disruption, giving birth to a plethora of Big Ideas. But even more importantly, while the rise in Nvidia’s stock price has been nothing short of unprecedented for a company of its size, it was fueled almost entirely by rapid growth in revenues, earnings, and cash flows – not multiples. Nvidia’s stock exited 2023 with a P/E ratio of 24.7 and ended the first quarter with a P/E ratio of 35. We can debate whether it is cheap or expensive, but it cannot be compared to the triple-digit multiples that were assigned to the perceived market leaders of the early Internet era, which were clearly not in as strong a competitive position then as Nvidia is today.

It is not lost on us that semiconductors is a notoriously cyclical industry. Historically, the hyperscalers (AWS, Azure, GCP, etc.), who are among Nvidia’s largest customers, have not invested/spent/consumed CapEx in a straight line. It would be more than a mild surprise, then, if there were no pullback in demand leading to a significant growth deceleration and a potentially meaningful correction in the price of the stock sometime in the near future. So, it is incumbent upon us to manage the size of this investment appropriately, while continuing to imagine what the future will likely look like without losing sight of what reality on the ground is today.

Then again…Nvidia is not just a semiconductor company. Many investors have missed the boat thinking that Apple is just a smartphone company, Amazon is just a retailer, and Tesla is just a car company. We have long argued that just like the other three, Nvidia is a platform. We are more certain of this now than ever before.

In March, we spent the better part of a week in San José attending Nvidia’s annual developer conference, GTC 2024, and got to experience firsthand what Forbes magazine called the Nerd Woodstock. After several years of being held online due to COVID-19, over 17,000 nerds (us included) attended the four-day event in person. With over 900 featured sessions, 1,700 presenters, over 300 exhibits, and more than 20 technical workshops, all centered around AI, there was a lot to choose from. From our perspective, there was nothing better than watching start-up founders debate the merits of Large Language Models (LLMs) compared to domain-specific Small Language Models, how to get LLMs to have long-term memory, and which key challenges need to be solved to enable reasoning, planning, and multi-agent LLMs (AI models that rely on and work together with other models). When it was all said and done, we came away with several observations:

- AI is developing rapidly across industries – near term, there is a lot of excitement around AI for areas such as consumer chatbots, AI-based customer service, AI-based assistants for a variety of business tasks such as coding, marketing, back-office, and more. Longer-term avenues of development are broad and include drug discovery, in which the opportunity for AI is significant due to the long timelines for drugs to reach approval and the high probability of failure (90% of drugs fail); planning and running factories and supply chains using digital twins (with help from Nvidia Omniverse – Nvidia’s real-time collaborative simulation); and using AI to build robots across a variety of use cases (from autonomous machines to humanoids). Multi-domain, multi-industry disruption.

- We are early on the S-curve – most companies are still in the proof-of-concept stage, while very few are ready for production today. Hurdles in implementing AI include data prep, model adaptation and fine-tuning, and embedding of AI into existing workflows. There is a lot of innovation taking place to reduce these hurdles – from tools and infrastructure that help companies build and run AI models more easily, to third-party AI models exposed via Application Programming Interfaces (APIs) that enable companies to use them without building their own models from scratch. Nvidia’s ecosystem across developers, system integrators, cloud providers, and independent software vendors, together with its internal software innovation, is lowering these hurdles as well. For example, one of the most interesting announcements at the GTC Conference was Nvidia Inference Microservices – or NIMs, which are APIs to easily access open-source models (Nvidia already has dozens of models available) without the need to worry about model optimizations, security, patching, or sending data to third parties. NIMs, priced at $4,500/GPU or at $1/GPU hour if used on the cloud, could ease AI adoption for enterprises while also driving incremental monetization for Nvidia and increasing the stickiness of Nvidia’s platform.

- We are rapidly coming down the demand elasticity curve – while in the Moore’s Law era, performance improvements were driven by cramming more transistors into a piece of silicon, AI is a data center scale problem with performance improvements driven by every layer in the stack:

- GPUs – The GPUs themselves are getting faster and better – Nvidia’s latest Blackwell GPUs are composed of 2 dies with 208 billion transistors, compared to 80 billion for the prior generation (Hopper), showing a performance boost of 2.5 times to 5 times depending on the use case.

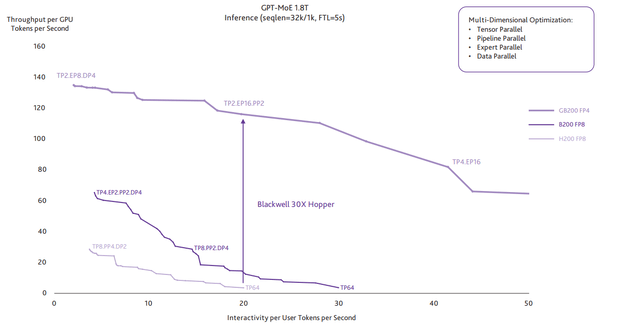

- The system layer – Nvidia is innovating across the system, from the accelerator (the GPU) to the CPU (Grace), server design, and networking (between GPUs, between GPUs and CPUs, within a rack, and between racks). For example, Nvidia announced the fifth-generation NVLink, a networking solution that connects multiple GPUs together, enabling an order of magnitude higher bandwidth (1.8TB/sec) than standard PCIe (NVLink has 14 times more bandwidth than fifth-generation PCIe), which alleviates a significant bottleneck for many AI models. The fifth-generation NVSwitch also enables connecting up to 576 GPUs together, which creates significantly higher overall bandwidth for a much larger unit of compute. This is especially important for very large LLMs (previously only 8 GPUs were connected with NVLink, while connecting a higher number required using the lower-bandwidth PCIe). Overall, the Blackwell GPU can see performance improvements of up to 30 times for inferencing compared to the prior Hopper generation.[1]

- The software layer – Nvidia’s rapid software innovation improves performance on the same hardware while lowering the hurdles for adoption. This ranges from the NIMs described above, to NeMo microservices (which make it easier for organizations to fine-tune existing models for their own data, needs, and requirements), to its work optimizing existing models to run better on Nvidia’s existing hardware. For example, its recent TensorRT-LLM software optimizes LLMs for inference, showing a 2.9 times improvement in performance thanks to incorporating innovations such as in-flight batching.[2]

- AI algorithms – The AI algorithms themselves are rapidly improving – some recent innovations include models from the Mixture-of-Experts (MoE) family. MoE models split the total number of parameters they have between a number of “experts.” These experts are each trained to be particularly good at inferring a particular part of the data (essentially becoming experts on some of the data). A router layer then routes each token to the appropriate expert. Each of the experts, which could be MoEs by themselves, and the router layer are also trained. This specialization enables reaching high-quality results with fewer parameters activated at each run. The benefit of MoE models is that they are faster (in both training and inferencing) because not all parameters are activated on all data points. For example, Databricks’ new DBRX model[3], a MoE-type model, beat existing models on various quality benchmarks, including a score of 70% on a programming benchmark (even better than the much larger GPT-4’s 67%). Databricks compared its current model with the MPT-7B model it released in May of 2023, and the new model required 3.7 times less compute (as measured by FLOPs) to reach the same quality – thanks to various algorithmic improvements, including the MoE architecture, various optimizations, and better pre-training data. Additionally, “DBRX inference throughput is 2-3x higher than a 132B non-MoE model.” Another example of the pace of innovation is Claude 3 Opus (from Anthropic), which reached an 84.9% score on the same programming benchmark, 86.8% on multiple choice questions (vs. 86.4% for GPT-4), 95.4% on reasoning (vs. 95.3%), 95% on grade school math (vs. 92%), and 60.1% on a math problems benchmark (vs. 52.9%).[4]

- Another way to look at the pace of innovation is to compare the price OpenAI charges for its latest model, GPT-4 Turbo, with the price of its previous model, GPT-3.5 Turbo. For the earlier model, the price is $10 for 1 million input tokens and $30 for 1 million output tokens. For GPT-4 Turbo, the price is 95% lower at $0.50 for 1 million input tokens and $1.50 for 1 million output tokens.
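To make the Mixture-of-Experts routing described in the AI algorithms observation above more concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the architecture of DBRX or any other specific model: the class name `TinyMoELayer`, the choice of 8 experts with 2 active per token, the linear experts, and the random (untrained) weights are all hypothetical.

```python
import math
import random


def softmax(scores):
    """Convert raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


class TinyMoELayer:
    """Toy MoE layer: a linear router plus several linear experts.

    Weights are random placeholders; in a real model both the router
    and the experts are trained.
    """

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # One router weight vector per expert.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Each expert is a dim x dim weight matrix.
        self.experts = [[[rng.gauss(0, 1) for _ in range(dim)]
                         for _ in range(dim)]
                        for _ in range(num_experts)]

    def route(self, token):
        """Return (expert_index, weight) pairs for the top_k experts."""
        probs = softmax([dot(w, token) for w in self.router])
        ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
        chosen = ranked[: self.top_k]
        norm = sum(probs[i] for i in chosen)
        return [(i, probs[i] / norm) for i in chosen]

    def forward(self, token):
        """Run only the selected experts and blend their outputs."""
        out = [0.0] * len(token)
        for idx, weight in self.route(token):
            expert_out = [dot(row, token) for row in self.experts[idx]]
            out = [o + weight * e for o, e in zip(out, expert_out)]
        return out


layer = TinyMoELayer(dim=4, num_experts=8, top_k=2)
token = [0.5, -1.0, 0.25, 2.0]
routes = layer.route(token)
output = layer.forward(token)
active_fraction = layer.top_k / len(layer.experts)
print("selected experts:", [i for i, _ in routes])
print("fraction of expert parameters used for this token:", active_fraction)
```

In this toy setup only 2 of the 8 experts run for any given token, so a quarter of the expert parameters are touched per token. That is the mechanism behind the speed benefit described above, since not all parameters are activated on all data points.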

Roughly 18 months after the ChatGPT moment, GenAI is already showing rapid real-world adoption, with revenues of GenAI companies exceeding $3 billion, excluding the revenues that the large cloud providers (like Google and Meta) are generating from AI due to better engagement and better ad targeting. To put this in perspective, it took the cloud Software-as-a-Service (SaaS) companies 10 years to reach $3 billion in revenue.[5]

We believe we are in the early stages of a multi-decade disruption. Jensen Huang, Nvidia’s co-founder, president, and CEO, suggested at the conference that, just as raw materials came into the plant and final products came out during the industrial revolution, companies in the GenAI era will become AI factories with data as the raw material and tokens as the output. Tokens can represent words, images, videos, or controls of a robot.

Over time, as models continue to improve and the cost of running them declines, an increasing number of human tasks could be augmented or replaced entirely by AI. We expect decision-making to become much more data-driven, enabled by AI, as consumers, corporations, and governments alike take advantage of the vast amounts of unstructured data we generate, which is estimated to represent 90% of all data generated.[6]

With increasingly challenging demographics across many economies (especially in developed markets), a greater proportion of global growth must come from productivity enhancements. AI, in our view, is likely to be a key driver behind these productivity gains, and potentially, the basis for technological breakthroughs that help humanity solve a host of the most difficult challenges from climate change to finding cures for diseases that have remained unsolved. We believe this disruptive change will be truly profound.

Footnotes

[2] NVIDIA Hopper Leaps Ahead in Generative AI at MLPerf

[3] Introducing DBRX: A New State-of-the-Art Open LLM

[4] Introducing the next generation of Claude

Investors should consider the investment objectives, risks, and charges and expenses of the investment carefully before investing. The prospectus and summary prospectus contain this and other information about the Funds. You may obtain them from the Funds’ distributor, Baron Capital, Inc., by calling 1-800-99-BARON or visiting Baron Funds – Asset Management for Growth Equity Investments. Please read them carefully before investing.

Risks: Growth stocks can react differently to issuer, political, market and economic developments than the market as a whole. Non-U.S. investments may involve additional risks to those inherent in U.S. investments, including exchange-rate fluctuations, political or economic instability, the imposition of exchange controls, expropriation, limited disclosure and illiquid markets, resulting in greater share price volatility. Securities of small and medium-sized companies may be thinly traded and more difficult to sell. The Fund may not achieve its objectives. Portfolio holdings are subject to change. Current and future portfolio holdings are subject to risk.

The discussions of the companies herein are not intended as advice to any person regarding the advisability of investing in any particular security. The views expressed in this report reflect those of the respective portfolio managers only through the end of the period stated in this report. The portfolio manager’s views are not intended as recommendations or investment advice to any person reading this report and are subject to change at any time based on market and other conditions and Baron has no obligation to update them.

This report does not constitute an offer to sell or a solicitation of any offer to buy securities of Baron Global Advantage Fund by anyone in any jurisdiction where it would be unlawful under the laws of that jurisdiction to make such offer or solicitation.

Price/Earnings Ratio or P/E (next 12 months): is a valuation ratio of a company’s current share price compared to its mean forecasted 4 quarter sum earnings per share over the next twelve months. If a company’s EPS estimate is negative, it is excluded from the portfolio-level calculation. Free cash flow (‘FCF’) represents the cash that a company generates after accounting for cash outflows to support operations and maintain its capital assets.

BAMCO, Inc. is an investment adviser registered with the U.S. Securities and Exchange Commission (SEC). Baron Capital, Inc. is a broker-dealer registered with the SEC and member of the Financial Industry Regulatory Authority, Inc. (FINRA).

Editor’s Note: The summary bullets for this article were chosen by Seeking Alpha editors.