Summary:

- Nvidia’s key partner, Super Micro Computer, recently reported the delivery of direct liquid cooling (DLC) systems for NVDA chips.

- NVDA’s Blackwell AI chips, when combined with DLC, could fundamentally shift the cost structure for AI developers and end users.

- I expect to see this catalyst reflected in NVDA’s upcoming Q2 earnings report.

- The potential energy savings and performance boost are significant enough that, in my view, they will materially widen NVDA’s long-term competitive moat.


NVDA stock Q2: Potential impacts from direct liquid cooling

I last wrote on Nvidia Corporation (NASDAQ:NVDA) earlier this month (on August 1, 2024, to be exact), in an article titled “Nvidia: Inventory And LLM Demand Point To Another Monstrous Quarter”. As the title suggests, that article focused on recent developments in its inventory and in demand for training large language models (LLMs). Quote:

Recent inventory data shows a buildup for key rivals such as AMD. In contrast, NVDA sits on record low inventory. Together with its higher unit price, this serves as a clear sign for robust volume and superior pricing power for NVDA. I further project demand from training/deploying Large Language Models to continue. These catalysts all point to another quarter of outsized growth for NVDA.

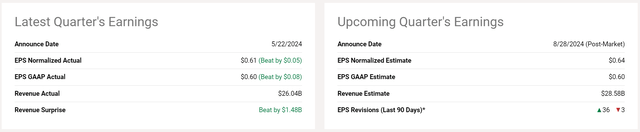

Since my last article, there have been several new developments that merit a follow-up analysis. In my mind, the most important developments are twofold. First, its key partner, Super Micro Computer, Inc. (SMCI), released its fiscal Q4 earnings report (ER) in the middle of the month. The SMCI ER described several developments relevant to NVDA, in particular the progress it has made on its direct liquid cooling (DLC) technologies. In my view, advancements on this front, combined with NVDA’s new chips, could lead to a fundamental shift in the cost structure of AI applications.

Second, NVDA’s own Q2 ER is approaching. The company is scheduled to report its fiscal Q2 earnings on Aug 28, 2024, after market close. Many analysts have recently updated their forecasts, as you can see from the chart below. The large majority of the revisions were upward (36 of them), with only 3 downward revisions. The consensus EPS forecast currently stands at $0.64, compared with reported EPS of only $0.25 for the same quarter in 2023. Thus, the forecasts point to another quarter of triple-digit growth.
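As a quick sanity check on the “triple-digit growth” claim, here is a minimal Python sketch that uses only the EPS and revision figures cited above. The helper name `yoy_growth` is purely illustrative.

```python
# Figures cited in the text: consensus fiscal Q2 EPS and the year-ago reported EPS.
CONSENSUS_EPS = 0.64
YEAR_AGO_EPS = 0.25

# Analyst EPS revisions cited in the text.
UPWARD_REVISIONS = 36
DOWNWARD_REVISIONS = 3


def yoy_growth(current: float, prior: float) -> float:
    """Year-over-year growth as a fraction, where 1.0 means +100%."""
    return current / prior - 1.0


growth = yoy_growth(CONSENSUS_EPS, YEAR_AGO_EPS)
upward_share = UPWARD_REVISIONS / (UPWARD_REVISIONS + DOWNWARD_REVISIONS)

print(f"Implied YoY EPS growth: {growth:.0%}")
print(f"Share of revisions that were upward: {upward_share:.0%}")
```

If the consensus figure is met, the implied growth is roughly 156%, which is consistent with the triple-digit reading.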

Given these developments, the remainder of this article will be a preview of NVDA’s Q2 earnings. I will explain why I fully share the analysts’ bullish outlook for its upcoming Q2.

NVDA stock and SMCI liquid-cooled clusters

The growth drivers I detailed in my last article are, of course, still valid. To recap, these drivers include superior pricing power and robust demand, both reflected in its current record-low inventory.

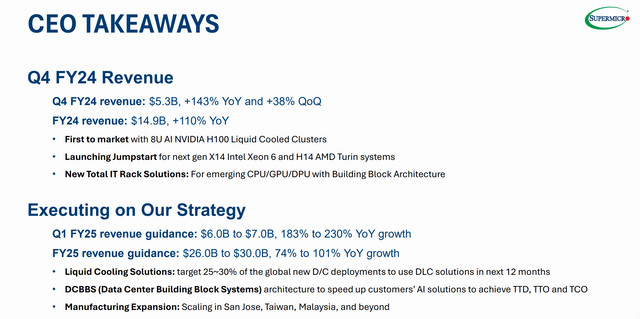

Therefore, here I want to concentrate on a new catalyst that was reported in SMCI’s fiscal Q4 ER. I want to stay on point and not turn this analysis into an earnings review of SMCI’s Q4, so I will risk being terse and point out only the parts most relevant to my thesis. Overall, SMCI reported a monstrous quarter in my view, with 143% YoY growth and 38% QoQ growth, as seen in the slide below. As SMCI is a key partner of NVDA, such growth rates serve as another supporting sign of NVDA’s own growth in the past quarter.

What I really want to focus on here is the development of SMCI’s DLC technology. In its Q4 ER, SMCI reported the delivery of its 8U liquid-cooled clusters for NVIDIA’s H100 AI chips, the first such clusters to market.

Next, I will explain why the advancement in DLC and NVDA chips could form a killer combination for AI applications.

SMCI 2024 FY Q4 earnings slide

NVDA stock: cooling is an AI bottleneck

Out of all the roadblocks (technical, legal, ethical, etc.) to broader AI deployment, cooling and the associated energy consumption are among the most important. NVDA’s H100 chip draws 700W of power at peak operation, on the order of the average electricity draw of an entire American household. Practically all of this electrical power ends up as waste heat that must be removed. This heat is also concentrated in a tiny volume, given the small footprint of modern chips. A large amount of heat confined to a small volume is the perfect recipe for a cooling nightmare.
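To put “a large amount of heat confined to a small volume” into rough numbers, the sketch below computes the heat flux through an H100 die and the energy one chip would use running flat out for a year. The 814 mm² die area is my assumption (the commonly cited size of the GH100 die), and treating all 700W as heat to be removed is a simplification.

```python
# Back-of-the-envelope thermal numbers for a single H100-class chip.
PEAK_POWER_W = 700.0    # peak draw cited in the text
DIE_AREA_MM2 = 814.0    # assumption: commonly cited GH100 die area
HOURS_PER_YEAR = 24 * 365

# Assume essentially all electrical power becomes heat the cooler must remove.
die_area_cm2 = DIE_AREA_MM2 / 100.0          # 100 mm^2 per cm^2
heat_flux_w_per_cm2 = PEAK_POWER_W / die_area_cm2

# Upper bound on yearly energy: the chip runs at peak around the clock.
annual_energy_kwh = PEAK_POWER_W / 1000.0 * HOURS_PER_YEAR

print(f"Heat flux at peak: ~{heat_flux_w_per_cm2:.0f} W/cm^2")
print(f"Energy at continuous peak: ~{annual_energy_kwh:,.0f} kWh per year")
```

Under these assumptions, this works out to roughly 86 W/cm² and about 6,100 kWh per year for a single chip.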

Such a nightmare translates into many practical issues, and the top two on my list are reduced performance and high energy costs. To avoid overheating, chips do not (or cannot) run at their designed specifications all the time. Better cooling could thus help unleash the full potential of NVDA’s leading chips, and SMCI’s liquid-cooled racks are designed for exactly this purpose, as described in its following news release (emphasis added by me):

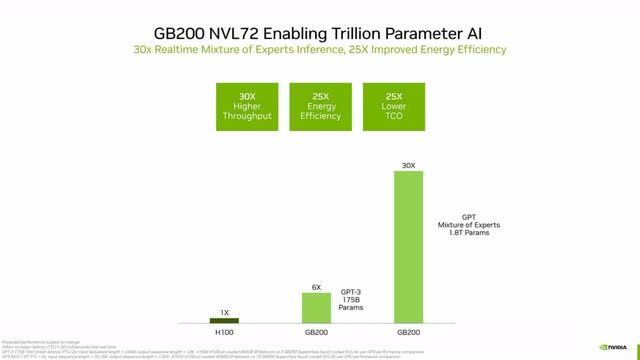

With Supermicro’s 4U liquid-cooled, NVIDIA recently introduced Blackwell GPUs can fully unleash 20 PetaFLOPS on a single GPU of AI performance and demonstrate 4X better AI training and 30X better inference performance than the previous GPUs with additional cost savings. Aligned with its first-to-market strategy, Supermicro recently announced a complete line of NVIDIA Blackwell architecture-based products for the new NVIDIA HGX™ B100, B200, and GB200 Grace Blackwell Superchip.

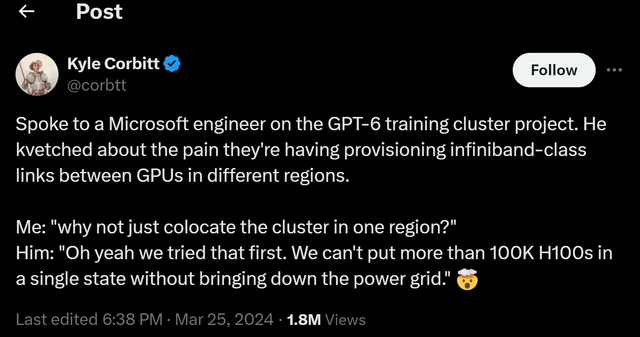

Note that a complete line of these racks is specially designed for NVDA’s Blackwell chips, which leads me to the next key practical issue: energy cost. In practice, data centers deploy high-end chips by the thousands, and their combined power demand can bring down a region’s power grid (see the following X.com comments from a Microsoft engineer).

A topic like this is always fun to discuss on social media. For business operations, however, it means an outsized electricity bill. To estimate the cost breakdown of training and deploying AI models, I gathered the following inputs from this Forbes report (slightly edited, with emphasis added by me):

- The cost of the chips amounted to millions of dollars. According to a technical overview of OpenAI’s GPT-3 language model, each training run required at least $5 million worth of GPUs.

- These models require many, many training runs as they are developed and tuned, so the final cost is far in excess of this figure. When asked at an MIT event in July whether the cost of training foundation models was on the order of $50 million to $100 million, OpenAI’s cofounder Sam Altman answered that it was “more than that” and is getting more expensive.

- The cost doesn’t end there. Running inference on the models, once trained, is also expensive. Estimates suggest that in January 2023, ChatGPT used nearly 30,000 GPUs to handle hundreds of millions of daily user requests. Sajjad Moazeni, a University of Washington assistant professor of electrical and computer engineering, says those queries may consume around 1 GWh each day.
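For a rough sense of scale, the sketch below asks how much of the ~1 GWh/day estimate the GPUs alone could account for. It assumes, hypothetically, that all ~30,000 GPUs draw the 700W H100 peak cited earlier around the clock; the actual GPU models and utilization in January 2023 are not given in the quoted report.

```python
GPU_COUNT = 30_000            # from the estimate quoted above
WATTS_PER_GPU = 700.0         # assumption: H100 peak draw, held continuously
HOURS_PER_DAY = 24
ESTIMATED_DAILY_GWH = 1.0     # Moazeni's estimate quoted above

WH_PER_GWH = 1e9

# Daily GPU-only energy in GWh under the assumptions above.
gpu_daily_gwh = GPU_COUNT * WATTS_PER_GPU * HOURS_PER_DAY / WH_PER_GWH
gpu_share = gpu_daily_gwh / ESTIMATED_DAILY_GWH

print(f"GPU-only energy: {gpu_daily_gwh:.3f} GWh/day")
print(f"Share of the ~1 GWh/day estimate: {gpu_share:.0%}")
```

Under these assumptions, the GPUs alone come to roughly 0.5 GWh per day, about half of the estimate. The sketch does not attempt to attribute the remainder.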

I need to assume an electricity price per kilowatt-hour (kWh) to convert the above consumption into a dollar amount. I will simply use my own electricity price of ~$0.14 per kWh (for broader context, electricity costs in the U.S. range from roughly $0.12 to $0.17 per kWh). Under this assumption, 1 GWh per day translates to an electricity bill of $140,000 PER DAY, or about $51 million per year. That is more than ten times the $5 million worth of GPUs cited for a single GPT-3 training run, and on the same order of magnitude as the $50 million to $100 million-plus cost of training a foundation model cited above.
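The conversion above is simple enough to script. Here is a minimal Python sketch that reruns it across the $0.12 to $0.17 per kWh range mentioned and compares each annual bill with the $5 million GPU figure from the Forbes excerpt. The function name `electricity_cost` is illustrative.

```python
DAILY_KWH = 1_000_000          # 1 GWh per day, from the estimate quoted above
DAYS_PER_YEAR = 365
GPU_UPFRONT_USD = 5_000_000    # GPUs for one GPT-3 training run, per the excerpt


def electricity_cost(kwh: float, price_per_kwh: float) -> float:
    """Dollar cost of `kwh` kilowatt-hours at a flat price per kWh."""
    return kwh * price_per_kwh


results = {}
for price in (0.12, 0.14, 0.17):   # low end, my own price, high end (USD per kWh)
    daily = electricity_cost(DAILY_KWH, price)
    annual = daily * DAYS_PER_YEAR
    results[price] = (daily, annual)
    print(
        f"${price:.2f}/kWh: ${daily:,.0f} per day, "
        f"${annual / 1e6:.1f}M per year, "
        f"{annual / GPU_UPFRONT_USD:.1f}x the GPU figure"
    )
```

At $0.14/kWh it reproduces the $140,000 per day and roughly $51 million per year figures. Even at the low end of the range, the annual bill is several times the $5 million GPU figure.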

NVDA’s Blackwell boasts both improved performance and drastically higher energy efficiency. As you can see from the slide below, Blackwell is designed to deliver 30x higher throughput and 25x higher energy efficiency compared with earlier generations. With the help of liquid cooling, these chips can spend more time running at peak performance. The DLC racks are themselves more energy efficient and thus require less power to operate. All of these point to a significant reduction in electricity consumption, and thus lower energy costs for training and deploying large-scale AI models.
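To illustrate what the 25x efficiency claim could mean in dollar terms, the sketch below applies it to the ~$51 million per year inference bill estimated earlier. This is a hypothetical upper bound: it assumes the same workload, the same $0.14/kWh price, and that NVIDIA’s headline 25x figure is fully realized in practice, which real deployments may well fall short of. It also leaves out any separate savings from DLC, since no figure for those is given here.

```python
BASELINE_DAILY_KWH = 1_000_000   # 1 GWh per day, from the estimate above
PRICE_PER_KWH = 0.14             # the author's electricity price
DAYS_PER_YEAR = 365
EFFICIENCY_GAIN = 25.0           # assumption: headline 25x fully realized


def annual_energy_cost(daily_kwh: float, price: float, days: int = DAYS_PER_YEAR) -> float:
    """Annual electricity bill at a flat price per kWh."""
    return daily_kwh * price * days


baseline_cost = annual_energy_cost(BASELINE_DAILY_KWH, PRICE_PER_KWH)
# The same work at 25x the energy efficiency needs 1/25 of the energy.
blackwell_cost = annual_energy_cost(BASELINE_DAILY_KWH / EFFICIENCY_GAIN, PRICE_PER_KWH)
savings = baseline_cost - blackwell_cost

print(f"Baseline: ${baseline_cost / 1e6:.1f}M per year")
print(f"At 25x:   ${blackwell_cost / 1e6:.2f}M per year")
print(f"Savings:  ${savings / 1e6:.1f}M per year")
```

Under these assumptions, the bill falls to roughly $2 million per year. Dividing the energy, rather than the final bill, by the gain keeps the price assumption explicit.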

Other risks and final thoughts

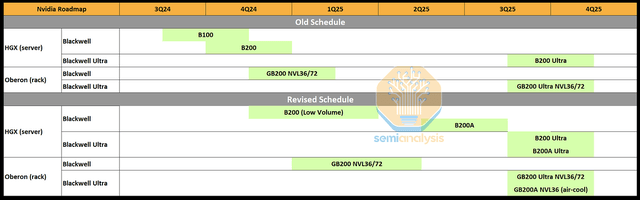

In terms of downside risks specific to my forecast above, the top risk is the technical issues and delays Blackwell is currently experiencing. The Blackwell product line has run into technical issues in reaching its volume production targets. More details are summarized in the following chart. More specifically:

… setback has impacted their production targets for Q3/Q4 2024 as well as the first half of next year. This affects Nvidia’s volume and revenue, as we detailed in the Accelerator Model on July 22nd. In short Nvidia’s Hopper is extended in lifespan and shipments to make up for a chunk of the delays. Product timelines for Blackwell are pushed out some, but volumes are affected more than the first shipment timelines.

All told, I believe these setbacks are only temporary and do not change the underlying forces at work here. To recap, I believe the combination of NVDA’s next-generation AI chips with DLC has the potential to fundamentally shift the budget allocation of AI developers and end users alike. I further expect this shift to be reflected in NVDA’s upcoming financial results for the past quarter. Looking further out, my view is that the potential energy savings are large enough to further widen NVDA’s competitive moat.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.

As you can tell, our core style is to provide actionable and unambiguous ideas from our independent research. If you share this investment style, check out Envision Early Retirement. It provides at least one in-depth article per week on such ideas.

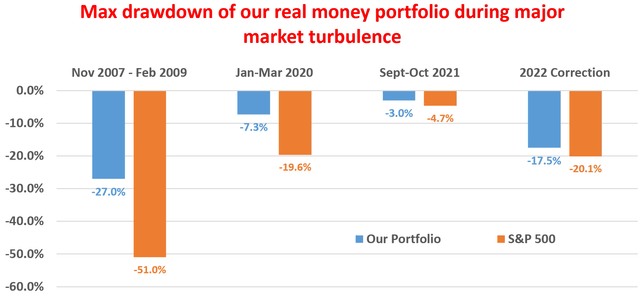

We have helped our members not only beat the S&P 500 but also avoid heavy drawdowns despite the extreme volatility in BOTH the equity AND bond markets.

Join for a 100% Risk-Free trial and see if our proven method can help you too.