Summary:

- 2023 Q1 saw a downturn in data center infrastructure spending, a pause that might have been the result of a pivot towards generative AI models like ChatGPT.

- Microsoft Corporation has eclipsed Google with generative AI copilots for its search engine and browser, which grew out of its collaboration with OpenAI.

- Microsoft has made a huge investment in supercomputing infrastructure to support its new AI services.

- Microsoft’s AI infrastructure revolves around Nvidia Corporation’s GPU data center accelerators such as the H100, unveiled in 2022.

- Nvidia captures the generative AI acceleration market by having hardware ready to go.


Since OpenAI launched ChatGPT last November, it has become the fastest-growing consumer application, with over 100 million users by January. It has also caused cloud service providers to re-evaluate what AI can do. Microsoft Corporation (MSFT) has gone all-in on generative AI (the general term for ChatGPT-like AIs) and hosts all of OpenAI’s services.

Nvidia Corporation (NASDAQ:NVDA) CEO Jensen Huang recently declared that generative AI represents an “iPhone moment.” While the long-term impact of generative AI remains to be seen, its immediate impact appears to be to alter, perhaps irrevocably, the approach to cloud infrastructure. As the exclusive provider of hardware acceleration to Microsoft for generative AI services, Nvidia should be the provider of choice for AI acceleration in the cloud.

The downturn in data center spending may reflect the impact of ChatGPT

In the first quarter of this year, the major cloud service providers, Microsoft, Alphabet Inc./Google (GOOG), and Amazon.com, Inc. (AMZN), all grew cloud services revenue substantially. Microsoft’s Intelligent Cloud segment grew revenue by 16% y/y. Google Cloud grew revenue by 28% y/y. Amazon Web Services (“AWS”) grew revenue by 16% y/y.

Despite this growth, there has been a downturn in spending for cloud services hardware, most notably at Intel Corporation (INTC), where Data Center revenue was down 39% y/y. Furthermore, Intel’s outlook for the second quarter implied a further, though unspecified, y/y revenue decline for Data Center. Things were better at Advanced Micro Devices, Inc. (AMD), where Data Center revenue was flat y/y, but the company guided for a y/y Data Center revenue decline in Q2.

How do we reconcile continued cloud growth with the apparent downturn in cloud infrastructure spending? One could always just attribute it to caution in the face of macroeconomic headwinds, combined perhaps with some digestion of hardware.

But I think there’s a more fundamental technological shift underway, which has been accelerated by the advent of generative AI. And that’s the shift in focus from traditional CPUs as the main computational engine of the data center to a mix of CPUs and data accelerators, which are mostly GPU-based.

This is an approach that Nvidia has championed for years, arguing that GPU acceleration is inherently more energy-efficient and cost-effective than CPUs alone. The counter-argument to this has been that CPUs are more versatile, while GPUs are restricted to certain tasks that benefit from the massive parallelism of the GPU.

But the range of tasks that benefit from the GPU has been growing. Energy-efficient supercomputing is now the almost exclusive domain of GPU acceleration. In commercial cloud services, GPUs accelerate everything from game streaming to the metaverse. And, of course, AI.

In this regard, AMD, with a substantial portfolio of GPUs, is better positioned than Intel, and this may in part account for AMD’s much better Q1 Data Center results. However, the advent of generative AI has upended the market for AI acceleration.

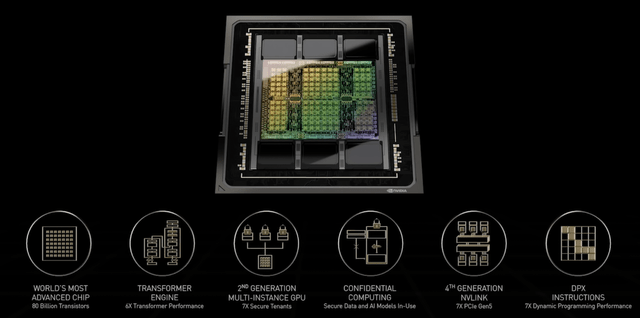

Arguably, GPTs (short for Generative Pre-trained Transformers) have rendered conventional GPU acceleration obsolete. When Nvidia unveiled the “Hopper” H100 data center accelerator in March 2022, it included a “Transformer Engine” to accelerate generative AI workloads. The Transformer Engine builds on Nvidia’s Tensor Cores to provide as much as a 6X speed improvement in training transformers.

In a white paper released at the same time, Nvidia explained the motivations for the Transformer Engine:

Transformer models are the backbone of language models used widely today from BERT to GPT-3 and require enormous compute resources. Initially developed for natural language processing (NLP), Transformers are increasingly applied across diverse fields such as computer vision, drug discovery, and more. Their size continues to increase exponentially, now reaching trillions of parameters and causing their training times to stretch into months, which is impractical for business needs due to the large compute requirements. For example, Megatron Turing NLG (MT-NLG) requires 2048 NVIDIA A100 GPUs running for eight weeks to train. Overall, transformer models have been growing much faster than most other AI models at the rate of 275x every two years for the past five years.

In 2022, Nvidia’s Transformer Engine seemed like merely an interesting piece of technology, a sensible innovation for a company that wanted to stay relevant to the AI research community. At the time, I had no sense of how critical it would become for cloud providers like Microsoft wishing to make GPTs available to the general public.

How Microsoft leaped ahead with the OpenAI collaboration

When Microsoft first unveiled its generative AI-powered search and browser back in February, I noted that the company seemed to be much further along in integrating GPTs into its products than Google. And now, as Microsoft offers its AI “copilots” as standard features, Google’s competing “Bard” is still experimental.

It’s clear that Microsoft has engineered a major coup in AI through its collaboration with OpenAI. Microsoft’s Azure cloud service provides all the hosting for partner OpenAI, including ChatGPT and more advanced generative AIs. Microsoft has also evidently had access to OpenAI’s generative AI technology at the code level and has incorporated it into the various AI “copilots” that the company now offers.

But wait, the reader may ask, how did Microsoft develop such a close and apparently exclusive relationship with a non-profit research institution? Well, it turns out that OpenAI isn’t exactly a non-profit. In 2019, OpenAI created OpenAI LP, a for-profit (“capped-profit”) company controlled by the non-profit. This seems to have been done with the sole intent of providing a recipient for a $1 billion investment from Microsoft.

Then in January of this year, Microsoft invested another $10 billion in OpenAI LP, as reported by Bloomberg:

The new support, building on $1 billion Microsoft poured into OpenAI in 2019 and another round in 2021, is intended to give Microsoft access to some of the most popular and advanced artificial intelligence systems. Microsoft is competing with Alphabet Inc., Amazon.com Inc., and Meta Platforms Inc. to dominate the fast-growing technology that generates text, images and other media in response to a short prompt.

At the same time, OpenAI needs Microsoft’s funding and cloud-computing power to crunch massive volumes of data and run the increasingly complex models that allow programs like DALL-E to generate realistic images based on a handful of words, and ChatGPT to create astonishingly human-like conversational text.

$10 billion is a lot to pay for what amounts to a bunch of code, but it’s code that no one outside of OpenAI and Microsoft has access to.

Microsoft’s huge generative AI investment

In order to get the jump on Google, whose researchers invented the transformer architecture on which GPTs are built, Microsoft had to make a massive investment not only in OpenAI’s software but also in hardware, primarily Nvidia hardware. How massive, we’re only beginning to learn through some Microsoft blog posts. A March 13 blog post by John Roach described the magnitude of the investment in general terms:

In 2019, Microsoft and OpenAI entered a partnership, which was extended this year, to collaborate on new Azure AI supercomputing technologies that accelerate breakthroughs in AI, deliver on the promise of large language models and help ensure AI’s benefits are shared broadly.

The two companies began working in close collaboration to build supercomputing resources in Azure that were designed and dedicated to allow OpenAI to train an expanding suite of increasingly powerful AI models. This infrastructure included thousands of NVIDIA AI-optimized GPUs linked together in a high-throughput, low-latency network based on NVIDIA Quantum InfiniBand communications for high-performance computing.

The scale of the cloud-computing infrastructure OpenAI needed to train its models was unprecedented – exponentially larger clusters of networked GPUs than anyone in the industry had tried to build, noted Phil Waymouth, a Microsoft senior director in charge of strategic partnerships who helped negotiate the deal with OpenAI.

Despite the massive infrastructure investment, the customer base at this point was limited mostly to researchers at OpenAI and within Microsoft. Nvidia CEO Jensen Huang and others have called the shift from research program to commercial cloud service “the industrialization of AI.”

During his March 2023 GPU Technology Conference keynote, CEO Huang referred to the required infrastructure as “AI factories.” In designing the AI factory that OpenAI needed, Microsoft certainly had ample opportunity to evaluate various hardware alternatives before settling on Nvidia.

Nvidia’s likely sales into Microsoft

The construction of these AI factories is still underway and will continue as demand for generative AI services expands. A sense of the business opportunity for Nvidia can be derived from another blog post by Matt Vegas, Principal Product Manager for Azure HPC. In the post, he announces that Microsoft is starting to offer virtual machine instances with a minimum of 8 Nvidia Hopper H100 GPUs. This is scalable for AI use into “thousands” of H100s.

Essentially, this is an H100-based DGX system in which the 8 H100s are cross-connected by NVLink so that they can function as a single unified GPU. The DGX systems are then linked to each other via Nvidia InfiniBand fiber optic links with a total data bandwidth of 3.2 Tb/sec. That’s right, 3.2 × 10^12 bits/sec.
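A quick unit check makes the scale concrete, since “tera” means 10^12 and network bandwidth is quoted in bits rather than bytes. The sketch below is plain arithmetic on the 3.2 Tb/s figure from the text, assuming decimal (SI) prefixes, which is the usual convention for network links; it uses integers so the result is exact.

```python
# Unit check for the InfiniBand bandwidth figure: 3.2 Tb/s expressed
# in bits per second and in gigabytes per second (decimal SI prefixes).

TERA = 10**12
GIGA = 10**9
BITS_PER_BYTE = 8

# 3.2 Tb/s, written as 32 x 10^12 / 10 to stay in exact integer arithmetic
total_bits_per_sec = 32 * TERA // 10
total_gigabytes_per_sec = total_bits_per_sec // BITS_PER_BYTE // GIGA

print(f"{total_bits_per_sec:,} bits/s")      # 3,200,000,000,000 bits/s
print(f"{total_gigabytes_per_sec} GB/s")     # 400 GB/s
```

In other words, each 8-GPU instance can move roughly 400 gigabytes per second to its neighbors over InfiniBand, on top of the NVLink traffic inside the instance.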

How many actual H100 accelerators Microsoft has bought is difficult to determine, but it is apparently in the thousands. We can get a sense of how many thousands by estimating the number of H100s required to host OpenAI services.

OpenAI has been very secretive about the details of ChatGPT, so it’s difficult to determine the hardware resources that a single instance of ChatGPT requires. Tom’s Hardware has an interesting article in which the author ran a less capable GPT on a PC equipped with an RTX 4090 GPU (Nvidia’s current top of the line for consumers).

If a single GPU with 24 GB of VRAM can run the lesser GPT, then I would estimate that a single H100 with 80 GB of VRAM would be sufficient to run a ChatGPT instance. This is only an estimate. In fact, an instance of ChatGPT may have its processing spread over multiple H100s and access more than 80 GB of VRAM, depending on the workload. More advanced GPTs, either from OpenAI or Microsoft, might require even more.

OpenAI reportedly receives 55 million unique visitors per day, with an average visit time of 8 minutes. If I assume each visitor gets exclusive use of an H100 for the duration of the visit, that comes to 440 million H100-minutes per day which, divided by the 1,440 minutes in a day, implies about 300,000 H100s in the Azure cloud service to handle the load. That would amount to 37,500 eight-GPU H100 DGX systems, worth about $3.75 billion in revenue, probably spread over a number of quarters.
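The fleet-size estimate is an application of Little’s law from queueing theory: the average number of visitors being served at once equals the arrival rate multiplied by the time each visitor spends. The sketch below reproduces the arithmetic under the article’s assumptions (55 million visits per day, 8 minutes each, one H100 per active visitor, 8 H100s per system). It adds one assumption of its own, labeled in the comments: that visits are spread evenly over 24 hours, so the result is an average load rather than a peak. The per-system price it prints is not a quoted price; it is simply what the $3.75 billion figure implies for 37,500 systems.

```python
# Back-of-envelope sizing of the H100 fleet behind ChatGPT, using the
# article's assumptions: 55 million visits/day, 8 minutes per visit, and
# one H100 dedicated to each visitor for the length of the visit.
# Extra assumption (not in the article): visits are spread evenly over
# 24 hours, so this is an average load, not a peak load.

MINUTES_PER_DAY = 24 * 60  # 1,440
GPUS_PER_SYSTEM = 8        # 8 H100s per DGX-class system


def concurrent_sessions(visits_per_day: float, minutes_per_visit: float) -> float:
    """Little's law: average concurrency L = arrival rate (lambda) x time in system (W)."""
    arrivals_per_minute = visits_per_day / MINUTES_PER_DAY
    return arrivals_per_minute * minutes_per_visit


gpus = concurrent_sessions(55_000_000, 8)    # one H100 per concurrent visitor
systems = gpus / GPUS_PER_SYSTEM             # 8-GPU systems needed

# What the article's $3.75 billion for 37,500 systems implies per system
implied_price_per_system = 3.75e9 / 37_500

print(f"Average concurrent visitors (H100s): {gpus:,.0f}")
print(f"8-GPU systems needed: {systems:,.0f}")
print(f"Implied price per system: ${implied_price_per_system:,.0f}")
```

Unrounded, the sketch gives roughly 305,600 H100s and 38,200 systems, consistent with the rounded 300,000 and 37,500 above. Because real traffic is not uniform through the day, a fleet sized for peak demand would presumably need to be larger under the same one-GPU-per-visitor assumption.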

Up to Nvidia’s fiscal 2023 Q4, most of this infrastructure was probably already accounted for in Nvidia’s Data Center business segment revenue. However, the potential for expansion of GPT-like AI services on Azure implies that there is much more to come. Microsoft’s H100-based service, called ND H100 v5, is currently offered for preview only. This is probably to ensure that the available hardware is not overloaded.

Investor takeaways: Nvidia “gets there firstest with the mostest” in generative AI

Almost every hardware provider in the cloud services space has noted that generative AI represents a huge opportunity. And they’re right, but I doubt that opportunity will be uniformly distributed.

There’s an old saying that battles are won by the side that “gets there firstest with the mostest,” and this describes Nvidia’s AI acceleration business. While competitors are talking about capturing future opportunities, Nvidia is capturing the present market for generative AI acceleration.

Nvidia has done this by staying abreast of current developments and presciently anticipating future needs. Nvidia was there from the beginning of OpenAI, when Jensen Huang personally delivered the first DGX system to OpenAI in 2016.

Nvidia Corporation will report results for its fiscal 2024 Q1 next week (expected May 24 post-market). As usual, I’ll be sharing detailed financial projections for the quarter with Rethink Technology members in advance of the report. I fully expect Data Center revenue to grow significantly y/y, in contrast with Nvidia’s competitors. I remain long Nvidia Corporation stock and rate it a Buy.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA, MSFT either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.

Consider joining Rethink Technology for in-depth coverage of technology companies such as Apple.