Summary:

- While Nvidia Corporation bears argue that cloud customers producing their own chips pose a risk to Nvidia’s revenue growth, they are overlooking Nvidia’s counterstrike: offering its own cloud services.

- CEO Jensen Huang has already cleverly arranged his chess pieces in anticipation of his customers’ future moves by launching DGX Cloud.

- Despite potential risks, Nvidia’s expansion into cloud services helps sustain the value of its AI chips in the cloud industry, making the stock a buy.


NVIDIA Corporation (NASDAQ:NVDA) shares have soared over 175% YTD, as the company is strongly positioned to capitalize on the artificial intelligence (“AI”) revolution that is just getting started. Given the monstrous rally, the bears are urging investors to tread cautiously amid rising risks to the bull case. One key bear argument is that Nvidia’s largest cloud customers are producing their own chips for use in their data centers, posing a risk to Nvidia’s revenue growth going forward. However, the bears are missing the fact that while cloud providers are vertically integrating downwards, Nvidia is vertically integrating upwards by offering its own cloud services, supporting the bull case for the stock.

The last earnings release, for fiscal Q1 2024, stunned both bulls and bears with Nvidia’s jaw-dropping revenue guidance of around $11 billion for Q2 FY24. This would imply revenue growth of roughly 53% over Q1 FY24 revenue of $7.19 billion, primarily driven by Data Center growth. Data Center is Nvidia’s largest revenue segment, making up 60% of total revenue in Q1 FY24. The tech giant is strongly positioned to capitalize on the shift towards accelerated computing across data centers, which translates into solid demand for Nvidia’s H100 and A100 AI chips. However, this bull story is tempered by a key risk facing the stock.

Nvidia’s cloud customers producing their own chips

Nvidia bears love to point out that while Nvidia may be the sole arms dealer in the AI revolution at the moment, the fact that its largest cloud customers (AWS, Azure and Google Cloud) are producing their own chips is a growing concern. It could mean significantly lower demand for Nvidia’s chips going forward, undermining Nvidia’s ability to command a valuation as lofty as 62x forward earnings.
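To make the 62x figure more concrete, the short Python sketch below works through two pieces of standard multiple arithmetic: the earnings yield (the inverse of the forward P/E) and the forward EPS growth that would be needed for the multiple to fall to a lower level if the share price stayed flat. The function names are hypothetical, and the 31x target is purely illustrative, not a forecast.

```python
def earnings_yield(forward_pe: float) -> float:
    """Earnings yield is the inverse of the price-to-earnings multiple."""
    if forward_pe <= 0:
        raise ValueError("forward_pe must be positive")
    return 1.0 / forward_pe


def required_eps_growth(current_pe: float, target_pe: float) -> float:
    """EPS growth needed for the multiple to fall from current_pe to target_pe
    while the share price stays unchanged.

    Since price = pe * eps, holding price fixed gives
    eps_target / eps_current = current_pe / target_pe.
    """
    if current_pe <= 0 or target_pe <= 0:
        raise ValueError("multiples must be positive")
    return current_pe / target_pe - 1.0


if __name__ == "__main__":
    pe = 62.0  # forward multiple cited in the article
    print(f"Earnings yield at {pe:.0f}x: {earnings_yield(pe):.2%}")
    # Illustrative only: EPS growth needed for 62x to compress to 31x at a flat price
    print(f"EPS growth to reach 31x at a flat price: {required_eps_growth(pe, 31.0):.0%}")
```

At 62x the earnings yield is about 1.6%, and forward earnings would need to double for the stock to trade at 31x with no change in price, which illustrates why the durability of Nvidia’s growth matters so much at this valuation.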

Chips designed internally by Cloud Service Providers [CSPs] are better integrated into their servers and software designs, allowing for greater cost and processing efficiency, as well as the ability to offer new cloud services that off-the-shelf chips from the likes of Nvidia and Advanced Micro Devices, Inc. (AMD) would not allow. Consequently, CSPs strive to lure customers towards cloud services powered by their own chips rather than Nvidia’s, highlighting the improved performance and cost-effectiveness.

The perceived risk is that Nvidia’s chips become less relevant in the CSP industry over time as end-customers migrate towards cloud services that are increasingly powered by CSPs’ own chips.

Nvidia’s counterstrike

The fact that Nvidia’s largest customers are producing their own chips certainly poses a risk to Nvidia’s future sales growth. However, amid all the talk around CSPs’ internal chip projects undermining demand for Nvidia’s chips going forward, the bears are overlooking the fact that Nvidia has already been expanding into offering its own cloud solutions.

Amid CSPs developing their own AI chips, Nvidia’s executives realize that the company’s biggest customers could become its competitors going forward. They were certainly not going to take this threat lying down, and have already made a move to mitigate this risk. In March 2023, Nvidia introduced its own cloud service, DGX Cloud:

“an AI supercomputing service that gives enterprises immediate access to the infrastructure and software needed to train advanced models for generative AI and other ground-breaking applications.”

Nvidia offers access to its cloud services both directly and through partnerships with major cloud providers, which currently include Microsoft Corporation (MSFT) Azure, Google (GOOG) Cloud, and Oracle (ORCL) Cloud Infrastructure. In its current setup, Nvidia sells the data center hardware to its partners and then leases it back to run DGX Cloud. This is, of course, not Nvidia’s first foray into cloud services, as it has also offered NVIDIA GPU Cloud [NGC] since 2017.

Given that cloud providers are seeking to trumpet their own AI-specific cloud solutions, why are they offering their clients access to Nvidia’s DGX Cloud?

- Nvidia’s superior AI capabilities: Although cloud providers seek to showcase how their own cloud solutions help customers develop AI-driven products and services, Nvidia’s cloud service offers valuable capabilities that serve clients’ increasing demand for industry-leading AI solutions. This induces cloud providers to offer Nvidia’s services alongside their own, driven by the fear of missing out on superior capabilities they could offer their clients. If they don’t offer access to DGX Cloud, they risk losing clients to competitors that do. As a result, these CSPs are inclined to partner with Nvidia to avoid falling behind in the AI race.

- Nvidia’s brand power: Amid the onset of ChatGPT, Nvidia has gained widespread acclaim for its ability to offer superior, industry-leading, AI-centric solutions. C-suites across the corporate world that are currently planning to develop and deploy their own AI solutions will inevitably be well aware of the technology solutions that Nvidia offers in this field. Nvidia’s brand recognition and power have therefore grown over the past several months. Businesses that want to stay competitive in their respective industries amid the AI revolution will want to invest in the highest-quality infrastructure available, and given Nvidia’s wide lead in this space, the tech giant’s AI solutions will be top of mind. As a result, CSPs feel the need to partner with Nvidia to offer industry-leading AI capabilities and satisfy customers’ demand for the best available AI solutions.

Hence, while CSPs are striving to design chips optimized for their own cloud services in order to offer enhanced performance more cost-effectively, Nvidia is offering DGX Cloud services optimized for its own GPUs. This ensures the availability of cloud services that leverage the full potential of Nvidia’s technology, offering optimal performance and cost-efficiency, and counters the threat of CSPs’ services powered by their own chips gaining prevalence.

Based on what CEO Jensen Huang shared on the last earnings call, the partnerships are indeed tight-knit, creating new market opportunities for both Nvidia and the partners through synergies:

“it allows us…to create new applications together and develop new markets together. And we go-to-market as one team and we benefit by getting our customers on our computing platform and they benefit by having us in their cloud…

So, our goal really is to drive architecture to partner deeply in creating new markets and the new applications that we’re doing and provide our customers with the flexibilities to run in their — in their everywhere, including on-prem and so, that — those were the primary reasons for it and it’s worked out incredibly. Our partnership with the three CSPs and that we currently have DGX Cloud in and their sales force and marketing teams, their leadership team is really quite spectacular. It works great.”

The tightly knit nature of these partnerships is a testament to just how valuable Nvidia’s technology continues to be to these leading cloud providers. By fostering deeper relationships with its biggest customers through DGX Cloud, Nvidia strives to secure its presence in the cloud industry, a presence that should strengthen over time through the network effect.

Moreover, cloud providers thrive and build moats through fostering an ecosystem around their services, conducive to a network effect. As more customers join a cloud platform, it inevitably leads to advancements in the platform’s functionalities, as both the enterprises’ developers as well as third-party developers create more applications and integrations to enhance the capabilities of the cloud platform to serve evolving industry-specific needs. These constantly advancing capabilities in turn attract even more cloud customers across various industries to the platform, thereby bolstering a virtuous network effect.

Now, going back to Nvidia, the fact that the major cloud providers are currently offering Nvidia’s GPU-optimized cloud services alongside their own services opens the door for an ecosystem to be built around DGX Cloud, whereby both end-customers’ developers and third-party developers build applications and integrations around the DGX platform, augmenting the value of Nvidia’s cloud infrastructure over time. As the functionalities and ecosystem of Nvidia’s cloud services continue to grow, DGX Cloud could become an increasingly prominent force in the cloud industry, in turn securing continuous demand for Nvidia’s chips and other data center hardware products.

On top of DGX Cloud, Nvidia’s AI platform also includes NVIDIA AI Enterprise and NVIDIA AI Foundations.

Powered by DGX Cloud:

“NVIDIA AI Foundations is a set of cloud services that advance enterprise-level generative AI and enable customization across use cases in areas such as text (NVIDIA NeMo™), visual content (NVIDIA Picasso), and biology (NVIDIA BioNeMo™).”

NVIDIA AI Enterprise is:

“the software layer of the NVIDIA AI platform, which provides end-to-end AI frameworks and pretrained models to accelerate data science pipelines and streamline the development and deployment of production AI.”

NVIDIA AI Enterprise does not just run on DGX Cloud, but is:

“certified to run on GPU-accelerated public cloud instances, including AWS, Azure, Google Cloud, and Oracle Cloud Infrastructure…NVIDIA AI Enterprise is also available on major cloud marketplaces.”

NVIDIA AI Enterprise essentially aims to allow cloud customers to develop and deploy their AI projects faster. As more businesses use NVIDIA AI Enterprise, whether through DGX Cloud or through the CSPs’ platforms, Nvidia learns more about how end-customers in various industries are seeking to deploy AI. This in turn feeds into Nvidia’s R&D efforts to advance existing, and introduce new, AI Workflows, Frameworks and Pretrained Models. As these offerings become more valuable, even more businesses are encouraged to use NVIDIA AI Enterprise, as well as NVIDIA AI Foundations, creating a self-reinforcing network effect.

What if partners start competing with Nvidia more contentiously?

While the deep partnerships are certainly a great move to sustain utilization of Nvidia’s technology in the cloud industry, there is of course the risk of these cloud providers building their own AI-centric solutions and encouraging clients to migrate completely to their in-house solutions, away from the DGX Cloud platform.

Surprisingly, CEO Jensen Huang mentioned on the call that it would not be a problem if DGX Cloud customers shifted towards CSPs’ cloud platforms:

“And for the customers, the way that NVIDIA’s cloud works for these early applications, they can do it anywhere. So one standard stack runs in all the clouds and if they would like to take their software and run it on the CSPs cloud themselves and manage it themselves, we’re delighted by that, because NVIDIA AI Enterprise, NVIDIA AI Foundations. And long-term, this is going to take a little longer, but NVIDIA Omniverse will run in the CSPs clouds.”

There are two ways to read this.

1. Jensen Huang is supportive of customers moving software to CSPs’ cloud, as long as they continue to use NVIDIA AI Enterprise and NVIDIA AI Foundations, keeping them engaged with Nvidia’s AI platform. More importantly, Nvidia’s priority is to continue selling its data center hardware to cloud customers for use in their own first-party workloads, as opposed to selling hardware that Nvidia then leases back to support DGX Cloud workloads.

In fact, on the earnings call, when an analyst asked about the split in data center sales between cloud customers buying for their first-party workloads and those buying to lease to Nvidia to support DGX Cloud, Huang said:

“without being too specific about numbers, but the ideal scenario, the ideal mix is something like 10% NVIDIA DGX Cloud and 90% the CSPs clouds”

This would make sense, as Nvidia would ideally want CSPs’ data center workloads to continue to be powered by Nvidia’s chips. And if cloud customers, particularly those shifting from DGX Cloud to CSPs’ clouds, continue to use NVIDIA AI Enterprise and NVIDIA AI Foundations thanks to the strong network effects discussed earlier, it could give Nvidia the opportunity to sustain CSPs’ demand for its hardware products as well, since NVIDIA AI Enterprise and NVIDIA AI Foundations are optimized for Nvidia’s GPUs rather than CSPs’ in-house chips.

That being said, this may not necessarily stop CSPs from developing cloud services powered by their own chips, as well as producing software services of their own that compete with NVIDIA AI Enterprise, and Jensen Huang knows this.

2. Given the early signs of success from the DGX Cloud partnerships with the cloud providers, Jensen Huang would be inclined to maintain a cordial tone with Nvidia’s partners. Even though he is well aware of the downside risk of customers shifting from DGX Cloud to CSP clouds, he would not want to jeopardize the current success of the partnerships, or the demand for Nvidia’s GPUs, by hinting at grander ambitions for DGX Cloud to compete more contentiously against its partners/customers going forward.

Despite the risk of cloud customers moving to CSPs’ services that are powered by their own chips, customers are unlikely to completely migrate away from Nvidia’s AI platform.

Firstly, even if cloud customers decide to run their software on a CSP’s cloud, it does not necessarily mean they would shift away from DGX Cloud completely. The cloud industry is increasingly seeing customers adopt multi-cloud strategies (using more than one cloud service provider simultaneously) and hybrid-cloud strategies (combining on-premises infrastructure with public cloud services). As long as the new applications provided on DGX Cloud, in partnership with the CSPs, remain valuable, customers could maintain a multi-cloud strategy that incorporates DGX Cloud into the mix. In fact, in response to the multi-cloud trend, CSPs have taken initiatives to support customers’ multi-cloud strategies (e.g., offering integration tools that facilitate seamless communication and data transfer between different cloud platforms) in order to remain part of customers’ cloud setups. Given that CSPs have been willing to support customers using a competitor’s cloud platform simultaneously, they should certainly be willing to support the use of DGX Cloud as a partner.

Secondly, even if the CSP partners begin to make moves that undermine the DGX Cloud partnership and instead encourage customers to migrate completely to their own platforms, Nvidia is well-positioned to sustain DGX Cloud independently if needed. As mentioned earlier, the ecosystem that builds around DGX Cloud over time will not only enhance its value to customers, but could also make it inconvenient for customers to migrate away from it completely. Furthermore, Nvidia holds tremendous expertise in building data centers for its customers, and is becoming increasingly proficient at it as more business comes its way. Hence, it is easily conceivable that Nvidia could build data centers at mass scale for itself with exceptional time-to-market agility.

Either way, Nvidia’s cloud endeavors are likely to keep growing from here. Whether it continues to focus on the partnership route or increasingly serves enterprises directly over time, the expansion into cloud helps sustain the value of Nvidia’s AI chips in the cloud industry, bolstered by a growing ecosystem around its chips and cloud services.

However, if Nvidia more aggressively pursues serving enterprises directly through DGX Cloud in response to leading cloud providers shunning Nvidia’s products for their own, it would entail some risks:

Hurting demand for chips from non-competitors: If Nvidia more evidently strives to serve cloud customers directly through DGX Cloud, it would be seen as a competitive threat by all cloud providers, even those that do not intend to produce their own chips. This could discourage them from buying Nvidia’s chips and push them to buy from a competitor instead.

Nonetheless, Jensen Huang is wisely striving to partner with various CSPs as tightly as possible to create new services together, mitigating the risk of DGX Cloud being seen as a threat and thereby sustaining demand for Nvidia’s hardware and software solutions.

Success is not guaranteed: Nvidia may not necessarily be able to replicate the success it has had in chip design in its cloud endeavors. Moreover, incumbents have more experience serving end-customers, along with deeper, more established relationships with them, and will leverage these advantages as Nvidia strives to become a bigger player in the cloud industry.

That being said, while Nvidia may not have as much experience serving end-customers directly, it is not completely unknown territory for the tech giant, given its years of experience serving the cloud industry, including working alongside cloud providers to help them optimize the performance of their services. Nvidia could use this accumulated knowledge to build its own cloud business and become a formidable competitor to the incumbents.

Furthermore, offering DGX Cloud and NVIDIA AI Enterprise through the leading cloud providers currently allows Nvidia to build a consequential ecosystem and network effect around its services, allowing Nvidia to learn more and more about its end-customers, and enabling it to challenge incumbents more meaningfully.

Profit margin uncertainty: Expanding further into the cloud industry would inevitably impact Nvidia’s profitability, and not just due to increased capital expenditure requirements. Even if Nvidia successfully expands into offering a competitive cloud service, its pricing power in the cloud industry is likely to differ from the pricing power it currently enjoys in the chip industry, given that it would be competing against well-established cloud players. If, in the future, an increasing proportion of its revenue is derived from selling cloud services to enterprises rather than selling chips to CSPs, its profit margins could contract, undermining Nvidia stock’s ability to sustain its expensive forward earnings multiple.
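The mix-shift effect described above can be illustrated with a revenue-weighted average margin. In the minimal Python sketch below, the 30% chip margin and 15% cloud-services margin are hypothetical placeholders chosen only to show the mechanics; they are not Nvidia’s reported or projected segment margins, and the function name is likewise hypothetical.

```python
from typing import Iterable, Tuple


def blended_margin(segments: Iterable[Tuple[float, float]]) -> float:
    """Revenue-weighted average margin across business segments.

    Each segment is a (revenue, margin) pair, with margin as a fraction.
    """
    segments = list(segments)
    total_revenue = sum(revenue for revenue, _ in segments)
    if total_revenue <= 0:
        raise ValueError("total revenue must be positive")
    total_profit = sum(revenue * margin for revenue, margin in segments)
    return total_profit / total_revenue


if __name__ == "__main__":
    CHIP_MARGIN = 0.30   # hypothetical placeholder
    CLOUD_MARGIN = 0.15  # hypothetical placeholder
    # Revenue split between chips and cloud services, per 100 units of revenue
    for chips, cloud in [(95, 5), (70, 30)]:
        margin = blended_margin([(chips, CHIP_MARGIN), (cloud, CLOUD_MARGIN)])
        print(f"{chips}/{cloud} chips/cloud mix -> blended margin {margin:.2%}")
```

Under these placeholder assumptions, moving from a 95/5 to a 70/30 chips-to-cloud revenue mix lowers the blended margin from 29.25% to 25.5%, even though neither segment’s own margin changes.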

That said, the actual impact on profitability would of course depend on how efficiently Nvidia is able to manage cloud operations. This is, after all, a tech giant that has mastered the design of data centers following years of experience serving cloud customers, and hence is strongly positioned to run cloud operations proficiently and competitively.

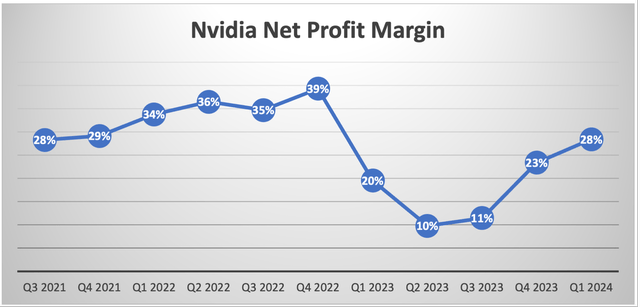

For now, though, the tech giant enjoys a monopoly-like position in the AI chip space. Following the slump in FY2023, net margins have expanded over the last two quarters and currently stand at 28%. The spike in demand for its Data Center AI chips amid the AI race, combined with strong pricing power given its wide lead in AI innovation, allows Nvidia to enjoy solid profit generation for the time being, positioning it well to reinvest its gains into expanding its cloud endeavors.

Is Nvidia stock a buy?

While the market is focused on the sustainability of Nvidia Corporation’s leadership in AI chips amid rising competitive threats, investors should pay closer attention to how Nvidia exploits the power of DGX Cloud to secure its presence in the cloud industry. With DGX Cloud helping to sustain Nvidia’s AI growth story, Nvidia Corporation stock is a buy.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.