Summary:

- Nvidia Corporation’s powerful GPUs and co-design approach have positioned the company as a dominant player in data center acceleration, handling AI workloads in both training and inference.

- The company’s comprehensive software ecosystem, including the CUDA platform, offers a structural competitive advantage and has the potential to become an AI operating system.

- Despite strong growth prospects, investors should consider risks associated with competition, market dynamics, and regulatory challenges.

Laurence Dutton

Introduction

NVIDIA Corporation (NASDAQ:NVDA) has emerged as a key player in the data center market, leveraging its powerful and versatile GPUs (Graphics Processing Units) to accelerate a wide range of computational workloads. With its relentless focus on hardware-software co-design and a full-stack approach, Nvidia has created a heterogeneous-compute system that is perfectly positioned to ride the current inflection in the AI cycle. This article explores Nvidia’s growing dominance in data center acceleration, its unique software ecosystem, the underappreciated potential for inference workloads, and its role as a potential AI Operating System.

Nvidia GPUs: Driving Data Center Acceleration

Nvidia GPUs are not just powerful gaming processors; they have evolved into general-purpose accelerators capable of handling complex computational tasks. Unlike traditional CPUs, which prioritize single-thread performance, GPUs use a parallel execution model called single-instruction, multiple-thread (SIMT), in which many threads execute the same instruction on different data at the same time, to maximize throughput. This design lets GPUs accelerate workloads such as rendering graphics, training large language models, and executing AI algorithms, delivering large performance gains on tasks that can be parallelized.
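To make the SIMT idea concrete, here is a minimal Python sketch. It is a toy model rather than real GPU code: the function name `simt_execute` and the two-step `program` are hypothetical, and the list comprehension only stands in for lanes that real hardware would run in parallel. The one fixed fact is the warp size of 32 threads used on Nvidia GPUs.

```python
# Toy model of SIMT: one instruction stream, applied to many threads in lock-step.
# This is plain Python for illustration; it does not run on a GPU.

WARP_SIZE = 32  # number of threads in a warp on Nvidia GPUs


def simt_execute(program, data):
    """Apply each instruction to every lane before moving to the next one.

    `program` is a list of single-argument functions (hypothetical
    "instructions"); `data` holds one input value per thread.
    """
    lanes = list(data)
    for instruction in program:
        # On real hardware all lanes execute this instruction simultaneously;
        # here a list comprehension stands in for that parallel step.
        lanes = [instruction(value) for value in lanes]
    return lanes


# Every thread runs the same two instructions on its own data element.
program = [lambda x: x * 2, lambda x: x + 1]
result = simt_execute(program, range(WARP_SIZE))
```

Each thread ends up with `2 * i + 1` for its own index `i`. The point of the sketch is that throughput scales with the number of lanes, not with how fast any single lane runs.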

Over the years, Nvidia has consistently pushed the boundaries of GPU performance. In line with Moore's law, Gordon Moore's empirical observation that transistor counts double roughly every two years, Nvidia's GPUs kept to that cadence until recently. Additionally, advancements in chip architectures and the introduction of features like tensor cores and transformer engines have boosted the throughput and efficiency of Nvidia's GPUs beyond what transistor scaling alone would predict.

Accelerating AI With Nvidia: From ImageNet To Language Models

Nvidia made a significant breakthrough in 2012, when a team from the University of Toronto, using Nvidia GPUs, won the ImageNet computer vision contest. This victory showcased the programmability and high floating-point performance of Nvidia's GPUs, attracting attention from the AI community. Since then, Nvidia has developed more general-purpose GPUs and improved chip architectures, helping shorten the doubling time of compute used for AI to roughly 3.4 months.
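To put the 3.4-month doubling time in perspective, the short sketch below converts a doubling period into a yearly growth multiple and compares it with the two-year cadence of Moore's law. The helper name `growth_factor` is hypothetical; the only inputs are the two doubling periods cited in the article.

```python
def growth_factor(months_elapsed, doubling_months):
    """Multiple by which a quantity grows if it doubles every `doubling_months`."""
    return 2 ** (months_elapsed / doubling_months)


# Growth over one year under each doubling period.
moore_per_year = growth_factor(12, 24)      # two-year doubling
ai_compute_per_year = growth_factor(12, 3.4)  # 3.4-month doubling

print(f"Two-year doubling:  {moore_per_year:.2f}x per year")
print(f"3.4-month doubling: {ai_compute_per_year:.1f}x per year")
```

Under these assumptions, a two-year doubling works out to about 1.4x per year, while a 3.4-month doubling compounds to more than 11x per year.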

As AI applications expanded beyond computer vision, Nvidia GPUs found widespread adoption in various domains. From recommender systems to social network feeds, Nvidia scaled its market share in data center processor revenues to over 30% within a decade. The rapid growth of large language models (LLMs) further propelled Nvidia's dominance, with its GPUs serving as the computing workhorse on which LLMs have grown exponentially.

The Importance Of Software In Nvidia’s Solution

Nvidia’s success extends beyond chip manufacturing. The company has taken a unique co-design approach by integrating hardware and software to create a comprehensive solution for accelerated compute. Nvidia’s CUDA platform, a unified architecture layer across all generations of GPUs, provides developers with end-to-end workflow solutions. It offers high-level programming and extensive libraries for linear algebra and machine learning, making it easier for developers to harness the power of Nvidia GPUs.

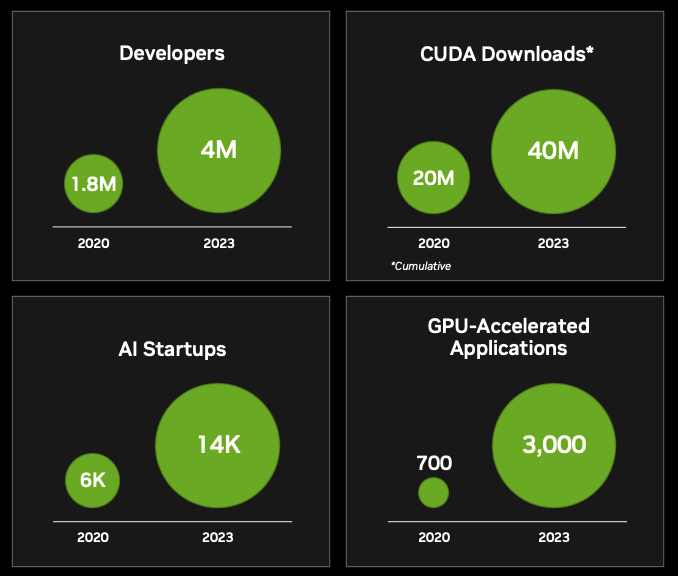

The software ecosystem built around CUDA, including custom accelerated libraries, frameworks, and software development kits (SDKs), forms a structural competitive advantage for Nvidia. With over 4 million developers in its robust ecosystem, Nvidia has established itself as a trusted provider of software solutions for managing AI workflows. This advantage contributes to the performance and average selling price (ASP) uplift of Nvidia’s GPUs at each new architecture release.

The Underappreciated Potential Of Inference Workloads

While Nvidia’s dominance in training AI models is well-known, its value proposition for inference workloads is often underestimated. Inference, the real-time execution of AI models, is a critical aspect of AI applications. As the market for inference continues to grow, driven by low-latency requirements and increasing adoption, Nvidia’s GPUs offer superior price-performance compared to CPUs.

Currently, a significant portion of inference workloads is still performed on CPUs. However, as large language models and multi-domain use cases gain prominence, the computational demands of inference are rapidly increasing. Nvidia’s GPUs, with their ability to handle large-scale parallelism, provide a compelling solution for accelerating inference workloads, offering significant performance advantages over CPUs. As more enterprises realize the importance of efficient and scalable inference, Nvidia is well-positioned to capture a substantial market share in this segment.

Nvidia As An AI Operating System

Looking ahead, Nvidia has the potential to play a central role as an operating system layer for the AI stack. By providing a software platform that abstracts the underlying hardware complexities, Nvidia could create an ecosystem that simplifies the development, deployment, and management of AI applications. Similar to how Microsoft Windows and Apple macOS serve as the foundations for application development, Nvidia’s software platform could become the standard for AI-focused development, enabling seamless integration of AI technologies into various industries.

AI Growth (Nvidia Investor Relations)

Valuation And Risks

Nvidia’s data center revenues have experienced significant growth, with the segment accounting for over 50% of its total revenues. While street models forecast a healthy Compound Annual Growth Rate (CAGR) for data center revenues, factors such as the growing hyperscaler capital expenditure and increasing importance of AI initiatives among major players could drive even higher growth rates. A base case projection suggests strong earnings power, leading to a positive outlook for Nvidia’s stock.

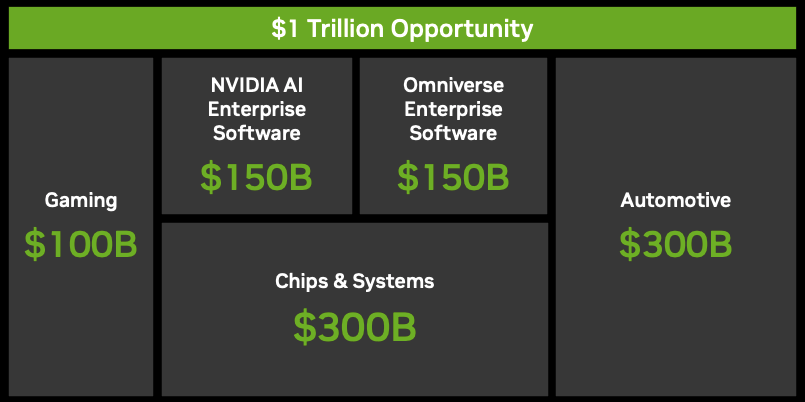

My base case EPS 5-yr CAGR comes to over 35% under this framework, reaching earnings power in the range of $14.5-$16.5 per share. Assuming an exit NTM P/E of 35x, shares could be worth ~$550. If NVDA's software solutions are monetized over the next five years to even a low single-digit percentage of the AI enterprise TAM (50M servers at a $3K fee/year) presented at GTC 2022, there could be additional upside.
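The arithmetic behind these figures can be sketched in a few lines of Python. The EPS range, exit multiple, server count, and per-server fee come from the paragraph above; the 1% to 3% range used for "low single-digit percentage" is an assumption made here for illustration, and the function and variable names are hypothetical.

```python
EXIT_PE = 35.0              # exit NTM P/E from the base case
EPS_RANGE = (14.5, 16.5)    # base case earnings power per share, in dollars

SERVERS = 50_000_000        # AI enterprise TAM: number of servers
FEE_PER_SERVER = 3_000      # annual software fee per server, in dollars
PENETRATION = (0.01, 0.03)  # ASSUMPTION: "low single-digit" read as 1% to 3%


def implied_price(eps, pe_multiple):
    """Share price implied by an EPS figure and a P/E multiple."""
    return eps * pe_multiple


price_low = implied_price(EPS_RANGE[0], EXIT_PE)
price_high = implied_price(EPS_RANGE[1], EXIT_PE)
price_mid = implied_price(sum(EPS_RANGE) / 2, EXIT_PE)

tam = SERVERS * FEE_PER_SERVER
software_revenue = tuple(tam * share for share in PENETRATION)

print(f"Implied price range: ${price_low:,.1f} to ${price_high:,.1f}")
print(f"Midpoint: ${price_mid:,.1f}")
print(f"Software TAM: ${tam / 1e9:,.0f}B per year")
print(f"Revenue at 1%-3% penetration: "
      f"${software_revenue[0] / 1e9:.1f}B to ${software_revenue[1] / 1e9:.1f}B")
```

The midpoint of the EPS range gives about $542 per share, consistent with the ~$550 estimate, and the stated TAM works out to $150B per year before any penetration assumption is applied.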

Opportunity size for Nvidia (Nvidia Investor Relations)

However, it is important to consider the risks associated with Nvidia’s business. Competition from other players in the accelerated compute market, potential regulatory challenges, and shifts in market demand for GPUs and accelerators could impact Nvidia’s growth trajectory. Additionally, uncertainties regarding hyperscaler spending patterns and the emergence of disruptive technologies pose risks that investors should monitor closely.

Conclusion

Nvidia’s dominant position in data center acceleration, driven by its powerful GPUs and unique co-design approach, sets the company up for continued growth. With its comprehensive software ecosystem, Nvidia is well-equipped to handle the demands of AI workloads, both in training and inference. The potential for Nvidia to become an AI operating system further underscores its growth prospects. However, investors should remain vigilant and assess the risks associated with competition, market dynamics, and regulatory challenges. With its technology and ecosystem, Nvidia Corporation is poised to shape the future of accelerated compute and AI.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.