Summary:

- Investors were disappointed that Google’s advertising revenue didn’t grow faster. However, a big positive from the earnings report was the notable growth in Google Cloud’s profitability.

- In a previous article, we discussed how Google Cloud’s profitability is only getting started, as the use of its own chips allows for deep integration between its customized TPUs and cloud services.

- In this article, we dive into another powerful strategy Google is using to continue delivering profitable growth.

- We simplify the jargon and complexities of Google Cloud and its AI initiatives so that investors can more easily understand the future growth prospects and make better-informed investment decisions.

- We maintain a ‘buy’ rating on Google stock.


Google (NASDAQ:GOOG, NASDAQ:GOOGL) announced Q4 2023 earnings last week, with investors keen to see how the generative AI revolution is affecting various parts of the business. Advertising remains the tech giant’s biggest source of revenue and profit, and hence a key focus for investors. While ad revenue came in line with market expectations, investors were underwhelmed by the growth rate, especially given Meta’s relatively stronger quarter. The stock fell after the earnings release, as Google has yet to prove that it can successfully pivot its flagship search ad business and remain highly profitable in the era of generative AI. However, amid all the focus on Google’s advertising business, the market seemed to overlook the very encouraging performance of Google Cloud, where savvy strategies to boost profitability are paying off. We maintain a ‘buy’ rating on Google stock.

In a previous article, we discussed how Google Cloud’s profitability is only getting started, as the use of its own chips allowed for deep integration between its customized TPUs and cloud services, yielding cost benefits that strengthen profitability.

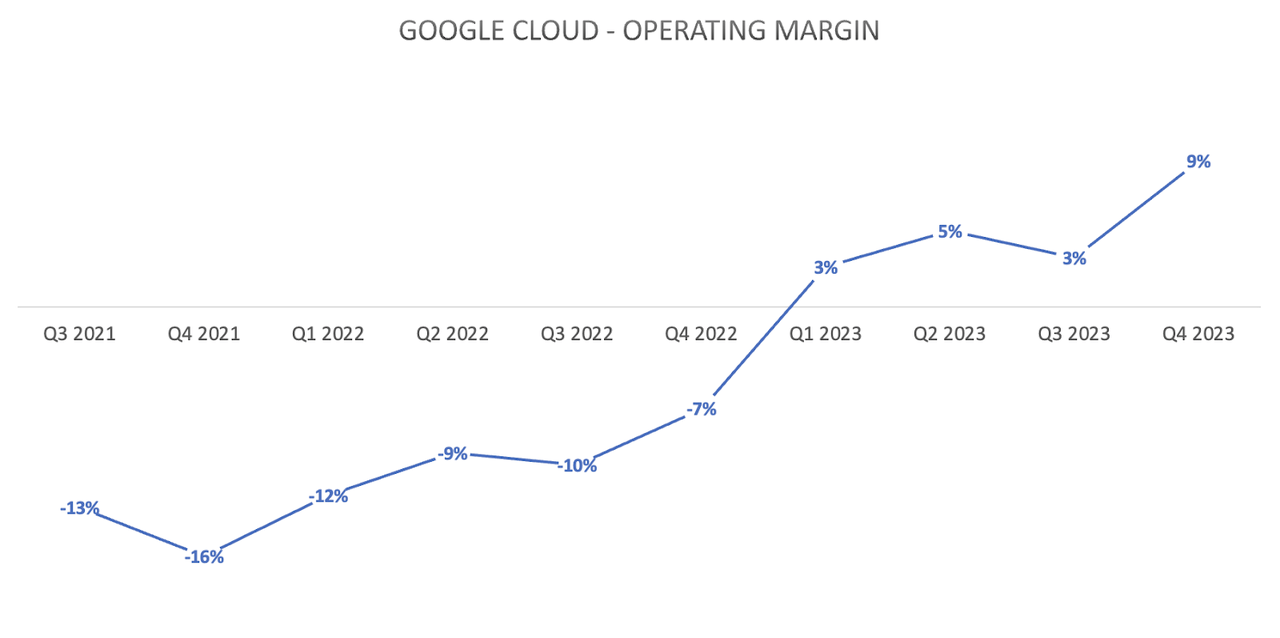

The operating margin for Google Cloud jumped from 3.16% in Q3 to 9.40% in Q4 2023, a whopping expansion of more than 6 percentage points.

Data from company filings, Nexus

During Google’s Q4 2023 earnings call, the executives repeatedly mentioned that they continue to durably reengineer their cost base to drive long-term cost efficiencies. While they mentioned some top-level initiatives they are taking across the organization to optimize efficiencies, no specific insights into Google Cloud were shared.

However, in 2023 Google did reveal on its website how one of its most recent LLMs, PaLM 2, was built using a new technique called “compute-optimal scaling”, explaining:

“The basic idea of compute-optimal scaling is to scale the model size and the training dataset size in proportion to each other. This new technique makes PaLM 2 smaller than PaLM, but more efficient with overall better performance, including faster inference, fewer parameters to serve, and a lower serving cost.”

All these technical terms may be overwhelming, so let’s simplify them with some context to better understand the long-term advantages of Google’s approach to AI innovation.

To do so, we must first understand what parameters are.

AI models learn from data. Parameters are like little dials inside the model that get adjusted during training. As the model works through its training data, it learns which patterns are important and adjusts its parameters accordingly; once trained, it uses those parameter settings to make predictions and serve users’ queries when given new data.

For instance, many of an LLM’s parameters end up encoding “contextual relationships”, that is, how the meaning of a word shifts with the words around it. The extensive dataset used to train PaLM includes wide-ranging examples of how words are used in different contexts. The model looks for patterns in that data and tunes these parameters so that it can distinguish between contexts and respond to nuances in language depending on the situation.

During the training phase, the model fine-tunes its internal workings by analyzing its errors: it compares its outputs with the expected answers and adjusts its parameters to shrink the gap, steadily improving accuracy over time.
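To make the “dials” idea concrete, here is a minimal Python sketch of a model with just one parameter. It assumes the simplest possible setup, a rule `y = w * x` fitted by gradient descent on squared error; the function `train_one_dial` and the toy data are hypothetical, and real LLMs adjust billions of parameters with far more elaborate machinery.

```python
def train_one_dial(xs, ys, lr=0.01, steps=500):
    """Fit y ≈ w * x by repeatedly nudging the single parameter w to reduce error."""
    w = 0.0  # the "dial" starts at an arbitrary setting
    n = len(xs)
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w:
        # how much the error changes as the dial is turned
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad  # turn the dial slightly in the direction that lowers the error
    return w


# Hypothetical training data generated by the rule y = 3x
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]
w = train_one_dial(xs, ys)
print(round(w, 3))  # prints 3.0
```

Each step measures how wrong the current setting is and turns the dial a little in the direction that reduces the error, so `w` settles on 3, the value that generated the data.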

It is commonly believed that more parameters make a model more versatile, and this is true up to a point.

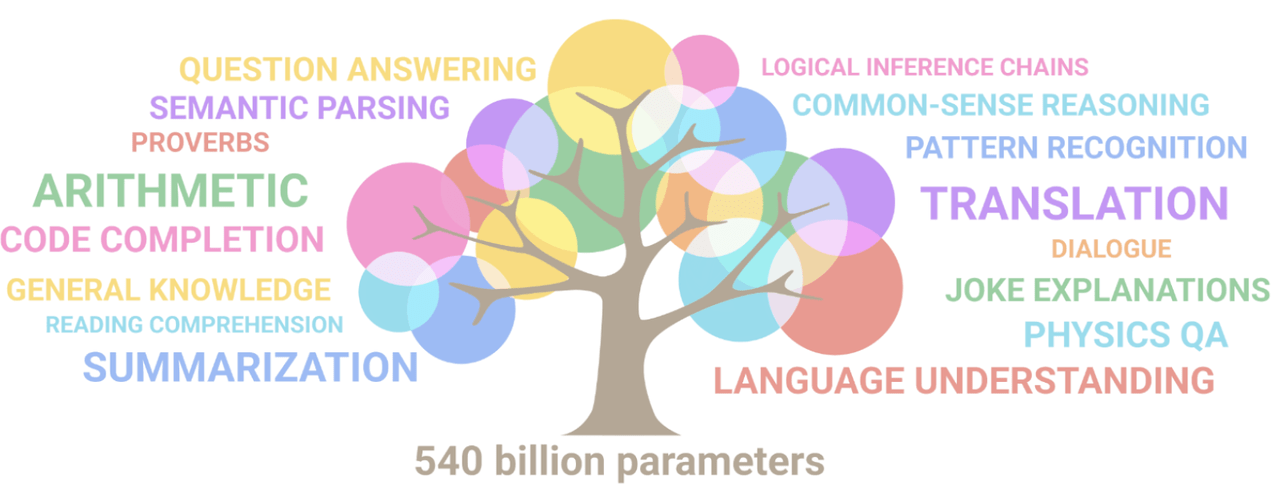

Google’s original PaLM model included 540 billion parameters.

One advantage of more parameters is greater modeling capacity: a generative AI model with more parameters can capture and reproduce more complex patterns and structures in the data, allowing for more intricate and realistic outputs.

The whole point of generative AI is to create new content. So the more parameters a model has, the more varied its outputs can be, extending the applicability of the model to many more use cases.

However, more parameters also require more computing power and longer training times. This means higher operating expenses, which undermine profitability for shareholders.

Furthermore, an increased number of model parameters can exacerbate the risk of overfitting, particularly in scenarios with constrained training data. Overfitting happens when a model learns the specific details of the training data too well, while performing poorly on new, unseen data.
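Overfitting is easy to reproduce on a toy problem. The sketch below, with hypothetical data and helper names, fits six noisy points drawn from the rule y = 2x + 1 in two ways: a 2-parameter straight line, and a 6-parameter polynomial (built by Lagrange interpolation) that passes exactly through every training point. It illustrates the general idea only; it is not how LLMs are trained.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x: a 2-parameter model."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx

    def predict(x):
        return a + b * x

    return predict


def fit_interpolating_poly(xs, ys):
    """Polynomial of degree len(xs) - 1 through every training point: one parameter per point."""

    def predict(x):
        total = 0.0
        for j, (xj, yj) in enumerate(zip(xs, ys)):
            basis = 1.0  # Lagrange basis polynomial: 1 at xj, 0 at every other training x
            for m, xm in enumerate(xs):
                if m != j:
                    basis *= (x - xm) / (xj - xm)
            total += yj * basis
        return total

    return predict


def mse(predict, xs, ys):
    """Mean squared error of a model on a dataset."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data: the true rule is y = 2x + 1, but training labels carry +/-0.5 noise
train_x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
noise = [0.5, -0.5, 0.5, -0.5, 0.5, -0.5]
train_y = [2 * x + 1 + e for x, e in zip(train_x, noise)]
# Unseen points between the training points, labelled with the true rule
test_x = [0.5, 1.5, 2.5, 3.5, 4.5]
test_y = [2 * x + 1 for x in test_x]

line = fit_line(train_x, train_y)
poly = fit_interpolating_poly(train_x, train_y)

for name, model in [("2-parameter line", line), ("6-parameter polynomial", poly)]:
    train_err = mse(model, train_x, train_y)
    test_err = mse(model, test_x, test_y)
    print(f"{name}: train MSE {train_err:.3f}, test MSE {test_err:.3f}")
```

The 6-parameter model scores zero training error because it memorizes the noise, yet its error on the unseen in-between points is far worse than the simple line’s: overfitting in miniature.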

Now, Google is of course not constrained by limited data: it has unmatched access to large training datasets through the top two search engines in the world (Google and YouTube), as well as various other digital services, which reduces the likelihood of overfitting. Nonetheless, focusing solely on model size can be misleading when evaluating performance.

Returning to Google’s approach to building PaLM 2, scaling “the model size and the training dataset size in proportion to each other” essentially guards against issues like overfitting. Keeping the number of parameters balanced against the amount of training data allows for improved performance and faster inference, enhancing PaLM 2’s competitiveness against LLMs from other organizations.
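Google has not published the exact recipe behind PaLM 2, so the sketch below borrows two commonly used approximations from the research literature as assumptions: training compute C ≈ 6 × N × D floating-point operations (N parameters, D training tokens), and a rule of thumb from DeepMind’s 2022 “Chinchilla” scaling study of roughly 20 training tokens per parameter at the compute-optimal point. Neither figure is disclosed for PaLM 2, and the compute budgets are hypothetical.

```python
import math

FLOPS_PER_PARAM_TOKEN = 6  # assumption: C ≈ 6·N·D training FLOPs
TOKENS_PER_PARAM = 20      # assumption: Chinchilla-style rule of thumb, not a PaLM 2 figure


def compute_optimal_split(compute_flops):
    """Split a training budget into model size N and dataset size D, keeping D = 20·N."""
    # C = 6·N·D and D = 20·N  =>  C = 120·N²  =>  N = sqrt(C / 120)
    n_params = math.sqrt(compute_flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    n_tokens = TOKENS_PER_PARAM * n_params
    return n_params, n_tokens


for budget in (1e23, 4e23, 1.6e24):  # hypothetical budgets, each 4x the previous
    n, d = compute_optimal_split(budget)
    print(f"C={budget:.1e} FLOPs -> N ≈ {n / 1e9:.1f}B parameters, D ≈ {d / 1e9:.0f}B tokens")
```

Under these assumptions, each fourfold increase in compute doubles both the parameter count and the token count, so neither runs ahead of the other; spending the same budget on a much larger model would leave it with proportionally less data per parameter.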

From a shareholder’s perspective, the goal is to encourage more and more cloud customers to use Google’s own AI models, like PaLM 2, as the foundation models for building their own AI services. This is done through Google Cloud’s Vertex AI platform, which offers access to both Google’s own models and open-source models from third parties.

While the company has not disclosed how many cloud customers have chosen to use Google’s models, CEO Sundar Pichai did reveal on the Q4 2023 Google earnings call that:

“Vertex AI has seen strong adoption with the API request increasing nearly 6x from H1 to H2 last year.”

Another key advantage of “compute-optimal scaling” that Google highlighted was “fewer parameters to serve, and a lower serving cost”. Indeed, PaLM 2 was built with fewer parameters than the original PaLM model, although Google has not specified exactly how many.

Fewer parameters mean less computation each time the model processes data, which can reduce the computing power required for training and shorten training time. More importantly, on a forward-looking basis, it lowers the cost of serving these models to cloud customers. Google could leverage this cost advantage in two ways.

It could either pass the savings on to businesses to be more price-competitive and attract more customers, or retain them to expand profit margins for shareholders.
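The trade-off between these two options comes down to simple margin arithmetic. The sketch below uses purely hypothetical unit economics (a $100 service that costs $90 to deliver, with $5 of cost savings); none of these figures come from Google.

```python
def operating_margin(price, cost):
    """Operating margin as a fraction of revenue."""
    return (price - cost) / price


price, cost, savings = 100.0, 90.0, 5.0  # hypothetical unit economics

baseline = operating_margin(price, cost)
# Option 1: absorb the savings and keep the price unchanged
absorb = operating_margin(price, cost - savings)
# Option 2: pass the savings on by cutting the price by the same amount
pass_on = operating_margin(price - savings, cost - savings)

print(f"baseline {baseline:.1%}, absorb {absorb:.1%}, pass on {pass_on:.1%}")
```

Absorbing the savings lifts the margin from 10% to 15%. Passing them on keeps profit per unit at $10, so the margin stays roughly flat (about 10.5%), and the payoff must instead come from the extra customers a lower price attracts.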

While Google has not explicitly said that its latest model, Gemini, was also built using the “compute-optimal scaling” technique, it seems reasonable to assume the company used similar strategies to optimize cost efficiency in the interest of long-term profitability.

The overarching point is that Google’s AI development emphasizes cost-efficient approaches that allow for sustainable profit margin expansion. Strategies like “compute-optimal scaling” are helping Google Cloud expand its operating margin, which reached 9.40% in Q4 2023.

Financial Performance & Risk Factors

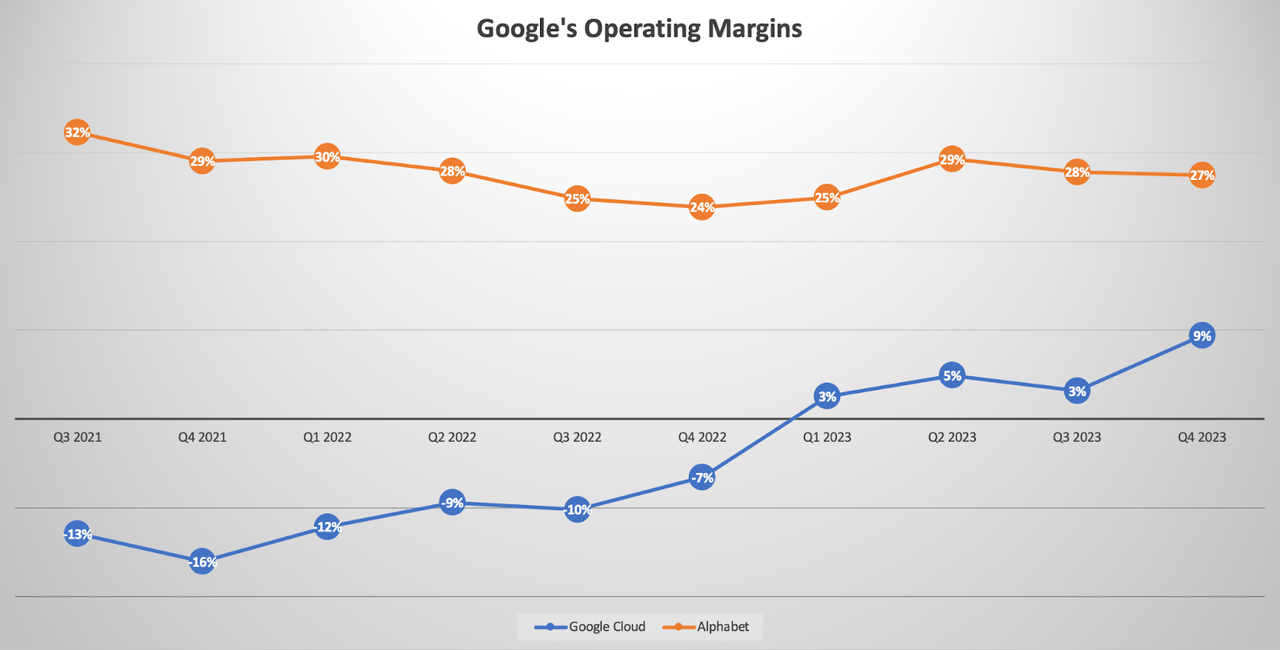

While Google Cloud has been delivering operating profit margin expansion, the company-wide operating margin has not improved over the past few years.

Data from company filings, Nexus

Relative to its two main cloud rivals, Microsoft Azure and Amazon’s AWS, Google Cloud remains the least profitable. In addition, its core Search advertising business is perceived to be the most at risk of disruption from the generative AI revolution, compared with Amazon’s e-commerce business and Microsoft’s ‘Productivity and Business Processes’ segment, which includes sales of the Microsoft 365 suite of productivity apps like Word and Excel.

In fact, Microsoft’s ‘Productivity and Business Processes’ segment is already highly profitable, with a 53.43% operating margin in Q4 2023, and this is before the full impact of the rollout of its AI assistant, Copilot.

On the basis of Levered Free Cash Flow Margin, which measures how much cash a company has left over (after paying all its financial obligations) relative to total sales revenue, Microsoft boasts a 25.78% margin, while Google’s margin stands at 19.08%.

This signifies a competitive risk as Microsoft is in a financially stronger position to continue investing heavily in AI infrastructure to support Azure growth.

Google has yet to prove to Wall Street that its digital advertising segment can remain a highly profitable business in the era of generative AI. For years, the advertising unit has been an incredible source of profits and cash flow, which Google was able to invest in its cloud unit.

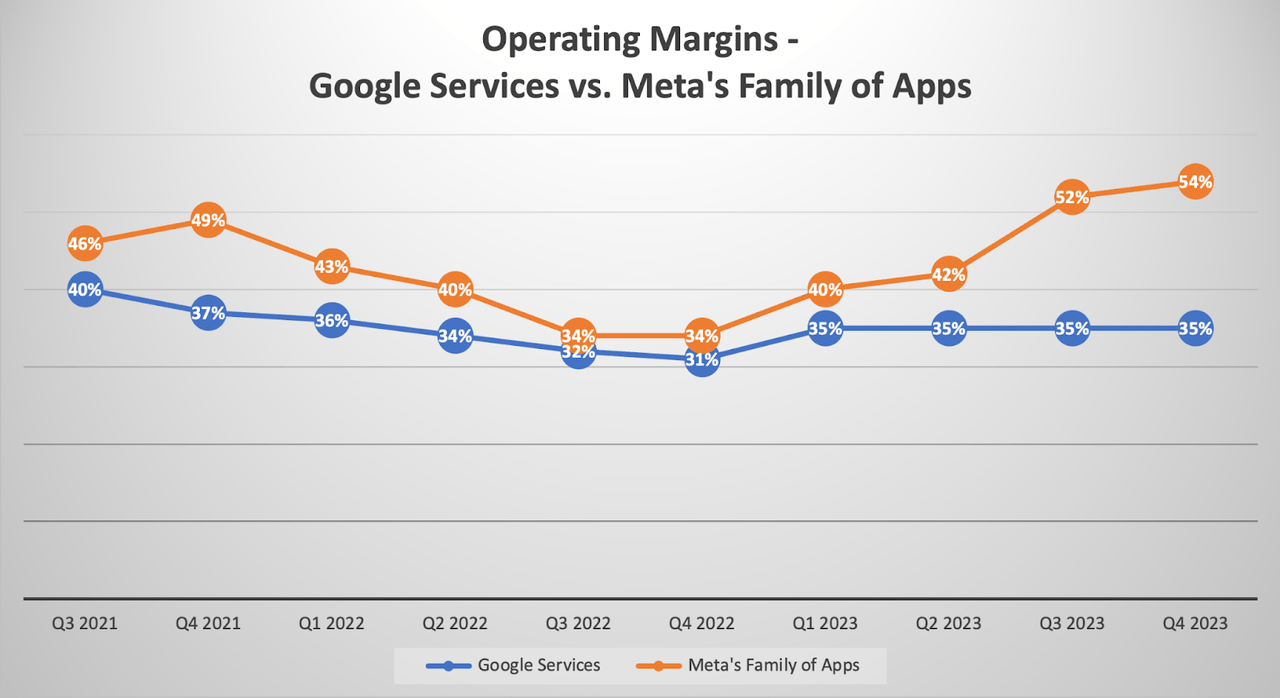

The Google Services segment, which mainly comprises digital advertising revenue, remains comfortably profitable, with an operating margin of 35.03% in Q4 2023. However, top-line growth was underwhelming last quarter at 12.49% year-over-year; Wall Street wanted to see revenue grow faster than expenses so that margins would expand in the flagship business unit.

Meanwhile, rival Meta Platforms’ ‘Family of Apps’ segment, which derives the majority of its revenue from digital advertising, grew at a much faster 24.18% year-over-year in Q4 2023. It has also delivered stronger profit margin expansion over the past year, making it more profitable than Alphabet’s ‘Google Services’ segment.

Data from company filings, Nexus

The market also perceives Meta as able to roll out generative AI-powered features faster and more aggressively than Google.

Alphabet has been cautious in rolling out new services, for example by keeping Search Generative Experience in test mode, as it wants to avoid cannibalizing its lucrative Search ads business.

There is indeed a risk that rising competitors introduce new forms of search experience at a faster pace to eat into Google’s dominant market share in Search and other digital services. That could force Google to transform its platform with generative AI capabilities more swiftly, before it is ready to monetize them at the level at which it currently monetizes the traditional Search engine. That said, as we covered in a previous article, Google is showing promising signs of being able to monetize conversational search in this new era.

Additionally, while we discussed the advantages of Google’s “compute-optimal scaling” technique for the cloud segment in particular, benefits like greater cost efficiency also enable Google to offer its own generative AI-powered Search services more cost-effectively, widening profit margins over the long term. In fact, the faster inference associated with smaller models (those with fewer parameters) also lets the tech giant innovate and adapt its services with more agility to stay competitive as new consumer trends emerge and evolve.
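The link between fewer parameters and cheaper, faster inference can be sketched with a common rule of thumb: generating one token with a dense transformer takes roughly 2 × N floating-point operations, where N is the parameter count. The example compares PaLM’s disclosed 540 billion parameters with a hypothetical 100-billion-parameter model; PaLM 2’s real size is undisclosed, and actual serving costs also depend on hardware, batching and memory bandwidth.

```python
PALM_PARAMS = 540e9           # original PaLM, as disclosed by Google
SMALLER_MODEL_PARAMS = 100e9  # hypothetical; PaLM 2's parameter count is not public


def flops_per_token(n_params):
    """Rule-of-thumb inference compute for one generated token of a dense model (≈ 2·N)."""
    return 2 * n_params


big = flops_per_token(PALM_PARAMS)
small = flops_per_token(SMALLER_MODEL_PARAMS)
print(f"540B model: {big:.2e} FLOPs per token")
print(f"100B model: {small:.2e} FLOPs per token")
print(f"Compute saved per token: {1 - small / big:.0%}")
```

Under this approximation, compute per token scales linearly with parameter count, so a model with roughly a fifth of the parameters needs roughly a fifth of the compute for every token it serves.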

On the other hand, rival Meta Platforms released a blog post in June 2023 stating that:

“In order to deeply understand and model people’s preferences, our recommendation models can have tens of trillions of parameters — orders of magnitude larger than even the biggest language models used today.”

As we discussed earlier, larger models with more parameters do not necessarily deliver superior performance, and the cost of training models with trillions of parameters would indeed be enormous.
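To put “tens of trillions of parameters” in perspective, here is a rough sketch of the memory needed just to store the weights, assuming 2 bytes per parameter (a 16-bit format such as bfloat16) and taking 10 trillion as the low end of Meta’s range. Training needs more memory still for optimizer state and activations. One caveat: much of a recommendation model’s parameter count typically sits in embedding tables that are looked up sparsely, so its compute cost does not scale one-for-one with size the way a dense language model’s does.

```python
BYTES_PER_PARAM = 2  # assumption: 16-bit weights (e.g. bfloat16)


def weight_storage_tb(n_params, bytes_per_param=BYTES_PER_PARAM):
    """Terabytes needed just to hold the model weights (1 TB = 1e12 bytes)."""
    return n_params * bytes_per_param / 1e12


for label, n in [("PaLM (540B)", 540e9), ("10T-parameter model", 10e12)]:
    print(f"{label}: ~{weight_storage_tb(n):.2f} TB of weights")
```

That works out to about 20 TB of weights for a 10-trillion-parameter model versus roughly 1 TB for PaLM, before any training overhead.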

By comparison, Google is wisely designing its AI solutions for long-term cost efficiency. Hence, Google’s ability to sustain or even grow profit margins over the long term should not be underestimated, given the company’s pragmatic approach to AI innovation.

Google Stock Valuation

Now let’s consider Google stock’s valuation. GOOG currently trades at a forward PE (non-GAAP) of 21.77x, which is well below the forward PE ratios of MSFT and AMZN, which trade at 35.42x and 41.37x, respectively.

However, we prefer the forward PEG (price/earnings-to-growth) ratio for assessing a stock’s valuation, as it takes into account the expected future growth rate of a company’s earnings over a certain period.

It is calculated by dividing the forward PE ratio by the projected EPS growth rate (expressed as a whole number, so 15% growth enters as 15), and a ratio of 1 would imply a fairly valued stock. That said, highly sought-after stocks rarely trade at a PEG of 1.

Google currently carries a forward PEG of 1.33, which is lower than Amazon stock’s 1.70, and considerably below Microsoft stock’s 2.41 level.
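Because PEG is the forward PE divided by expected EPS growth, the figures above can be run backwards to see what growth rate each multiple implies. This sketch assumes the quoted PEG ratios were computed from the same forward PE ratios cited earlier (data providers can use different estimates and time horizons), so treat the results as approximate.

```python
# Forward PE (non-GAAP) and forward PEG figures quoted in this article
valuations = {
    "GOOG": {"forward_pe": 21.77, "forward_peg": 1.33},
    "MSFT": {"forward_pe": 35.42, "forward_peg": 2.41},
    "AMZN": {"forward_pe": 41.37, "forward_peg": 1.70},
}


def implied_growth_pct(forward_pe, forward_peg):
    """PEG = PE / growth (in percent), so growth = PE / PEG."""
    return forward_pe / forward_peg


for ticker, v in valuations.items():
    growth = implied_growth_pct(v["forward_pe"], v["forward_peg"])
    print(f"{ticker}: implied EPS growth ≈ {growth:.1f}% per year")
```

On these numbers, GOOG implies roughly 16% annual EPS growth, MSFT roughly 15% and AMZN roughly 24%, which is why Microsoft’s high PE combined with the lowest implied growth produces the highest PEG of the three.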

However, as discussed earlier, Microsoft seems to be in a financially stronger position than Google to invest heavily in cloud computing infrastructure to capitalize on the AI opportunity, not just because Azure is more profitable than Google Cloud, but also because its flagship ‘Productivity and Business Processes’ segment is highly profitable relative to Google’s advertising business.

Therefore, while Google stock may seem significantly cheaper than Microsoft stock at first glance, there are good reasons why the market assigns a higher valuation multiple to MSFT than to GOOG.

Nevertheless, Google’s savvy strategies, like using the “compute-optimal scaling” approach to build its AI models, certainly enhance the company’s long-term financial strength and profitability prospects.

At a forward PEG of 1.33, Google stock trades well below its 5-year average of 1.50, the multiple investors have historically been willing to pay. It also trades closest to its estimated fair value among the major cloud providers.

Therefore, Google stock remains attractively valued, and we maintain a ‘buy’ rating on the stock.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of MSFT either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.