Summary:

- Nvidia Corporation CEO Jensen Huang’s concept of “AI factories” involves using AI supercomputers to generate valuable synthetic data for applications such as self-driving car training and personalized experiences.

- Nvidia’s fourth-quarter earnings report exceeded expectations; the stock was up 16% the day after the company released its report.

- However, the company’s dominance in AI chips may be threatened in the long term as major tech companies like Microsoft, Alphabet, Meta Platforms, and Amazon are developing their own chips.


I last wrote about Nvidia Corporation (NVDA) on October 23, 2023, when I gave it a Buy recommendation because the company is among the best positioned to take advantage of the proliferation of AI. In its fourth-quarter earnings report, the company beat analysts’ revenue estimates by $1.54 billion and beat analysts’ earnings-per-share (“EPS”) forecasts by $0.69. It also forecast 233% year-over-year growth for the first quarter of fiscal year (“FY”) 2025, ahead of analysts’ consensus expectations of 208%. After looking at those results, I could see why bullish investors pushed the stock price up 16% the day after the company reported earnings. Seeking Alpha analyst Bill Gunderson gave Nvidia a strong buy with a 5-year target price of $1,563.43 on February 28, 2024.

However, not everyone is a fan of the company. Near the end of January, over a month before the company released the fourth quarter earnings report, Seeking Alpha analyst The Value Portfolio wrote that he didn’t believe the company’s expected performance during 2024 justified the stock price. He reiterated that view in another report after the fourth-quarter earnings, and he might be right.

After I reviewed the company’s potential upside and risks, the threat of rising competition, and the stock’s valuation, I concluded that potential investors should be cautious about buying the stock at current prices for several reasons. First, although analysts estimate that Nvidia holds anywhere from 80% to 90% market share for Artificial Intelligence (“AI”) chips, it is questionable how long that type of market domination will remain the status quo. Even if a solid second and third vendor fails to emerge soon, some of its more significant customers, such as tech heavyweights Microsoft (MSFT), Alphabet (GOOGL, GOOG), Meta Platforms (META), and Amazon (AMZN), do not like being beholden to one supplier and are working on building their own chips for internal use. It’s not good over the long term when your customers want to compete against you by taking production of your leading product in-house.

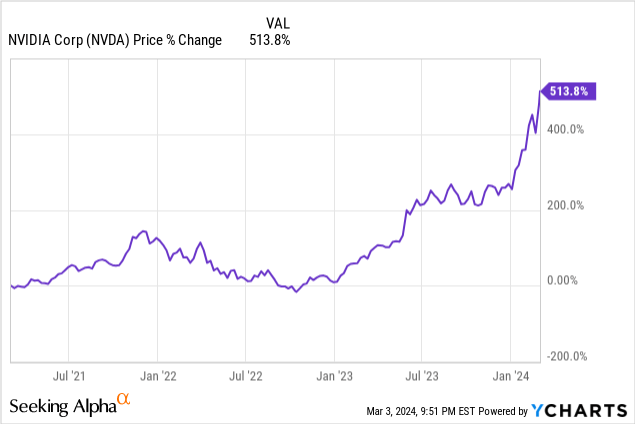

A second concern is that if you look at the price chart below, the stock is up over 500% since the fall of 2022. You must wonder how much gas is left in this rocket ship’s tank, especially when it is already the world’s third most valuable company by market cap, with a value of over $2 trillion.

I thus am downgrading Nvidia to a Hold. This article will cover the company’s upside potential, its fourth-quarter report, competitive factors, a few risks, the stock’s valuation, and why investors might want to hold off on buying it at current prices.

A whole new opportunity in enabling “AI factories”

Why give Nvidia a Hold rating and not a Sell? Nvidia’s upside might be even beyond what the most bullish investors think if the concept of AI factories spreads globally. When I first heard Nvidia Chief Executive Officer (“CEO”) Jensen Huang talk about “AI factories,” I had a hard time wrapping my head around what he meant by the term. As Huang uses it, the term “AI factories” doesn’t mean using AI on a manufacturing plant’s factory floor. He defined AI factories in the company’s fourth quarter FY 2024 conference call (emphasis added):

We now have a new type of data center that is about AI generation, an AI generation factory. And you’ve heard me describe it as AI factories. But basically, it takes raw material, which is data, it transforms it with these AI supercomputers that NVIDIA builds, and it turns them into incredibly valuable tokens [newly generated data].

Source: NVIDIA Fourth Quarter FY 2024 Earnings Call.

In the above statement, CEO Huang is talking about taking data generated in the real world and running that data through an AI supercomputer, which produces new, never-before-seen synthetic data, which Huang calls a token. If you are like me, you might wonder how these “tokens” could be valuable. Well, the tokens might be precious when used in specific applications. For instance, a company like Tesla (TSLA) or Google’s Waymo might use AI factories in the future to generate synthetic data or tokens in the form of a simulation scenario for training self-driving cars. Why would a Tesla or a Waymo use a simulation rather than real-world data?

Training a self-driving car to operate safely in the real world under different weather and lighting conditions, traffic scenarios, road conditions, and unexpected obstacles can be cost-prohibitive and take too long to reach a satisfactory result. So, Tesla and Waymo have resorted to using simulations to train their AI driver software to speed up the training. However, Waymo has discovered that simulations built with gaming engines such as Unreal or Unity have limitations and can take too much work to produce realistic camera, lidar, and radar data. Google AI researchers developed SurfelGAN several years ago to get around the limitations of gaming engine simulation software. SurfelGAN can generate realistic camera images from sensor data collected by self-driving vehicles, so while Google has not officially announced that it has an “AI factory” as defined by Nvidia, it has demonstrated its technical capability to do so with SurfelGAN.

A few other use cases for AI factories are simulations to create novel drugs and new materials, using the factory’s tokens to engage in scientific research, or even building out Meta Platforms CEO Mark Zuckerberg’s vision of the metaverse by creating worlds personalized for a specific individual’s or group’s tastes.

Some of the products of AI factories could help revolutionize society. Imagine if AI factories eventually make it possible to produce a viable quantum computer in half the time of current development, accelerate the development of fusion reactors, produce drugs that can cure cancer, or create a material that could double the efficiency of solar panels or EV car batteries. While all of the above possibilities are speculative, the use of AI factories could quickly multiply if the concept of using tokens results in tangible improvements in various disciplines and industries.

If Huang’s vision of an AI factory comes to fruition, it could increase the company’s total addressable market beyond Nvidia management’s estimates. After all, its projected AI Total Addressable Market (“TAM”) has rapidly risen past the company’s 2021 estimate of $300 billion. In 2022, the company upped its AI TAM to $1 trillion, suggesting even management may underestimate how enormous its AI opportunity could be.

If Nvidia remains a dominant company in enabling AI technology, it is hard to estimate how large it can become. If you decide to do valuation exercises on Nvidia stock, understand that despite its significant stock rise over the last two years, you could undervalue the stock if concepts like AI factories produce tangible real-world results and the idea proliferates. Part of the heavy demand for Nvidia chips comes from technologists within companies who are aware of the possible uses of generative AI technology, such as my example here of building data center AI factories.

The potential upside of Nvidia’s opportunity prevents me from slapping a sell rating on the stock today.

The company’s fourth quarter FY 2024 results

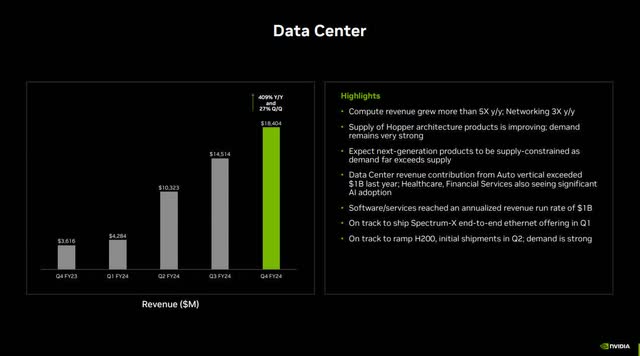

Nvidia once again produced eye-popping numbers. The company generated fourth-quarter revenue of $22.1 billion, above company guidance of $20 billion and up 265% year-over-year. Revenue for FY 2024 was $60.9 billion, up 126% from the prior year. The Data Center segment drove a good portion of this revenue growth, with fourth-quarter FY 2024 revenue growth of 409% year-over-year.

NVIDIA Fourth Quarter FY 2024 Investor Presentation
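As a quick sanity check on the growth figures above, the prior-year revenue implied by each percentage can be backed out with simple arithmetic. The sketch below uses only the revenue and growth numbers quoted in this section; the function name `prior_period` is a hypothetical helper, and the results are approximations because the inputs are rounded.

```python
def prior_period(current: float, growth_pct: float) -> float:
    """Back out the prior-period value implied by a year-over-year growth rate."""
    return current / (1 + growth_pct / 100)


# Figures quoted in the article, in billions of dollars.
q4_fy2024_revenue = 22.1   # up 265% year-over-year
fy2024_revenue = 60.9      # up 126% year-over-year

q4_prior = prior_period(q4_fy2024_revenue, 265)
fy_prior = prior_period(fy2024_revenue, 126)

print(f"Implied Q4 FY 2023 revenue: ${q4_prior:.2f} billion")
print(f"Implied FY 2023 revenue:    ${fy_prior:.2f} billion")
```

A 265% increase means the quarter's revenue is 3.65 times the year-ago figure, which is why the function divides by one plus the growth rate rather than by the growth rate itself.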

The above numbers suggest robust demand for Nvidia data center solutions. The company saw growth in both chips used for training large AI models and chips used for inferencing. If you are unfamiliar with inferencing, it means utilizing a trained AI model to answer queries and solve problems. Nvidia estimates that 40% of its Data Center revenue goes toward inferencing applications, signifying that the market is increasingly deploying trained AI models. Management believes that generative AI has triggered a new investment cycle in “AI generation factories,” representing a significant potential revenue growth opportunity for Nvidia data center solutions.

The above image also mentions improving supply of the Hopper architecture, which likely means that supply constraints are easing for the company’s first Hopper product, the H100. Nvidia’s next-generation H200 product is also part of the Hopper line. However, the company has indicated that the H200 will likely be supply-constrained initially because demand exceeds its ability to supply the market. Potential Nvidia customers are interested in the increased capabilities of the H200, which has nearly double the inference performance of the H100.

The company also sells into verticals. The automotive vertical contributed over $1 billion in data center revenue, meaning automakers are increasingly adopting its AI solutions. Nvidia Chief Financial Officer (“CFO”) Colette Kress said during the fourth quarter FY 2024 earnings call:

Almost 80 vehicle manufacturers across global OEMs, new energy vehicles, trucking, robotaxi and Tier 1 suppliers are using NVIDIA’s AI infrastructure to train LLMs and other AI models for automated driving and AI cockpit applications. And in fact, nearly every automotive company working on AI is working with NVIDIA. As AV algorithms move to video transformers and more cars are equipped with cameras, we expect NVIDIA’s automotive data center processing demand to grow significantly.

Source: NVIDIA Fourth Quarter FY 2024 Earnings Call.

It also had positive things to say about AI adoption in the healthcare and financial services industries. CFO Kress said about healthcare:

In healthcare, digital biology and generative AI are helping to reinvent drug discovery, surgery, medical imaging and wearable devices. We have built deep domain expertise in healthcare over the past decade, creating the NVIDIA Clara healthcare platform and NVIDIA BioNeMo, a generative AI service to develop, customize and deploy AI foundation models for computer-aided drug discovery.

Source: NVIDIA Fourth Quarter FY 2024 Earnings Call.

For Financial Services, CFO Kress stressed how companies within the industry use AI for everything from “trading and risk management to customer service and fraud detection.” She gave an example of how American Express (AXP) used Nvidia AI to improve its fraud detection accuracy by 6%.

Investors should also remember that Nvidia software and services tied to the data center are a competitive advantage that helps tie customers to its AI hardware. On March 5, 2024, several news outlets reported that Nvidia had recently banned other companies from using its CUDA (Compute Unified Device Architecture) programming model on different hardware platforms like those of Advanced Micro Devices (AMD) and Intel (INTC). Since CUDA is a popular programming model for GPUs, this news could present problems for AMD’s and Intel’s efforts to catch up to Nvidia, as running existing CUDA code seamlessly on non-Nvidia hardware just became more challenging. Users who rely on existing CUDA code might be less likely to switch to AMD or Intel hardware if it becomes too challenging to use CUDA.

With competition on the rise, investors should consider Nvidia’s competitive advantage with CUDA and how much it creates vendor lock-in. Nvidia software and services, of which CUDA is a part, reached an annualized revenue run rate of $1 billion in the fourth quarter.

The Data Center segment also includes its networking solutions, which rose above a $13 billion annualized revenue run rate. InfiniBand solutions grew more than five times over the previous year’s comparable quarter. Until now, Nvidia primarily relied on its InfiniBand technology to connect its GPUs within the data center. However, some customers increasingly prefer using Ethernet technology for specific applications. As a result, the company developed Spectrum-X, an end-to-end Ethernet solution. Management plans to roll out this new networking product sometime in the first quarter of FY 2025.

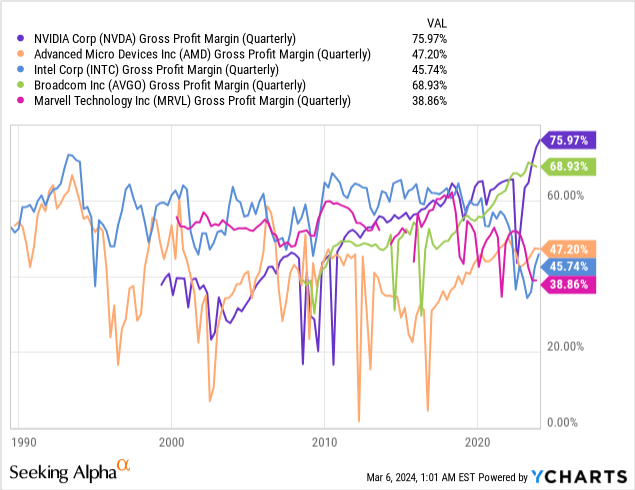

Let’s look at the rest of the profit and loss statement. Nvidia expanded its GAAP (Generally Accepted Accounting Principles) gross margins to 76%, the highest among its primary competitors, Intel and AMD. It’s also higher than other prominent semiconductor companies. CFO Kress attributes the robust gross margin performance to solid data center growth, mix, and better component costs. While these numbers are excellent, considering that the company forecasted GAAP gross margins of 76.3% for the first quarter and a return to the mid-70% range for the rest of the year, it may have maxed out its ability to increase gross margins.

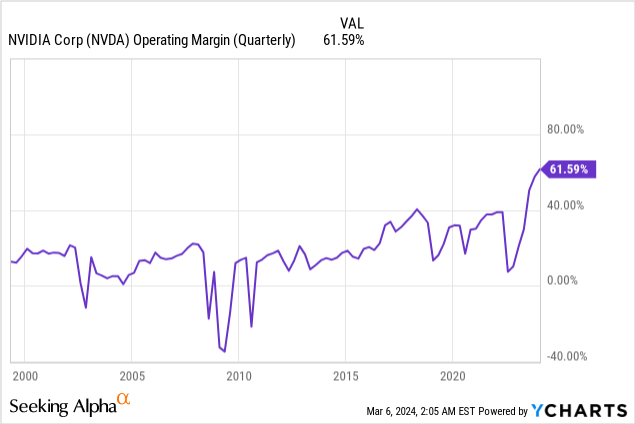

Nvidia operating margins might be even more impressive. In the fourth quarter, the company’s operating margins were larger than AMD’s and Intel’s gross margins, with a GAAP operating margin of 61.59%. Although this number is impressive, investors should not expect the company to expand operating margins much further.

Nvidia recorded a fourth-quarter diluted EPS of $4.93, up 33% sequentially and 765% year-over-year. The company is also in excellent financial condition. At the end of its fiscal 2024 fourth quarter, it had $25.98 billion in cash and short-term investments against $8.46 billion in long-term debt. The company has a debt-to-equity (D/E) ratio of 0.26, meaning shareholder equity primarily funds the company. It has a debt-to-EBITDA (Earnings Before Interest, Taxes, Depreciation, and Amortization) ratio of 0.19. A number less than 1.0 means the company generates enough cash from operations to take care of its debts and has enough left over to do other things, such as stock buybacks. The company has a quick ratio of 3.67, meaning it has more than enough liquid assets to pay its short-term obligations.

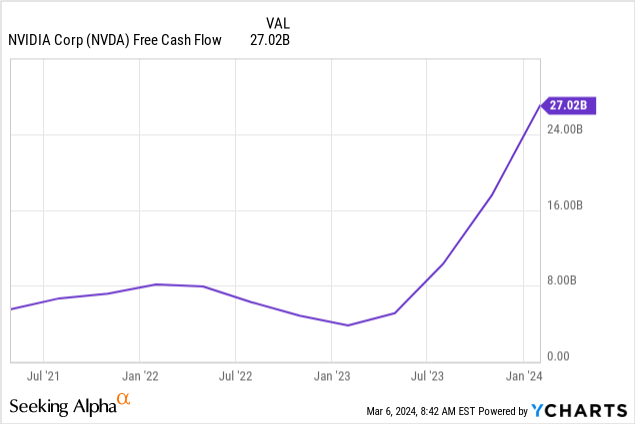

The chart below shows that Nvidia generated an astounding trailing 12-month free cash flow (“FCF”) of $27.02 billion.

Nvidia returned $2.8 billion to its investors in the fourth quarter through share repurchases and cash dividends. During FY 2024, the company used $9.9 billion in cash for shareholder returns, including $9.5 billion in share repurchases.

The competitive environment

If you decide to invest in Nvidia, it may be wise to avoid becoming overconfident in the company’s dominance and excellent fundamentals. Once upon a time, Andy Grove, the leader of Intel when it was a dominant chip company, said, “Only the paranoid survive.” It’s too bad the subsequent leaders of Intel didn’t fully heed that advice, and the wind of change eventually knocked the company off its perch.

Prudent Nvidia investors should continually look for signs of whether industry dynamics, existing competitors, or technology that no one has thought of yet reaches a position that could disrupt the company.

Advanced Micro Devices and Intel are the company’s most visible and direct competitors. In December 2023, AMD launched AMD Instinct MI300 Series chips, direct competitors to Nvidia’s H100 chips, which gained fame as the chip powering OpenAI’s popular ChatGPT. Additionally, Intel released its Gaudi AI chip that should compete against both Nvidia’s H100 and AMD’s MI300 series. Although Nvidia is still ahead after introducing its newest AI chip, the H200, in November of 2023, Intel and AMD are hot on Nvidia’s heels.

One of the changing industry dynamics that could allow Intel and AMD to catch up is the shift toward inferencing. Nvidia may have a considerable advantage in developing Graphics Processing Units (GPUs), which companies mainly use for training AI models, but the more significant opportunity in AI is in inferencing. Other technologies may be better suited for inferencing than GPUs, such as application-specific integrated circuits (“ASICs”), field-programmable gate arrays (“FPGAs”), and possibly others. ASICs are custom chips designed for a specific application or function. FPGAs are similar to ASICs, except that users can reprogram the chip to perform different functions rather than having its function fixed in advance.

Seeking Alpha published an article recently that stated (emphasis added):

Nvidia and AMD both said how fast inference is becoming the “vast majority” of the AI GPU total addressable market, analysts led by Timothy Arcuri said. Nvidia said it sees roughly 70% of its total data center demand coming from inferencing, while AMD said roughly 65% to 70% its $400B AI total addressable market by 2027 is from inference.

AMD is the market leader in FPGAs after acquiring Xilinx several years ago. Intel is in second place in the FPGA market. In addition, many Nvidia customers want to compete against it by designing their own ASICs for internal use. This trend started years ago when Alphabet introduced its first ASIC for internal use in its cloud products. It named this ASIC the Tensor Processing Unit, or TPU, in 2017.

Now, virtually all of Nvidia’s customers are developing custom-made chips. For instance, Amazon developed an AI inference chip for AWS named Inferentia. According to Reuters, Meta Platforms has developed an AI chip to deploy for internal use in 2024. In late 2023, Microsoft introduced two custom chips at Microsoft Ignite. A Microsoft press release stated,

“Today at Microsoft Ignite, the company unveiled two custom-designed chips and integrated systems that resulted from that journey: the Microsoft Azure Maia AI Accelerator, optimized for artificial intelligence tasks and generative AI, and the Microsoft Azure Cobalt CPU, an Arm-based processor tailored to run general purpose compute workloads on the Microsoft Cloud.”

Additionally, smaller companies have technologies that some companies may use for specialized applications. These companies include Cerebras, with its Wafer-Scale Engine technology, and Graphcore’s Intelligence Processing Unit (IPU) technology.

Nvidia is responding to this threat. According to a Seeking Alpha article, the company is establishing a new unit to design custom-made inferencing chips for cloud providers. However, it may not have the same advantages in the custom-made inferencing arena as it has with its mainstay GPU-based chips designed for training. As the AI and accelerated computing chip industry shifts toward emphasizing various AI inference applications, the likelihood that Nvidia can maintain its current 80% to 90% market share in AI chips has decreased. Monitoring the competitive landscape is vital if you invest in the stock.

Other risks

Although Nvidia bulls may be excited about generative AI and the possibilities of AI factories, there are risks that concepts like AI factories may never evolve or take so long to evolve that it’s outside an investor’s time horizon. In such a case, the company’s future revenue and earnings growth may underperform those investors’ expectations.

One of the kinks that AI developers still need to work out about generative AI technology is its propensity to occasionally produce wrong answers, a potential disaster if it occurs in mission-critical applications. Additional problems include biases in training data that can produce potentially discriminatory outcomes, potential legal questions involving patents or copyrights, and concerns about the environmental impact. Since generative AI requires massive amounts of power, a proliferation of AI factories might stress the power grid and negatively impact the environment.

Valuation

Seeking Alpha lists NVIDIA as trading at a forward price-to-earnings (P/E) ratio of 37.96. While that number is above its sector’s median forward P/E of 28.62, it is below the company’s five-year average forward P/E ratio of 62.61. While forward P/E may be high compared to its industry, its expected earnings-per-share (“EPS”) growth rate of 89.77% is much higher than that of other hardware companies with data center products.

| Company | Forward P/E | EPS Growth Rate Estimate 2024 | Revenue Growth Estimate 2024 |
| --- | --- | --- | --- |
| NVIDIA | 35.27 | 89.77% | 80.88% |
| AMD | 56.87 | 37.55% | 13.87% |
| Intel | 33.85 | 29.86% | 6.06% |
| Broadcom (AVGO) | 30.01 | 10.59% | 39.14% |
| Marvell (MRVL) | 52.38 | -28.73% | -7.07% |

Data Source: Seeking Alpha.

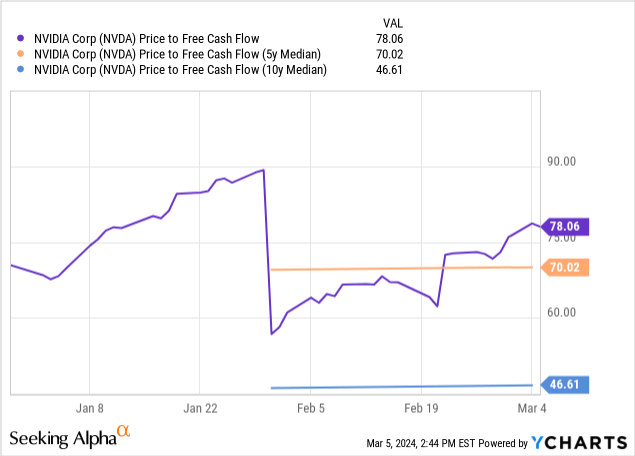

The company trades at a price-to-FCF (P/FCF) of 78.06, much higher than its five- and ten-year median P/FCF ratio, which could indicate overvaluation.

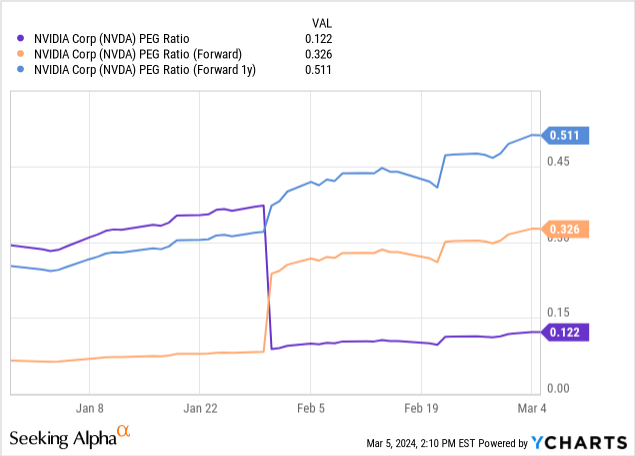

Let’s compare the company’s P/E to its growth rate. The following chart shows Nvidia’s Price-to-Earnings-to-Growth (“PEG”) ratio on a trailing 12-month, a forward, and a one-year forward basis. All three measures are below 1.0, indicating the market may undervalue the company’s earnings growth rate.
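To make the PEG comparison concrete, here is a minimal sketch that applies the standard definition (P/E divided by the expected EPS growth rate in percent) to the forward P/E and 2024 EPS growth estimates from the peer table earlier in this section. These are not the chart's exact inputs, so the results will differ from the three PEG measures shown there; the names `PEERS` and `peg_ratio` are hypothetical, and treating negative growth as "not meaningful" is an assumption of this sketch.

```python
from typing import Optional

# (forward P/E, estimated 2024 EPS growth in percent), copied from the peer table.
PEERS = {
    "NVIDIA": (35.27, 89.77),
    "AMD": (56.87, 37.55),
    "Intel": (33.85, 29.86),
    "Broadcom": (30.01, 10.59),
    "Marvell": (52.38, -28.73),
}


def peg_ratio(pe: float, growth_pct: float) -> Optional[float]:
    """Return P/E divided by growth in percent, or None when growth is not positive."""
    if growth_pct <= 0:
        return None  # PEG is not meaningful for shrinking earnings
    return pe / growth_pct


pegs = {name: peg_ratio(pe, growth) for name, (pe, growth) in PEERS.items()}

for name, peg in pegs.items():
    label = "n/a (negative growth)" if peg is None else f"{peg:.2f}"
    print(f"{name:<10} PEG: {label}")
```

On these inputs, Nvidia is the only company in the table with a PEG below 1.0, which is consistent with the point that its high P/E is paired with a much higher growth estimate than its peers.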

The following reverse Discounted Cash Flow (“DCF”) analysis shows what the closing stock price on March 5, 2024, implied about Nvidia’s FCF growth over the next ten years.

Reverse DCF

| Item | Value |
| --- | --- |
| Fourth quarter FY 2024 reported Free Cash Flow TTM (trailing 12 months, in millions) | $27,021 |
| Terminal growth rate | 3% |
| Discount Rate | 10% |
| Years 1 – 10 growth rate | 25.9% |
| Stock Price (March 5, 2024, closing price) | $859.64 |
| Terminal FCF value | $278.481 billion |
| Discounted Terminal Value | $1,533.808 billion |
| FCF margin | 44.35% |

According to the above reverse DCF, Nvidia must grow its FCF at 25.9% annually over the next ten years to justify the stock price. That’s a steep ask. If you go by Seeking Alpha’s survey of consensus analyst revenue estimates, the company will grow revenue at around 25% annually over the next five years and around 17% over the next ten years.

Additionally, with rising competition, I am dubious that Nvidia can expand its FCF margin much further. In fact, FCF margins may decline from this point over time, presenting a headwind for Nvidia’s ability to achieve FCF growth rates of 26% or higher.

One of the few ways that I believe the stock is justified in going much higher is if the AI opportunity is much larger than anyone could have imagined, large enough that even if Nvidia does lose market share, it could maintain a revenue growth rate of at least 27% for the next ten years and an FCF margin above 40%.
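The arithmetic behind the reverse DCF table can be sketched in a few lines. The example below assumes a plain two-stage model: FCF grows at a constant rate for ten years, followed by a Gordon-growth terminal value, all discounted at the table's 10% rate. The exact model behind the table is not spelled out in the article, so this is an illustration under that assumption, and the function names `dcf_value` and `implied_growth` are hypothetical. With the table's inputs, it lands on roughly the same terminal FCF and discounted terminal value that the table lists.

```python
# Inputs copied from the reverse DCF table (FCF in millions of dollars).
FCF_TTM = 27_021.0
TERMINAL_GROWTH = 0.03
DISCOUNT_RATE = 0.10
YEARS = 10


def dcf_value(growth: float) -> dict:
    """Two-stage DCF: constant growth for YEARS, then a Gordon-growth terminal value."""
    pv_fcf = 0.0
    fcf = FCF_TTM
    for year in range(1, YEARS + 1):
        fcf *= 1 + growth                           # FCF in this year
        pv_fcf += fcf / (1 + DISCOUNT_RATE) ** year  # discount it to today
    terminal_fcf = fcf * (1 + TERMINAL_GROWTH)       # first year after the forecast
    terminal_value = terminal_fcf / (DISCOUNT_RATE - TERMINAL_GROWTH)
    discounted_tv = terminal_value / (1 + DISCOUNT_RATE) ** YEARS
    return {
        "terminal_fcf": terminal_fcf,
        "discounted_tv": discounted_tv,
        "total": pv_fcf + discounted_tv,
    }


def implied_growth(target_value: float, lo: float = 0.0, hi: float = 1.0) -> float:
    """Reverse step: bisect for the growth rate whose DCF value matches target_value."""
    while hi - lo > 1e-7:
        mid = (lo + hi) / 2
        if dcf_value(mid)["total"] < target_value:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


result = dcf_value(0.259)
print(f"Terminal FCF:              ${result['terminal_fcf'] / 1000:,.3f} billion")
print(f"Discounted terminal value: ${result['discounted_tv'] / 1000:,.3f} billion")

# Round trip: feeding the resulting value back in recovers the 25.9% growth rate.
print(f"Implied growth rate:       {implied_growth(result['total']):.1%}")
```

A reverse DCF runs the model backward: instead of choosing a growth rate and computing a value, it takes the market's value as given and searches for the growth rate that produces it. Bisection works here because the model's value rises steadily as the growth rate rises. In practice, `target_value` would be the company's market value, which depends on a share count that this article does not state.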

Why it’s a Hold

Nvidia stock may continue to increase this year because the company may keep putting up mind-blowing numbers, and there is so much positive investor sentiment. After all, demand for its products should remain high, and serious competition may not begin to impede the company for several more years. You should continue riding this train if you are a momentum investor.

However, in the longer term, investors might start seeing revenue growth and margins tail off as multiple competitive factors collectively eat into the company’s market share. If you are a potential investor looking at Nvidia for the first time and want to invest for three to five years or longer, you should be cautious, as the risks may outweigh the rewards after the stock’s massive rise over the last year.

If you are an existing Nvidia Corporation shareholder, consider selling some shares to lock in gains and continue holding the rest. I rate Nvidia stock a Hold.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of GOOGL, AMZN either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.