Summary:

- Amazon dominates the cloud business with AWS.

- Nvidia is leveraging its chips to build its own cloud business within AWS.

- The two companies, though showcasing a strong collaboration, are preparing to compete head-to-head.

- I explain how this battle is being fought and the consequences I see for the rest of the year.

Ociacia/iStock via Getty Images

When we deal with huge corporations such as Amazon.com, Inc. (NASDAQ:AMZN) or NVIDIA Corporation (NVDA), the main risk is simply echoing what has already been said and written everywhere. And yet, most of us are interested in these huge, dominant companies; in many cases, they are major holdings in our portfolios. Inevitably, we have to find a way to research them and understand their business deeply, drawing on the available information while filtering out the noise.

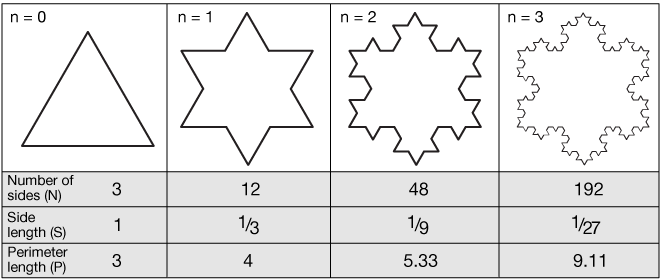

For this reason, I have chosen to approach a company such as Amazon using what I call fractal analysis. Fractals are geometric shapes that look self-similar at whatever scale we observe them.

What do I mean by this image? With huge companies such as Amazon, I have learned that when I look closely at a specific issue, product, or business (that is, when I zoom in on a smaller portion of the larger company), I learn more about how the company operates, and I often refine my understanding of the larger picture. In other words, I dive into a smaller-scale portion to grasp how the whole figure works. This fractal-inspired research approach helps me spot patterns that recur at smaller scales, much as in a snowflake, where the same triangle recurs over and over. The same is often true of huge businesses.

xaktly.com

Using this approach, I first researched Amazon when it released “The Rings of Power” series, to understand its value. The investment seemed to make no economic sense but, in truth, it turned out to be accretive to the company.

I then considered the influence of Costco Wholesale Corporation (COST) on Amazon Prime and explained how Amazon expanded Costco’s business model.

Now, as the title reveals, it is time to tackle a topic I have not yet seen covered on Seeking Alpha, though over the past month I have seen it spreading across the news: the relationship between Amazon and Nvidia. At first glance, it looks like a strong collaboration. But under the surface, each is trying to outdo the other in an arm-wrestling match for dominance of the AI infrastructure market. Both companies, as we will see, want to seize part of the other’s dominance. And, lo and behold, it seems Amazon is taking a hit right now.

Amazon vs. Nvidia: The Context

When ChatGPT was first released, the Big Bang of AI took place. Investors first crowded into the then-depressed Microsoft stock, quickly pushing it to all-time highs. But soon a new winner emerged and far outperformed the market. Nvidia became the face of AI as the leader in accelerated computing, thanks to its GPU architecture. At first, GPUs were used for video games and movies to simulate human imagination. Now their capabilities have expanded to simulating human intelligence by powering deep learning. This is the form of AI, as Nvidia explains in its annual report,

… in which software writes itself by learning from large amounts of data, and can serve as the brain of computers, robots, and self-driving cars that can perceive and understand the world. GPU-powered deep learning is being adopted by thousands of enterprises to deliver services and products that would have been immensely difficult with traditional coding.

But what matters even more for our topic is what we read a few lines later:

The world’s leading cloud service providers, or CSPs, and consumer internet companies use our GPUs and broader data center-scale accelerated computing platforms to enable, accelerate, or enrich the services they deliver to billions of end-users, including search, recommendations, social networking, online shopping, live video, translation, AI assistants, navigation, and cloud computing.

Here we are. AWS is the largest cloud service provider in the world, and it uses Nvidia’s GPUs. So far, this might not seem like a big issue. But Nvidia is no ordinary supplier. Currently, it is close to a monopoly, and everyone needs its GPUs to power the computing AI requires.

On the one hand, Nvidia could simply leverage its dominant position by letting customers bid up its GPUs’ prices, in a race to extract as much value as possible from its products. But this would be short-sighted. Nvidia knows that, sooner or later, this cycle will soften. The chip industry has always been cyclical, and chipmakers have seen peaks and troughs in their revenue.

As a result, Nvidia is trying to leverage its dominance in hardware to become a key player in software and cloud services. As Business Insider reported a few days ago, Nvidia is going after Amazon’s recurring revenue so that it is ready when GPU demand slows down. This is why Nvidia launched DGX Cloud, “offering scalable capacity built on the latest Nvidia architecture and co-engineered with the world’s leading cloud service providers”. What does this mean? Simply put, Nvidia is “partnering” with AWS and its peers to use their data centers, renting servers powered by its chips and leasing them to Nvidia’s own customers. In other words, Nvidia is using AWS infrastructure to compete with AWS. Why does Amazon accept this? It is forced to, at least for now. Nvidia sees so much demand for its chips that it can simply ship them elsewhere, leaving Amazon without this key component.

Of course, speculation had been going on for a while. For example, last month, rumors spread that Amazon had halted orders of Nvidia Hopper chips. Amazon was quick to reply that it had not halted its orders, but had simply decided to postpone its purchases until the latest-generation Blackwell GPUs were released. It also restated that the two companies cooperated successfully and that the transition from Hopper to Blackwell chips had been decided by both parties.

And yet, the way the news came out possibly hints that the relationship between the two giants is not idyllic and that Amazon tried to flex its muscles, showing it can withdraw its orders if it wants to.

At the same time, we know Nvidia can stop its deliveries and this would cause a problem for Amazon.

This is why we have to take a step back and rewind this tale to mid-2023, when Amazon announced it was working on custom chips called Inferentia and Trainium to offer AWS customers an alternative to Nvidia’s GPUs for training and running their LLMs. This move recalls how Apple broke its relationship with Intel to design its own M-series chips and gain control of its core technologies and its supply chain.

Amazon wants to follow the same path and eventually rely only on its own chips to run its AI servers. Nvidia is aware of this and is leveraging its current dominance to set up a competing cloud business while it is still Amazon’s core GPU supplier. This is no win-win situation; rather, it is closer to a “Kiss of Death”, where both companies, while embracing one another, are actually trying to poison each other.

Amazon’s Focus On AI Infrastructure

So far, Nvidia’s technology seems better, but Amazon is working hard to offer better price-performance. In part, this is already paying off. In his 2023 shareholder letter, Andy Jassy wrote:

This past fall, leading FM-maker, Anthropic, announced it would use Trainium and Inferentia to build, train, and deploy its future FMs. We already have several customers using our AI chips, including Anthropic, Airbnb, Hugging Face, Qualtrics, Ricoh, and Snap.

But the whole letter dealt with AI and the opportunity it represents for Amazon. Mr. Jassy wanted to explain how Amazon thinks about this new technology, and he tackled the issue by introducing a concept dear to Amazon almost from the beginning: primitives. In Amazon’s way of thinking, as Jassy explained, the three layers of the AI stack demand three different kinds of investment:

The bottom layer is the most important one for our topic. In Jassy’s words:

… it is the layer for developers and companies wanting to build foundation models (“FMs”). The primary primitives are the compute required to train models and generate inferences (or predictions), and the software that makes it easier to build these models. Starting with computing, the key is the chip inside it. To date, virtually all the leading FMs have been trained on Nvidia chips, and we continue to offer the broadest collection of Nvidia instances of any provider. That said, supply has been scarce and cost remains an issue as customers scale their models and applications. Customers have asked us to push the envelope on price-performance for AI chips, just as we have with Graviton for generalized CPU chips. As a result, we’ve built custom AI training chips (named Trainium) and inference chips (named Inferentia). In 2023, we announced second versions of our Trainium and Inferentia chips, which are both meaningfully more price-performant than their first versions and other alternatives. […] We already have several customers using our AI chips.

[…] The middle layer is for customers seeking to leverage an existing FM, customize it with their own data, and leverage a leading cloud provider’s security and features to build a GenAI application – all as a managed service. Amazon Bedrock invented this layer and provides customers with the easiest way to build and scale GenAI applications with the broadest selection of first- and third-party FMs, as well as leading ease-of-use capabilities that allow GenAI builders to get higher quality model outputs more quickly.

[…] The top layer of this stack is the application layer. We’re building a substantial number of GenAI applications across every Amazon consumer business […]. We’re also building several apps in AWS, including arguably the most compelling early GenAI use case – a coding companion. We recently launched Amazon Q, an expert on AWS that writes, debugs, tests, and implements code, while also doing transformations, and querying customers’ various data repositories to answer questions, summarize data, carry on coherent conversations, and take action. Q is the most capable work assistant available today and evolving fast.

[…] These AWS services, at all three layers of the stack, comprise a set of primitives that democratize this next seminal phase of AI and will empower internal and external builders to transform virtually every customer experience that we know (and invent altogether new ones as well). We’re optimistic that much of this world-changing AI will be built on top of AWS.

It is not hard to read between the lines here, from the perspective of the relationship between Nvidia and Amazon. Amazon has a value-creation mindset inherited from Jeff Bezos and has always wanted to be a company of inventors. Here, we see Amazon tackling a twofold problem: scarce supply and high chip prices. By developing its own Trainium and Inferentia chips, Amazon aims to become independent of Nvidia.

During Amazon’s Q1 2024 earnings call, we heard more evidence of what Amazon is doing, as Andy Jassy once again mentioned Nvidia positively but then highlighted Amazon’s progress with its own custom chips. Speaking of the bottom layer, he said:

We have the broadest selection of NVIDIA compute instances around, but demand for our custom silicon, training, and inference is quite high, given its favorable price-performance benefits relative to available alternatives. Larger quantities of our latest generation, Trainium 2 is coming in the second half of 2024 and early 2025. Companies are also starting to talk about the eye-opening results they’re getting using SageMaker, our managed end-to-end service has been a game changer for developers in preparing their data for AI, managing experiments, training models faster, lowering inference latency, and improving developer productivity.

The narrative is clear: Amazon reassures the market that it offers the broadest selection of Nvidia instances, but then increasingly stresses the benefits of its own chips. This is even clearer in these words from the same call:

Those [LLM] models consume an incredible amount of data with a lot of tokens, and they’re significant to actually go train. And a lot of those are being built on top of AWS, and I expect an increasing amount of those to be built on AWS over time because of our operational performance and security and — as well as our chips, both what we offer and from NVIDIA. But if you take Anthropic, as an example, they’re training their future models on our custom silicon on Trainium.

Here, the game Amazon wants to play is clear: Nvidia is a competitor, not a supplier. At least, that is what it will be in the long term. Right now, it must also be a supplier, and Amazon has to let Nvidia into its ecosystem. Nvidia knows this and is wisely using its position to develop its own competing cloud.

The Implication For Amazon’s Financials

I doubt anyone can see far enough into the future to tell what the outcome of this competition will be. Chances are Nvidia will become one of the leading cloud service players, perhaps ranking among the top four. I find it hard to imagine AWS losing its dominant role in the cloud, and I don’t expect that to happen any time soon.

But what is happening has consequences that Amazon’s investors should be aware of. They have to do with capex and, therefore, with free cash flow.

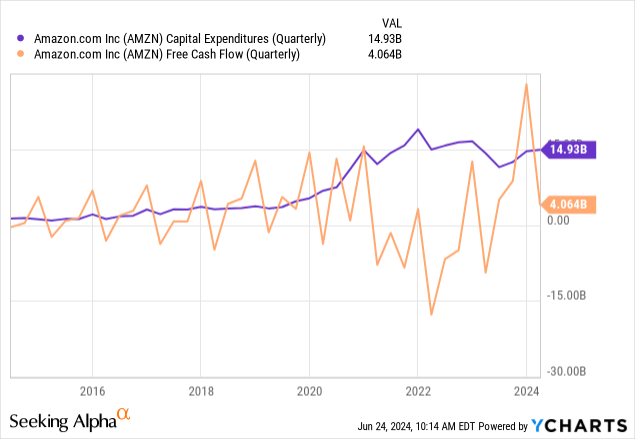

Remember what happened when the pandemic hit? Amazon saw a huge opportunity to double its fulfillment footprint and build an unbeatable logistics business. This required heavy capital expenditures. The graph above shows that, starting in mid-2020, Amazon’s quarterly capex almost tripled in just a few quarters. Unsurprisingly, free cash flow plunged deep into negative territory, with Amazon reporting negative FCF of $15 billion at the end of Q1 2022.

We all remember how the stock was punished, losing over half of its market cap and falling to the low $80s. Of course, that was the buying opportunity we had been waiting for, and those who took advantage of the dip have seen their investment double since then.

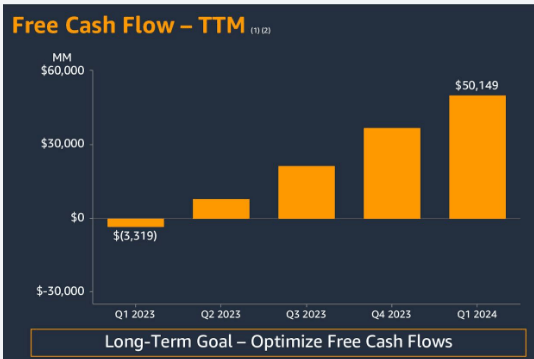

At the end of 2022, however, Andy Jassy realized that investors were demanding proof that Amazon’s huge investments would generate higher returns. As soon as Amazon switched into profit mode, its FCF skyrocketed, and estimates now expect the company to close FY 2024 with $50 to $60 billion in FCF. A year ago, Amazon’s TTM FCF was still negative by over $3 billion; as of its Q1 2024 report, TTM FCF was already above $50 billion. What a swing!

AMZN Q1 2024 Earnings Presentation

In the meantime, however, the AI Big Bang happened, and Amazon saw a new opportunity to grow and become even more entrenched in today’s economy. As a result, Jassy started warning investors that this year Amazon’s capex would grow more than previously anticipated, with the obvious consequence that FCF would come in below expectations. Here is how the topic was addressed during the Q1 earnings call:

We spend most of the capital upfront. But as you’ve seen over the last several years, we make that up in operating margin and free cash flow down the road as demand steadies out. And we don’t spend the capital without very clear signals that we can monetize it this way. […] We’ve done this for 18 years. We invest capital and resources upfront. We create capacity very carefully for our customers. And then we see the revenue, operating income, and free cash flow benefit for years to come after that, with strong returns on invested capital.

With Nvidia breathing down its neck, Amazon will surely invest faster and more heavily to decouple itself from a dangerous supplier.

At the end of Q1 2023, Amazon reported TTM capex of $57.6 billion, which had moderated to $49 billion by the end of Q1 2024. On its last earnings call, Amazon said it expects capex to increase meaningfully year over year because of infrastructure spending to support AWS. With over $14 billion in capex reported in Q1, Amazon indicated this should be the low quarter of the year. I would not be surprised to see 2024 capex top $60 billion. What does this mean?

The math is simple: Amazon’s FCF is heading south once again and could land, by the end of this year, somewhere between $0 and $15 billion. This might cause some volatility in a stock that trades at high multiples largely because many investors know that, if Amazon were to focus on profitability, its multiples would quickly compress, making the stock look almost cheap.
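Because this argument rests on simple arithmetic, a short Python sketch can make the mechanics explicit. It relies on two assumptions that are not in Amazon’s guidance: that quarterly capex after Q1 grows at a constant, purely hypothetical quarter-over-quarter rate, and that FCF is approximated as operating cash flow minus capex (Amazon’s reported FCF nets capex against proceeds from property sales and incentives, which is ignored here). If Q1’s roughly $14 billion really is the low quarter, full-year capex is at least 4 × $14 billion = $56 billion; the helper names below are illustrative only.

```python
# Back-of-the-envelope sketch with hypothetical helpers (not Amazon's methodology).
# Figures are in billions of USD and taken from the article's approximations;
# the quarter-over-quarter growth rates are illustrative assumptions.


def projected_capex(q1_capex: float, qoq_growth: float, quarters: int = 4) -> float:
    """Full-year capex if each quarter grows at a constant rate over the previous one.

    With qoq_growth >= 0, Q1 is the low quarter (as Amazon guided), so the
    total can never fall below quarters * q1_capex.
    """
    return sum(q1_capex * (1 + qoq_growth) ** i for i in range(quarters))


def fcf_change(new_capex: float, old_capex: float, ocf_change: float = 0.0) -> float:
    """Change in free cash flow under the simplified identity FCF = OCF - capex.

    The level of operating cash flow (OCF) cancels out; only its change matters,
    so extra capex lowers FCF dollar for dollar unless OCF grows to offset it.
    """
    return ocf_change - (new_capex - old_capex)


TTM_CAPEX_Q1_2024 = 49.0  # TTM capex at the end of Q1 2024, per the article
Q1_2024_CAPEX = 14.0  # "over $14 billion", so treated here as a floor

if __name__ == "__main__":
    for growth in (0.00, 0.05, 0.10):
        capex = projected_capex(Q1_2024_CAPEX, growth)
        delta = fcf_change(capex, TTM_CAPEX_Q1_2024)
        print(f"QoQ growth {growth:.0%}: 2024 capex ~{capex:.1f}B, "
              f"FCF change vs. TTM at flat OCF {delta:+.1f}B")
```

With a hypothetical 5% quarter-over-quarter increase, the sketch puts 2024 capex at about $60.3 billion, roughly $11 billion above the TTM figure. How far FCF actually falls also depends on how much operating cash flow grows over the same period, which the `ocf_change` parameter lets you vary.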

Takeaway

Amazon will once again reshape its financials to fight a battle and win a race in which the stakes are its independence and the protection of its moat. Doubting the success of Amazon’s chips and betting against them seems risky, as Amazon has a culture of invention and will probably figure out how to make the chips it needs to run its cloud properly. Believing Nvidia will end up with no share of the pie is risky too: the company is leveraging its position and will probably end up with a new business line that could be quite relevant. In the end, I am inclined to think both companies will fare quite well but will probably part ways sooner or later. What changes, however, is Amazon’s short-term capex. This might significantly influence how the stock trades, creating some major dips that investors can take advantage of. I rate Amazon a buy because, long term, I see it becoming more and more relevant to the future of the cloud and its AI-related services. For now, though, I also see it as something of a hold, because the next 2-3 quarters may bring the surprise of low FCF, which could push the stock down. If Amazon dips, it might soon enter strong buy territory.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of AMZN either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.