Summary:

- The bears have been pointing out some downside risks as reasons for investors to tame their future growth expectations.

- In this article, we help investors better understand Nvidia Corporation’s positioning amid the risks.

- The market is too focused on the “Forward P/E” metric, but Nvidia is actually dirt cheap based on another, more comprehensive valuation metric that accounts for the pace of Nvidia’s earnings growth.

- Nvidia should be trading at around $1,928 per share, based on the valuation levels that the stock has historically traded at, and its dominant market positioning amid the AI revolution.

- Nvidia stock remains a compelling “Buy.”


Nvidia Corporation (NASDAQ:NVDA) once again surprised the market to the upside with its blowout Q4 FY 2024 earnings report. CEO Jensen Huang reassured investors that the company foresees continued strong growth into 2025, which is essential to sustaining the rally in NVDA stock.

In a previous article, we discussed in simplified terms Nvidia’s HGX platform, through which it masterfully cross-sells its various data center solutions as one big bundled product instead of selling individual chips. This strategy yields significant advantages, such as greater control over product performance and customer experience, enabling Nvidia to tie customers into its ecosystem and fortify its moat. We assigned a “buy” rating to Nvidia stock given this strong ability to build a moat around its AI leadership position.

We are once again reiterating a “buy” rating on the stock, as the semiconductor giant’s savvy software strategies drive both customer loyalty and profit margin expansion opportunities, and enable Nvidia to maintain its leadership position as we move into the next stages of this AI revolution.

Bull case remains intact

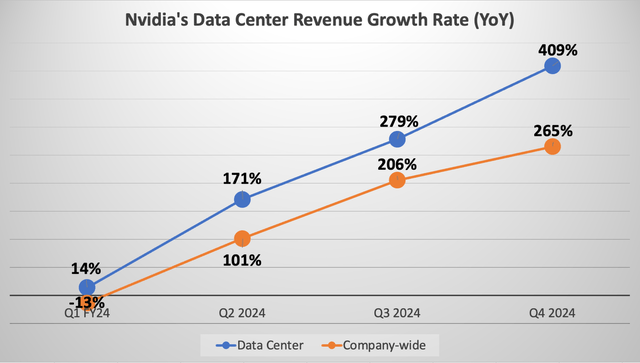

It is now well established that Nvidia offers the leading AI chip on the market, with data center revenue more than tripling over the past year to reach $47.5 billion in FY 2024.

Nvidia has revealed that it has “an installed base of several hundred million GPUs,” and now the company is seeking to build a flourishing software business on top of this extensive installed base. This software opportunity is indeed the next leg of growth supporting the continued bullish thesis on the stock.

In order to understand the software-driven bull case for Nvidia, it is important to appreciate the “Multi-Instance GPU” [MIG] chip design. In simple terms, an “instance” is an isolated slice of a GPU with its own dedicated memory, cache and compute cores, and MIG allows a single GPU to be partitioned into as many as seven separate instances. This technique essentially allows more workloads to run on a single GPU at the same time, which translates to more software applications running simultaneously on each chip.
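To make the partitioning idea concrete, here is a toy model. It assumes a GPU exposes seven equal compute slices and that each instance uses a whole number of them; real MIG profiles also tie a fixed amount of memory to each instance and only allow certain combinations, which this sketch deliberately ignores. The function name `fits_on_gpu` is hypothetical.

```python
# Toy model of MIG-style partitioning (simplified; not Nvidia's actual placement rules).
# Assumption: the GPU has 7 equal compute slices and each instance uses whole slices.
TOTAL_SLICES = 7


def fits_on_gpu(instance_sizes: list[int], total_slices: int = TOTAL_SLICES) -> bool:
    """Return True if the requested instances fit within the GPU's compute slices."""
    if any(size < 1 for size in instance_sizes):
        raise ValueError("each instance needs at least one slice")
    return sum(instance_sizes) <= total_slices


# Seven one-slice instances: seven isolated workloads running side by side.
print(fits_on_gpu([1] * 7))
# An eighth one-slice instance does not fit on the same GPU.
print(fits_on_gpu([1] * 8))
# Mixed sizes also fit in this toy model (real hardware may restrict such layouts).
print(fits_on_gpu([3, 2, 1, 1]))
```

The point of the sketch is only the capacity argument: more isolated instances per chip means more software services can run on the same installed hardware.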

The greater capacity of each GPU to run more software services indeed enables Nvidia to sell more software services to its customers. Considering that Nvidia boasts “an installed base of several hundred million GPUs,” the software opportunity is massive. In fact, Nvidia estimates that the long-term annual market opportunity for its ‘Nvidia AI Enterprise’ software and DGX Cloud is $150 billion.

For context, Nvidia’s software revenue reached an annualized run rate of around $1 billion in Q4, which means there is a lot more room for software revenue growth ahead for the tech giant, strengthening the bull case for the stock.

Dismantling the bear cases

Now, with the stock price more than tripling over the past year, the bears are poking holes in the training and inferencing phases of this AI revolution, arguing that Nvidia’s pace of revenue and earnings growth could taper off.

Barclays’ credit research analyst Sandeep Gupta highlighted that:

“AI chip demand will eventually normalize once the initial training build has been completed. The inference phase of AI is going to require less computing power than the training phase.”

Now admittedly, there is credibility to the point that chip demand will eventually slow once data center customers have sufficiently transformed their infrastructure away from general-purpose computing (CPU-based) to accelerated computing (GPU-based).

For context, the training phase is where enormous amounts of data are fed into AI models to teach them to recognize and understand various forms of text, images and videos, to be able to respond to user queries when these models are deployed.

The inferencing phase is indeed where these models are deployed. The AI models are used to power various forms of end-user applications (e.g., ChatGPT or Microsoft Copilot) to be able to generate their own text, images and videos based on what users prompt them to do.

Training will be a continuous process

While it is true that inferencing will grow as a proportion of total AI workloads as we proceed ahead in this AI revolution, it is important to understand that training is not going to end.

New AI models will continuously be built as companies learn more and more about the strengths and drawbacks of their current models through deployment via various applications for end-users. For instance, OpenAI continues to introduce new versions of its GPT models, the latest of which is GPT-4 Turbo.

In fact, in a previous article about Microsoft Azure’s AI-driven growth prospects, we discussed the following:

“[A] key point made by the CEO [Satya Nadella] was that these workloads have ‘lifecycles.’ What does he mean by that? Well, keep in mind that new and more advanced AI models will continue to be introduced over time, which cloud customers will adopt to power their business services, replacing their old workloads using previous-generation models.”

Furthermore, tech firms will also strive to build more powerful and versatile AI models as new use-cases of generative AI arise across industries, which will require increasingly more powerful AI chips.

For instance, OpenAI’s latest Sora AI model offers text-to-video capabilities, which require a lot more computational power to train than the text-to-text GPT models. And for such video-generation models to offer real value in the world of entertainment, numerous iterations will be needed to achieve new capabilities like synchronizing sound with video, which will require the most advanced accelerated GPUs available.

As the race heats up among tech companies to continuously produce newer and more versatile AI models, Nvidia’s data center customers, such as cloud service providers (e.g., Microsoft Azure, Google Cloud), consumer internet companies (e.g., Meta Platforms) and enterprise software companies (e.g., Adobe, ServiceNow), will remain motivated to regularly upgrade their AI infrastructure as Nvidia launches new iterations of its AI chips and other data center products (such as networking equipment).

Nvidia will continue to lead in the inferencing phase

Now moving onto another risk, the bears have argued that while Nvidia has dominated the market for AI chips that are used for training, it may not dominate the market for inferencing. For context, the global AI Inference Chip Market was estimated to be valued at $15.8 billion in 2023, and expected to become a $90.6 billion market by 2030.
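For scale, the two market-size estimates above imply a compound annual growth rate (CAGR). A minimal sketch, taking the 2023 and 2030 figures at face value and assuming smooth compounding over the seven years between them (the `cagr` helper is illustrative):

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# AI inference chip market estimates cited above, in billions of US dollars.
inference_2023 = 15.8
inference_2030 = 90.6

rate = cagr(inference_2023, inference_2030, years=2030 - 2023)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 28.3%
```

In other words, the estimate assumes the inference chip market grows by a bit more than a quarter every year through 2030.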

The training stage is deemed to be a lot more compute intensive than the inferencing phase. So the argument made by bears and Nvidia’s competitors is that customers will not need Nvidia’s ultra-powerful and expensive chips for the inferencing of their AI models, and instead will purchase less powerful chips from the likes of AMD and Intel.

Now here’s the problem with that argument.

It is important to understand that Nvidia’s H100 GPUs can be used for both training and inferencing. It is not as if the H100s can only be used for training, and that data center customers have to look for alternative chips for the inferencing phase. In fact, on the Q4 FY 2024 Nvidia earnings call, CFO Colette Kress and CEO Jensen Huang shared that:

Colette Kress

We estimate in the past year approximately 40% of data center revenue was for AI inference.

…

Jensen Huang

The estimate is probably understated. And — but we estimated it.

Consider the fact that GPU-specialized cloud service provider CoreWeave, which is reportedly the 7th-largest customer of Nvidia’s AI chips, projected last year that “each H100 would retain their value for six years and would only tick down 50% over the subsequent two years.”

That said, different customers may depreciate their assets over varying time periods.

Generally, high-end GPUs like the H100 might experience faster depreciation in the first few years as newer generations with improved performance are released. This initial depreciation might slow down later in their lifespan as they settle into a niche usage within data centers that don’t require cutting-edge performance.
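The pattern described above, steeper value loss early and slower loss later, resembles an accelerated depreciation schedule. The sketch below compares straight-line depreciation with double-declining-balance depreciation for a single GPU, assuming a hypothetical $40,000 cost (the reported H100 price cited later in this article), a six-year life and zero salvage value. It is an accounting illustration only, not CoreWeave’s or any customer’s actual policy, and the function names are hypothetical.

```python
def straight_line(cost: float, years: int) -> list[float]:
    """Year-end book values when the cost is written off evenly each year."""
    return [cost * (years - y - 1) / years for y in range(years)]


def double_declining(cost: float, years: int) -> list[float]:
    """Year-end book values under double-declining-balance depreciation.

    Each year charges 2/years of the remaining book value, switching to an even
    write-off of what is left once that gives the larger charge (a common convention).
    """
    rate = 2 / years
    book, values = cost, []
    for y in range(years):
        remaining_years = years - y
        charge = max(book * rate, book / remaining_years)
        book = max(book - charge, 0.0)
        values.append(book)
    return values


COST, LIFE = 40_000.0, 6  # hypothetical inputs
sl_values = straight_line(COST, LIFE)
ddb_values = double_declining(COST, LIFE)
for year, (sl, ddb) in enumerate(zip(sl_values, ddb_values), start=1):
    print(f"year {year}: straight-line ${sl:>9,.0f} | double-declining ${ddb:>9,.0f}")
```

Under the accelerated schedule a third of the value is written off in year one, while later years see smaller absolute declines, which matches the “faster early, slower later” shape described above.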

The point is, customers could continue using these chips for inferencing once they are done with training the AI models.

That being said, GPUs are often part of larger server systems, and depreciation rates are more likely to be calculated for entire systems rather than individual components like the H100.

In fact, on the past several quarterly earnings calls, Nvidia has repeatedly highlighted that the strong revenue growth of its data center segment was driven by rising sales of its HGX systems, which are server systems that combine “four or eight AI GPUs (such as A100s or H100s) together using Nvidia’s networking solutions (Infiniband) and NVLink technology” and also include optimized software stacks, as explained in the previous article discussing Nvidia’s data center growth strategy.

And again, these HGX systems are designed to be used for both training and inferencing.

Now, we don’t know how long these data center customers expect to use these HGX systems. What is important to note, though, is the software layer of these server systems.

HGX systems come with an optimized software environment to run deep learning frameworks like TensorFlow and PyTorch. Note that these frameworks are not included within the HGX servers, but need to be installed separately.

Nonetheless, these deep learning frameworks like TensorFlow and PyTorch offer optimized libraries for both training and inference on H100s and Nvidia HGX systems. These libraries ensure smooth transitions between the training and inferencing stages.

If customers indeed decide to leverage these software libraries that strive to facilitate seamless transitions from training to inferencing on the same chips and servers, it would undermine the bearish argument that the data center customers will purchase massive numbers of new chips from alternative providers to run their inferencing workloads.

In fact, this is a classic strategy used by tech companies to leverage the stickiness of software services to keep customers entangled in their ecosystem. And it’s kind of a no-brainer. Did the bears really think Nvidia was going to leave the door open for rivals to swoop into the market amid the shift from training to inferencing?

You can bet your bottom dollar that Nvidia will have planted its seeds well beforehand to ensure customers continue using its hardware and software services as they move on to powering their inferencing workloads.

And the software advantage does not stop there, as we haven’t yet touched upon the power of the CUDA software package.

CUDA is a programming platform and development toolkit that provides the foundation for running computing tasks on Nvidia GPUs. It offers tools and libraries like cuDNN to accelerate deep learning frameworks like TensorFlow and PyTorch, which are then used to build and deploy AI inference applications.

Third-party developers build AI inference applications that are compatible with Nvidia’s GPUs, selling them as a service to Nvidia GPU and CUDA users, to help improve the ease and speed of inferencing large, complex AI models.

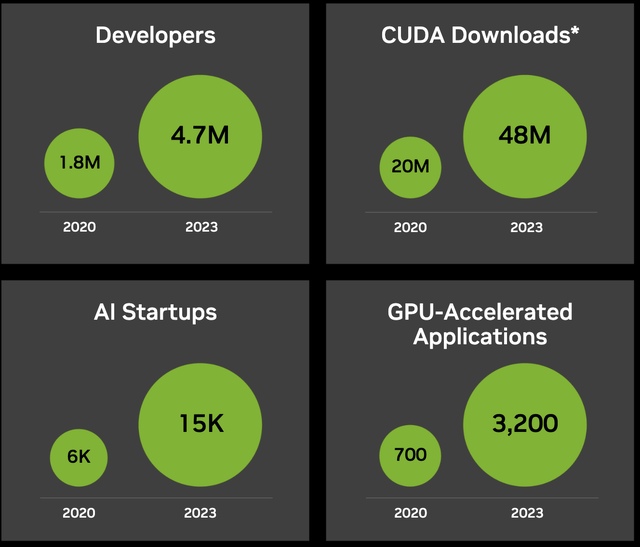

During an investor presentation in February 2024, Nvidia disclosed that cumulative CUDA downloads had reached 48 million by the end of 2023.

Nvidia’s massive installed base of several hundred million GPUs and 48 million cumulative CUDA downloads offer these third-party developers an extensive reach to customers that they cannot find on competitors’ platforms.

As a result, more and more developers become incentivized to produce AI inference applications specifically for Nvidia’s GPUs. And the growing number of inference applications on the Nvidia platform in turn attracts even more customers to use Nvidia’s hardware and software solutions for their inferencing workloads. This again attracts even more developers as the accessibility to the growing customer base enables them to generate more income through the Nvidia ecosystem.

As Nvidia has revealed in the statistics cited above, the number of developers on its platform has surged to 4.7 million, and the number of GPU-accelerated applications (not just inferencing) has grown to 3,200.

The point is, this virtuous network effect helps Nvidia sustain its market dominance as the AI industry shifts from training to inferencing.

So while bears argue that customers could buy cheaper alternatives during the inferencing phase, Nvidia’s savvy software strategy could instead induce customers to upgrade to Nvidia’s next-generation H200 or B100 chips, enabling the tech giant to generate recurring data center revenue via regular upgrade cycles, much like Apple’s strategy with the iPhone.

Now this is not to say that data center customers may not purchase chips from alternative providers at all amid the transition towards the inferencing phase of this revolution, as they could indeed strive to diversify away from Nvidia to a certain extent to avoid becoming over-dependent on a single supplier.

In fact, Lisa Su, CEO of chief rival Advanced Micro Devices (AMD), shared on AMD’s Q4 2023 earnings call how its ROCm software (which competes against Nvidia’s CUDA) was helping attract more AI workloads onto its own AI chips (MI300), and AMD even raised its 2024 data center GPU sales forecast from $2 billion to $3.5 billion:

The additional functionality and optimizations of ROCm 6 and the growing volume of contributions from the Open Source AI Software community are enabling multiple large hyperscale and enterprise customers to rapidly bring up their most advanced large language models on AMD Instinct accelerators.

…

For example, we are very pleased to see how quickly Microsoft was able to bring up GPT-4 on MI300X in their production environment and rollout Azure private previews of new MI300 instances aligned with the MI300X launch.

Furthermore, AI chip startups like SambaNova and Groq have also seen surging demand for their chips to be used for inferencing workloads.

And Intel CEO Pat Gelsinger had shared on the Q4 2023 Intel earnings call that:

“Our accelerator pipeline for 2024 grew double digits sequentially in Q4 and is now well above $2 billion and growing.”

So customers are certainly diversifying to non-Nvidia chips too.

Risks to bullish thesis on Nvidia stock

Customers producing their own AI chips: One of the bears’ favorite arguments is that while demand for Nvidia’s AI GPUs may be soaring now, the fact that its biggest customers are all increasingly designing and using their own chips poses a significant threat to Nvidia’s future sales prospects.

Inevitably, future sales growth from these data center customers will slow as those tech behemoths strive to reduce their reliance on Nvidia. While cloud service providers like Microsoft Azure and Google Cloud will indeed try to encourage their customers to shift their workloads to instances powered by their own chips, Nexus still believes that Nvidia’s chips and data center solutions will maintain a strong presence in these data centers for the foreseeable future, thanks to the brand power and extensive software ecosystem Nvidia has been able to build around its hardware, making it difficult for data center customers to cut ties with Nvidia completely.

Furthermore, it was recently revealed that Nvidia is pursuing a new business venture in which it will offer custom chip design services to data center customers, similar to those Broadcom and Marvell Technology currently offer. In other words, companies that want to design their own chips will be able to work with Nvidia’s chip designers and incorporate Nvidia’s intellectual property into their custom chips.

As per a Reuters report:

“Nvidia officials have met with representatives from Amazon, Meta, Microsoft, Google and OpenAI to discuss making custom chips for them

…

According to estimates from research firm 650 Group’s Alan Weckel, the data center custom chip market will grow to as much as $10 billion this year, and double that in 2025.”

Hence, this move by Nvidia offers a great hedge against the risk of data center customers increasingly replacing Nvidia’s off-the-shelf GPUs with their own chips.

Cost-efficient model training and inferencing: Training and inferencing AI models are certainly expensive, time-consuming and energy-demanding computing tasks, requiring an enormous number of accelerated GPUs.

In an effort to achieve cost-efficiencies, customers are likely to find new ways of designing these AI models, and new techniques for training and inferencing such models, so that these computing tasks can be completed using fewer GPUs.

In fact, in a previous article discussing Google Cloud’s savvy strategy for driving cost efficiencies, we discussed a technique called “compute-optimal scaling” that the tech giant had used when building its PaLM 2 model. This approach essentially allowed Google to build its Large Language Model [LLM] using fewer parameters, allowing for faster inferencing, and requiring less compute power.
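The trade-off behind compute-optimal scaling can be sketched with two rules of thumb that are widely quoted in the scaling-law literature: training compute is roughly 6 × parameters × training tokens, and a compute-optimal model uses roughly 20 training tokens per parameter. Both are assumptions here, not figures disclosed for PaLM 2, and the budget and helper names are hypothetical. The sketch splits a fixed training budget between model size and data, then compares serving cost (about 2 FLOPs per parameter per generated token) against a larger model trained on the same budget.

```python
import math

# Rules of thumb (assumptions, not Google or Nvidia figures):
# training compute C ~ 6 * N * D, and a compute-optimal model uses D ~ 20 * N.
FLOPS_PER_PARAM_TOKEN = 6
TOKENS_PER_PARAM = 20


def compute_optimal_split(budget_flops: float) -> tuple[float, float]:
    """Return (parameters N, training tokens D) with D = 20 * N and 6 * N * D = budget."""
    params = math.sqrt(budget_flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    return params, TOKENS_PER_PARAM * params


def inference_flops_per_token(params: float) -> float:
    """Rule of thumb: one forward pass costs about 2 FLOPs per parameter per token."""
    return 2 * params


budget = 1e24  # hypothetical training budget in FLOPs
n_opt, d_opt = compute_optimal_split(budget)

# A hypothetical model 3x larger, trained on the same budget (so on 3x fewer tokens).
n_big = 3 * n_opt
d_big = budget / (FLOPS_PER_PARAM_TOKEN * n_big)

print(f"compute-optimal: {n_opt / 1e9:.0f}B params, {d_opt / 1e12:.2f}T tokens")
print(f"3x larger:       {n_big / 1e9:.0f}B params, {d_big / 1e12:.2f}T tokens")
ratio = inference_flops_per_token(n_big) / inference_flops_per_token(n_opt)
print(f"serving cost per token, larger vs compute-optimal: {ratio:.1f}x")
```

Under these assumptions, the compute-optimal model is smaller for the same training budget, so every query it serves needs proportionally fewer GPU cycles, which is the cost-efficiency risk for Nvidia described above.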

It is prudent to assume that Nvidia’s other customers like OpenAI and AWS will also strive for more cost-efficient model-building, training and inferencing techniques that reduce the number of GPUs required from Nvidia going forward.

Nvidia’s Financial Performance & Valuation

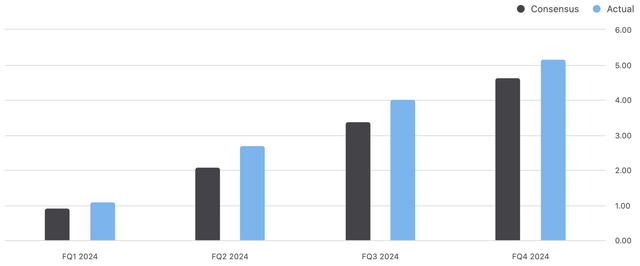

For every single quarter in FY 2024, Nvidia beat Wall Street’s expectations for both the reported quarter’s results and the following quarter’s guidance.

And Q4 FY 2024 was no different, with total revenue growing 265% year-over-year, and the company guiding to $24 billion in revenue for Q1 FY 2025, which would imply a year-over-year growth rate of 234%.

Nexus, data compiled from company filings
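The growth figures above can be sanity-checked with a few lines of arithmetic. This sketch backs out the prior-year quarter implied by the $24 billion guide and the 234% growth rate; the helper names are illustrative, and because the inputs are the rounded figures quoted in the text, the results are approximate.

```python
def yoy_growth(current: float, prior: float) -> float:
    """Year-over-year growth as a fraction (2.65 means +265%)."""
    return current / prior - 1


def implied_prior(current: float, growth: float) -> float:
    """Prior-year value implied by a current value and its growth rate."""
    return current / (1 + growth)


Q1_FY25_GUIDE = 24.0  # $B, company guidance cited above
prior_q1 = implied_prior(Q1_FY25_GUIDE, 2.34)
print(f"implied Q1 FY 2024 revenue: ${prior_q1:.2f}B")
print(f"+265% growth = {1 + 2.65:.2f}x the prior-year quarter")
```

Note that a growth rate of 265% means revenue is 3.65 times the prior-year level, not 2.65 times.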

Nvidia’s market-leading GPU for AI computing has enabled the company to command strong pricing power, with its latest H100 GPU reportedly selling for around $40,000 apiece.

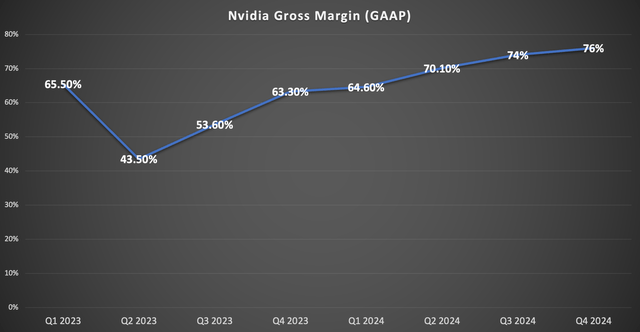

Strong pricing power for Nvidia’s market-leading GPUs has translated into incredible profit margins, with gross margins expanding to 76% over the past year.

Nexus, data compiled from company filings

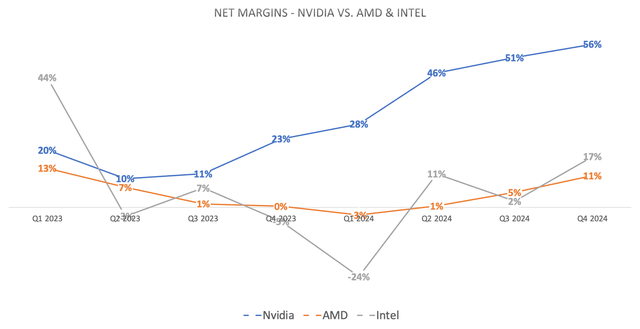

Its net profit margins also far exceed those of AMD and Intel.

Nexus, data compiled from company filings

Building on its AI chip leadership, the company’s high profitability could be sustained, if not further expanded, by its growing software endeavors.

On the Q4 FY 2024 Nvidia earnings call, CFO Colette Kress shared that:

“We also made great progress with our software and services offerings, which reached an annualized revenue run rate of $1 billion in Q4.”

Nvidia generated $60.9 billion in total revenue in FY 2024. Using the $1 billion annualized run rate as a rough proxy, software accounted for only about 1.64% of company-wide revenue last year.

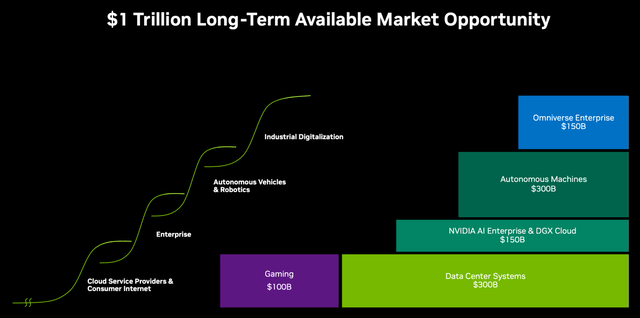

Following the release of the company’s latest earnings, Nvidia also published an investor presentation last month, in which it revealed that the corporation estimates its total long-term market opportunity to be $1 trillion.

More specifically, Nvidia has projected that its long-term market opportunity for ‘Nvidia AI Enterprise software & DGX Cloud’ is $150 billion. Aside from the software opportunity in AI, Nvidia is also pursuing the software opportunity in its Professional Visualization segment, with the long-term ‘Omniverse’ software opportunity also approximated to be $150 billion.

This means that $300 billion of the $1 trillion total market opportunity, or 30%, is software. If software revenue grows toward a similar 30% share of company-wide revenue over time, it should further expand Nvidia’s profit margins, given that software tends to be a higher-margin business.
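The margin argument above is a revenue-mix effect, which a weighted average makes explicit. The sketch uses the article’s own figures for the software share today ($1 billion run rate versus $60.9 billion in revenue) and in the long run (the $300 billion software slice of the $1 trillion opportunity), but the segment gross margins are HYPOTHETICAL placeholders chosen only for illustration, not Nvidia disclosures. It also assumes revenue mix eventually mirrors the market-opportunity mix, which is not guaranteed.

```python
def share(part: float, total: float) -> float:
    """Fraction of a total accounted for by one part."""
    return part / total


def blended_margin(software_share: float, software_margin: float, hardware_margin: float) -> float:
    """Revenue-weighted gross margin for a two-segment business."""
    return software_share * software_margin + (1 - software_share) * hardware_margin


# Figures cited in the article, in billions of US dollars.
software_today = share(1.0, 60.9)           # Q4 software run rate vs FY 2024 revenue
software_long_run = share(150 + 150, 1000)  # software slice of the $1 trillion opportunity

# HYPOTHETICAL segment margins, for illustration only (not company figures).
SOFTWARE_MARGIN, HARDWARE_MARGIN = 0.90, 0.72

now = blended_margin(software_today, SOFTWARE_MARGIN, HARDWARE_MARGIN)
later = blended_margin(software_long_run, SOFTWARE_MARGIN, HARDWARE_MARGIN)
print(f"software share of revenue: {software_today:.2%} -> {software_long_run:.0%}")
print(f"blended gross margin:      {now:.1%} -> {later:.1%}")
```

Whatever margins are plugged in, the blended figure rises as long as software carries the higher margin; the size of the uplift depends entirely on those assumed inputs.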

CEO Jensen Huang also assured investors on the Q4 FY 2024 Nvidia earnings call that demand will remain strong through calendar year 2025:

“We guide one quarter at a time. But fundamentally, the conditions are excellent for continued growth calendar ’24, to calendar ’25 and beyond.”

In other words, data center customers are expected to continue spending heavily on transforming their infrastructure for the foreseeable future, to optimally capitalize on the AI revolution.

This was an important remark as strong future guidance is essential for the stock to continue rallying higher.

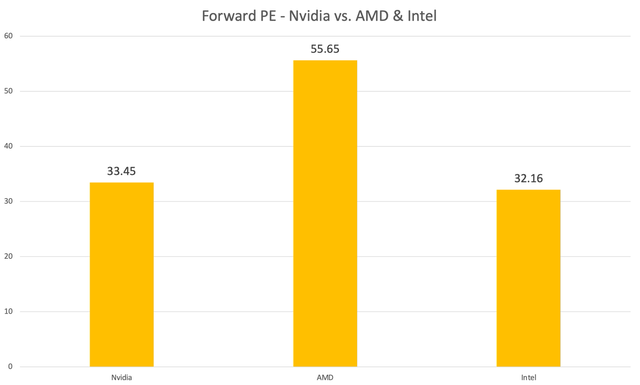

NVDA stock currently has a forward P/E (non-GAAP) of around 33x, which is well below its 5-year average of over 46x. Now for a company that is best positioned to capitalize on the AI revolution, 33x forward earnings is an incredibly attractive valuation, particularly in comparison to its competitors.

Nexus, data compiled from company filings

On a forward P/E basis, Nvidia stock is much cheaper than AMD, and on par with Intel’s valuation.

However, the forward P/E metric does not take into account a company’s projected earnings growth rate. Two companies could have the same forward P/E multiple, but if Company A’s earnings are expected to grow faster than Company B’s, then Company A will be seen as the more attractive investment.

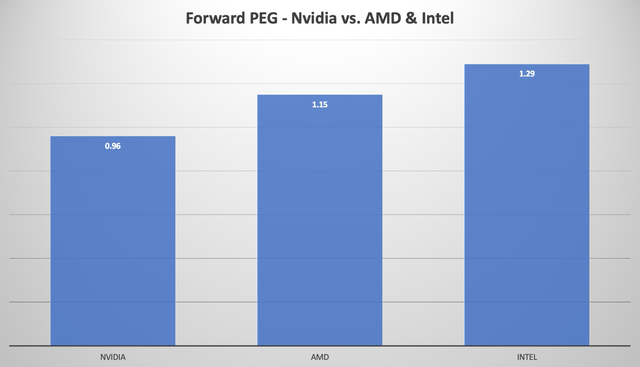

This is where the forward Price/Earnings-to-Growth (PEG) ratio comes in, which is essentially a stock’s forward P/E ratio divided by its estimated earnings growth rate (expressed as a whole-number percentage) over a certain period of time. It offers more context as to whether a stock’s forward P/E is worth paying, based on its future earnings prospects.

A forward PEG of 1 would imply that a stock is trading at fair value. That said, popular stocks rarely trade at a forward PEG of 1, as the markets tend to assign a premium valuation to such securities based on a variety of factors, such as the quality and track record of the executive team, product strength and differentiation, market share positioning and competitive moat.
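A minimal sketch of the PEG calculation, using hypothetical Company A and Company B that share the same forward P/E but have different expected growth. Growth is entered as a whole-number percentage (40 for 40%), and the labels follow the PEG-of-1 “fair value” convention used in this article; the function names are illustrative.

```python
def forward_peg(forward_pe: float, growth_pct: float) -> float:
    """Forward P/E divided by expected EPS growth in percent (e.g. 35 for 35%)."""
    if growth_pct <= 0:
        raise ValueError("PEG is not meaningful for zero or negative expected growth")
    return forward_pe / growth_pct


def describe(peg: float) -> str:
    """Label relative to the PEG-of-1 'fair value' convention."""
    if peg < 1:
        return "below fair value"
    if peg > 1:
        return "above fair value"
    return "at fair value"


# Hypothetical companies: same forward P/E of 30x, different growth outlooks.
peg_a = forward_peg(30, 40)  # Company A expects 40% EPS growth
peg_b = forward_peg(30, 15)  # Company B expects 15% EPS growth
print(f"Company A: PEG {peg_a:.2f} ({describe(peg_a)})")
print(f"Company B: PEG {peg_b:.2f} ({describe(peg_b)})")
```

The guard against zero or negative growth reflects a known limitation of the metric: PEG has no sensible interpretation when earnings are expected to shrink.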

Historically, Nvidia has commanded a forward PEG of 2.25 based on a 5-year average. Forward PEG ratios above 2 are typical for highly sought-after technology stocks.

But here’s the incredible part: despite all the excitement around AI and Nvidia’s unique positioning to capitalize on the AI revolution thanks to its leadership in accelerated GPUs, the stock trades at a forward PEG of only 0.96, slightly below fair value, while AMD and Intel both trade above fair value.

Nexus, data compiled from company filings

Hence, even after its parabolic rally of around 234% over the past year, Nvidia remains the more compelling buy over its rivals.

Now as mentioned earlier, while a forward PEG of 1 is usually considered a “fair value” for a stock, popular technology stocks rarely tend to trade at such a level, as the markets tend to assign a certain premium to such securities based on various factors.

Nvidia already dominates the AI chip market. And as we discussed earlier, Nvidia’s software strategies should enable the tech giant to continue dominating the AI industry amid the shift from training to inferencing. Additionally, the semiconductor giant is well positioned to sustain its high profit margins, especially if software grows toward 30% of the company’s revenue over time, in line with the long-term market opportunity noted earlier.

Therefore, Nvidia stock deserves to trade at a premium valuation relative to fair value, and should trade closer to its 5-year average forward PEG of 2.25.

Now, as mentioned earlier, the forward PEG is simply the stock’s forward P/E multiple divided by the expected earnings growth rate, with the formula being:

Forward PEG = Forward P/E ratio / Expected EPS growth rate

So based on the current forward PEG ratio of 0.96 and forward P/E of 33.45, it implies an expected earnings growth rate of 34.84%, calculated as:

Forward P/E ratio / Forward PEG = Expected EPS growth rate

33.45 / 0.96 = 34.84%

Now, for the stock to trade at a forward PEG of 2.25, Nvidia would need to command a forward P/E of 78.4x, calculated as:

Expected EPS growth rate x Forward PEG = Forward P/E ratio

34.84 x 2.25 = 78.4

NVDA currently trades at a forward P/E of 33.45x (based on the closing price of $822.79), and for the stock to trade in line with its 5-year average forward PEG of 2.25, it should be trading at a forward P/E of 78.4x. This would be more than double its current valuation and, holding the forward earnings estimate constant, implies a stock price of around $1,928 ($822.79 x 78.4 / 33.45).
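The valuation chain above can be reproduced end to end. This sketch backs out the implied growth rate from the cited P/E and PEG, converts a target PEG into a target P/E, and assumes the forward EPS estimate stays fixed so that price scales with the multiple. The 2.25 target is the 5-year average cited above; the 1.0 and 1.5 targets are added purely as an illustrative sensitivity check, and the helper names are hypothetical.

```python
def implied_growth_pct(forward_pe: float, forward_peg: float) -> float:
    """Expected EPS growth (%) backed out of P/E and PEG: growth = P/E / PEG."""
    return forward_pe / forward_peg


def target_forward_pe(growth_pct: float, target_peg: float) -> float:
    """Forward P/E consistent with a target PEG: P/E = growth x PEG."""
    return growth_pct * target_peg


def implied_price(price: float, current_pe: float, new_pe: float) -> float:
    """Price if the forward EPS estimate stays fixed and only the multiple changes."""
    forward_eps = price / current_pe
    return forward_eps * new_pe


PRICE, FORWARD_PE, FORWARD_PEG = 822.79, 33.45, 0.96  # figures cited above

growth = implied_growth_pct(FORWARD_PE, FORWARD_PEG)
for peg in (1.0, 1.5, 2.25):  # 2.25 = 5-year average; 1.0 and 1.5 are illustrative
    pe = target_forward_pe(growth, peg)
    price = implied_price(PRICE, FORWARD_PE, pe)
    print(f"target PEG {peg:.2f}: forward P/E {pe:.1f}x, implied price ${price:,.0f}")
```

Because forward EPS is held constant, the implied price simply scales with the ratio of target PEG to current PEG, so the result is only as reliable as the consensus growth estimate baked into the 0.96 figure.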

Therefore, Nvidia stock remains undervalued based on its historical valuation trends and AI-driven growth potential, making it a compelling “buy” for investors.

Analyst’s Disclosure: I/we have a beneficial long position in the shares of NVDA either through stock ownership, options, or other derivatives. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha’s Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.