Summary:

- Micron is set to announce its Q2 2024 earnings results on March 20 with focus areas on memory market recovery and increased demand for HBM in AI applications.

- Micron aims to allocate 30% of sales to capex and 10% to R&D, following industry peers’ practices.

- Micron’s HBM3x memory and its volume production are expected to contribute to revenue growth in 2024 and 2025.

- I estimate Micron’s share of the HBMx market at 2.9% in 2024, growing to about 15% in 2025.

Roman Starchenko

Micron Technology, Inc. (NASDAQ:MU) will hold its fiscal Q2 2024 earnings call on March 20, 2024. Key areas of focus will be the recovery in the memory market and rising demand for High Bandwidth Memory (“HBM”), particularly for artificial intelligence (“AI”) applications.

MU’s guidance for Q2 revenue, given in its Q1 call, is between $5.1B and $5.5B, with the mid-point above the $4.99B estimate. On an adjusted basis, it expects to lose between $0.21 and $0.35 per share.
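As a quick check on the guidance figures above, the following sketch computes the midpoints of the revenue and adjusted loss ranges and the gap between the revenue midpoint and the $4.99B estimate. The variable names are my own, and the percentage gap is an illustration rather than a figure from Micron’s guidance.

```python
# Guidance figures as quoted above (revenue in $B, adjusted loss per share in $).
revenue_low, revenue_high = 5.1, 5.5
loss_low, loss_high = 0.21, 0.35
revenue_estimate = 4.99  # the "$4.99B estimate" cited above

# Midpoints of the guided ranges.
revenue_mid = (revenue_low + revenue_high) / 2
loss_mid = (loss_low + loss_high) / 2

# Percentage by which the revenue midpoint exceeds the estimate.
gap_pct = (revenue_mid / revenue_estimate - 1) * 100

print(f"Revenue midpoint: ${revenue_mid:.2f}B")
print(f"Adjusted loss midpoint: ${loss_mid:.2f} per share")
print(f"Revenue midpoint vs. estimate: {gap_pct:+.1f}%")
```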

Several key trends are expected to influence Micron’s performance this quarter.

Memory Pricing and Capex

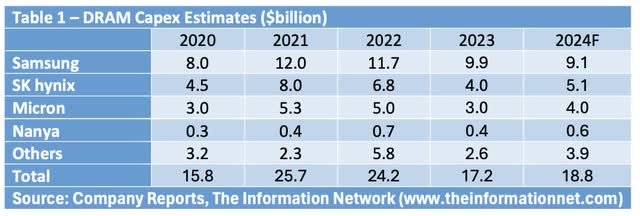

Memory Pricing Trends: The DRAM industry, including leaders such as Micron and Samsung Electronics Co., Ltd. (OTCPK:SSNLF), has strategically pulled back on investment in memory production capacity (capex), as shown in Table 1.

Micron aims to allocate approximately 30% of its sales to capital expenditures and around 10% of its sales to research and development. These allocations are in line with the practices of industry peers.

Although a robust demand recovery is anticipated in the second half of 2024, SK hynix is not expected to raise capex aggressively this year. Instead, the company appears focused on technology migration rather than on expanding production capacity. SK hynix has outlined a capex plan of W13 trillion for 2024, a nearly 50% year-over-year increase but only 70% of the levels seen in 2022-23. Most of this spending is likely to occur in the second half of 2024.

For Samsung, memory capex is primarily focused on enhancing infrastructure at the Pyeongtaek facility in Korea and expanding production capacity for HBM, DDR5, and other advanced nodes. Capex will likely drop to about W49 trillion in 2024.

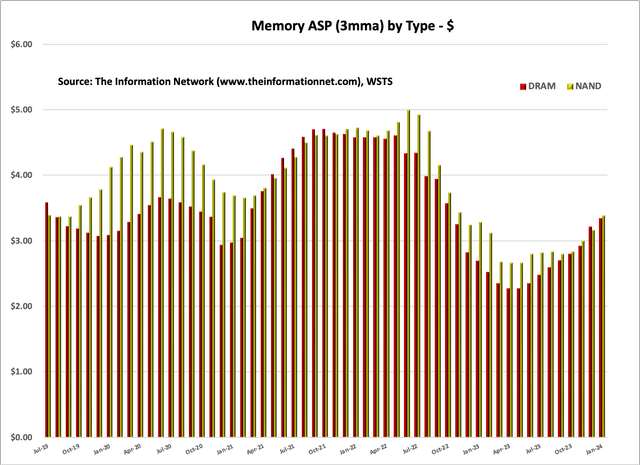

This controlled approach to supply has benefited memory pricing. In addition, replenishment demand for memory from smartphones and PCs is growing. Chart 1 shows the recovery of DRAM ASPs (average selling prices).

Chart 1

HBM Memory

Generative AI Impact: The surge in generative AI tools such as ChatGPT is contributing significantly to heightened demand for memory. These AI applications require substantial DRAM and storage resources to operate effectively.

Micron was late to the HBM sector, which is crucial for AI model training and inferencing, as I reported in a June 23, 2023 Seeking Alpha article entitled Micron: Late To The Game AI Strategy And Politics Do Not Make For A Strong Company.

Nevertheless, it has made significant strides with the announcement of its advanced second-generation HBM3 memory, which I discussed in a January 1, 2024, Seeking Alpha article entitled Micron: Korean Memory Competitors Are Just Too Formidable, and noted:

“Micron positions its HBM3 Gen2 as a significant advancement, boasting a 50% improvement over base HBM3 chips from competitors Samsung Electronics and SK hynix. Micron’s offering also provides 50% more memory density than the HBM3 Gen2, also known as HBM3E, introduced by rivals. The company anticipates a 15% performance boost compared to the 8 GT/second target set for SK hynix’s HBM3E memory.”

In a February 26, 2024 press release, Micron announced it has begun volume production of its HBM3E (High Bandwidth Memory 3E) solution. Micron’s 24GB 8H HBM3E will be part of NVIDIA Corporation (NVDA) H200 Tensor Core GPUs, which will begin shipping in the second calendar quarter of 2024.

Micron CEO Sanjay Mehrotra noted in the company’s fiscal Q1 2024 earnings call:

“We are on track to begin our HBM3E volume production ramp in early calendar 2024 and to generate several hundred million dollars of HBM revenue in fiscal 2024. We expect continued HBM revenue growth in 2025, and we continue to expect that our HBM market share will match our overall DRAM bit share sometime in calendar 2025.”

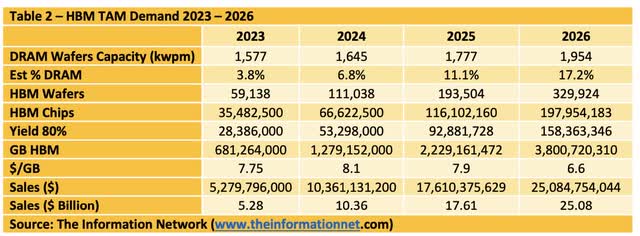

What share of the market is “several hundred million dollars”? Micron’s fiscal 2024 is offset from the calendar year by one quarter, but for simplicity I treat the two as equivalent. Using $10.36 billion for total HBMx revenue in CY 2024 and $300 million as “several hundred million dollars,” I estimate that Micron will have a 2.9% share of the HBMx market in 2024, as per Table 2.
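The 2.9% figure follows directly from the two inputs above. The short sketch below reproduces it and shows how the share would move under other readings of “several hundred million dollars.” The helper name `hbm_share_pct` and the $500 million and $700 million alternatives are hypothetical values of mine for illustration, not estimates from Micron.

```python
def hbm_share_pct(company_revenue_b: float, market_revenue_b: float) -> float:
    """Return a company's share of the HBMx market, in percent."""
    return company_revenue_b / market_revenue_b * 100

hbm_market_2024_b = 10.36  # total HBMx revenue for CY 2024, $B (figure used above)
micron_hbm_2024_b = 0.30   # $300M, the reading of "several hundred million" used above

base_share = hbm_share_pct(micron_hbm_2024_b, hbm_market_2024_b)
print(f"Base case: {base_share:.1f}% share")

# Hypothetical alternatives, shown only to illustrate sensitivity.
for revenue_b in (0.50, 0.70):
    share = hbm_share_pct(revenue_b, hbm_market_2024_b)
    print(f"${revenue_b * 1000:.0f}M of HBM revenue -> {share:.1f}% share")
```

Even under the more generous readings, the 2024 share stays in the single digits.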

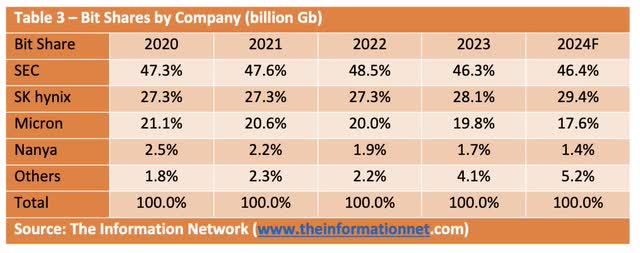

In the quote above, Mehrotra also expects Micron’s HBMx share to match its overall DRAM bit share sometime in CY 2025.

According to Table 3 above, that means its share should be in the 15% range if I extrapolate to 2025. Multiplying that 15% share by total HBMx revenue of $17.61 billion gives about $2.6 billion in HBMx revenue for Micron.
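The 2025 projection is a single multiplication, sketched here alongside the implied growth over the $300 million assumed for 2024. All inputs come from the text above; the variable names and the growth multiple are my own additions for illustration.

```python
hbm_market_2025_b = 17.61   # total HBMx revenue for 2025, $B (figure used above)
micron_share_2025 = 0.15    # HBMx share assumed to match DRAM bit share (~15%)
micron_hbm_2024_b = 0.30    # $300M assumed for 2024 earlier in the article

# Implied Micron HBMx revenue in 2025 and the multiple over the 2024 assumption.
micron_hbm_2025_b = micron_share_2025 * hbm_market_2025_b
growth_multiple = micron_hbm_2025_b / micron_hbm_2024_b

print(f"Implied 2025 HBMx revenue: ${micron_hbm_2025_b:.1f}B")
print(f"Multiple of assumed 2024 HBMx revenue: {growth_multiple:.1f}x")
```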

Table 4 summarizes this analysis.

Competitive Analysis

Micron

Micron is benefiting from the expected recovery in traditional memory demand and the rapidly increasing demand for HBM. This positions the company well in both conventional and high-performance memory markets.

SK hynix

Demand for HBM from Nvidia, a key customer of SK hynix, is projected to remain robust. Initially, Nvidia’s H100 is expected to spur the demand for HBM3E, which will be further propelled by the introduction of the H200 in the second quarter of 2024 and the B100 in the second half of the year. Specifically, the H100 GPU is anticipated to utilize 80GB of 12-layer HBM3 (24GB), while the H200 and B100 GPUs are expected to employ 144GB (24GBx6) and 288GB (36GBx8) of 8-layer HBM3E, respectively.

To preserve its market position, SK hynix plans to concentrate on the 8-layer HBM3E segment, which is expected to be prevalent this year. Although Micron and Samsung Electronics are projected to strengthen their positions in HBM3E by 2025, the overall HBM3E market is expected to grow faster as AMD, hyperscalers, and AI startups gradually transition from HBM2E and HBM3 to HBM3E. This shift underscores how the major players are moving to capture growing demand for advanced memory in high-performance computing and AI.

Samsung

Samsung Electronics has taken a significant step ahead in the high-bandwidth memory market by initiating the supply of 12-layer HBM3E (36GB) product samples to its customers, positioning itself several months in advance of its competitors. This move comes even as Samsung continues to finalize qualification testing for its 8-layer HBM3 product. The completion of these tests is anticipated in the second quarter of 2024, with the company expecting to start generating revenue from this product in the second half of the year.

Looking ahead, the qualification for the 12-layer HBM3E product is likely to be wrapped up in the third quarter of 2024. However, significant revenue contributions from this advanced memory solution are projected to commence in 2025. Samsung’s aggressive timeline in rolling out its 12-layer HBM3E samples underscores its commitment to maintaining a leading edge in the competitive memory market, particularly in areas requiring high memory bandwidth such as artificial intelligence, high-performance computing, and advanced graphics processing. This strategy not only demonstrates Samsung’s technological prowess but also its strategic foresight in anticipating market needs and positioning itself to meet these demands ahead of others in the industry.

While the adoption of 12-layer HBM3E technology in AI accelerators is not yet confirmed, there is a strong expectation for robust customer demand for such products in the future. One significant potential application is in Nvidia’s X100 series, scheduled for release in 2025, which is anticipated to incorporate 12-layer HBM3E memory. This would offer a substantial maximum capacity ranging from 216GB (36GBx6) to 288GB (36GBx8) per GPU, catering to the increasing memory requirements of AI workloads.
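The per-GPU capacities quoted in this article are simply the capacity of one HBM stack multiplied by the number of stacks. The sketch below checks that arithmetic for the configurations mentioned in the text. The function name and configuration labels are mine, and the stack counts are taken from the article rather than from vendor specifications.

```python
def gpu_hbm_capacity_gb(stack_capacity_gb: int, stack_count: int) -> int:
    """Total HBM capacity per GPU: per-stack capacity times number of stacks."""
    return stack_capacity_gb * stack_count

# (label, GB per stack, stacks per GPU), as quoted in the article.
configurations = [
    ("24GB x 6", 24, 6),
    ("36GB x 6", 36, 6),
    ("36GB x 8", 36, 8),
]

capacities = {
    label: gpu_hbm_capacity_gb(per_stack, count)
    for label, per_stack, count in configurations
}

for label, total_gb in capacities.items():
    print(f"{label}: {total_gb}GB per GPU")
```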

Moreover, starting from 2025, the demand for 8-layer HBM3E is expected to extend beyond established players like Nvidia to include AMD, hyperscalers, and accelerator start-ups. This broadening demand base is likely to drive significant market expansion and increase market exposure for HBM3E technology. As AI applications continue to evolve and demand for higher-performance computing solutions grows, the adoption of advanced memory solutions like 12-layer HBM3E is expected to play a crucial role in meeting these escalating requirements.

Bottom Line

I continue to rate Micron a Hold. While the memory market is growing, I show in Table 3 that Micron’s bit growth share is eroding. Despite the strong growth prospects of HBMx, I project Micron’s 2024 share at just 2.9%. I give Micron the benefit of the doubt that its HBMx share will match its DRAM share, but investors must recognize that competitors are not standing still.

SK hynix has demonstrated 12-layer HBMx, and Samsung will do so in Q3. SK hynix already has a lock on Nvidia’s AI chips, and it is only because of limited capacity that Nvidia moved to Micron. That constraint should ease now that SK hynix’s Fab 4 is operational and a new HBMx plant is planned for the U.S. state of Indiana.

One only has to look at Table 1 above to see that Samsung and SK hynix will outspend Micron on DRAM.

Editor’s Note: This article discusses one or more securities that do not trade on a major U.S. exchange. Please be aware of the risks associated with these stocks.

Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, and no plans to initiate any such positions within the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

